
【TiDB Environment】

Test environment, v5.0.4

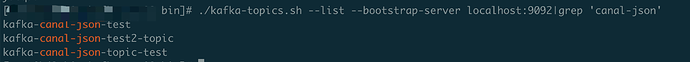

Architecture: TiDB → TiCDC → Kafka.

【Overview】 Scenario + problem summary

Because of some issues, we recreated the changefeed.

The new changefeed uses the same table filter as the old one.

【Backup and Data Migration Strategy】

【Background】 Operations performed

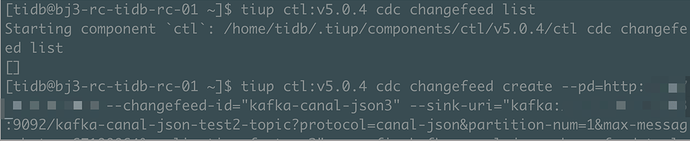

- Removed the old changefeed: tiup ctl cdc remove

- Created a new changefeed: changefeed-id=kafka-canal-json3

```
[tidb@ log]$ tiup ctl:v5.0.4 cdc changefeed list
Starting component `ctl`: /home/tidb/.tiup/components/ctl/v5.0.4/ctl cdc changefeed list
[
  {
    "id": "kafka-canal-json3",
    "summary": {
      "state": "normal",
      "tso": 432040937796927489,
      "checkpoint": "2022-03-24 15:03:04.052",
      "error": null
    }
  }
]
```
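The `tso` and `checkpoint` fields above encode the same instant: a TiDB TSO stores a physical Unix time in milliseconds shifted left by 18 bits, with an 18-bit logical counter in the low bits. A minimal Python sketch for checking this; the helper name `tso_to_datetime` is hypothetical, and the +08:00 offset is assumed from the log timestamps:

```python
from datetime import datetime, timedelta, timezone

LOGICAL_BITS = 18  # low 18 bits of a TSO hold the logical counter
CST = timezone(timedelta(hours=8))  # assumed: the cluster logs use +08:00
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def tso_to_datetime(tso: int):
    """Split a TSO into its physical wall-clock time and its logical counter."""
    physical_ms = tso >> LOGICAL_BITS
    logical = tso & ((1 << LOGICAL_BITS) - 1)
    # Integer milliseconds via timedelta avoids float rounding of the timestamp.
    when = (EPOCH + timedelta(milliseconds=physical_ms)).astimezone(CST)
    return when, logical


when, logical = tso_to_datetime(432040937796927489)
print(when.isoformat(timespec="milliseconds"), logical)
```

Running it prints `2022-03-24T15:03:04.052+08:00 1`, which agrees with the `checkpoint` string in the `changefeed list` output.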

- Simulated a DML operation in TiDB:

```
MySQL [sbtest]> insert into sbtest1 values(1,2,'c','c');
Query OK, 1 row affected (0.00 sec)
```

【Symptoms】 Application and database symptoms

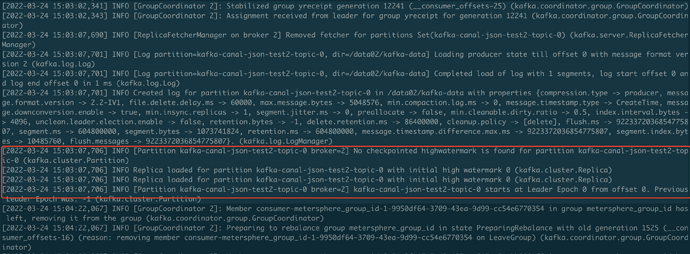

- The new changefeed did not receive any transaction messages.

```
"create-time": "2022-03-24T15:03:04.079313501+08:00",
"start-ts": 432040937796927489,
"target-ts": 0,
"admin-job-type": 0,
"sort-engine": "unified",
```

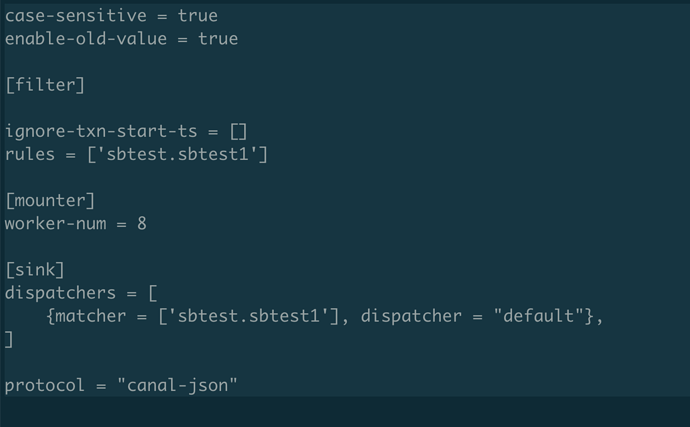

"filter": {

"rules": [

"sbtest.sbtest1"

],

"ignore-txn-start-ts": []

},

"mounter": {

"worker-num": 8

},

"sink": {

"dispatchers": [

{

"matcher": [

"sbtest.sbtest1"

],

"dispatcher": "default"

}

],

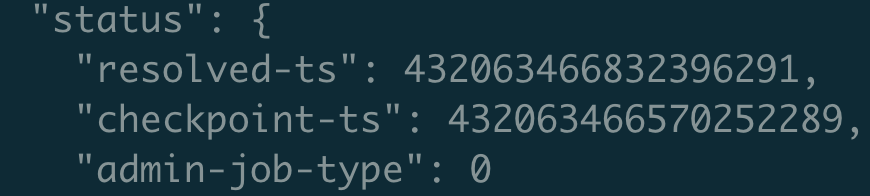

"status": {

"resolved-ts": 0,

"checkpoint-ts": 432040937796927489,

"admin-job-type": 0

【Problem】 Current issues

Question 1: The logs below show that TiCDC is sending requests, so why does the changefeed receive no messages?

[2022/03/24 15:03:19.988 +08:00] [INFO] [client.go:779] ["**start new request**"] [request="{\"header\":{\"cluster_id\":7009090993806118458,\"ticdc_version\":\"5.0.4\"},\"region_id\":98161,\"region_epoch\":{\"conf_ver\":5,\"version\":51},\"checkpoint_ts\":432040941912588289,\"start_key\":\"bURETEpvYkH/ZP9kSWR4TGn/c3T/AAAAAAD/AAAA9wAAAAD/AAAAbAAAAAD7\",\"end_key\":\"bURETEpvYkH/ZP9kSWR4TGn/c3T/AAAAAAD/AAAA9wAAAAD/AAAAbQAAAAD7\",\"request_id\":4344,\"Request\":null}"] [addr=localhost:20160]

[2022/03/24 15:03:20.233 +08:00] [INFO] [client.go:1258] ["region state entry will be replaced because received message of newer requestID"] [regionID=98161] [oldRequestID=4342] [requestID=4344] [addr=localhost:20160]

[2022/03/24 15:09:31.319 +08:00] [INFO] [client.go:959] ["EventFeed disconnected"] [regionID=98161] [requestID=4343] [span="[6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa, 6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa)"] [checkpoint=432041039194226689] [error="[CDC:ErrEventFeedEventError]not_leader:<region_id:98161 > "]

[2022/03/24 15:09:31.319 +08:00] [INFO] [client.go:959] ["EventFeed disconnected"] [regionID=98161] [requestID=4344] [span="[6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006c00000000fb, 6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006d00000000fb)"] [checkpoint=432041039194226689] [error="[CDC:ErrEventFeedEventError]not_leader:<region_id:98161 > "]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_range_lock.go:370] ["unlocked range"] [lockID=22] [regionID=98161] [startKey=6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006c00000000fb] [endKey=6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006d00000000fb] [checkpointTs=432041039194226689]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_cache.go:958] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=0] [regionID=98161]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_range_lock.go:370] ["unlocked range"] [lockID=21] [regionID=98161] [startKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa] [endKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa] [checkpointTs=432041039194226689]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_range_lock.go:218] ["range locked"] [lockID=22] [regionID=98161] [startKey=6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006c00000000fb] [endKey=6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006d00000000fb] [checkpointTs=432041039194226689]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_cache.go:958] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=0] [regionID=98161]

[2022/03/24 15:09:31.319 +08:00] [INFO] [region_range_lock.go:218] ["range locked"] [lockID=21] [regionID=98161] [startKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa] [endKey=6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006d0000000000fa] [checkpointTs=432041039194226689]

[2022/03/24 15:09:31.319 +08:00] [INFO] [client.go:779] ["**start new request**"] [request="{\"header\":{\"cluster_id\":7009090993806118458,\"ticdc_version\":\"5.0.4\"},\"region_id\":98161,\"region_epoch\":{\"conf_ver\":5,\"version\":51},\"checkpoint_ts\":432041039194226689,\"start_key\":\"bURETEpvYkH/ZP9kSWR4TGn/c3T/AAAAAAD/AAAA9wAAAAD/AAAAbAAAAAD7\",\"end_key\":\"bURETEpvYkH/ZP9kSWR4TGn/c3T/AAAAAAD/AAAA9wAAAAD/AAAAbQAAAAD7\",\"request_id\":4345,\"Request\":null}"] [addr=localhost:20160]

[2022/03/24 15:09:31.319 +08:00] [INFO] [client.go:779] ["**start new request**"] [request="{\"header\":{\"cluster_id\":7009090993806118458,\"ticdc_version\":\"5.0.4\"},\"region_id\":98161,\"region_epoch\":{\"conf_ver\":5,\"version\":51},\"checkpoint_ts\":432041039194226689,\"start_key\":\"bURETEpvYkz/af9zdAAAAAD/AAD5AAAAAAD/AABsAAAAAAD6\",\"end_key\":\"bURETEpvYkz/af9zdAAAAAD/AAD5AAAAAAD/AABtAAAAAAD6\",\"request_id\":4346,\"Request\":null}"] [addr=localhost:20160]
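Two details of this log excerpt can be checked offline, sketched here in Python. The hex `span` start keys begin with the ASCII bytes `mDDLJobL` and `mDDLJobA`, which look like TiDB meta keys (prefix `m`) related to DDL jobs rather than `sbtest1` row keys (prefix `t`); only the first 8 bytes are decoded because the rest of each key is further encoded. The `checkpoint_ts` carried by the 15:09:31 requests can also be compared with the changefeed's `checkpoint-ts`. The helper names `key_prefix` and `physical_ms` are hypothetical:

```python
LOGICAL_BITS = 18  # low 18 bits of a TSO hold the logical counter

# Span start keys copied from the two "EventFeed disconnected" log lines.
SPAN_START_KEYS = {
    "requestID=4343": "6d44444c4a6f624cff69ff737400000000ff0000f90000000000ff00006c0000000000fa",
    "requestID=4344": "6d44444c4a6f6241ff64ff644964784c69ff7374ff0000000000ff000000f700000000ff0000006c00000000fb",
}


def key_prefix(hex_key: str, n: int = 8) -> str:
    """Decode the first n bytes of a hex-encoded key as ASCII."""
    return bytes.fromhex(hex_key)[:n].decode("ascii")


def physical_ms(tso: int) -> int:
    """Physical part of a TSO, in milliseconds since the Unix epoch."""
    return tso >> LOGICAL_BITS


changefeed_checkpoint_ts = 432040937796927489  # status.checkpoint-ts
request_checkpoint_ts = 432041039194226689     # checkpoint_ts in the 15:09:31 requests

for request, hex_key in SPAN_START_KEYS.items():
    print(request, key_prefix(hex_key))

lag_ms = physical_ms(request_checkpoint_ts) - physical_ms(changefeed_checkpoint_ts)
print(f"request checkpoint_ts is {lag_ms / 1000:.1f} s ahead of the changefeed checkpoint-ts")
```

With these inputs it prints `mDDLJobL` and `mDDLJobA` for the two spans and a gap of 386.8 s: the request checkpoint corresponds to about 15:09:30.852, while the changefeed checkpoint stays at 15:03:04.052.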

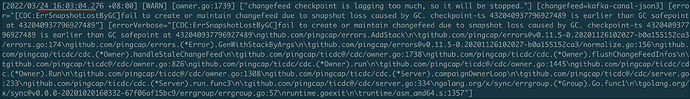

Question 2: If the changefeed received no requests, why is "changefeed checkpoint is lagging too much, so it will be stopped." still reported?

One hour after the changefeed was created, a GC error was reported; gc-ttl = 3600 (1 hour).
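The one-hour timing is consistent with a simple check, sketched here in Python. It assumes (not confirmed against the TiCDC v5.0.4 source) that a changefeed is stopped once the current time minus the physical time of its `checkpoint-ts` exceeds `gc-ttl`; `is_lagging_too_much` and `tso_physical` are hypothetical helpers, not TiCDC APIs. Because `checkpoint-ts` never moved past `start-ts`, the limit is reached one hour after 15:03:04.052:

```python
from datetime import datetime, timedelta, timezone

LOGICAL_BITS = 18  # low 18 bits of a TSO hold the logical counter
GC_TTL = timedelta(seconds=3600)  # gc-ttl = 3600 from the post
CST = timezone(timedelta(hours=8))  # assumed: the cluster logs use +08:00
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def tso_physical(tso: int) -> datetime:
    """Wall-clock time encoded in the physical part of a TSO."""
    return (EPOCH + timedelta(milliseconds=tso >> LOGICAL_BITS)).astimezone(CST)


def is_lagging_too_much(checkpoint_ts: int, now: datetime, gc_ttl: timedelta = GC_TTL) -> bool:
    # ASSUMPTION: stop once the checkpoint lags the current time by more than gc-ttl.
    return now - tso_physical(checkpoint_ts) > gc_ttl


checkpoint_ts = 432040937796927489  # equal to start-ts; never advanced
deadline = tso_physical(checkpoint_ts) + GC_TTL
print("stop expected after", deadline.isoformat(timespec="milliseconds"))
print(is_lagging_too_much(checkpoint_ts, deadline + timedelta(seconds=1)))
```

Under that assumption the deadline is 2022-03-24T16:03:04.052+08:00, about one hour after the changefeed's create-time.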

【Business Impact】

【TiDB Version】

Test environment, v5.0.4

【Attachments】

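- Relevant logs, configuration files, Grafana monitoring (https://metricstool.pingcap.com/)

- TiUP Cluster Display output

- TiUP Cluster Edit config output

- TiDB-Overview monitoring

- Grafana monitoring for the relevant components (e.g. BR, TiDB-binlog, TiCDC)

- Logs of the relevant components (covering 1 hour before and after the problem)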
