tiup cluster edit-config tidb-test2

Starting component cluster: /home/admin/.tiup/components/cluster/v1.5.3/tiup-cluster edit-config tidb-test2

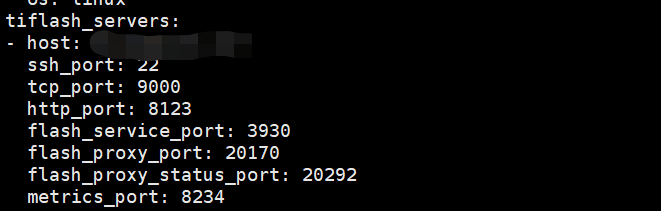

New topology could not be saved: immutable field changed: removed TiFlashServers.0.Host with value ‘10.21.206.77’, removed TiFlashServers.0.ssh_port with value ‘22’, removed TiFlashServers.0.TCPPort with value ‘9000’, removed TiFlashServers.0.HTTPPort with value ‘8123’, removed TiFlashServers.0.FlashServicePort with value ‘3930’, removed TiFlashServers.0.FlashProxyPort with value ‘20170’, removed TiFlashServers.0.FlashProxyStatusPort with value ‘20292’, removed TiFlashServers.0.StatusPort with value ‘8234’, removed TiFlashServers.0.DeployDir with value ‘/tidb-deploy/tiflash’, removed TiFlashServers.0.data_dir with value ‘/data17/tiflash,/data18/tiflash,/data19/tiflash,/data20/tiflash’, removed TiFlashServers.0.LogDir with value ‘/tidb-deploy/tiflash/log’, removed TiFlashServers.0.Offline with value ‘true’, removed TiFlashServers.0.Arch with value ‘amd64’, removed TiFlashServers.0.OS with value ‘linux’

Do you want to continue editing? [Y/n]: (default=Y) Y

New topology could not be saved: immutable field changed: removed TiFlashServers.0.Host with value ‘10.21.206.77’, removed TiFlashServers.0.ssh_port with value ‘22’, removed TiFlashServers.0.TCPPort with value ‘9000’, removed TiFlashServers.0.HTTPPort with value ‘8123’, removed TiFlashServers.0.FlashServicePort with value ‘3930’, removed TiFlashServers.0.FlashProxyPort with value ‘20170’, removed TiFlashServers.0.FlashProxyStatusPort with value ‘20292’, removed TiFlashServers.0.StatusPort with value ‘8234’, removed TiFlashServers.0.DeployDir with value ‘/tidb-deploy/tiflash’, removed TiFlashServers.0.data_dir with value ‘/data17/tiflash,/data18/tiflash,/data19/tiflash,/data20/tiflash’, removed TiFlashServers.0.LogDir with value ‘/tidb-deploy/tiflash/log’, removed TiFlashServers.0.Offline with value ‘true’, removed TiFlashServers.0.Arch with value ‘amd64’, removed TiFlashServers.0.OS with value ‘linux’

Do you want to continue editing? [Y/n]: (default=Y) Y

New topology could not be saved: immutable field changed: removed TiFlashServers.0.Host with value ‘10.21.206.77’, removed TiFlashServers.0.ssh_port with value ‘22’, removed TiFlashServers.0.TCPPort with value ‘9000’, removed TiFlashServers.0.HTTPPort with value ‘8123’, removed TiFlashServers.0.FlashServicePort with value ‘3930’, removed TiFlashServers.0.FlashProxyPort with value ‘20170’, removed TiFlashServers.0.FlashProxyStatusPort with value ‘20292’, removed TiFlashServers.0.StatusPort with value ‘8234’, removed TiFlashServers.0.DeployDir with value ‘/tidb-deploy/tiflash’, removed TiFlashServers.0.data_dir with value ‘/data17/tiflash,/data18/tiflash,/data19/tiflash,/data20/tiflash’, removed TiFlashServers.0.LogDir with value ‘/tidb-deploy/tiflash/log’, removed TiFlashServers.0.Offline with value ‘true’, removed TiFlashServers.0.Arch with value ‘amd64’, removed TiFlashServers.0.OS with value ‘linux’

Do you want to continue editing? [Y/n]: (default=Y) G

New topology could not be saved: immutable field changed: removed TiFlashServers.0.Host with value ‘10.21.206.77’, removed TiFlashServers.0.ssh_port with value ‘22’, removed TiFlashServers.0.TCPPort with value ‘9000’, removed TiFlashServers.0.HTTPPort with value ‘8123’, removed TiFlashServers.0.FlashServicePort with value ‘3930’, removed TiFlashServers.0.FlashProxyPort with value ‘20170’, removed TiFlashServers.0.FlashProxyStatusPort with value ‘20292’, removed TiFlashServers.0.StatusPort with value ‘8234’, removed TiFlashServers.0.DeployDir with value ‘/tidb-deploy/tiflash’, removed TiFlashServers.0.data_dir with value ‘/data17/tiflash,/data18/tiflash,/data19/tiflash,/data20/tiflash’, removed TiFlashServers.0.LogDir with value ‘/tidb-deploy/tiflash/log’, removed TiFlashServers.0.Offline with value ‘true’, removed TiFlashServers.0.Arch with value ‘amd64’, removed TiFlashServers.0.OS with value ‘linux’

Do you want to continue editing? [Y/n]: (default=Y) Y

New topology could not be saved: immutable field changed: removed TiFlashServers.0.Host with value ‘10.21.206.77’, removed TiFlashServers.0.ssh_port with value ‘22’, removed TiFlashServers.0.TCPPort with value ‘9000’, removed TiFlashServers.0.HTTPPort with value ‘8123’, removed TiFlashServers.0.FlashServicePort with value ‘3930’, removed TiFlashServers.0.FlashProxyPort with value ‘20170’, removed TiFlashServers.0.FlashProxyStatusPort with value ‘20292’, removed TiFlashServers.0.StatusPort with value ‘8234’, removed TiFlashServers.0.DeployDir with value ‘/tidb-deploy/tiflash’, removed TiFlashServers.0.data_dir with value ‘/data17/tiflash,/data18/tiflash,/data19/tiflash,/data20/tiflash’, removed TiFlashServers.0.LogDir with value ‘/tidb-deploy/tiflash/log’, removed TiFlashServers.0.Offline with value ‘true’, removed TiFlashServers.0.Arch with value ‘amd64’, removed TiFlashServers.0.OS with value ‘linux’

Do you want to continue editing? [Y/n]: (default=Y)

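In the last step of manually removing TiFlash, deleting the TiFlash entry from the configuration cannot be saved. Entering Y just reopens the editing page.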