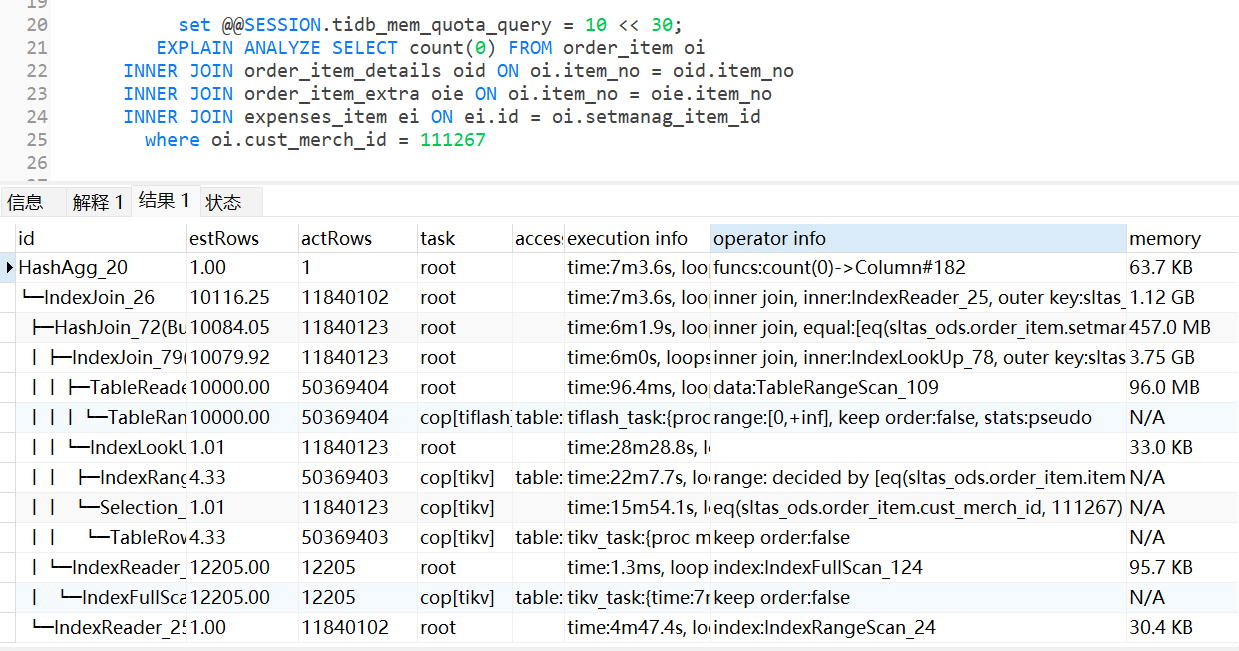

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |
|---|---|---|---|---|---|---|---|---|
| └─IndexJoin_26 | 10116.25 | 11840102 | root | | time:7m3.6s, loops:11564, inner:{total:5m12.6s, concurrency:5, task:471, construct:12.5s, fetch:4m56.7s, build:3.3s}, probe:3.92s | inner join, inner:IndexReader_25, outer key:sltas_ods.order_item.item_no, inner key:sltas_ods.order_item_details.item_no, equal cond:eq(sltas_ods.order_item.item_no, sltas_ods.order_item_details.item_no) | 1.12 GB | N/A |
| ├─HashJoin_72(Build) | 10084.05 | 11840123 | root | | time:6m1.9s, loops:11565, build_hash_table:{total:6m0.1s, fetch:5m58.9s, build:1.15s}, probe:{concurrency:5, total:31m3.3s, max:7m3s, probe:1m3s, fetch:30m0.4s} | inner join, equal:[eq(sltas_ods.order_item.setmanag_item_id, sltas_ods.expenses_item.id)] | 457.0 MB | 0 Bytes |
| │ ├─IndexJoin_79(Build) | 10079.92 | 11840123 | root | | time:6m0s, loops:11564, inner:{total:29m57s, concurrency:5, task:1977, construct:52.6s, fetch:29m1s, build:3.41s}, probe:12.3s | inner join, inner:IndexLookUp_78, outer key:sltas_ods.order_item_extra.item_no, inner key:sltas_ods.order_item.item_no, equal cond:eq(sltas_ods.order_item_extra.item_no, sltas_ods.order_item.item_no) | 3.75 GB | N/A |
| │ │ ├─TableReader_110(Build) | 10000.00 | 50369404 | root | | time:96.4ms, loops:49332, cop_task: {num: 290, max: 518ms, min: 3.84ms, avg: 93.2ms, p95: 171.9ms, rpc_num: 290, rpc_time: 27s, copr_cache_hit_ratio: 0.00} | data:TableRangeScan_109 | 96.0 MB | N/A |
| │ │ │ └─TableRangeScan_109 | 10000.00 | 50369404 | cop[tiflash] | table:oie | tiflash_task:{proc max:59.8ms, min:2.47ms, p80:25.9ms, p95:35.4ms, iters:1468, tasks:290, threads:290} | range:[0,+inf], keep order:false, stats:pseudo | N/A | N/A |
| │ │ └─IndexLookUp_78(Probe) | 1.01 | 11840123 | root | | time:28m28.8s, loops:14579, index_task: {total_time: 22m9.8s, fetch_handle: 22m9.8s, build: 7.56ms, wait: 32.3ms}, table_task: {total_time: 2h7m44.4s, num: 9858, concurrency: 9885} | | 33.0 KB | N/A |
| │ │ ├─IndexRangeScan_75(Build) | 4.33 | 50369403 | cop[tikv] | table:oi, index:idx_item_no(item_no) | time:22m7.7s, loops:55136, cop_task: {num: 3951, max: 1.15s, min: 570.5µs, avg: 348.3ms, p95: 768.4ms, max_proc_keys: 25000, p95_proc_keys: 25000, tot_proc: 21m20.8s, tot_wait: 279ms, rpc_num: 3951, rpc_time: 22m56s, copr_cache_hit_ratio: 0.02}, tikv_task:{proc max:1.09s, min:0s, p80:630ms, p95:719ms, iters:66776, tasks:3951}, scan_detail: {total_process_keys: 50338334, total_keys: 100676748, rocksdb: {delete_skipped_count: 0, key_skipped_count: 50338334, block: {cache_hit_count: 517151368, read_count: 22380, read_byte: 237.5 MB}}} | range: decided by [eq(sltas_ods.order_item.item_no, sltas_ods.order_item_extra.item_no)], keep order:false | N/A | N/A |
| │ │ └─Selection_77(Probe) | 1.01 | 11840123 | cop[tikv] | | time:15m54.1s, loops:28974, cop_task: {num: 10709, max: 368.3ms, min: 277.4µs, avg: 90.2ms, p95: 209.3ms, max_proc_keys: 9964, p95_proc_keys: 9672, tot_proc: 14m49s, tot_wait: 802ms, rpc_num: 10709, rpc_time: 16m5.5s, copr_cache_hit_ratio: 0.05}, tikv_task:{proc max:293ms, min:0s, p80:144ms, p95:195ms, iters:99505, tasks:10709}, scan_detail: {total_process_keys: 49757647, total_keys: 100753931, rocksdb: {delete_skipped_count: 0, key_skipped_count: 57001240, block: {cache_hit_count: 362250163, read_count: 245780, read_byte: 2.52 GB}}} | eq(sltas_ods.order_item.cust_merch_id, 111267) | N/A | N/A |
| │ │ └─TableRowIDScan_76 | 4.33 | 50369403 | cop[tikv] | table:oi | tikv_task:{proc max:293ms, min:0s, p80:143ms, p95:195ms, iters:99505, tasks:10709} | keep order:false | N/A | N/A |
| │ └─IndexReader_125(Probe) | 12205.00 | 12205 | root | | time:1.3ms, loops:13, cop_task: {num: 1, max: 1.25ms, proc_keys: 0, rpc_num: 1, rpc_time: 1.24ms, copr_cache_hit_ratio: 1.00} | index:IndexFullScan_124 | 95.7 KB | N/A |
| │ └─IndexFullScan_124 | 12205.00 | 12205 | cop[tikv] | table:ei, index:merchant_base_info_id(merchant_base_info_id) | tikv_task:{time:7ms, loops:16} | keep order:false | N/A | N/A |
| └─IndexReader_25(Probe) | 1.00 | 11840102 | root | | time:4m47.4s, loops:12569, cop_task: {num: 1781, max: 1.01s, min: 262.6µs, avg: 188.2ms, p95: 700.2ms, max_proc_keys: 25000, p95_proc_keys: 25000, tot_proc: 5m3.1s, tot_wait: 142ms, rpc_num: 1781, rpc_time: 5m35.2s, copr_cache_hit_ratio: 0.08} | index:IndexRangeScan_24 | 30.4 KB | N/A |
| └─IndexRangeScan_24 | 1.00 | 11840102 | cop[tikv] | table:oid, index:idx_item_no_order_item_details_0(item_no) | tikv_task:{proc max:880ms, min:0s, p80:562ms, p95:646ms, iters:18186, tasks:1781}, scan_detail: {total_process_keys: 11796605, total_keys: 23593240, rocksdb: {delete_skipped_count: 0, key_skipped_count: 11796605, block: {cache_hit_count: 122117697, read_count: 15331, read_byte: 222.9 MB}}} | range: decided by [eq(sltas_ods.order_item_details.item_no, sltas_ods.order_item.item_no)], keep order:false | N/A | N/A |
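
In the plan above, the `task`, `construct`, `fetch` and `build` entries under `inner`, together with the `probe` entry, of `IndexJoin_26` and `IndexJoin_79` correspond to the phases of an index join. Outer rows are grouped into tasks (batches), lookup keys are constructed from each batch, matching inner rows are fetched through the inner side's index, a hash table is built over those rows, and the batch's outer rows then probe it. What follows is only a minimal single-threaded sketch of that flow, not TiDB's implementation. It assumes in-memory rows and uses a Python dict to stand in for the inner index. The function name `index_join` and the `batch_size` parameter are hypothetical, and TiDB's executor runs these phases concurrently (`concurrency:5` in the plan) and differs in detail.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Row = Dict[str, object]


def index_join(
    outer_rows: Iterable[Row],
    inner_index: Dict[object, List[Row]],
    outer_key: str,
    inner_key: str,
    batch_size: int = 3,
) -> Iterator[Tuple[Row, Row]]:
    """Hypothetical batched index join (inner equi-join), single-threaded."""

    def run_task(batch: List[Row]) -> Iterator[Tuple[Row, Row]]:
        # construct: collect the distinct lookup keys of this outer batch
        keys = {row[outer_key] for row in batch}
        # fetch: point lookups in the dict that stands in for the inner index
        fetched = [r for k in keys for r in inner_index.get(k, [])]
        # build: hash table over the fetched inner rows, keyed by the join column
        lookup: Dict[object, List[Row]] = defaultdict(list)
        for r in fetched:
            lookup[r[inner_key]].append(r)
        # probe: match every outer row of the batch against the hash table
        for o in batch:
            for i in lookup.get(o[outer_key], []):
                yield o, i

    batch: List[Row] = []
    for row in outer_rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield from run_task(batch)
            batch = []
    if batch:  # flush the final, partially filled batch
        yield from run_task(batch)


# Usage with toy data (hypothetical rows, not taken from the plan)
outer = [{"item_no": 1}, {"item_no": 2}, {"item_no": 3}, {"item_no": 2}]
inner = {
    1: [{"item_no": 1, "detail": "a"}],
    2: [{"item_no": 2, "detail": "b"}, {"item_no": 2, "detail": "c"}],
}
pairs = list(index_join(outer, inner, "item_no", "item_no"))
print([(o["item_no"], i["detail"]) for o, i in pairs])
# [(1, 'a'), (2, 'b'), (2, 'c'), (2, 'b'), (2, 'c')]
```

Batching lets a single round of index lookups serve many outer rows. Under this model, the `task:471` and `task:1977` counts would be the number of such batches each join processed.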