为提高效率,提问时请提供以下信息,问题描述清晰可优先响应。

- 【TiDB 版本】:

v4.0.5 - 【问题描述】:

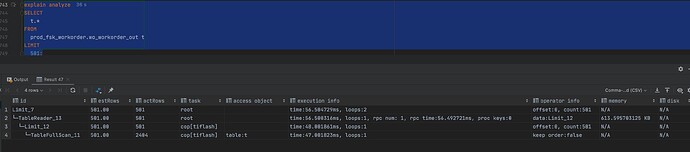

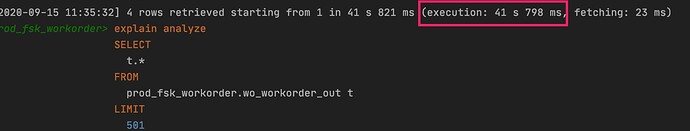

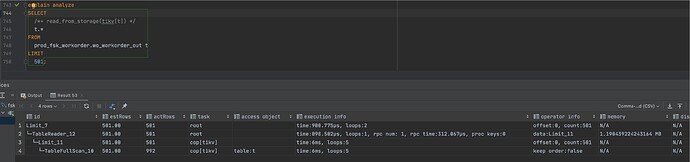

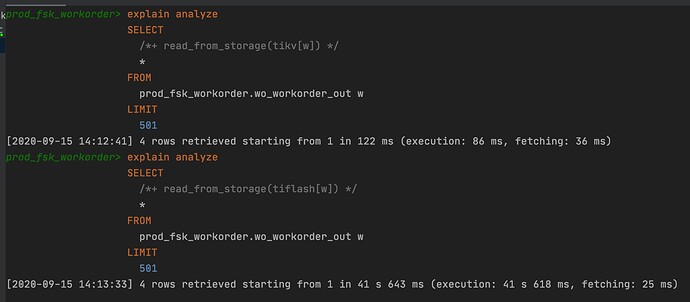

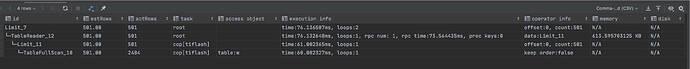

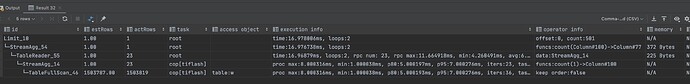

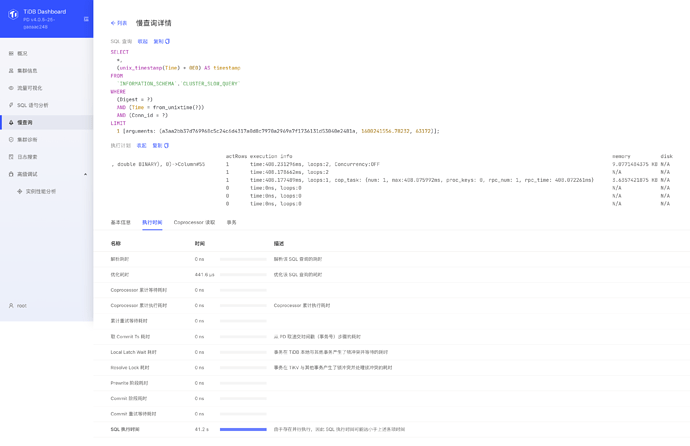

通过 explain 发现走tiflash,查询巨慢,40s+

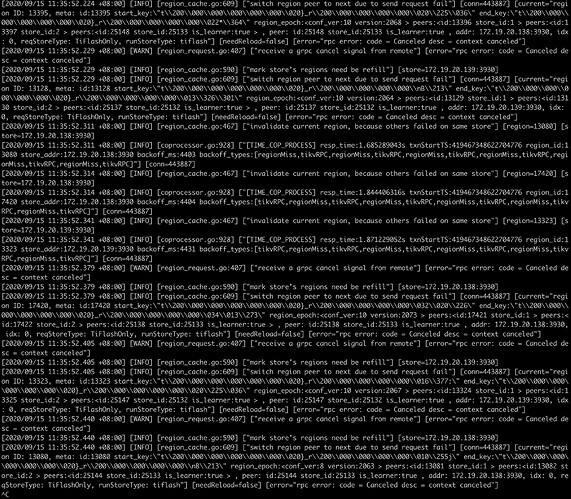

大量日志

[2020/09/10 11:06:13.553 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.622336401s txnStartTS:419353633513537578 region_id:17168 store_addr:172.19.20.138:3930 backoff_ms:14288 backoff_types:[tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=150]

[2020/09/10 11:06:13.675 +08:00] [WARN] [region_request.go:407] ["receive a grpc cancel signal from remote"] [error="rpc error: code = Canceled desc = context canceled"]

[2020/09/10 11:06:13.676 +08:00] [INFO] [region_cache.go:590] ["mark store's regions need be refill"] [store=172.19.20.138:3930]

[2020/09/10 11:06:13.676 +08:00] [INFO] [region_cache.go:609] ["switch region peer to next due to send request fail"] [conn=150] [current="region ID: 13011, meta: id:13011 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\007\\031\\237\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\010\\255j\" region_epoch:<conf_ver:4 version:2062 > peers:<id:13012 store_id:1 > peers:<id:13013 store_id:2 > peers:<id:18067 store_id:68 is_learner:true > , peer: id:18067 store_id:68 is_learner:true , addr: 172.19.20.138:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash"] [needReload=false] [error="rpc error: code = Canceled desc = context canceled"]

[2020/09/10 11:06:13.686 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=12945] [store=172.19.20.139:3930]

[2020/09/10 11:06:13.686 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.521358194s txnStartTS:419353633513537578 region_id:12945 store_addr:172.19.20.139:3930 backoff_ms:14422 backoff_types:[tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=150]

[2020/09/10 11:06:13.733 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=16424] [store=172.19.20.138:3930]

[2020/09/10 11:06:13.733 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.426339308s txnStartTS:419353633513537578 region_id:16424 store_addr:172.19.20.138:3930 backoff_ms:14468 backoff_types:[tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=150]

[2020/09/10 11:06:13.871 +08:00] [WARN] [region_request.go:407] ["receive a grpc cancel signal from remote"] [error="rpc error: code = Canceled desc = context canceled"]

[2020/09/10 11:06:13.871 +08:00] [INFO] [region_cache.go:590] ["mark store's regions need be refill"] [store=172.19.20.138:3930]

[2020/09/10 11:06:13.871 +08:00] [INFO] [region_cache.go:609] ["switch region peer to next due to send request fail"] [conn=150] [current="region ID: 13080, meta: id:13080 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\010\\255j\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\

B\\213\" region_epoch:<conf_ver:4 version:2063 > peers:<id:13081 store_id:1 > peers:<id:13082 store_id:2 > peers:<id:18068 store_id:68 is_learner:true > , peer: id:18068 store_id:68 is_learner:true , addr: 172.19.20.138:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash"] [needReload=false] [error="rpc error: code = Canceled desc = context canceled"]

[2020/09/10 11:06:13.892 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=13257] [store=172.19.20.139:3930]

[2020/09/10 11:06:13.892 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.359374604s txnStartTS:419353633513537578 region_id:13257 store_addr:172.19.20.139:3930 backoff_ms:14625 backoff_types:[tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=150]

[2020/09/10 11:06:13.943 +08:00] [WARN] [region_request.go:407] ["receive a grpc cancel signal from remote"] [error="rpc error: code = Canceled desc = context canceled"]

通过 SELECT * FROM information_schema.tiflash_replica; 查看,数据正常

执行语句为 select * from xxx limit 500 offset 1000;

目前已经关闭改db所对应的tiflash,恢复正常,响应时间 20ms 左右

重新开启后,依然报错

[2020/09/10 11:26:50.649 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.883329513s txnStartTS:419353950786420802 region_id:17420 store_addr:172.19.20.139:3930 backoff_ms:41003 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=225]

[2020/09/10 11:26:50.649 +08:00] [WARN] [backoff.go:316] ["regionMiss backoffer.maxSleep 40000ms is exceeded, errors:\

region_id:17420, region_ver:2073, store_type:tiflash, peer_addr:172.19.20.139:3930, error:epoch_not_match:<> at 2020-09-10T11:26:48.265896402+08:00\

send tikv request error: rpc error: code = Canceled desc = context canceled, ctx: region ID: 17420, meta: id:17420 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\032\\020\\226\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\034\\013\\273\" region_epoch:<conf_ver:8 version:2073 > peers:<id:17421 store_id:1 > peers:<id:17422 store_id:2 > peers:<id:23019 store_id:18157 is_learner:true > , peer: id:23019 store_id:18157 is_learner:true , addr: 172.19.20.139:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2020-09-10T11:26:48.766190406+08:00\

region_id:17420, region_ver:2073, store_type:tiflash, peer_addr:172.19.20.139:3930, error:epoch_not_match:<> at 2020-09-10T11:26:50.649392364+08:00"]

[2020/09/10 11:26:50.744 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=12945] [store=172.19.20.138:3930]

[2020/09/10 11:26:50.744 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.203336061s txnStartTS:419353950786420802 region_id:12945 store_addr:172.19.20.138:3930 backoff_ms:41098 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=225]

[2020/09/10 11:26:50.744 +08:00] [WARN] [backoff.go:316] ["regionMiss backoffer.maxSleep 40000ms is exceeded, errors:\

region_id:12945, region_ver:2061, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:49.040579365+08:00\

send tikv request error: rpc error: code = Canceled desc = context canceled, ctx: region ID: 12945, meta: id:12945 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\005\\204\\344\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\007\\031\\237\" region_epoch:<conf_ver:8 version:2061 > peers:<id:12946 store_id:1 > peers:<id:12947 store_id:2 > peers:<id:23009 store_id:68 is_learner:true > , peer: id:23009 store_id:68 is_learner:true , addr: 172.19.20.138:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2020-09-10T11:26:49.541135504+08:00\

region_id:12945, region_ver:2061, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:50.744346859+08:00"]

[2020/09/10 11:26:50.981 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=12759] [store=172.19.20.138:3930]

[2020/09/10 11:26:50.981 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.688309744s txnStartTS:419353950786420802 region_id:12759 store_addr:172.19.20.138:3930 backoff_ms:41337 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=225]

[2020/09/10 11:26:50.981 +08:00] [WARN] [backoff.go:316] ["regionMiss backoffer.maxSleep 40000ms is exceeded, errors:\

region_id:12759, region_ver:2061, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:48.7932128+08:00\

send tikv request error: rpc error: code = Canceled desc = context canceled, ctx: region ID: 12759, meta: id:12759 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\002[x\" region_epoch:<conf_ver:6 version:2061 > peers:<id:12760 store_id:1 > peers:<id:12761 store_id:2 > peers:<id:23007 store_id:68 is_learner:true > , peer: id:23007 store_id:68 is_learner:true , addr: 172.19.20.138:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2020-09-10T11:26:49.293741434+08:00\

region_id:12759, region_ver:2061, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:50.981960744+08:00"]

[2020/09/10 11:26:51.083 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=13011] [store=172.19.20.138:3930]

[2020/09/10 11:26:51.083 +08:00] [INFO] [coprocessor.go:928] ["[TIME_COP_PROCESS] resp_time:1.676328245s txnStartTS:419353950786420802 region_id:13011 store_addr:172.19.20.138:3930 backoff_ms:41436 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]"] [conn=225]

[2020/09/10 11:26:51.083 +08:00] [WARN] [backoff.go:316] ["regionMiss backoffer.maxSleep 40000ms is exceeded, errors:\

region_id:13011, region_ver:2062, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:48.906761904+08:00\

send tikv request error: rpc error: code = Canceled desc = context canceled, ctx: region ID: 13011, meta: id:13011 start_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\007\\031\\237\" end_key:\"t\\200\\000\\000\\000\\000\\000\\020}_r\\200\\000\\000\\000\\000\\010\\255j\" region_epoch:<conf_ver:6 version:2062 > peers:<id:13012 store_id:1 > peers:<id:13013 store_id:2 > peers:<id:23010 store_id:68 is_learner:true > , peer: id:23010 store_id:68 is_learner:true , addr: 172.19.20.138:3930, idx: 0, reqStoreType: TiFlashOnly, runStoreType: tiflash, try next peer later at 2020-09-10T11:26:49.407319591+08:00\

region_id:13011, region_ver:2062, store_type:tiflash, peer_addr:172.19.20.138:3930, error:epoch_not_match:<> at 2020-09-10T11:26:51.083512728+08:00"]

[2020/09/10 11:26:51.266 +08:00] [INFO] [region_cache.go:467] ["invalidate current region, because others failed on same store"] [region=13128] [store=172.19.20.138:3930]

疑似与该问题有关 tidb 配置修改,pd dashboard 时间显示错误

enable-streaming: true

若提问为性能优化、故障排查类问题,请下载脚本运行。终端输出的打印结果,请务必全选并复制粘贴上传。