» store

{

"count": 3,

"stores": [

{

"store": {

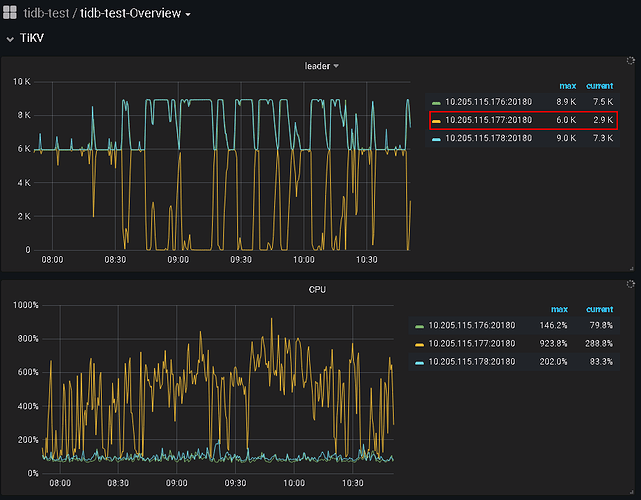

"id": 1,

"address": "10.205.115.176:20160",

"version": "4.0.4",

"status_address": "10.205.115.176:20180",

"git_hash": "28e3d44b00700137de4fa933066ab83e5f8306cf",

"start_timestamp": 1596434810,

"deploy_path": "/data/deploy/tikv-20160/bin",

"last_heartbeat": 1597374325297816963,

"state_name": "Up"

},

"status": {

"capacity": "590.5GiB",

"available": "236GiB",

"used_size": "302.1GiB",

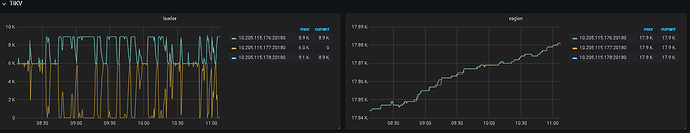

"leader_count": 8945,

"leader_weight": 1,

"leader_score": 8945,

"leader_size": 702553,

"region_count": 17881,

"region_weight": 1,

"region_score": 1400735,

"region_size": 1400735,

"start_ts": "2020-08-03T14:06:50+08:00",

"last_heartbeat_ts": "2020-08-14T11:05:25.297816963+08:00",

"uptime": "260h58m35.297816963s"

}

},

{

"store": {

"id": 4,

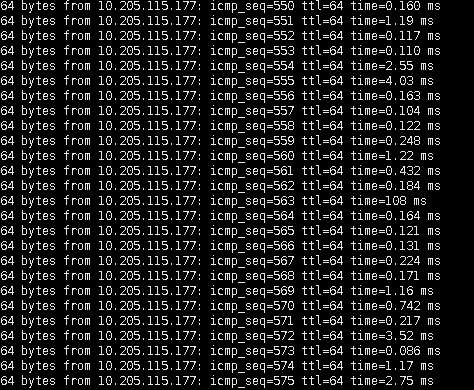

"address": "10.205.115.177:20160",

"version": "4.0.4",

"status_address": "10.205.115.177:20180",

"git_hash": "28e3d44b00700137de4fa933066ab83e5f8306cf",

"start_timestamp": 1596765346,

"deploy_path": "/data/deploy/tikv-20160/bin",

"last_heartbeat": 1597374299396790129,

"state_name": "Disconnected"

},

"status": {

"capacity": "590.5GiB",

"available": "250.3GiB",

"used_size": "302.9GiB",

"leader_count": 0,

"leader_weight": 1,

"leader_score": 0,

"leader_size": 0,

"region_count": 17881,

"region_weight": 1,

"region_score": 1399857,

"region_size": 1399857,

"start_ts": "2020-08-07T09:55:46+08:00",

"last_heartbeat_ts": "2020-08-14T11:04:59.396790129+08:00",

"uptime": "169h9m13.396790129s"

}

},

{

"store": {

"id": 5,

"address": "10.205.115.178:20160",

"version": "4.0.4",

"status_address": "10.205.115.178:20180",

"git_hash": "28e3d44b00700137de4fa933066ab83e5f8306cf",

"start_timestamp": 1596434999,

"deploy_path": "/data/deploy/tikv-20160/bin",

"last_heartbeat": 1597374323235548464,

"state_name": "Up"

},

"status": {

"capacity": "590.5GiB",

"available": "247.7GiB",

"used_size": "302.1GiB",

"leader_count": 8936,

"leader_weight": 1,

"leader_score": 8936,

"leader_size": 697304,

"region_count": 17881,

"region_weight": 1,

"region_score": 1400735,

"region_size": 1400735,

"start_ts": "2020-08-03T14:09:59+08:00",

"last_heartbeat_ts": "2020-08-14T11:05:23.235548464+08:00",

"uptime": "260h55m24.235548464s"

}

}

]

}

» scheduler show

[

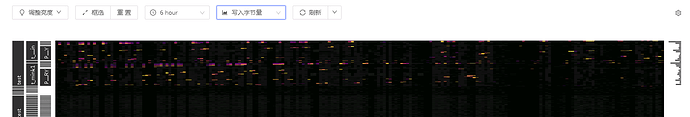

"balance-hot-region-scheduler",

"balance-leader-scheduler",

"balance-region-scheduler",

"label-scheduler"

]

»
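
The `store` output above can also be checked with a script rather than by eye. The following is a minimal sketch, assuming the JSON has been captured as a string; the function name `stores_not_up` is hypothetical, and the sample is trimmed to a few of the fields shown above.

```python
import json


def stores_not_up(store_output: str) -> list:
    """Return (id, address, state_name) for every store whose state is not "Up".

    Hypothetical helper: it relies only on the "stores", "store", "id",
    "address" and "state_name" keys that appear in the output above.
    """
    data = json.loads(store_output)
    return [
        (s["store"]["id"], s["store"]["address"], s["store"]["state_name"])
        for s in data["stores"]
        if s["store"]["state_name"] != "Up"
    ]


# Trimmed copy of the output above, keeping only the fields the helper reads
# plus leader_count for context.
sample = """
{
  "count": 3,
  "stores": [
    {"store": {"id": 1, "address": "10.205.115.176:20160", "state_name": "Up"},
     "status": {"leader_count": 8945}},
    {"store": {"id": 4, "address": "10.205.115.177:20160", "state_name": "Disconnected"},
     "status": {"leader_count": 0}},
    {"store": {"id": 5, "address": "10.205.115.178:20160", "state_name": "Up"},
     "status": {"leader_count": 8936}}
  ]
}
"""

print(stores_not_up(sample))
# prints [(4, '10.205.115.177:20160', 'Disconnected')]
```

For the output shown here, the only store reported is id 4, which is also the one with `leader_count` 0.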