How do I uninstall a TiDB cluster after a simulated deployment on a single machine?

Which deployment method did you use, tidb-ansible or tiup? And did you use tiup playground?

I deployed it with tiup cluster.

Hello,

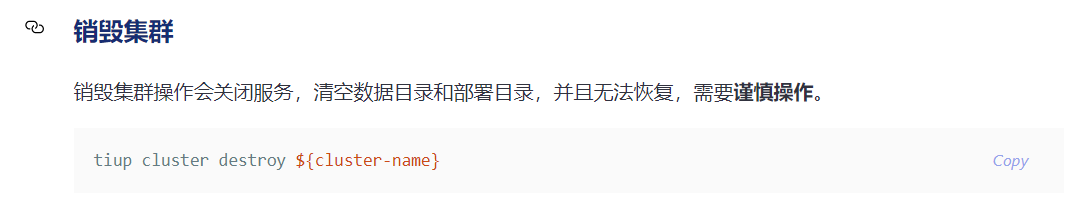

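A cluster deployed with tiup cluster deploy can be destroyed with tiup cluster destroy cluster-name; see tiup cluster --help for details. After the command finishes, check that the deploy and data directories on each node have actually been removed. The paths can be looked up with the display command.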

https://docs.pingcap.com/zh/tidb/stable/maintain-tidb-using-tiup#销毁集群
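The sequence described above could be sketched roughly as follows. This is only an illustration: the helper names and the cluster name `demo-cluster` are hypothetical, and the script does nothing beyond assembling and running the `tiup cluster display` and `tiup cluster destroy` commands mentioned in the answer. Checking the leftover deploy and data directories remains a manual step based on the paths that display prints.

```python
import shutil
import subprocess


def teardown_commands(cluster_name):
    """Return the tiup invocations for inspecting and then destroying a cluster."""
    if not cluster_name:
        raise ValueError("cluster name must not be empty")
    return [
        # Show the topology first so the deploy/data paths are on record.
        ["tiup", "cluster", "display", cluster_name],
        # Destroy the cluster (stops services, removes deploy and data dirs).
        ["tiup", "cluster", "destroy", cluster_name],
    ]


def run_teardown(cluster_name):
    """Run each command in order and stop at the first failure."""
    if shutil.which("tiup") is None:
        raise RuntimeError("tiup not found on PATH")
    for argv in teardown_commands(cluster_name):
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # "demo-cluster" is a placeholder, not a name taken from this thread.
    for argv in teardown_commands("demo-cluster"):
        print(" ".join(argv))
```

Running display before destroy is a deliberate choice here: once the cluster metadata is gone, the directory paths are harder to recover.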

Before running destroy, I deleted the deploy and data directories. After that, destroy fails, and I cannot reinstall the cluster either. How should I handle this?

Could you share the tiup debug log so we can take a look?

I replaced the IP with xx.

2020-08-10T14:41:55.634+0800 DEBUG retry error: operation timed out after 1m0s

2020-08-10T14:41:55.634+0800 ERROR 10.x.x.31 error destroying grafana: timed out waiting for port 3000 to be stopped after 1m0s

2020-08-10T14:41:55.634+0800 DEBUG TaskFinish {"task": "DestroyCluster", "error": "failed to destroy grafana: 10.54.100.31 error destroying grafana: timed out waiting for port 3000 to be stopped after 1m0s: timed out waiting for port 3000 to be stopped after 1m0s", "errorVerbose": "timed out waiting for port 3000 to be stopped after 1m0s\
github.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\
\tgithub.com/pingcap/tiup@/pkg/cluster/module/wait_for.go:90\
github.com/pingcap/tiup/pkg/cluster/spec.PortStopped\
\tgithub.com/pingcap/tiup@/pkg/cluster/spec/instance.go:110\
github.com/pingcap/tiup/pkg/cluster/spec.(*instance).WaitForDown\
\tgithub.com/pingcap/tiup@/pkg/cluster/spec/instance.go:135\
github.com/pingcap/tiup/pkg/cluster/operation.DestroyComponent\
\tgithub.com/pingcap/tiup@/pkg/cluster/operation/destroy.go:200\
github.com/pingcap/tiup/pkg/cluster/operation.Destroy\
\tgithub.com/pingcap/tiup@/pkg/cluster/operation/destroy.go:45\
github.com/pingcap/tiup/pkg/cluster.(*Manager).DestroyCluster.func2\
\tgithub.com/pingcap/tiup@/pkg/cluster/manager.go:234\
github.com/pingcap/tiup/pkg/cluster/task.(*Func).Execute\
\tgithub.com/pingcap/tiup@/pkg/cluster/task/func.go:32\
github.com/pingcap/tiup/pkg/cluster/task.(*Serial).Execute\
\tgithub.com/pingcap/tiup@/pkg/cluster/task/task.go:189\
github.com/pingcap/tiup/pkg/cluster.(*Manager).DestroyCluster\
\tgithub.com/pingcap/tiup@/pkg/cluster/manager.go:238\
github.com/pingcap/tiup/components/cluster/command.newDestroyCmd.func1\
\tgithub.com/pingcap/tiup@/components/cluster/command/destroy.go:51\
github.com/spf13/cobra.(*Command).execute\
\tgithub.com/spf13/cobra@v1.0.0/command.go:842\
github.com/spf13/cobra.(*Command).ExecuteC\
\tgithub.com/spf13/cobra@v1.0.0/command.go:950\
github.com/spf13/cobra.(*Command).Execute\
\tgithub.com/spf13/cobra@v1.0.0/command.go:887\
github.com/pingcap/tiup/components/cluster/command.Execute\
\tgithub.com/pingcap/tiup@/components/cluster/command/root.go:242\
main.main\
\tgithub.com/pingcap/tiup@/components/cluster/main.go:19\
runtime.main\
\truntime/proc.go:203\
runtime.goexit\
\truntime/asm_arm64.s:1128\
10.x.x.31 error destroying grafana: timed out waiting for port 3000 to be stopped after 1m0s\
failed to destroy grafana"}

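Check whether the Grafana service on the Grafana server was shut down properly. Kill or stop it, then run destroy again. The destroy logic is to stop the service and then delete the deploy and data directories. You can check the service with ps or systemctl. Also check whether port 3000 is occupied by another application, because tiup only looks at whether the port has been closed.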
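To see quickly whether something is still listening on the ports in question, a small probe like the one below may help. It is a minimal sketch, assuming the services listen on TCP and are reachable on 127.0.0.1; the function name `port_in_use` is made up for illustration. It only reports that some process accepts connections, not which one, so ps or systemctl is still needed to identify the owner.

```python
import socket


def port_in_use(port, host="127.0.0.1", timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 3000 and 9090 are the ports that came up in this thread.
    for port in (3000, 9090):
        state = "in use" if port_in_use(port) else "free"
        print(f"port {port}: {state}")
```

If a port still shows as in use after the TiDB-managed service has been stopped, another application is probably holding it.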

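Right, that was it: this machine also had an Ambari Grafana running, and stopping it fixed the problem. After that, port 9090 came up as well. I checked, and it belonged to the single-machine instance I had originally installed with tiup playground; it seems tiup clean --all did not actually uninstall it. I suggest that the shutdown check use something like an xxx.pid file and verify whether the process with that PID has actually been killed.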
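The PID-file idea suggested above could look roughly like this. It is a hypothetical sketch of the proposal, not how tiup currently decides that a service has stopped; the function names and any PID-file path are assumptions, and the liveness check relies on POSIX signal 0.

```python
import os


def pid_alive(pid):
    """Return True if a process with this PID currently exists (POSIX)."""
    if pid <= 0:
        return False
    try:
        os.kill(pid, 0)  # signal 0 only checks existence, sends nothing
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def pidfile_alive(path):
    """Read a PID from a file and report whether that process is alive."""
    try:
        with open(path) as f:
            pid = int(f.read().strip())
    except (FileNotFoundError, ValueError):
        # A missing or malformed PID file is treated as "not running".
        return False
    return pid_alive(pid)
```

Compared with a port check, this ties the result to one specific process, so an unrelated service on the same port would not block the teardown. A stale PID file whose number has been reused by another process is the usual weakness of this approach.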

We will pass this request along. Thanks for the feedback.
