Here are the key log excerpts.

Dump starts:

[2020/07/13 17:08:44.715 +08:00] [INFO] [relay.go:89] [“current earliest active relay log”] [task=so_attachment] [unit=“binlog replication”] [“active relay log”=433f9876-b90c-11ea-ade9-fac753c33500.000001/mysql-bin.000029]

[2020/07/13 17:08:44.719 +08:00] [INFO] [subtask.go:204] [“start to run”] [subtask=so_attachment] [unit=Dump]

[2020/07/13 17:08:44.719 +08:00] [INFO] [worker.go:893] [“end to execute operation”] [component=“worker controller”] [“oplog ID”=302] []

[2020/07/13 17:08:45.012 +08:00] [WARN] [mydumper.go:167] ["--chunk-filesize disabled by --rows option"] [task=so_attachment] [unit=dump]

[2020/07/13 17:08:45.029 +08:00] [INFO] [mydumper.go:164] [“Server version reported as: 5.7.30-33-log”] [task=so_attachment] [unit=dump]

[2020/07/13 17:08:45.030 +08:00] [INFO] [mydumper.go:164] [“Connected to a MySQL server”] [task=so_attachment] [unit=dump]

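The dump finished about four minutes later (the log reports a cost time of 4m11.86s). This shows that after changing the parameters, dumping the files is fast.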

[2020/07/13 17:12:56.568 +08:00] [INFO] [mydumper.go:164] [“Finished dump at: 2020-07-13 17:12:56”] [task=so_attachment] [unit=dump]

[2020/07/13 17:12:56.578 +08:00] [INFO] [mydumper.go:116] [“dump data finished”] [task=so_attachment] [unit=dump] [“cost time”=4m11.858990186s]

[2020/07/13 17:12:56.580 +08:00] [INFO] [subtask.go:266] [“unit process returned”] [subtask=so_attachment] [unit=Dump] [stage=Finished] [status={}]
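As a quick cross-check of the dump duration, here is a minimal Python sketch that parses the leading timestamps of the "start to run" and "dump data finished" lines and subtracts them. The helper name `parse_ts` is my own, and the log lines are shortened to the fields that matter; it assumes the timestamp is always the first bracketed field in the format shown above.

```python
from datetime import datetime


def parse_ts(line: str) -> datetime:
    """Parse the first bracketed field of a DM worker log line as a timestamp."""
    raw = line[1:line.index("]")]  # e.g. "2020/07/13 17:08:44.719 +08:00"
    return datetime.strptime(raw, "%Y/%m/%d %H:%M:%S.%f %z")


# Lines shortened from the log above; only the timestamp is used.
start = parse_ts('[2020/07/13 17:08:44.719 +08:00] [INFO] [subtask.go:204] ["start to run"]')
end = parse_ts('[2020/07/13 17:12:56.578 +08:00] [INFO] [mydumper.go:116] ["dump data finished"]')

elapsed = end - start
print(f"dump took {elapsed.total_seconds():.3f} s")
```

The result is a little under 252 seconds, which agrees with the `cost time` field in the log.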

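The oms database already exists, so the CREATE DATABASE error below is harmless and the loader skips it. Next comes table creation.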

[2020/07/13 17:12:56.648 +08:00] [ERROR] [baseconn.go:178] [“execute statement failed”] [task=so_attachment] [unit=load] [query=“CREATE DATABASE oms;”] [argument="[]"] [error=“Error 1007: Can’t create database ‘oms’; database exists”]

[2020/07/13 17:12:56.649 +08:00] [ERROR] [db.go:176] [“execute statements failed after retry”] [task=so_attachment] [unit=load] [queries="[CREATE DATABASE oms;]"] [arguments="[]"] [error="[code=10006:class=database:scope=not-set:level=high] execute statement failed: CREATE DATABASE oms;: Error 1007: Can’t create database ‘oms’; database exists"]

[2020/07/13 17:12:56.649 +08:00] [INFO] [loader.go:973] [“database already exists, skip it”] [task=so_attachment] [unit=load] [“db schema file”=./dumped_data.so_attachment/oms-schema-create.sql]

[2020/07/13 17:12:56.649 +08:00] [INFO] [loader.go:1117] [“finish to create schema”] [task=so_attachment] [unit=load] [“schema file”=./dumped_data.so_attachment/oms-schema-create.sql]

[2020/07/13 17:12:56.667 +08:00] [INFO] [loader.go:1139] [“start to create table”] [task=so_attachment] [unit=load] [“table file”=./dumped_data.so_attachment/oms.so_attachment-schema.sql]

[2020/07/13 17:12:58.211 +08:00] [WARN] [db.go:166] [“execute transaction”] [task=so_attachment] [unit=load] [query="[USE oms; CREATE TABLE so_attachment (# omitted] [argument="[]"] [“cost time”=1.543604873s]

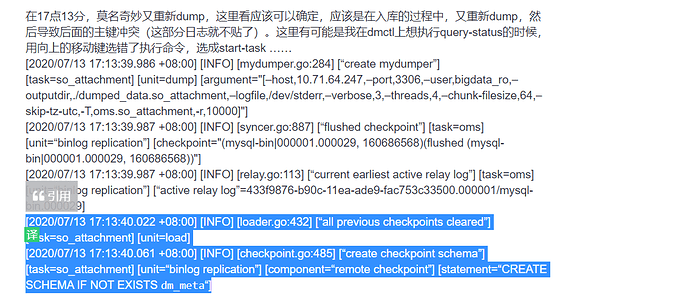

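At 17:13 the dump inexplicably started over. The log makes it fairly clear that a new dump began while the load was still in progress, and that this caused the primary key conflicts later on (I won't paste that part of the log). A likely cause: I meant to run query-status in dmctl, used the up arrow to recall a command from history, and picked start-task by mistake...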

[2020/07/13 17:13:39.986 +08:00] [INFO] [mydumper.go:284] ["create mydumper"] [task=so_attachment] [unit=dump] [argument="[--host,10.71.64.247,--port,3306,--user,bigdata_ro,--outputdir,./dumped_data.so_attachment,--logfile,/dev/stderr,--verbose,3,--threads,4,--chunk-filesize,64,--skip-tz-utc,-T,oms.so_attachment,-r,10000]"]

[2020/07/13 17:13:39.987 +08:00] [INFO] [syncer.go:887] [“flushed checkpoint”] [task=oms] [unit=“binlog replication”] [checkpoint="(mysql-bin|000001.000029, 160686568)(flushed (mysql-bin|000001.000029, 160686568))"]

[2020/07/13 17:13:39.987 +08:00] [INFO] [relay.go:113] [“current earliest active relay log”] [task=oms] [unit=“binlog replication”] [“active relay log”=433f9876-b90c-11ea-ade9-fac753c33500.000001/mysql-bin.000029]

[2020/07/13 17:13:40.022 +08:00] [INFO] [loader.go:432] [“all previous checkpoints cleared”] [task=so_attachment] [unit=load]

[2020/07/13 17:13:40.061 +08:00] [INFO] [checkpoint.go:485] [“create checkpoint schema”] [task=so_attachment] [unit=“binlog replication”] [component=“remote checkpoint”] [statement=“CREATE SCHEMA IF NOT EXISTS dm_meta“]

[2020/07/13 17:13:40.062 +08:00] [INFO] [checkpoint.go:504] [“create checkpoint table”] [task=so_attachment] [unit=“binlog replication”] [component=“remote checkpoint”] [statement=“CREATE TABLE IF NOT EXISTS dm_meta.so_attachment_syncer_checkpoint (\

\t\t\tid VARCHAR(32) NOT NULL,\

\t\t\tcp_schema VARCHAR(128) NOT NULL,\

\t\t\tcp_table VARCHAR(128) NOT NULL,\

\t\t\tbinlog_name VARCHAR(128),\

\t\t\tbinlog_pos INT UNSIGNED,\

\t\t\tis_global BOOLEAN,\

\t\t\tcreate_time timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,\

\t\t\tupdate_time timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,\

\t\t\tUNIQUE KEY uk_id_schema_table (id, cp_schema, cp_table)\

\t\t)”]

[2020/07/13 17:13:40.065 +08:00] [INFO] [syncer.go:384] [“all previous meta cleared”] [task=so_attachment] [unit=“binlog replication”]

[2020/07/13 17:13:40.070 +08:00] [INFO] [relay.go:89] [“current earliest active relay log”] [task=so_attachment] [unit=“binlog replication”] [“active relay log”=433f9876-b90c-11ea-ade9-fac753c33500.000001/mysql-bin.000029]

[2020/07/13 17:13:40.082 +08:00] [INFO] [subtask.go:204] [“start to run”] [subtask=so_attachment] [unit=Dump]

[2020/07/13 17:13:40.082 +08:00] [INFO] [worker.go:146] [initialized] [component=“worker controller”]

[2020/07/13 17:13:40.083 +08:00] [INFO] [purger.go:115] [“starting relay log purger”] [component=“relay purger”] [config=”{“interval”:3600,“expires”:0,“remain-space”:15}”]

[2020/07/13 17:13:40.083 +08:00] [INFO] [worker.go:182] [“start running”] [component=“worker controller”]

[2020/07/13 17:13:40.083 +08:00] [INFO] [server.go:112] [“start gRPC API”] [“listened address”=:8262]
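To confirm this kind of accidental restart without reading the whole log by eye, a small Python sketch like the following can list every subtask whose Dump unit was started more than once. It is only an illustration: the function name `dump_starts` and the regular expression are my own, the sample lines are copied or shortened from the excerpts above, and it assumes the "start to run" line always has the shape shown in this log. The curly quotes are normalized first because the forum rendering replaced the straight quotes.

```python
import re
from collections import defaultdict

# Map the curly quotes produced by the forum back to plain double quotes.
QUOTES = str.maketrans({"“": '"', "”": '"'})

# Matches lines such as:
# [ts] [INFO] [subtask.go:204] ["start to run"] [subtask=NAME] [unit=Dump]
START_RE = re.compile(
    r'^\[(?P<ts>[^\]]+)\].*\["start to run"\] '
    r'\[subtask=(?P<subtask>[^\]]+)\] \[unit=Dump\]'
)


def dump_starts(lines):
    """Return {subtask: [timestamps]} for every Dump-unit start found in lines."""
    starts = defaultdict(list)
    for line in lines:
        m = START_RE.match(line.translate(QUOTES))
        if m:
            starts[m.group("subtask")].append(m.group("ts"))
    return dict(starts)


sample = [
    '[2020/07/13 17:08:44.719 +08:00] [INFO] [subtask.go:204] [“start to run”] [subtask=so_attachment] [unit=Dump]',
    '[2020/07/13 17:12:56.667 +08:00] [INFO] [loader.go:1139] [“start to create table”] [task=so_attachment] [unit=load]',
    '[2020/07/13 17:13:40.082 +08:00] [INFO] [subtask.go:204] [“start to run”] [subtask=so_attachment] [unit=Dump]',
]

starts = dump_starts(sample)
# Any subtask with more than one Dump start was dumped again.
restarted = {name: ts for name, ts in starts.items() if len(ts) > 1}
print(restarted)
```

On the sample lines this reports so_attachment with two Dump starts, at 17:08:44 and 17:13:40, which matches the second dump seen in the log. In practice you would pass the lines of the dm-worker log file instead of `sample`.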