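Hello. One of my TiKV nodes is currently in the offline (decommissioning) state and its regions are being migrated. The CPU has become a bottleneck, so I need to restart the servers to upgrade their hardware configuration, which means stopping the whole TiDB cluster. After the upgrade, once I start the TiDB cluster again, will the offline TiKV continue migrating its regions?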


While the TiKV node is offline and its regions are still being migrated, will upgrading TiDB from 3.0 to 4.0 cause any problems?

来了老弟

June 29, 2020, 02:09

4

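This is a major-version upgrade. To be safe, I suggest increasing the scheduling threads to speed up the TiKV decommissioning, and upgrading only after it has finished. TiDB currently does not support downgrading, so this avoids unnecessary problems.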

I have already adjusted the following parameters, but the decommissioning is still very slow.

来了老弟

June 29, 2020, 02:45

6

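First, check whether disk usage on the TiKV nodes has reached 80% of capacity. If it has, PD will not schedule replicas onto those nodes to make up the missing copies.

Please upload all panels under scheduler and operator in the PD section of the Grafana dashboards, so we can see how scheduling is going.

The PD dashboards also have a region health panel; please include that as well.

Please also post the output of pd-ctl store, so we can see how many regions and leaders remain on the offline node.

This information is to confirm whether operators are being generated normally, whether scheduling is running normally, and whether TiKV capacity is sufficient. Please use a time range of one day for the monitoring panels.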
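To make the pd-ctl store check easier to read, here is a minimal sketch that picks the offline stores out of the JSON that pd-ctl store prints and lists their remaining leader and region counts. It assumes the usual output layout (a `stores` list whose items carry `store.state_name` and `status.leader_count` / `status.region_count`); the sample data, addresses, and the function name `offline_stores` are made up for illustration and are not from this cluster.

```python
import json


def offline_stores(store_json: str):
    """Return (id, address, leader_count, region_count) for each Offline store.

    Assumes the JSON layout printed by `pd-ctl store`; counts that are
    missing from the output are treated as 0.
    """
    data = json.loads(store_json)
    rows = []
    for item in data.get("stores", []):
        store = item.get("store", {})
        status = item.get("status", {})
        if store.get("state_name") == "Offline":
            rows.append((
                store.get("id"),
                store.get("address"),
                status.get("leader_count", 0),
                status.get("region_count", 0),
            ))
    return rows


# Hypothetical sample, NOT real output from the cluster in this thread.
SAMPLE = """
{
  "count": 2,
  "stores": [
    {"store": {"id": 1, "address": "tikv-a:20160", "state_name": "Up"},
     "status": {"leader_count": 300, "region_count": 1000}},
    {"store": {"id": 11, "address": "tikv-b:20160", "state_name": "Offline"},
     "status": {"leader_count": 120, "region_count": 900}}
  ]
}
"""

for sid, addr, leaders, regions in offline_stores(SAMPLE):
    print(f"store {sid} ({addr}): {leaders} leaders, {regions} regions left")
```

Running this against snapshots of the output taken some time apart gives a rough idea of whether the counts are actually going down.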

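Could you give me an email address? I will create a Grafana account for you. The TiKV decommissioning has been running for 6 days and still has not finished.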


来了老弟

June 29, 2020, 03:30

10

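OK. The offline node currently still holds quite a lot of leaders. You can add an evict leader scheduler for that node to move the leaders off it and speed up scheduling. Please apply it and observe the result first.

When decommissioning a single node, a large share of the Regions to be processed (about 1/3 with a 3-replica configuration) have their Leaders on the node being decommissioned, so the decommissioning speed is limited by how fast that single node can generate Snapshots. You can speed this up by manually adding an evict-leader-scheduler for the node to move its Leaders away.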

scheduler add evict-leader-scheduler 11
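If you want to script this rather than type it in the interactive prompt, a minimal sketch along these lines builds the pd-ctl invocations for adding the scheduler, listing schedulers, and removing it later. The PD address and the `pd-ctl` binary path are assumptions (with an ansible deployment the binary is normally under the deployment's resources/bin directory), the helper name `evict_leader_commands` is hypothetical, and the removal name `evict-leader-scheduler-<store id>` is the 3.0-style naming, so check `scheduler show` before removing.

```python
def evict_leader_commands(pd_url, store_id, pd_ctl="pd-ctl"):
    """Build pd-ctl argument lists for one store's evict-leader scheduler.

    Nothing is executed here; the lists can be passed to subprocess.run()
    once the PD address and binary path have been verified.
    """
    base = [pd_ctl, "-u", pd_url]
    return {
        # Same command as above, in single-command mode.
        "add": base + ["scheduler", "add", "evict-leader-scheduler", str(store_id)],
        # Lists the schedulers currently registered in PD.
        "show": base + ["scheduler", "show"],
        # Assumed 3.0-style scheduler name: the store id is appended.
        "remove": base + ["scheduler", "remove", f"evict-leader-scheduler-{store_id}"],
    }


# Assumed PD endpoint; replace with one of your own PD servers.
commands = evict_leader_commands("http://127.0.0.1:2379", 11)
for step in ("add", "show", "remove"):
    print(step + ":", " ".join(commands[step]))
```

Only run the remove step after the store has finished decommissioning, since the scheduler is what keeps leaders off the node in the meantime.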

来了老弟

June 29, 2020, 04:02

12

Hello, here it is:

###########################################################

来了老弟

June 29, 2020, 06:51

16

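Hello,

I have received your email.

To clarify the problem: at the start of this thread store 11 was the offline node, but in the information you posted above store 10 appears as a newly added offline node.

Did you deploy with tiup? If so, please upload the display output so we can look at the cluster topology. Otherwise, please upload the inventory file and we will take a look.

It seems the output of this command has not been posted yet; please run it. If the cluster was deployed with ansible, you can use pd-ctl instead.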

Hello. Store 11 and store 10 are both being decommissioned. The cluster is version 3.0.2 and was deployed with ansible.

#######################################

## TiDB Cluster Part

[tidb_servers]

#172.31.35.127

#172.31.43.8

#172.31.42.205

172.31.42.102

#172.31.16.209

#172.31.11.114

172.31.41.225

172.31.38.159

[tikv_servers]

172.31.42.165

#172.31.38.144

172.31.42.249

172.31.43.197

172.31.2.241

172.31.26.184

172.31.46.18

172.31.38.13

[pd_servers]

172.31.35.127

172.31.43.8

172.31.42.205

[spark_master]

[spark_slaves]

[lightning_server]

[importer_server]

## Monitoring Part

# prometheus and pushgateway servers

[monitoring_servers]

172.31.35.127

[grafana_servers]

172.31.35.127

# node_exporter and blackbox_exporter servers

[monitored_servers]

172.31.42.165

#172.31.38.144

172.31.42.249

172.31.43.197

172.31.2.241

172.31.26.184

172.31.35.127

172.31.43.8

172.31.42.205

172.31.42.102

#172.31.16.209

#172.31.11.114

172.31.46.18

172.31.38.13

172.31.41.225

172.31.38.159

[alertmanager_servers]

172.31.35.127

来了老弟

June 29, 2020, 09:41

18

Hello,

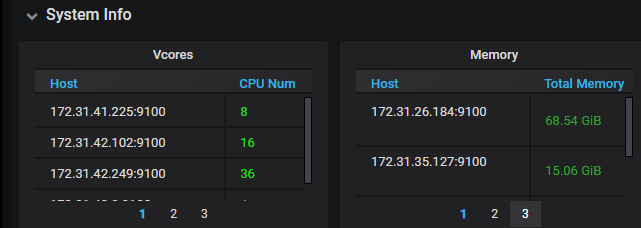

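The servers in this cluster do not have consistent resources, so scheduling speed will vary a lot from node to node. This looks like it may be a test cluster; to speed up the decommissioning, you will need to increase the scheduling threads.

Keep the evict-leader-scheduler in place and try raising the limits to see whether that speeds up the migration. In a production environment, decommissioning 2 TiKV nodes at the same time is not recommended.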
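As a rough sketch of what raising the limits could look like, the snippet below turns a set of PD scheduling limits into `pd-ctl config set` invocations. The keys are PD scheduling options; the numeric values, the PD address, and the helper name `limit_commands` are placeholders chosen for illustration, not recommendations for this cluster. Check the current values with `config show` first and raise them gradually while watching the load on the remaining TiKV nodes.

```python
# PD scheduling options this sketch is willing to touch.
ALLOWED_KEYS = (
    "replica-schedule-limit",
    "region-schedule-limit",
    "leader-schedule-limit",
    "max-pending-peer-count",
    "max-snapshot-count",
)


def limit_commands(limits, pd_url, pd_ctl="pd-ctl"):
    """Build one `pd-ctl config set <key> <value>` argument list per limit.

    Nothing is executed; unknown keys and non-positive values are rejected
    so that a typo does not turn into a bad setting.
    """
    commands = []
    for key, value in limits.items():
        if key not in ALLOWED_KEYS:
            raise ValueError(f"unexpected option: {key}")
        if not isinstance(value, int) or value <= 0:
            raise ValueError(f"{key} must be a positive integer")
        commands.append([pd_ctl, "-u", pd_url, "config", "set", key, str(value)])
    return commands


# Placeholder values and an assumed PD endpoint, for illustration only.
example_limits = {"replica-schedule-limit": 32, "max-snapshot-count": 16}
for argv in limit_commands(example_limits, "http://127.0.0.1:2379"):
    print(" ".join(argv))
```

Remember to set the limits back to their previous values once the decommissioning has finished.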