
- **TiDB version**: v3.1 beta

- **Problem description**:

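- Earlier, a power outage caused one TiKV instance to fail, and that TiKV's regions have already been migrated to other stores. For details, see https://asktug.com/t/topic/35012.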

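- Afterwards, I wiped the data on the node that hosted the damaged TiKV and added it back to the cluster as a new TiKV node. Region balancing onto this node has been very slow.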

The corresponding configuration:

    {
      "replication": {
        "location-labels": "",
        "max-replicas": 2,
        "strictly-match-label": "false"
      },
      "schedule": {
        "disable-location-replacement": "false",
        "disable-make-up-replica": "false",
        "disable-namespace-relocation": "false",
        "disable-raft-learner": "false",
        "disable-remove-down-replica": "false",
        "disable-remove-extra-replica": "false",
        "disable-replace-offline-replica": "false",
        "enable-one-way-merge": "false",
        "high-space-ratio": 0.6,
        "hot-region-cache-hits-threshold": 3,
        "hot-region-schedule-limit": 4,
        "leader-schedule-limit": 16,
        "low-space-ratio": 0.8,
        "max-merge-region-keys": 200000,
        "max-merge-region-size": 20,
        "max-pending-peer-count": 32,
        "max-snapshot-count": 32,
        "max-store-down-time": "30m0s",
        "merge-schedule-limit": 16,
        "patrol-region-interval": "100ms",
        "region-schedule-limit": 32,
        "replica-schedule-limit": 8,
        "scheduler-max-waiting-operator": 3,
        "schedulers-v2": [
          { "args": null, "disable": false, "type": "balance-region" },
          { "args": null, "disable": false, "type": "balance-leader" },
          { "args": null, "disable": false, "type": "hot-region" },
          { "args": null, "disable": false, "type": "label" },
          { "args": [ "1" ], "disable": false, "type": "evict-leader" },
          { "args": [ "10" ], "disable": false, "type": "evict-leader" },
          { "args": [ "127001" ], "disable": false, "type": "evict-leader" },
          { "args": [ "151120" ], "disable": false, "type": "evict-leader" },
          { "args": [ "4" ], "disable": false, "type": "evict-leader" }
        ],
        "split-merge-interval": "1h0m0s",
        "store-balance-rate": 15,
        "tolerant-size-ratio": 5
      }
    }
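The config above also contains five enabled `evict-leader` schedulers. As a quick way to list which store IDs they target, here is a minimal Python sketch that parses a trimmed copy of the `schedulers-v2` list. The helper name `evict_leader_stores` is hypothetical, and it assumes (as this output appears to show) that each evict-leader entry's `args` holds the target store ID. Since an evict-leader scheduler moves region leaders off its store, it may be worth confirming that these five are all still intended after the outage.

```python
import json
from typing import List

# Trimmed copy of the "schedulers-v2" list from the config above (other keys omitted).
CONFIG_EXCERPT = """
{"schedule": {"schedulers-v2": [
  {"args": null, "disable": false, "type": "balance-region"},
  {"args": null, "disable": false, "type": "balance-leader"},
  {"args": null, "disable": false, "type": "hot-region"},
  {"args": null, "disable": false, "type": "label"},
  {"args": ["1"], "disable": false, "type": "evict-leader"},
  {"args": ["10"], "disable": false, "type": "evict-leader"},
  {"args": ["127001"], "disable": false, "type": "evict-leader"},
  {"args": ["151120"], "disable": false, "type": "evict-leader"},
  {"args": ["4"], "disable": false, "type": "evict-leader"}
]}}
"""


def evict_leader_stores(config: dict) -> List[str]:
    """Hypothetical helper: store IDs targeted by enabled evict-leader schedulers.

    Assumes each evict-leader entry carries its store ID in "args".
    """
    schedulers = config.get("schedule", {}).get("schedulers-v2", [])
    return [
        store_id
        for s in schedulers
        if s.get("type") == "evict-leader" and not s.get("disable", False)
        for store_id in (s.get("args") or [])
    ]


cfg = json.loads(CONFIG_EXCERPT)
print(evict_leader_stores(cfg))  # prints ['1', '10', '127001', '151120', '4']
```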

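- At first, following the support staff's advice, I raised the replica count from 2 to 3, but the current disk space turned out to be insufficient for that and the process was taking too long, so I used pd-ctl again to set the replica count back to 2.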
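For context on the 2 versus 3 replica choice: TiKV replicates each region with Raft, and a region can only elect a leader and commit writes while a majority of its replicas are available. The sketch below only does that majority arithmetic (the function names are illustrative, not part of pd-ctl or any TiDB tool). It shows that with `max-replicas` set to 2 the majority is still 2, so a region cannot tolerate losing even one replica; whether this explains the errors here is an assumption, not something confirmed in this thread.

```python
def raft_quorum(replicas: int) -> int:
    """Smallest majority of `replicas` voters (standard Raft majority rule)."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """Replicas that can be lost while a majority is still reachable."""
    return replicas - raft_quorum(replicas)


for n in (2, 3):
    print(f"max-replicas={n}: quorum={raft_quorum(n)}, "
          f"tolerates {tolerated_failures(n)} lost replica(s)")
# max-replicas=2: quorum=2, tolerates 0 lost replica(s)
# max-replicas=3: quorum=2, tolerates 1 lost replica(s)
```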

-

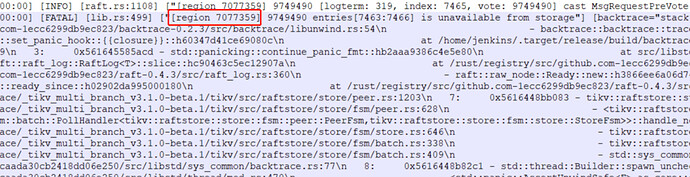

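Balancing has been running for more than two days, and the store size of each TiKV has essentially stabilized. However, `region is unavailable` errors still occur frequently when the cluster is in use.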

The newly added TiKV node is 10.12.5.233. Its monitoring data is attached below:

screencapture-10-12-5-232-3000-d-eDbRZpnWk-test-cluster-overview-2020-06-07-12_47_32.pdf (592.3 KB)

Output of `info_gathering.py`:

script_info.txt (5.4 KB)