I scaled out cdc by following the official documentation:

https://pingcap.com/docs-cn/stable/scale-tidb-using-tiup/
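The post does not include `scale-out.yaml`. For reference, a minimal cdc scale-out topology in the style of the linked TiUP page might look like the sketch below. The host and port are taken from the confirmation table in the log. Everything else, including leaving `deploy_dir`/`data_dir` to TiUP's defaults, is an assumption, and the real file may differ (the log shows a relative `deploy/cdc-8300` directory, for example):

```shell
#!/usr/bin/env bash
# Assumed minimal scale-out topology: one cdc node on 10.186.61.2:8300.
# deploy_dir/data_dir are intentionally omitted (assumption: TiUP's defaults apply).
cat > scale-out.yaml <<'EOF'
cdc_servers:
  - host: 10.186.61.2
    port: 8300
EOF

# Then, as in the log below:
#   tiup cluster scale-out tidb-test ./scale-out.yaml
```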

The error output is as follows:

```
[root@tidb ~]# tiup cluster scale-out tidb-test ./scale-out.yaml
Starting component cluster: /root/.tiup/components/cluster/v0.6.2/cluster scale-out tidb-test ./scale-out.yaml
Please confirm your topology:
TiDB Cluster: tidb-test
TiDB Version: v4.0.0-rc.2
Type  Host         Ports  OS/Arch       Directories
cdc   10.186.61.2  8300   linux/x86_64  deploy/cdc-8300
Attention:
    1. If the topology is not what you expected, check your yaml file.
    2. Please confirm there is no port/directory conflicts in same host.
Do you want to continue? [y/N]: y
[ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub
- Download node_exporter:v0.17.0 (linux/amd64) … Done
[ Serial ] - RootSSH: user=root, host=10.186.61.2, port=22, key=/root/.ssh/id_rsa
[ Serial ] - EnvInit: user=tidb, host=10.186.61.2
[ Serial ] - Mkdir: host=10.186.61.2, directories='/tidb-deploy','/tidb-data'
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.37
[Parallel] - UserSSH: user=tidb, host=10.186.61.39
[Parallel] - UserSSH: user=tidb, host=10.186.61.37
[Parallel] - UserSSH: user=tidb, host=10.186.61.42
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.51
[Parallel] - UserSSH: user=tidb, host=10.186.61.37
[ Serial ] - UserSSH: user=tidb, host=10.186.61.2
[ Serial ] - Mkdir: host=10.186.61.2, directories='/home/tidb/deploy/cdc-8300','/home/tidb/deploy/cdc-8300/log','/home/tidb/deploy/cdc-8300/bin','/home/tidb/
- Copy node_exporter → 10.186.61.2 … ⠹ CopyComponent: component=node_exporter, version=v0.17.0, remote=10.186.61.2:/tidb-deploy/monitor-9100 os=linux, …
- Copy node_exporter → 10.186.61.2 … Done
[ Serial ] - ScaleConfig: cluster=tidb-test, user=tidb, host=10.186.61.2, service=cdc-8300.service, deploy_dir=/home/tidb/deploy/cdc-8300, data_dir=[/home/tidb], log_dir=/home/tidb/deploy/cdc-8300/log, cache_dir=
[ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false SSHTimeout:0 OptTimeout:60 APITimeout:0}
Starting component pd
Starting instance pd 10.186.61.51:2379
Start pd 10.186.61.51:2379 success
Starting component node_exporter
Starting instance 10.186.61.51
Start 10.186.61.51 success
Starting component blackbox_exporter
Starting instance 10.186.61.51
Start 10.186.61.51 success
Starting component tikv
Starting instance tikv 10.186.61.42:20160
Starting instance tikv 10.186.61.37:20160
Starting instance tikv 10.186.61.39:20160
Start tikv 10.186.61.42:20160 success
Start tikv 10.186.61.37:20160 success
Start tikv 10.186.61.39:20160 success
Starting component node_exporter
Starting instance 10.186.61.37
Start 10.186.61.37 success
Starting component blackbox_exporter
Starting instance 10.186.61.37
Start 10.186.61.37 success
Starting component node_exporter
Starting instance 10.186.61.39
Start 10.186.61.39 success
Starting component blackbox_exporter
Starting instance 10.186.61.39
Start 10.186.61.39 success
Starting component node_exporter
Starting instance 10.186.61.42
Start 10.186.61.42 success
Starting component blackbox_exporter
Starting instance 10.186.61.42
Start 10.186.61.42 success
Starting component tidb
Starting instance tidb 10.186.61.51:4000
Start tidb 10.186.61.51:4000 success
Starting component tiflash
Starting instance tiflash 10.186.61.51:9000
Start tiflash 10.186.61.51:9000 success
Starting component cdc
Starting instance cdc 10.186.61.37:11111
Starting instance cdc 10.186.61.37:8300
Start cdc 10.186.61.37:11111 success
Start cdc 10.186.61.37:8300 success
Starting component prometheus
Starting instance prometheus 10.186.61.51:9090
Start prometheus 10.186.61.51:9090 success
Starting component grafana
Starting instance grafana 10.186.61.51:3000
Start grafana 10.186.61.51:3000 success
Starting component alertmanager
Starting instance alertmanager 10.186.61.51:9093
Start alertmanager 10.186.61.51:9093 success
Checking service state of pd
10.186.61.51 Active: active (running) since Mon 2020-05-25 02:40:56 UTC; 1 weeks 4 days ago
Checking service state of tikv
10.186.61.37 Active: active (running) since Mon 2020-05-25 02:40:48 UTC; 1 weeks 4 days ago
10.186.61.42 Active: active (running) since Mon 2020-05-25 02:40:54 UTC; 1 weeks 4 days ago
10.186.61.39 Active: active (running) since Mon 2020-05-25 02:40:50 UTC; 1 weeks 4 days ago
Checking service state of tidb
10.186.61.51 Active: active (running) since Mon 2020-05-25 02:40:56 UTC; 1 weeks 4 days ago
Checking service state of tiflash
10.186.61.51 Active: active (running) since Tue 2020-05-26 09:32:58 UTC; 1 weeks 2 days ago
Checking service state of cdc
10.186.61.37 Active: activating (auto-restart) (Result: exit-code) since Fri 2020-06-05 03:02:07 UTC; 1s ago
10.186.61.37 Active: activating (auto-restart) (Result: exit-code) since Fri 2020-06-05 03:02:07 UTC; 1s ago
Checking service state of prometheus
10.186.61.51 Active: active (running) since Mon 2020-05-25 02:40:56 UTC; 1 weeks 4 days ago
Checking service state of grafana
10.186.61.51 Active: active (running) since Mon 2020-05-25 02:40:56 UTC; 1 weeks 4 days ago
Checking service state of alertmanager
10.186.61.51 Active: active (running) since Mon 2020-05-25 02:40:56 UTC; 1 weeks 4 days ago
[Parallel] - UserSSH: user=tidb, host=10.186.61.2
[ Serial ] - save meta
[ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false SSHTimeout:0 OptTimeout:60 APITimeout:0}
Starting component cdc
Starting instance cdc 10.186.61.2:8300
retry error: operation timed out after 1m0s
cdc 10.186.61.2:8300 failed to start: timed out waiting for port 8300 to be started after 1m0s, please check the log of the instance
Error: failed to start: failed to start cdc: cdc 10.186.61.2:8300 failed to start: timed out waiting for port 8300 to be started after 1m0s, please check the log of the instance: timed out waiting for port 8300 to be started after 1m0s
Verbose debug logs has been written to /root/logs/tiup-cluster-debug-2020-06-05-03-03-10.log.
Error: run /root/.tiup/components/cluster/v0.6.2/cluster (wd:/root/.tiup/data/S10E86d) failed: exit status 1
```

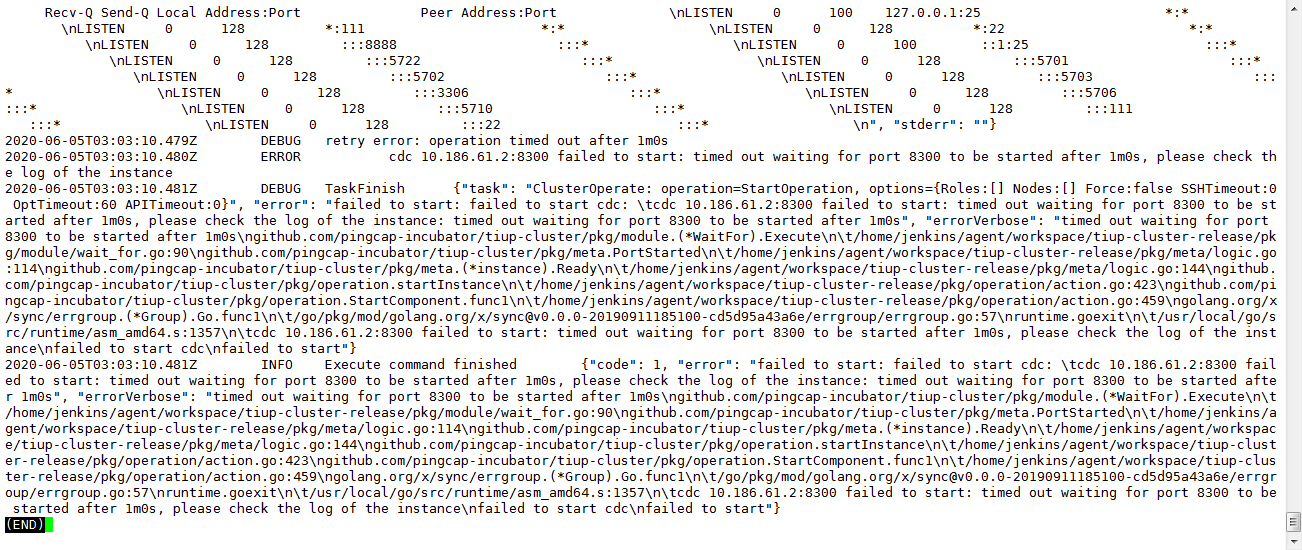

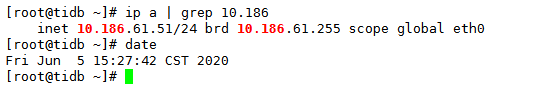

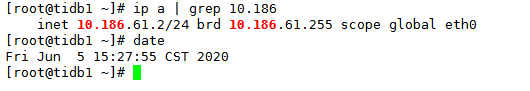

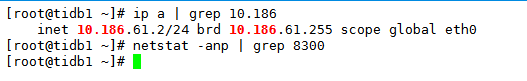

Port usage is as follows:

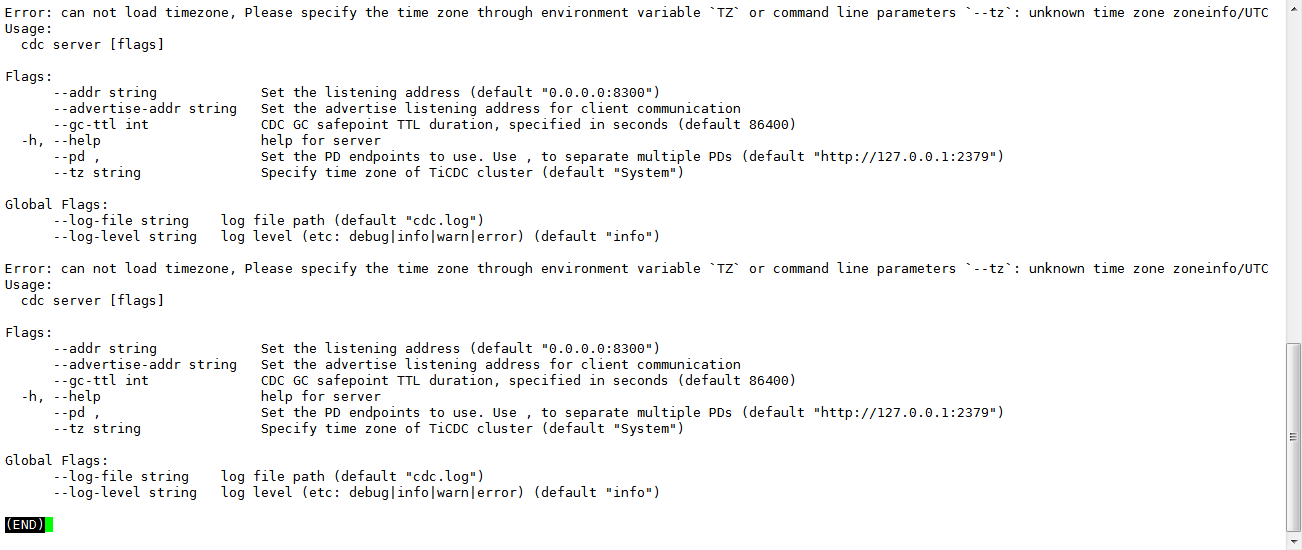
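The failure itself is TiUP giving up after 1m0s because nothing accepted connections on 10.186.61.2:8300. To repeat a similar check by hand while debugging, here is a minimal bash sketch, assuming bash's `/dev/tcp` redirection and coreutils `timeout` are available. `wait_for_port` is a hypothetical helper, not part of TiUP, and only approximates TiUP's own check:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not TiUP code): poll HOST:PORT about once per second until a
# TCP connect succeeds or TIMEOUT_S attempts have been made.
# Returns 0 if the port accepted a connection, 1 otherwise.
wait_for_port() {
  local host=$1 port=$2 timeout_s=${3:-60}
  local i
  for ((i = 0; i < timeout_s; i++)); do
    # bash's /dev/tcp pseudo-path opens a TCP connection; `timeout 1` bounds each attempt.
    if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, `wait_for_port 10.186.61.2 8300 60 && echo up || echo 'still not listening'`. On 10.186.61.2 itself, `ss -lntp | grep ':8300'` (run as root) shows which process, if any, is already listening on the port.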

The log contents are as follows: