起初,因为断电或者关机后,ansible-playbook start.yml 启动不了Tidb,卡在waiting for Tikv

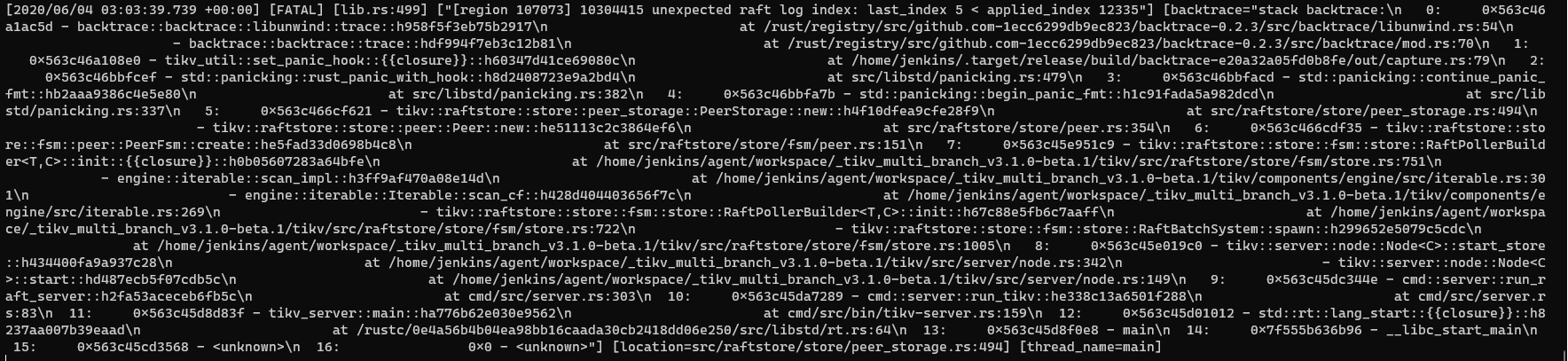

Port Up。tikv.log如下图

`["[region 107073] 10304415 unexpected raft log index: last_index 5 < applied_index 12335"]`
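The error says that for region 107073 the last Raft log index (5) is smaller than the applied index (12335). To pull these numbers out of tikv.log when many regions report the same error, a minimal parsing sketch could look like this. It assumes the line layout shown above; treating the second number as the peer ID is an assumption, and other TiKV versions may format the message differently.

```python
import re

# Pattern modeled on the single tikv.log line quoted above (assumed layout).
RAFT_INDEX_RE = re.compile(
    r"\[region (?P<region>\d+)\] (?P<peer>\d+) unexpected raft log index: "
    r"last_index (?P<last>\d+) < applied_index (?P<applied>\d+)"
)


def parse_raft_index_error(line):
    """Return (region_id, peer_id, last_index, applied_index), or None if no match.

    peer_id is an assumed interpretation of the second number in the tag.
    """
    m = RAFT_INDEX_RE.search(line)
    if m is None:
        return None
    return (int(m["region"]), int(m["peer"]), int(m["last"]), int(m["applied"]))


line = '["[region 107073] 10304415 unexpected raft log index: last_index 5 < applied_index 12335"]'
print(parse_raft_index_error(line))
```

Running it over every line of tikv.log gives the list of affected region IDs.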

Next, following a blog post's advice, I used pd-ctl to delete the failed TiKV node. That still didn't help: startup now hangs at "waiting for TiDB port up".

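Then I found in the official documentation that a Region can be forced to recover service from a multi-replica failure. The `unsafe-recover remove-fail-stores` command removes the failed machines from the peer list of the specified Regions: `tikv-ctl --db /path/to/tikv/db unsafe-recover remove-fail-stores -s 4,5 --all-regions`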
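The command takes a local data directory (`--db`) and a comma-separated list of failed store IDs (`-s`). If the same command has to be prepared for several TiKV hosts, a small sketch that only assembles the argument list (it does not run anything) might look like this. The host names and per-host paths are hypothetical placeholders; only the flags come from the command quoted above.

```python
def build_remove_fail_stores_cmd(db_path, failed_store_ids):
    """Assemble argv for the tikv-ctl command quoted above (not executed here).

    db_path: TiKV data directory on the host where the command would run.
    failed_store_ids: IDs of the failed stores (4 and 5 in the example).
    """
    if not failed_store_ids:
        raise ValueError("at least one failed store ID is required")
    stores = ",".join(str(s) for s in failed_store_ids)
    return [
        "tikv-ctl", "--db", db_path,
        "unsafe-recover", "remove-fail-stores",
        "-s", stores, "--all-regions",
    ]


# Hypothetical mapping of TiKV hosts to their data directories.
hosts = {"tikv-host-a": "/path/to/tikv/db", "tikv-host-b": "/path/to/tikv/db"}
for host, db in hosts.items():
    print(host, " ".join(build_remove_fail_stores_cmd(db, [4, 5])))
```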

One more question: what does "run this command on all healthy stores" mean?

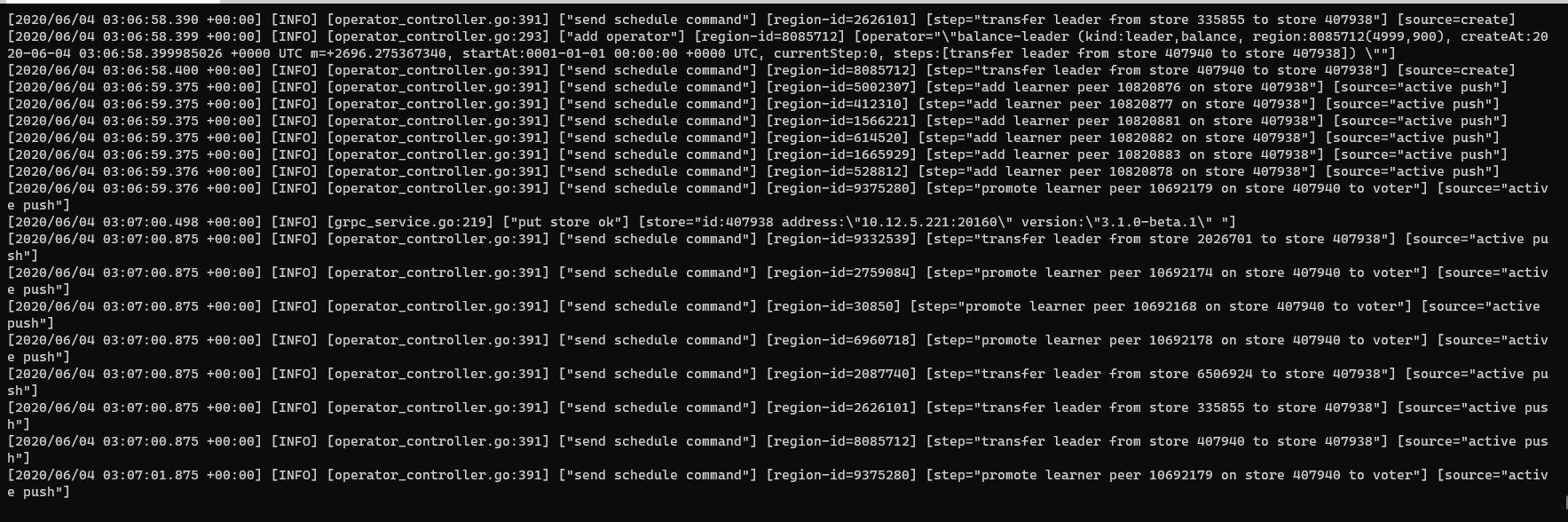

Isn't tikv-ctl only available on the control machine? I ran it anyway, and it still doesn't work. The current tidb.log shows:

`[2020/06/04 10:55:21.311 +08:00] [INFO] [region_cache.go:564] ["switch region peer to next due to NotLeader with NULL leader"] [currIdx=1] [regionID=10597951]`

`[2020/06/04 10:55:21.440 +08:00] [INFO] [region_cache.go:324] ["invalidate current region, because others failed on same store"] [region=10597951] [store=10.12.5.222:20160]`

Could someone take a look and help me figure out what the problem is?