升级到4.0 rc2 之后 ,同样的查询,报错了:

1105 - open /tmp/1000_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage/chunk.ListInDisk566770649: no such file or directory

升级到4.0 rc2 之后 ,同样的查询,报错了:

1105 - open /tmp/1000_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage/chunk.ListInDisk566770649: no such file or directory

碰到同样的问题:

1105 - open /tmp/1000_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage/chunk.ListInDisk909715224: no such file or directory, Time: 56.651000s

相关的 tidb.log tidb.log.zip (2.2 MB)

对了,我这边的 TiDB 版本是 5.7.25-TiDB-v4.0.0。

另外,tidb_stderr.log 里面应该没有相关的日志,因为它最后一条日志是 6月28日(两天前)。

对了,执行的是这个查询,

SELECT

user_sid,gjj_sid,apply_id,product_cid,apply_time,confirm_time,orderId

,inserttimespan,lc_countrate,lc_3countrate

,lc_6countrate,call_3time_15srate,top10_call_count

,top10_call_3count,top10_call_6time,call_6time,call_count,call_3count,call_type_countrate

,call_type_6countrate,total_3fee,now()

FROM

(

SELECT

a.apply_id

,a.user_sid

,a.gjj_sid

,a.product_cid

,a.apply_time

,a.confirm_time

,case when a.confirm_time > 0 then TIMESTAMPDIFF(minute,b.inserttimespan,a.confirm_time)

else TIMESTAMPDIFF(minute,b.inserttimespan,a.apply_time)end time_diff

,b.*

FROM dwd.dwd_gjj_apply_info a

LEFT JOIN hidden.hidden_mongo_tel_analysis_apply b on a.yys_order_sn = b.orderId

left join hidden.hidden_tel_analysis_apply c on a.apply_id=c.apply_id

WHERE b.orderId is not null and c.apply_id is null and a.confirm_time> 0

ORDER BY a.apply_id,abs(time_diff)

)a

GROUP BY apply_id;

其中 hidden.hidden_mongo_tel_analysis_apply 是一个视图,它的底表是有 json 字段的。

这是相应的执行计划,

"Projection_18" "2677251.24" "root" "dwd.dwd_gjj_apply_info.user_sid, dwd.dwd_gjj_apply_info.gjj_sid, dwd.dwd_gjj_apply_info.apply_id, dwd.dwd_gjj_apply_info.product_cid, dwd.dwd_gjj_apply_info.apply_time, dwd.dwd_gjj_apply_info.confirm_time, Column#52, Column#53, Column#54, Column#55, Column#56, Column#57, Column#58, Column#59, Column#60, Column#61, Column#62, Column#63, Column#64, Column#65, Column#66, 2020-06-30 16:22:04->Column#90"

"└─Projection_112" "2677251.24" "root" "dwd.dwd_gjj_apply_info.apply_id, dwd.dwd_gjj_apply_info.user_sid, dwd.dwd_gjj_apply_info.gjj_sid, dwd.dwd_gjj_apply_info.product_cid, dwd.dwd_gjj_apply_info.apply_time, dwd.dwd_gjj_apply_info.confirm_time, Column#89, Column#52, Column#53, Column#54, Column#55, Column#56, Column#57, Column#58, Column#59, Column#60, Column#61, Column#62, Column#63, Column#64, Column#65, Column#66"

" └─Sort_19" "2677251.24" "root" "dwd.dwd_gjj_apply_info.apply_id:asc, Column#148:asc"

" └─Projection_113" "2677251.24" "root" "dwd.dwd_gjj_apply_info.apply_id, dwd.dwd_gjj_apply_info.user_sid, dwd.dwd_gjj_apply_info.gjj_sid, dwd.dwd_gjj_apply_info.product_cid, dwd.dwd_gjj_apply_info.apply_time, dwd.dwd_gjj_apply_info.confirm_time, Column#89, Column#52, Column#53, Column#54, Column#55, Column#56, Column#57, Column#58, Column#59, Column#60, Column#61, Column#62, Column#63, Column#64, Column#65, Column#66, abs(Column#89)->Column#148"

" └─Projection_20" "2677251.24" "root" "dwd.dwd_gjj_apply_info.apply_id, dwd.dwd_gjj_apply_info.user_sid, dwd.dwd_gjj_apply_info.gjj_sid, dwd.dwd_gjj_apply_info.product_cid, dwd.dwd_gjj_apply_info.apply_time, dwd.dwd_gjj_apply_info.confirm_time, case(gt(dwd.dwd_gjj_apply_info.confirm_time, 0000-00-00 00:00:00), timestampdiff(MINUTE, Column#53, dwd.dwd_gjj_apply_info.confirm_time), timestampdiff(MINUTE, Column#53, dwd.dwd_gjj_apply_info.apply_time))->Column#89, Column#52, Column#53, Column#54, Column#55, Column#56, Column#57, Column#58, Column#59, Column#60, Column#61, Column#62, Column#63, Column#64, Column#65, Column#66"

" └─Selection_21" "2677251.24" "root" "isnull(hidden.hidden_tel_analysis_apply.apply_id)"

" └─HashJoin_32" "3346564.05" "root" "left outer join, equal:[eq(dwd.dwd_gjj_apply_info.apply_id, hidden.hidden_tel_analysis_apply.apply_id)]"

" ├─IndexReader_107(Build)" "3922787.00" "root" "index:IndexFullScan_106"

" │ └─IndexFullScan_106" "3922787.00" "cop[tikv]" "table:c, index:idx_orderid(user_sid)" "keep order:false"

" └─HashJoin_50(Probe)" "3346564.05" "root" "inner join, equal:[eq(dwd.dwd_gjj_apply_info.yys_order_sn, Column#52)]"

" ├─TableReader_99(Build)" "3269229.55" "root" "data:Selection_98"

" │ └─Selection_98" "3269229.55" "cop[tikv]" "gt(dwd.dwd_gjj_apply_info.confirm_time, 0000-00-00 00:00:00), not(isnull(dwd.dwd_gjj_apply_info.yys_order_sn))"

" │ └─TableFullScan_97" "10762000.00" "cop[tikv]" "table:a" "keep order:false"

" └─Projection_51(Probe)" "1446548.74" "root" "mongo.mongo_mobile_report_data_recent_analysis.orderid, date_sub(str_to_date(json_unquote(cast(json_extract(mongo.mongo_mobile_datas.thedata, $.create_date), var_string(16777216))), %Y-%m-%d %h:%i:%s), 8, HOUR)->Column#53, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.lc_countrate), var_string(16777216)))->Column#54, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.lc_3countrate), var_string(16777216)))->Column#55, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.lc_6countrate), var_string(16777216)))->Column#56, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_3time_15srate), var_string(16777216)))->Column#57, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.top10_call_count), var_string(16777216)))->Column#58, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.top10_call_3count), var_string(16777216)))->Column#59, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.top10_call_6time), var_string(16777216)))->Column#60, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_6time), var_string(16777216)))->Column#61, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_count), var_string(16777216)))->Column#62, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_3count), var_string(16777216)))->Column#63, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_type_countrate), var_string(16777216)))->Column#64, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.call_type_6countrate), var_string(16777216)))->Column#65, json_unquote(cast(json_extract(mongo.mongo_mobile_report_data_recent_analysis.thedata, $.total_3fee), var_string(16777216)))->Column#66"

" └─IndexMergeJoin_62" "1446548.74" "root" "inner join, inner:Projection_60, outer key:mongo.mongo_mobile_report_data_recent_analysis.orderid, inner key:mongo.mongo_mobile_datas.orderid"

" ├─TableReader_91(Build)" "1433986.00" "root" "data:Selection_90"

" │ └─Selection_90" "1433986.00" "cop[tikv]" "not(isnull(mongo.mongo_mobile_report_data_recent_analysis.orderid))"

" │ └─TableFullScan_89" "1433986.00" "cop[tikv]" "table:a" "keep order:false"

" └─Projection_60(Probe)" "1.00" "root" "mongo.mongo_mobile_datas.orderid, mongo.mongo_mobile_datas.thedata"

" └─IndexLookUp_59" "1.00" "root"

" ├─IndexRangeScan_57(Build)" "1.00" "cop[tikv]" "table:d, index:PRIMARY(orderid)" "range: decided by [eq(mongo.mongo_mobile_datas.orderid, mongo.mongo_mobile_report_data_recent_analysis.orderid)], keep order:true"

" └─TableRowIDScan_58(Probe)" "1.00" "cop[tikv]" "table:d" "keep order:false"

我也遇到了,而且还遇到查询时间长了出现TiKV server timeout ,感觉4.0问题蛮大

是否方便反馈一下上面的信息,方便查询?

查询的执行计划:

Projection_16 10545732947.55 root div(test.stock.open, test.stock.close)->Column#41, div(test.stock.high, test.stock.close)->Column#42, div(test.stock.low, test.stock.close)->Column#43, div(test.stock.close, test.stock.close)->Column#44, div(test.stock.volume, test.stock.volume)->Column#45, div(test.stock.amount, test.stock.amount)->Column#46, test.stock.p_change, div(test.stock.open, test.stock.close)->Column#47, div(test.stock.high, test.stock.close)->Column#48, div(test.stock.low, test.stock.close)->Column#49, cast(plus(div(test.stock.p_change, 3), 4), bigint(22) UNSIGNED BINARY)->Column#50, test.stock.date, test.stock.p_change └─HashJoin_34 10545732947.55 root inner join, equal:[eq(test.days.z_day, test.stock.date) eq(test.stock.code, test.stock.code)] ├─HashJoin_140(Build) 9167042.52 root inner join, equal:[eq(test.days.m_day, test.stock.date) eq(test.stock.code, test.stock.code)] │ ├─HashJoin_201(Build) 7968.59 root inner join, equal:[eq(test.days.n_day, test.stock.date)] │ │ ├─IndexLookUp_218(Build) 6.93 root │ │ │ ├─IndexRangeScan_215(Build) 6.93 cop[tikv] table:d, index:day_index(n_day) range:(2020-01-01,2020-01-09), keep order:false │ │ │ └─Selection_217(Probe) 6.93 cop[tikv] not(isnull(test.days.m_day)), not(isnull(test.days.z_day)) │ │ │ └─TableRowIDScan_216 6.93 cop[tikv] table:d keep order:false │ │ └─IndexLookUp_240(Probe) 10976.92 root │ │ ├─IndexRangeScan_237(Build) 10976.92 cop[tikv] table:d1, index:index_date(date) range:(2020-01-01,2020-01-09), keep order:false │ │ └─Selection_239(Probe) 10976.92 cop[tikv] not(isnull(test.stock.code)) │ │ └─TableRowIDScan_238 10976.92 cop[tikv] table:d1 keep order:false │ └─TableReader_246(Probe) 10288358.00 root data:Selection_245 │ └─Selection_245 10288358.00 cop[tiflash] not(isnull(test.stock.code)), not(isnull(test.stock.date)) │ └─TableFullScan_244 10288358.00 cop[tiflash] table:d2 keep order:false └─TableReader_260(Probe) 10288358.00 root data:Selection_259 └─Selection_259 10288358.00 cop[tiflash] not(isnull(test.stock.code)), not(isnull(test.stock.date)) └─TableFullScan_258 10288358.00 cop[tiflash] table:d3 keep order:false

tidb日志:>

[2020/07/08 11:17:09.085 +08:00] [INFO] [region_cache.go:533] [“mark store’s regions need be refill”] [store=192.168.0.230:3930]

[2020/07/08 11:17:09.085 +08:00] [INFO] [region_cache.go:552] [“switch region peer to next due to send request fail”] [conn=13534] [current=“region ID: 72969, meta: id:72969 start_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\201\327\244" end_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\211h\252" region_epoch:<conf_ver:6 version:3566 > peers:<id:72970 store_id:1 > peers:<id:72971 store_id:4 > peers:<id:72972 store_id:7 > peers:<id:81113 store_id:81081 is_learner:true > , peer: id:81113 store_id:81081 is_learner:true , addr: 192.168.0.230:3930, idx: 3”] [needReload=false] [error=“context deadline exceeded”]

[2020/07/08 11:17:10.980 +08:00] [INFO] [region_cache.go:414] [“invalidate current region, because others failed on same store”] [region=72969] [store=192.168.0.230:3930]

[2020/07/08 11:17:10.981 +08:00] [INFO] [coprocessor.go:871] [“[TIME_COP_PROCESS] resp_time:9.823120844s txnStartTS:417904127911657479 region_id:72969 store_addr:192.168.0.230:3930 backoff_ms:41637 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]”] [conn=13534]

[2020/07/08 11:17:10.981 +08:00] [WARN] [backoff.go:319] [“regionMiss backoffer.maxSleep 40000ms is exceeded, errors:

region_id:72969, region_ver:3566, store_type:tiflash, peer_addr:192.168.0.230:3930, error:epoch_not_match:<> at 2020-07-08T11:17:00.657322631+08:00

send tikv request error: context deadline exceeded, ctx: region ID: 72969, meta: id:72969 start_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\201\327\244" end_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\211h\252" region_epoch:<conf_ver:6 version:3566 > peers:<id:72970 store_id:1 > peers:<id:72971 store_id:4 > peers:<id:72972 store_id:7 > peers:<id:81113 store_id:81081 is_learner:true > , peer: id:81113 store_id:81081 is_learner:true , addr: 192.168.0.230:3930, idx: 3, try next peer later at 2020-07-08T11:17:09.085724095+08:00

region_id:72969, region_ver:3566, store_type:tiflash, peer_addr:192.168.0.230:3930, error:epoch_not_match:<> at 2020-07-08T11:17:10.981104026+08:00”]

[2020/07/08 11:17:14.085 +08:00] [WARN] [client_batch.go:223] [“init create streaming fail”] [target=192.168.0.230:3930] [error=“context deadline exceeded”]

[2020/07/08 11:17:14.085 +08:00] [INFO] [region_cache.go:1523] [“[liveness] request kv status fail”] [store=192.168.0.230:20292] [error=“Get http://192.168.0.230:20292/status: dial tcp 192.168.0.230:20292: connect: no route to host”]

[2020/07/08 11:17:14.085 +08:00] [INFO] [region_cache.go:552] [“switch region peer to next due to send request fail”] [conn=13534] [current=“region ID: 72965, meta: id:72965 start_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000zF\236" end_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\201\327\244" region_epoch:<conf_ver:6 version:3565 > peers:<id:72966 store_id:1 > peers:<id:72967 store_id:4 > peers:<id:72968 store_id:7 > peers:<id:81112 store_id:81081 is_learner:true > , peer: id:81112 store_id:81081 is_learner:true , addr: 192.168.0.230:3930, idx: 3”] [needReload=false] [error=“context deadline exceeded”]

[2020/07/08 11:17:15.482 +08:00] [INFO] [region_cache.go:414] [“invalidate current region, because others failed on same store”] [region=72965] [store=192.168.0.230:3930]

[2020/07/08 11:17:15.482 +08:00] [INFO] [coprocessor.go:871] [“[TIME_COP_PROCESS] resp_time:9.551379169s txnStartTS:417904127911657479 region_id:72965 store_addr:192.168.0.230:3930 backoff_ms:40506 backoff_types:[regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC,regionMiss,tikvRPC]”] [conn=13534]

[2020/07/08 11:17:15.482 +08:00] [WARN] [backoff.go:319] [“regionMiss backoffer.maxSleep 40000ms is exceeded, errors:

region_id:72965, region_ver:3565, store_type:tiflash, peer_addr:192.168.0.230:3930, error:epoch_not_match:<> at 2020-07-08T11:17:05.429825937+08:00

send tikv request error: context deadline exceeded, ctx: region ID: 72965, meta: id:72965 start_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000zF\236" end_key:"t\200\000\000\000\000\000#\377_r\200\000\000\000\000\201\327\244" region_epoch:<conf_ver:6 version:3565 > peers:<id:72966 store_id:1 > peers:<id:72967 store_id:4 > peers:<id:72968 store_id:7 > peers:<id:81112 store_id:81081 is_learner:true > , peer: id:81112 store_id:81081 is_learner:true , addr: 192.168.0.230:3930, idx: 3, try next peer later at 2020-07-08T11:17:14.086006556+08:00

region_id:72965, region_ver:3565, store_type:tiflash, peer_addr:192.168.0.230:3930, error:epoch_not_match:<> at 2020-07-08T11:17:15.482461952+08:00”]

[2020/07/08 11:17:15.483 +08:00] [ERROR] [conn.go:728] [“command dispatched failed”] [conn=13534] [connInfo=“id:13534, addr:192.168.0.250:55748 status:10, collation:utf8_general_ci, user:root”] [command=Query] [status=“inTxn:0, autocommit:1”] [sql=“select d1.open/d3.close,d1.high/d3.close,d1.low/d3.close,d1.close/d3.close,d1.volume/d3.volume,d1.amount/d3.amount,\r

d3.p_change,d3.open/d3.close,d3.high/d3.close,d3.low/d3.close, cast(d2.p_change/3+4 as UNSIGNED)\r

,d1.date,d2.p_change\r

from stock d1\r

left join days d on d1.date=d.n_day\r

inner join stock d2 on d2.date=d.m_day and d2.code=d1.code\r

inner join stock d3 on d3.date=d.z_day and d3.code=d1.code\r

\t\t\t\t\t\twhere d1.date>‘2020-01-01’ and d1.date<‘2020-01-09’”] [txn_mode=OPTIMISTIC] [err=“[tikv:9002]TiKV server timeout

github.com/pingcap/errors.AddStack

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20190809092503-95897b64e011/errors.go:174

github.com/pingcap/errors.Trace

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20190809092503-95897b64e011/juju_adaptor.go:15

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleCopResponse

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:934

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:758

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:672

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run

\t/home/jenkins/agent/workspace/tidb_v4.0.0-rc.2/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:494

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357”]

感谢,已经在查看问题了,有进展会尽快答复,多谢。

好的,辛苦了~

hi, 是每次必出现还是偶发的?

hi,麻烦确认一下

两个问题都是在执行我发的SQL必然触发的

1,目录是存在的

2,稳定出现这个问题

3,我没有调过tidb_mem_quota_query参数,不确定是不是这个造成的,但是我查询的表的确有点大,大概900w的数据表

好的,我们确认一下问题,有进度及时同步,感谢理解

感谢你的回复 目前还有几个问题需要确认

1:版本为:5.7.25-TiDB-v4.0.0-rc.2,默认的确是1G

2:目前只有一个tidb节点,三个tikv和一个tiflash

3:

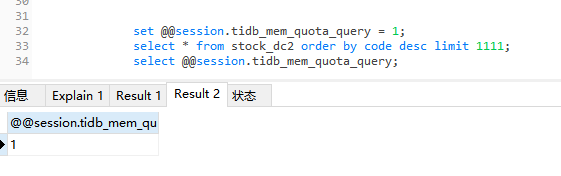

4:我改一下看看