As the title says:

tiup 4.0.0-rc2

Scaling out TiDB fails with a timeout, and `start` reports the same error:

tidb 172.7.160.15:4000 failed to start: timed out waiting for port 4000 to be started after 1m0s, please check the log of the instance

Error: failed to start: failed to start tidb: tidb 172.7.160.15:4000 failed to start: timed out waiting for port 4000 to be started after 1m0s, please check the log of the instance: timed out waiting for port 4000 to be started after 1m0s
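The message means tiup kept checking whether TiDB was listening on port 4000 and gave up after 60 seconds; the real cause has to come from the instance log. As a rough illustration of that kind of wait loop, here is a minimal Python sketch that polls a TCP port from the machine running it. Treating a plain TCP connect as equivalent to tiup's check is an assumption; tiup may perform the check differently (for example on the target host over SSH).

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as something accepts connections, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means a process is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable or timed out: treat as "not started yet".
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_port("172.7.160.15", 4000, timeout=5)` would keep returning `False` for as long as the TiDB process on that host fails to come up.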

yilong

May 28, 2020 09:18


Hello, please share the debug log of the failed operation and the TiDB log. Thanks.

debug.log (104.0 KB) tidb.log (27.5 KB)

Here is the error log from one of the failures.

yilong

May 28, 2020 12:06


Looking at tidb.log:

[2020/05/28 18:33:11.650 +08:00] [WARN] [client_batch.go:223] ["init create streaming fail"] [target=172.7.160.231:20160] [error="context deadline exceeded"]

[2020/05/28 18:33:11.650 +08:00] [INFO] [region_cache.go:1523] ["[liveness] request kv status fail"] [store=172.7.160.231:20180] [error="Get http://172.7.160.231:20180/status: dial tcp 172.7.160.231:20180: connect: connection refused"]

Please check whether TiKV 172.7.160.231 is healthy. Thanks.
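Both log lines point at the same TiKV host, 172.7.160.231: the gRPC port 20160 times out and the status port 20180 refuses connections. When tidb.log is long, a small script can tally which TiKV hosts appear in failing requests. A minimal sketch, assuming the bracketed `[key=value]` layout of the lines above and that the TiKV address sits in a `target=` or `store=` field:

```python
import re
from collections import Counter

# Matches the TiKV address in fields such as [target=1.2.3.4:20160] or [store=1.2.3.4:20180].
ADDR_RE = re.compile(r"\[(?:target|store)=([\d.]+):(\d+)\]")


def unreachable_tikv_hosts(lines):
    """Count failing requests per TiKV host in TiDB log lines.

    Only lines that carry an [error=...] field are counted; ports are ignored
    so the gRPC and status ports of one host are tallied together.
    """
    counts = Counter()
    for line in lines:
        if "[error=" not in line:
            continue
        m = ADDR_RE.search(line)
        if m:
            counts[m.group(1)] += 1
    return counts
```

To run it against the real file: `with open("tidb.log") as f: print(unreachable_tikv_hosts(f))`.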

You can use pd-ctl to check the store status and confirm that it is Up. Thanks.
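pd-ctl prints store information as JSON. A minimal sketch that lists every store whose state is anything other than `Up`, assuming the shape returned by `pd-ctl store` and PD's `/pd/api/v1/stores` HTTP endpoint (a top-level `stores` array whose entries carry `store.address` and `store.state_name`); please verify the field names against your PD version:

```python
import json


def stores_not_up(stores_json: str):
    """Return (address, state_name) for every store whose state is not "Up".

    Assumed JSON shape:
    {"count": N, "stores": [{"store": {"address": ..., "state_name": ...}, ...}]}
    """
    data = json.loads(stores_json)
    bad = []
    for entry in data.get("stores", []):
        store = entry.get("store", {})
        # Missing state is reported as "Unknown" rather than silently treated as Up.
        state = store.get("state_name", "Unknown")
        if state != "Up":
            bad.append((store.get("address"), state))
    return bad
```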

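Scaling out PD fails with the same error.

All 3 PDs are now unusable (Down), and all the TiDB nodes have been scaled in and removed.

How can I reinstall without losing data?

I have created a new cluster.

How can I import the 2 TiKV nodes from the old cluster into this new cluster? Thanks.

The old cluster is now completely unusable. It started with a disk that may have been failing; I planned to scale in and then scale out again, but ran into all kinds of errors.

TiDB and TiKV are completely unusable.

The SST files still exist. How can I make the new cluster use them?

At first the service responded very slowly. Monitoring showed that I/O was saturated on all 5 TiKV nodes.

So I first scaled in 2 TiKV nodes. The scale-in reported a timeout, so I added `--force` to remove them forcibly.

Then I restarted the cluster. It reported that TiDB could not start, and several retries gave the same result.

I then decided to scale in TiDB and scale it out again, but after the scale-in TiDB could no longer be installed.

Then I upgraded tiup to v1.0.

I manually re-established SSH mutual trust, but TiDB still cannot be started or installed.

001.txt (194.5 KB)

So far only one PD and one TiKV are running; the status of the other TiKV is unknown.

Originally there were 3 PDs, 5 TiKVs, and 3 TiDBs.

One of them reports: cluster ID mismatch, local 6813007363201430470 != remote 6831897245719706867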
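This TiKV error means the cluster ID recorded in the TiKV data directory ("local") differs from the ID of the PD cluster it is connecting to ("remote"), so the store belongs to a different cluster from the current PD, for example after PD was redeployed and bootstrapped a new cluster. A small sketch that pulls both IDs out of the message so they can be compared with the cluster ID of the running PD:

```python
import re

MISMATCH_RE = re.compile(r"cluster ID mismatch, local (\d+) != remote (\d+)")


def parse_cluster_id_mismatch(message: str):
    """Return (local_id, remote_id) from a TiKV 'cluster ID mismatch' error, or None.

    local_id:  the cluster ID stored in this TiKV's data directory
    remote_id: the cluster ID reported by the PD it tried to join
    """
    m = MISMATCH_RE.search(message)
    if m is None:
        return None
    return int(m.group(1)), int(m.group(2))
```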

The new cluster works fine. Is there any way to make the new cluster use the old cluster's SST data?

来了老弟

May 29, 2020 02:12


Hello,

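I'd like to confirm some information with you, so please reply promptly; the information uploaded in this thread is also incomplete.

The current troubleshooting direction is to recover the old cluster as far as possible.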

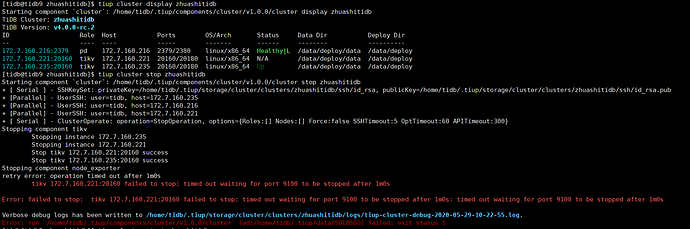

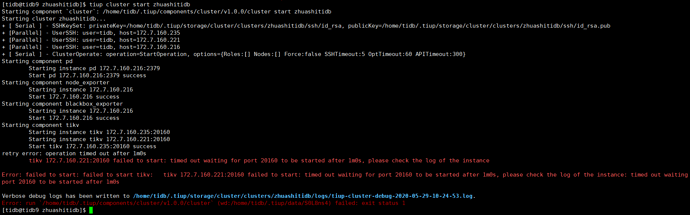

display old-cluster-name,

then stop old-cluster-name followed by start old-cluster-name. If any of these steps fails, please upload a screenshot of the operation and the debug log.
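The display, stop, start sequence above can be run in order, stopping at the first failure so that its output can be attached to the thread. A minimal sketch using Python's `subprocess`; `tiup cluster display|stop|start <cluster-name>` are the commands requested above, and the `runner` parameter is a hypothetical hook added so the sequence can be tried without touching a real cluster:

```python
import subprocess
from typing import Callable, List, Sequence, Tuple

Runner = Callable[[Sequence[str]], Tuple[int, str]]


def run_cmd(cmd: Sequence[str]) -> Tuple[int, str]:
    """Run a command and return (exit code, combined stdout and stderr)."""
    proc = subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True)
    return proc.returncode, proc.stdout


def display_stop_start(cluster: str, runner: Runner = run_cmd) -> List[Tuple[str, int, str]]:
    """Run display, stop and start in order, stopping at the first failing step.

    Returns one (step, exit_code, output) tuple per step that was executed.
    """
    results = []
    for step in ("display", "stop", "start"):
        code, out = runner(["tiup", "cluster", step, cluster])
        results.append((step, code, out))
        if code != 0:
            # Keep the failing step's output for the screenshot / debug-log upload.
            break
    return results
```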

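The problem feels complicated. I spent the whole night on it and may have run some wrong operations, so there are many problems now. Could you help take a look remotely? I can give you the server details.

This is the log of TiKV 221; it reports an ID mismatch: 221tilv.log (16.0 KB)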

editconfig.log (1.3 KB)

[tidb@tidb9 zhuashitidb]$ tiup cluster edit-config zhuashitidb

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.0.0/cluster edit-config zhuashitidb

global:
  user: tidb
  ssh_port: 23
  deploy_dir: /data/deploy
  data_dir: /data/deploy/data
  os: linux
  arch: amd64
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: /data/deploy/monitor-9100
  data_dir: /data/deploy/data/monitor-9100
  log_dir: /data/deploy/monitor-9100/log
server_configs:
  tidb: {}
  tikv:
    readpool.coprocessor.use-unified-pool: true
    readpool.storage.use-unified-pool: false
    readpool.unified.max-thread-count: 13
  pd: {}
  tiflash: {}
  tiflash-learner: {}
  pump: {}
  drainer: {}
  cdc: {}
tidb_servers: []
tikv_servers:
- host: 172.7.160.221
  ssh_port: 23
  port: 20160
  status_port: 20180
  deploy_dir: /data/deploy
  data_dir: /data/deploy/data
  log_dir: /data/deploy/log
  arch: amd64
  os: linux
- host: 172.7.160.235
  ssh_port: 23
  port: 20160
  status_port: 20180
  deploy_dir: /data/deploy
  data_dir: /data/deploy/data
  log_dir: /data/deploy/log
  arch: amd64
  os: linux
tiflash_servers: []
pd_servers:
- host: 172.7.160.216
  ssh_port: 23
  name: pd-172.7.160.216-2379

Logs under /data/deploy/monitor/log on the failed TiKV 221:

blackbox_exporter.log

level=info ts=2020-05-28T13:56:08.81309114Z caller=main.go:213 msg="Starting blackbox_exporter" version="(version=0.12.0, branch=HEAD, revision=4a22506cf0cf139d9b2f9cde099f0012d9fcabde)"

level=info ts=2020-05-28T13:56:08.814162456Z caller=main.go:220 msg="Loaded config file"

level=info ts=2020-05-28T13:56:08.814379285Z caller=main.go:324 msg="Listening on address" address=:9115

level=info ts=2020-05-28T15:17:28.24879446Z caller=main.go:213 msg="Starting blackbox_exporter" version="(version=0.12.0, branch=HEAD, revision=4a22506cf0cf139d9b2f9cde099f0012d9fcabde)"

level=info ts=2020-05-28T15:17:28.250041259Z caller=main.go:220 msg="Loaded config file"

level=info ts=2020-05-28T15:17:28.250258375Z caller=main.go:324 msg="Listening on address" address=:9115

level=info ts=2020-05-29T02:23:31.958024887Z caller=main.go:233 msg="Reloaded config file"

[root@tidb221 log]# cat node_exporter.log

time="2020-05-28T21:56:08+08:00" level=info msg="Starting node_exporter (version=0.17.0, branch=HEAD, revision=f6f6194a436b9a63d0439abc585c76b19a206b21)" source="node_exporter.go:82"

time="2020-05-28T21:56:08+08:00" level=info msg="Build context (go=go1.11.2, user=root@322511e06ced, date=20181130-15:51:33)" source="node_exporter.go:83"

time="2020-05-28T21:56:08+08:00" level=info msg="Enabled collectors:" source="node_exporter.go:90"

time="2020-05-28T21:56:08+08:00" level=info msg=" - arp" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - bcache" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - bonding" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - conntrack" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - cpu" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - diskstats" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - edac" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - entropy" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - filefd" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - filesystem" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - hwmon" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - infiniband" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - interrupts" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - ipvs" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - loadavg" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - mdadm" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - meminfo" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - meminfo_numa" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - mountstats" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - netclass" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - netdev" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - netstat" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - nfs" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - nfsd" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - sockstat" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - stat" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - systemd" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - tcpstat" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - textfile" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - time" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2020-05-28T21:56:08+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"

time="2020-05-28T23:17:33+08:00" level=info msg="Starting node_exporter (version=0.17.0, branch=HEAD, revision=f6f6194a436b9a63d0439abc585c76b19a206b21)" source="node_exporter.go:82"

time="2020-05-28T23:17:33+08:00" level=info msg="Build context (go=go1.11.2, user=root@322511e06ced, date=20181130-15:51:33)" source="node_exporter.go:83"

time="2020-05-28T23:17:33+08:00" level=info msg="Enabled collectors:" source="node_exporter.go:90"

time="2020-05-28T23:17:33+08:00" level=info msg=" - arp" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - bcache" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - bonding" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - conntrack" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - cpu" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - diskstats" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - edac" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - entropy" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - filefd" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - filesystem" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - hwmon" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - infiniband" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - interrupts" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - ipvs" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - loadavg" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - mdadm" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - meminfo" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - meminfo_numa" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - mountstats" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - netclass" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - netdev" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - netstat" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - nfs" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - nfsd" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - sockstat" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - stat" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - systemd" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - tcpstat" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - textfile" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - time" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2020-05-28T23:17:33+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"

4.

来了老弟

May 29, 2020 03:18


Hello,

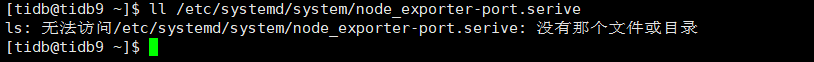

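Thank you very much for the information; it is complete and well organized. For step 4, please replace the port with the monitoring port. The main point is to check whether the node_exporter service file exists.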
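tiup runs the monitoring agents as systemd services. A minimal sketch that checks for both unit files, assuming tiup's `<component>-<port>.service` naming under /etc/systemd/system, which with this topology's ports gives `node_exporter-9100.service` and `blackbox_exporter-9115.service`. The suffix must be spelled `.service`, and port 9115 belongs to blackbox_exporter rather than node_exporter, so a path such as `node_exporter-9100.serive` will never exist whatever the state of the service.

```python
from pathlib import Path

# Assumed tiup naming for monitoring-agent unit files: <component>-<port>.service
UNIT_DIR = Path("/etc/systemd/system")


def monitor_unit_files(node_exporter_port=9100, blackbox_exporter_port=9115, unit_dir=UNIT_DIR):
    """Return {unit file path: exists?} for node_exporter and blackbox_exporter."""
    names = [
        f"node_exporter-{node_exporter_port}.service",
        f"blackbox_exporter-{blackbox_exporter_port}.service",
    ]
    return {str(unit_dir / name): (unit_dir / name).exists() for name in names}
```

Running `monitor_unit_files()` on 172.7.160.221 reports `False` for any unit file that is missing.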

After that step, please continue providing the following information:

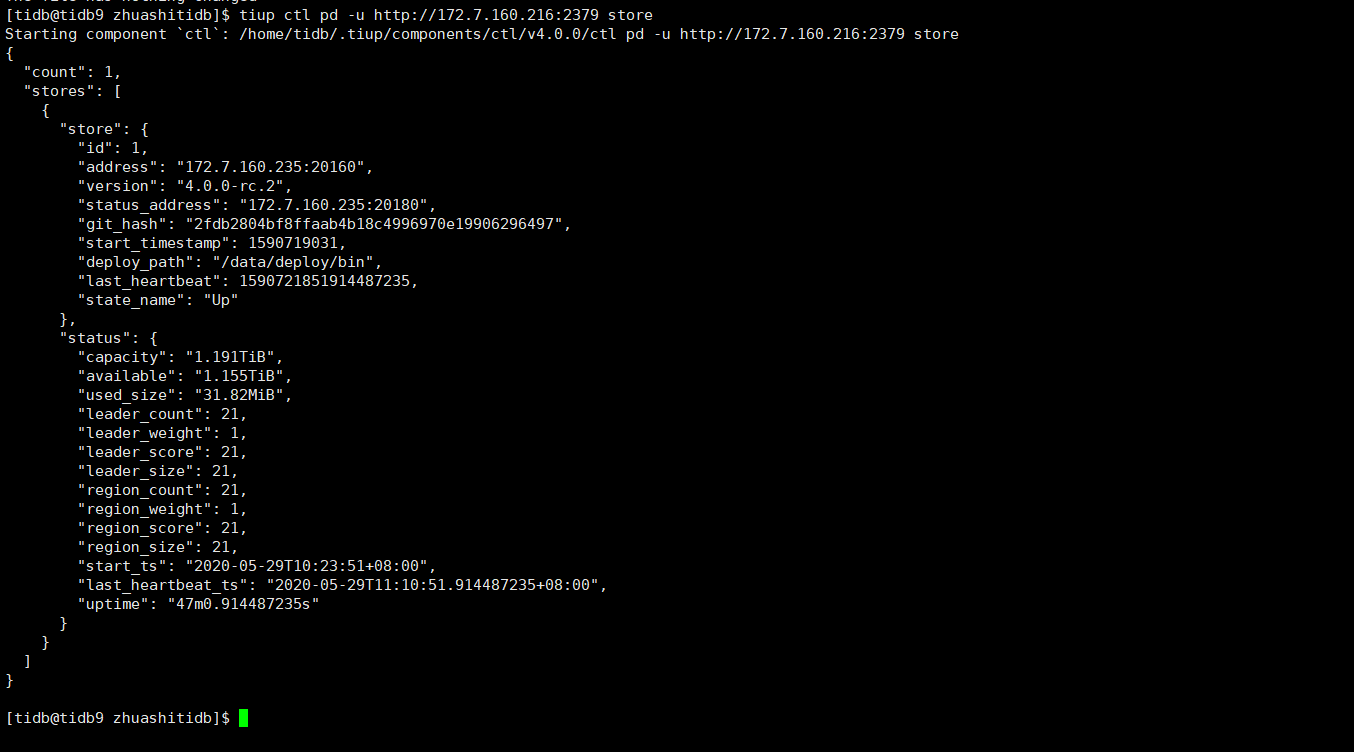

Delete the TiKV 221 entry in edit-config, run reload -R tikv, and share the output of display.
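`tiup cluster edit-config` opens the topology in an editor; the change requested here amounts to dropping the 172.7.160.221 entry from `tikv_servers`. A minimal sketch of that same change applied to the topology as a Python dict. Loading and saving the YAML is left out, since that needs a YAML library outside the standard library.

```python
import copy


def remove_tikv_host(topology: dict, host: str) -> dict:
    """Return a copy of `topology` with every tikv_servers entry for `host` removed.

    The input dict is left unchanged so the original topology can be compared or restored.
    """
    new_topo = copy.deepcopy(topology)
    servers = new_topo.get("tikv_servers", [])
    new_topo["tikv_servers"] = [s for s in servers if s.get("host") != host]
    return new_topo
```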

Run stop and check whether it succeeds; if it does not, share the TiKV error log and the debug log.

[root@tidb221 log]# ll /etc/systemd/system/node_exporter-9100.serive

ls: cannot access /etc/systemd/system/node_exporter-9100.serive: No such file or directory

[root@tidb221 log]# ll /etc/systemd/system/node_exporter-9115.serive

ls: cannot access /etc/systemd/system/node_exporter-9115.serive: No such file or directory

tidb9 is the control machine.

The reload succeeded:

[tidb@tidb9 ~]$ tiup cluster display zhuashitidb

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.0.0/cluster display zhuashitidb

TiDB Cluster: zhuashitidb

TiDB Version: v4.0.0-rc.2

ID                   Role  Host           Ports        OS/Arch       Status     Data Dir           Deploy Dir
--                   ----  ----           -----        -------       ------     --------           ----------
172.7.160.216:2379   pd    172.7.160.216  2379/2380    linux/x86_64  Healthy|L  /data/deploy/data  /data/deploy
172.7.160.235:20160  tikv  172.7.160.235  20160/20180  linux/x86_64  Up         /data/deploy/data  /data/deploy
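When the display output gets long, the Status column can be checked mechanically. A minimal sketch that parses the instance rows, assuming the eight whitespace-separated columns shown above and no spaces inside any value (TiDB's Data Dir is printed as `-`, which still counts as one field). `Healthy|L` marks the PD leader, so only the part before `|` is compared.

```python
FIELDS = ("id", "role", "host", "ports", "os_arch", "status", "data_dir", "deploy_dir")


def parse_display(output: str):
    """Parse instance rows from `tiup cluster display` text output.

    A row is kept when it has exactly 8 fields and its ID looks like host:port;
    this skips the title lines, the header and the dashed separator.
    """
    rows = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == len(FIELDS) and ":" in fields[0]:
            rows.append(dict(zip(FIELDS, fields)))
    return rows


def unhealthy(rows):
    """Return the IDs whose status is neither Up nor Healthy (ignoring suffixes like |L)."""
    return [r["id"] for r in rows if r["status"].split("|")[0] not in ("Up", "Healthy")]
```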

Step 2 (reload) succeeded, and display looks normal.

stop failed:

[tidb@tidb9 ~]$ tiup cluster stop zhuashitidb

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.0.0/cluster stop zhuashitidb

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.235

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.216

+ [ Serial ] - ClusterOperate: operation=StopOperation, options={Roles:[] Nodes:[] Force:false SSHTimeout:5 OptTimeout:60 APITimeout:300}

Stopping component tikv

Stopping instance 172.7.160.235

Stop tikv 172.7.160.235:20160 success

Stopping component node_exporter

Stopping component blackbox_exporter

Stopping component pd

Stopping instance 172.7.160.216

Stop pd 172.7.160.216:2379 success

Stopping component node_exporter

retry error: operation timed out after 1m0s

pd 172.7.160.216:2379 failed to stop: timed out waiting for port 9100 to be stopped after 1m0s

Error: failed to stop: pd 172.7.160.216:2379 failed to stop: timed out waiting for port 9100 to be stopped after 1m0s: timed out waiting for port 9100 to be stopped after 1m0s

Verbose debug logs has been written to /home/tidb/logs/tiup-cluster-debug-2020-05-29-11-31-10.log.

Error: run `/home/tidb/.tiup/components/cluster/v1.0.0/cluster` (wd:/home/tidb/.tiup/data/S0LPdBy) failed: exit status 1

stop-error.log (39.6 KB)
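This time the stop timed out waiting for port 9100 (node_exporter) on 172.7.160.216 to close, which suggests something was still listening on that port after tiup issued the stop. A minimal sketch of that kind of wait, using a TCP connect as the probe; that tiup's own check works the same way is an assumption, since it may run on the target host instead. If the sketch also keeps returning `False`, the next thing to look at is which process owns port 9100 on that host.

```python
import socket
import time


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Single probe: True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_port_closed(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until nothing accepts connections on host:port.

    Returns True once the port is closed, False if it is still open at the deadline.
    """
    deadline = time.monotonic() + timeout
    while is_listening(host, port, timeout=interval):
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
    return True
```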

来了老弟

May 29, 2020 03:45


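Hello,

Next, node_exporter_port needs to be restored.

Cut the monitored section from edit-config and paste it into a scale-out file (open an empty file with vi and put it there). The goal is to redeploy monitored and restore the basic information of the two monitoring components. (Please give it a try; we have not tested anything like this.)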

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
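The section to move is the top-level `monitored:` block of the edit-config output. A line-based sketch that copies that block out of the topology text so it can be saved as the scale-out file. It assumes tiup's layout, where each top-level key starts at column 0 and its body is indented, and it is not a general YAML parser.

```python
def extract_top_level_section(yaml_text: str, key: str) -> str:
    """Return the `key:` line plus its indented body from a topology text.

    The section ends at the next non-blank line that starts at column 0
    (the next top-level key or a top-level "- host:" list item).
    """
    out = []
    inside = False
    for line in yaml_text.splitlines():
        if not line.strip():
            # Blank lines neither start nor end a section; drop them.
            continue
        if not line[0].isspace():
            inside = line.rstrip() == f"{key}:"
        if inside:
            out.append(line)
    return "\n".join(out) + ("\n" if out else "")
```

The returned text could then be written to a scale-out file of your choosing with `vi` or `Path(...).write_text(...)`.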

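I copied the monitor-9100 directory from another node, and it now starts successfully. I have also scaled out 2 TiDB nodes.

TiKV 235 works now, but the data on TiKV 221 reports an ID mismatch. How can I make use of this data? Is the data on 235 alone incomplete?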
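Whether the data on 235 alone is complete depends on the Raft replicas. Assuming the default of 3 replicas per Region, each Region had peers on 3 of the original 5 TiKV stores and can only serve while a majority (2 of 3) of those peers sit on healthy stores. With 2 stores force-removed and 221 unable to rejoin, 235 holds one replica of only the Regions that had a peer on it, and none of those Regions has a majority on a single store. A minimal sketch of the majority check, where `region_peers` is a hypothetical mapping from Region ID to the store IDs of its peers (for example gathered from PD's region information):

```python
from typing import Dict, Iterable, List, Set


def regions_without_quorum(region_peers: Dict[int, Iterable[int]], alive_stores: Set[int]) -> List[int]:
    """Return the sorted IDs of Regions whose peers on alive stores are not a strict majority."""
    lost = []
    for region_id, stores in region_peers.items():
        stores = list(stores)
        alive = sum(1 for s in stores if s in alive_stores)
        # Raft needs more than half of the peers: 2 of 3, 3 of 5, ...
        if alive * 2 <= len(stores):
            lost.append(region_id)
    return sorted(lost)
```

For instance, with peers `{1: [1, 2, 3], 2: [1, 4, 5], 3: [2, 3, 5]}` and alive stores `{1, 2}`, Regions 2 and 3 come back as lacking a quorum.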

[tidb@tidb9 ~]$ tiup cluster display zhuashitidb

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.0.0/cluster display zhuashitidb

TiDB Cluster: zhuashitidb

TiDB Version: v4.0.0-rc.2

ID                   Role  Host           Ports        OS/Arch       Status     Data Dir           Deploy Dir
--                   ----  ----           -----        -------       ------     --------           ----------
172.7.160.216:2379   pd    172.7.160.216  2379/2380    linux/x86_64  Healthy|L  /data/deploy/data  /data/deploy
172.7.160.36:4000    tidb  172.7.160.36   4000/10080   linux/x86_64  Up         -                  /data/deploy
172.7.160.37:4000    tidb  172.7.160.37   4000/10080   linux/x86_64  Up         -                  /data/deploy
172.7.160.235:20160  tikv  172.7.160.235  20160/20180  linux/x86_64  Up         /data/deploy/data  /data/deploy