Current state:

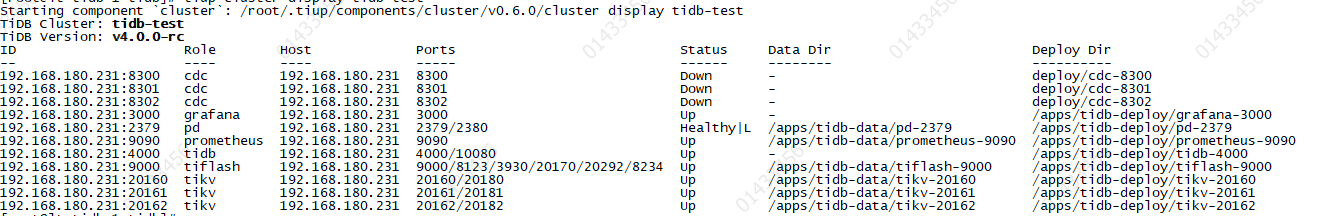

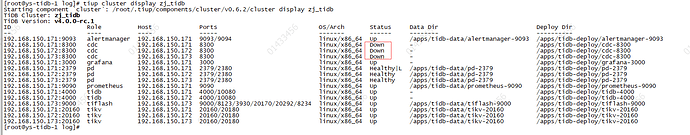

TiDB Version: v4.0.0-rc

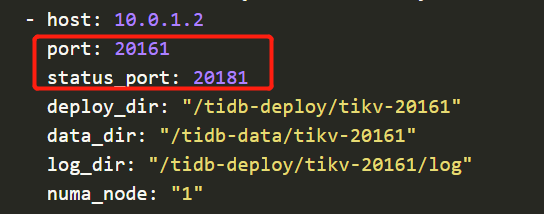

Single-machine deployment with multiple instances: 1 PD, 3 TiKV, 1 TiDB.

The cluster starts and works normally.

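2. Requirement: install the CDC component

I tried to add CDC to the existing cluster with the scale-out command of the tiup tool.

The configuration file is as follows: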

cdc_servers:
  - host: 192.168.180.231
    port: 8300
  - host: 192.168.180.231
    port: 8301
  - host: 192.168.180.231
    port: 8302
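Because all three CDC instances share one host, each entry needs its own port, otherwise two processes would compete for the same listening address. A minimal sketch of that sanity check follows; the `duplicate_endpoints` helper is hypothetical and the entries are copied by hand from the config above rather than parsed from the file.

```python
from collections import Counter

# Entries copied by hand from the cdc_servers section above.
cdc_servers = [
    {"host": "192.168.180.231", "port": 8300},
    {"host": "192.168.180.231", "port": 8301},
    {"host": "192.168.180.231", "port": 8302},
]


def duplicate_endpoints(servers):
    """Return the (host, port) pairs that appear more than once."""
    counts = Counter((s["host"], s["port"]) for s in servers)
    return sorted(endpoint for endpoint, n in counts.items() if n > 1)


if __name__ == "__main__":
    dups = duplicate_endpoints(cdc_servers)
    print(dups if dups else "no duplicate host:port pairs")
```

For the config in this post the check finds no duplicates, so a port clash between the three new instances themselves is unlikely to be the cause of the failure.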

3. Output after running the scale-out command:

- Download cdc:v4.0.0-rc … Done

- [ Serial ] - UserSSH: user=tidb, host=192.168.180.231

- [ Serial ] - UserSSH: user=tidb, host=192.168.180.231

- [ Serial ] - Mkdir: host=192.168.180.231, directories='/home/tidb/deploy/cdc-8300','','/home/tidb/deploy/cdc-8300/log','/home/tidb/deploy/cdc-8300/bin','/home/tidb/deploy/cdc-8300/conf','/home/tidb/deploy/cdc-8300/scripts'

- [ Serial ] - Mkdir: host=192.168.180.231, directories='/home/tidb/deploy/cdc-8302','','/home/tidb/deploy/cdc-8302/log','/home/tidb/deploy/cdc-8302/bin','/home/tidb/deploy/cdc-8302/conf','/home/tidb/deploy/cdc-8302/scripts'

- [ Serial ] - UserSSH: user=tidb, host=192.168.180.231

- [ Serial ] - Mkdir: host=192.168.180.231, directories='/home/tidb/deploy/cdc-8301','','/home/tidb/deploy/cdc-8301/log','/home/tidb/deploy/cdc-8301/bin','/home/tidb/deploy/cdc-8301/conf','/home/tidb/deploy/cdc-8301/scripts'

- [ Serial ] - CopyComponent: component=cdc, version=v4.0.0-rc, remote=192.168.180.231:/home/tidb/deploy/cdc-8301

- [ Serial ] - CopyComponent: component=cdc, version=v4.0.0-rc, remote=192.168.180.231:/home/tidb/deploy/cdc-8302

- [ Serial ] - CopyComponent: component=cdc, version=v4.0.0-rc, remote=192.168.180.231:/home/tidb/deploy/cdc-8300

- [ Serial ] - ScaleConfig: cluster=tidb-test, user=tidb, host=192.168.180.231, service=cdc-8301.service, deploy_dir=/home/tidb/deploy/cdc-8301, data_dir=, log_dir=/home/tidb/deploy/cdc-8301/log, cache_dir=

- [ Serial ] - ScaleConfig: cluster=tidb-test, user=tidb, host=192.168.180.231, service=cdc-8302.service, deploy_dir=/home/tidb/deploy/cdc-8302, data_dir=, log_dir=/home/tidb/deploy/cdc-8302/log, cache_dir=

- [ Serial ] - ScaleConfig: cluster=tidb-test, user=tidb, host=192.168.180.231, service=cdc-8300.service, deploy_dir=/home/tidb/deploy/cdc-8300, data_dir=, log_dir=/home/tidb/deploy/cdc-8300/log, cache_dir=

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}

Starting component pd

Starting instance pd 192.168.180.231:2379

Start pd 192.168.180.231:2379 success

Starting component node_exporter

Starting instance 192.168.180.231

Start 192.168.180.231 success

Starting component blackbox_exporter

Starting instance 192.168.180.231

Start 192.168.180.231 success

Starting component tikv

Starting instance tikv 192.168.180.231:20162

Starting instance tikv 192.168.180.231:20161

Starting instance tikv 192.168.180.231:20160

Start tikv 192.168.180.231:20162 success

Start tikv 192.168.180.231:20160 success

Start tikv 192.168.180.231:20161 success

Starting component tidb

Starting instance tidb 192.168.180.231:4000

Start tidb 192.168.180.231:4000 success

Starting component tiflash

Starting instance tiflash 192.168.180.231:9000

Start tiflash 192.168.180.231:9000 success

Starting component prometheus

Starting instance prometheus 192.168.180.231:9090

Start prometheus 192.168.180.231:9090 success

Starting component grafana

Starting instance grafana 192.168.180.231:3000

Start grafana 192.168.180.231:3000 success

Checking service state of pd

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:52:55 CST; 52min ago

Checking service state of tikv

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:52:56 CST; 52min ago

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:52:56 CST; 52min ago

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:52:56 CST; 52min ago

Checking service state of tidb

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:53:01 CST; 52min ago

Checking service state of tiflash

192.168.180.231 Active: active (running) since Thu 2020-05-21 17:53:14 CST; 52min ago

Checking service state of prometheus

192.168.180.231 Active: active (running) since Thu 2020-05-21 18:45:36 CST; 2s ago

Checking service state of grafana

192.168.180.231 Active: active (running) since Thu 2020-05-21 18:45:37 CST; 2s ago

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [Parallel] - UserSSH: user=tidb, host=192.168.180.231

- [ Serial ] - save meta

- [ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}

Starting component cdc

Starting instance cdc 192.168.180.231:8302

Starting instance cdc 192.168.180.231:8300

Starting instance cdc 192.168.180.231:8301

cdc 192.168.180.231:8301 failed to start: timed out waiting for port 8301 to be started after 1m0s, please check the log of the instance

cdc 192.168.180.231:8300 failed to start: timed out waiting for port 8300 to be started after 1m0s, please check the log of the instance

cdc 192.168.180.231:8302 failed to start: timed out waiting for port 8302 to be started after 1m0s, please check the log of the instance

Error: failed to start: failed to start cdc: cdc 192.168.180.231:8301 failed to start: timed out waiting for port 8301 to be started after 1m0s, please check the log of the instance: timed out waiting for port 8301 to be started after 1m0s

Verbose debug logs has been written to /root/logs/tiup-cluster-debug-2020-05-21-18-46-40.log.

Error: run /root/.tiup/components/cluster/v0.6.0/cluster (wd:/root/.tiup/data/RzcOwx2) failed: exit status 1
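The error asks to "check the log of the instance", and the ScaleConfig lines above show each instance's log directory as `/home/tidb/deploy/cdc-<port>/log`. As a rough sketch, the following Python pulls the failed instances out of the pasted output, maps each one to that directory, and probes whether the port accepts TCP connections. All function names are hypothetical, the name of the log file inside the directory is not shown in this post, and the probe is only an approximation: how tiup itself decides that a port "is started" may differ.

```python
import re
import socket

# Matches the per-instance failure lines printed in the output above.
FAILED_RE = re.compile(
    r"^cdc (?P<host>[\d.]+):(?P<port>\d+) failed to start: timed out",
    re.MULTILINE,
)


def failed_instances(output):
    """Return sorted, de-duplicated (host, port) pairs reported as failed."""
    return sorted({(m["host"], int(m["port"])) for m in FAILED_RE.finditer(output)})


def log_dir(port, deploy_root="/home/tidb/deploy"):
    """Log directory pattern taken from the ScaleConfig lines (log_dir=...)."""
    return f"{deploy_root}/cdc-{port}/log"


def is_listening(host, port, timeout=1.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Two of the failure lines from the post, shortened for the example.
    pasted = (
        "cdc 192.168.180.231:8301 failed to start: timed out waiting for port 8301\n"
        "cdc 192.168.180.231:8300 failed to start: timed out waiting for port 8300\n"
    )
    for host, port in failed_instances(pasted):
        state = "listening" if is_listening(host, port) else "not listening"
        print(f"{host}:{port} is {state}; logs under {log_dir(port)}")
```

If a port turns out not to be listening, the files under the printed log directory on 192.168.180.231 are the next place to look for the reason the cdc process did not come up.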