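I ran into a small problem today while getting familiar with tiup. Could someone from the tiup team please help confirm it?

Environment

OS: CentOS Linux release 7.4.1708 (Core)

tiup: tiup cluster v0.6.0

TiDB: v3.0.9

Topology: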

Starting component `cluster`: /root/.tiup/components/cluster/v0.6.0/cluster display test_cluster

TiDB Cluster: test_cluster

TiDB Version: v3.0.9

ID Role Host Ports Status Data Dir Deploy Dir

-- ---- ---- ----- ------ -------- ----------

172.21.141.61:3000 grafana 172.21.141.61 3000 Up - /apps/tidb/test_cluster/deploy/grafana-3000

172.21.141.61:2379 pd 172.21.141.61 2379/2380 Healthy i/apps/tidb/test_cluster/data/pd-2379 /apps/tidb/test_cluster/deploy/pd-2379

172.21.141.61:2381 pd 172.21.141.61 2381/2382 Healthy|L i/apps/tidb/test_cluster/data/pd-2381 /apps/tidb/test_cluster/deploy/pd-2381

172.21.141.61:2383 pd 172.21.141.61 2383/2384 Healthy i/apps/tidb/test_cluster/data/pd-2383 /apps/tidb/test_cluster/deploy/pd-2383

172.21.141.61:9090 prometheus 172.21.141.61 9090 Up i/apps/tidb/test_cluster/data/prometheus-9090 /apps/tidb/test_cluster/deploy/prometheus-9090

172.21.141.61:4000 tidb 172.21.141.61 4000/10080 Up - /apps/tidb/test_cluster/deploy/tidb-4000

172.21.141.61:20160 tikv 172.21.141.61 20160/20180 Up i/apps/tidb/test_cluster/data/tikv-20160 /apps/tidb/test_cluster/deploy/tikv-20160

172.21.141.61:20161 tikv 172.21.141.61 20161/20181 Up i/apps/tidb/test_cluster/data/tikv-20161 /apps/tidb/test_cluster/deploy/tikv-20161

172.21.141.61:20162 tikv 172.21.141.61 20162/20182 Up i/apps/tidb/test_cluster/data/tikv-20162 /apps/tidb/test_cluster/deploy/tikv-20162

172.21.141.61:20163 tikv 172.21.141.61 20163/20183 Up i/apps/tidb/test_cluster/data/tikv-20163 /apps/tidb/test_cluster/deploy/tikv-20163

Scale-out configuration file

[root@dbatest05 tiup]# cat scale_out_pd.yaml

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/apps/tidb/test_cluster/deploy"
  data_dir: "i/apps/tidb/test_cluster/data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

server_configs:
  tidb:
    log.slow-threshold: 300
  tikv:
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    replication.enable-placement-rules: true
  tiflash:
    logger.level: "info"

pd_servers:
  - host: 172.21.141.61
    client_port: 2385
    peer_port: 2386
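The new PD member shares 172.21.141.61 with three existing PD members and every other component, so a port clash is one thing worth ruling out. A minimal sketch, assuming the in-use ports are copied by hand from the `display` output and the `monitored` section above; `find_port_conflicts` is a hypothetical helper, not part of tiup:

```python
# Ports already in use on 172.21.141.61, copied by hand from the
# `tiup cluster display` output and the `monitored` section above.
USED_PORTS = {
    3000,                                # grafana
    2379, 2380, 2381, 2382, 2383, 2384,  # existing PD client/peer ports
    9090,                                # prometheus
    4000, 10080,                         # tidb-4000
    4001,                                # tidb-4001 (seen in the logs; status port not shown)
    20160, 20180, 20161, 20181,          # tikv
    20162, 20182, 20163, 20183,          # tikv
    9100, 9115,                          # node_exporter, blackbox_exporter
}


def find_port_conflicts(new_ports, used_ports=USED_PORTS):
    """Return the requested ports that are already taken, sorted ascending."""
    return sorted(set(new_ports) & set(used_ports))


# client_port and peer_port of the new PD member in scale_out_pd.yaml
print(find_port_conflicts([2385, 2386]))  # []
```

An empty result only means the new ports do not overlap the ports listed above; it says nothing about processes outside the cluster. On the host itself, `ss -ltn` shows what is actually listening.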

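Command executed

tiup cluster scale-out test_cluster ./scale_out_pd.yaml -y

Deployment log (it starts with a scale-in that removes the 172.21.141.61:2385 instance, after which the scale-out is run again):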

tiup cluster scale-in test_cluster -N 172.21.141.61:2385

Starting component `cluster`: /root/.tiup/components/cluster/v0.6.0/cluster scale-in test_cluster -N 172.21.141.61:2385

This operation will delete the 172.21.141.61:2385 nodes in `test_cluster` and all their data.

Do you want to continue? [y/N]: y

Scale-in nodes...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/test_cluster/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/test_cluster/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[172.21.141.61:2385] Force:false Timeout:300}

Stopping component pd

Stopping instance 172.21.141.61

Stop pd 172.21.141.61:2385 success

Destroying component pd

Destroying instance 172.21.141.61

Deleting paths on 172.21.141.61: i/apps/tidb/test_cluster/data/pd-2385 /apps/tidb/test_cluster/deploy/pd-2385 /apps/tidb/test_cluster/deploy/pd-2385/log /etc/systemd/system/pd-2385.service

Destroy 172.21.141.61 success

+ [ Serial ] - UpdateMeta: cluster=test_cluster, deleted=`'172.21.141.61:2385'`

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tikv-20160.service, deploy_dir=/apps/tidb/test_cluster/deploy/tikv-20160, data_dir=i/apps/tidb/test_cluster/data/tikv-20160, log_dir=/apps/tidb/test_cluster/deploy/tikv-20160/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tikv-20161.service, deploy_dir=/apps/tidb/test_cluster/deploy/tikv-20161, data_dir=i/apps/tidb/test_cluster/data/tikv-20161, log_dir=/apps/tidb/test_cluster/deploy/tikv-20161/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/pd-2381.service, deploy_dir=/apps/tidb/test_cluster/deploy/pd-2381, data_dir=i/apps/tidb/test_cluster/data/pd-2381, log_dir=/apps/tidb/test_cluster/deploy/pd-2381/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/pd-2383.service, deploy_dir=/apps/tidb/test_cluster/deploy/pd-2383, data_dir=i/apps/tidb/test_cluster/data/pd-2383, log_dir=/apps/tidb/test_cluster/deploy/pd-2383/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/pd-2379.service, deploy_dir=/apps/tidb/test_cluster/deploy/pd-2379, data_dir=i/apps/tidb/test_cluster/data/pd-2379, log_dir=/apps/tidb/test_cluster/deploy/pd-2379/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/grafana-3000.service, deploy_dir=/apps/tidb/test_cluster/deploy/grafana-3000, data_dir=, log_dir=/apps/tidb/test_cluster/deploy/grafana-3000/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tidb-4000.service, deploy_dir=/apps/tidb/test_cluster/deploy/tidb-4000, data_dir=, log_dir=/apps/tidb/test_cluster/deploy/tidb-4000/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tikv-20162.service, deploy_dir=/apps/tidb/test_cluster/deploy/tikv-20162, data_dir=i/apps/tidb/test_cluster/data/tikv-20162, log_dir=/apps/tidb/test_cluster/deploy/tikv-20162/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tikv-20163.service, deploy_dir=/apps/tidb/test_cluster/deploy/tikv-20163, data_dir=i/apps/tidb/test_cluster/data/tikv-20163, log_dir=/apps/tidb/test_cluster/deploy/tikv-20163/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/tidb-4001.service, deploy_dir=/apps/tidb/test_cluster/deploy/tidb-4001, data_dir=, log_dir=/apps/tidb/test_cluster/deploy/tidb-4001/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

+ [ Serial ] - InitConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, path=/root/.tiup/storage/cluster/clusters/test_cluster/config/prometheus-9090.service, deploy_dir=/apps/tidb/test_cluster/deploy/prometheus-9090, data_dir=i/apps/tidb/test_cluster/data/prometheus-9090, log_dir=/apps/tidb/test_cluster/deploy/prometheus-9090/log, cache_dir=/root/.tiup/storage/cluster/clusters/test_cluster/config

Scaled cluster `test_cluster` in successfully

[root@dbatest05 tiup]#

[root@dbatest05 tiup]#

[root@dbatest05 tiup]# tiup cluster scale-out test_cluster ./scale_out_pd.yaml -y

Starting component `cluster`: /root/.tiup/components/cluster/v0.6.0/cluster scale-out test_cluster ./scale_out_pd.yaml -y

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/test_cluster/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/test_cluster/ssh/id_rsa.pub

- Download pd:v3.0.9 ... Done

- Download blackbox_exporter:v0.12.0 ... Done

+ [ Serial ] - UserSSH: user=tidb, host=172.21.141.61

+ [ Serial ] - Mkdir: host=172.21.141.61, directories='/apps/tidb/test_cluster/deploy/pd-2385','i/apps/tidb/test_cluster/data/pd-2385','/apps/tidb/test_cluster/deploy/pd-2385/log','/apps/tidb/test_cluster/deploy/pd-2385/bin','/apps/tidb/test_cluster/deploy/pd-2385/conf','/apps/tidb/test_cluster/deploy/pd-2385/scripts'

+ [ Serial ] - CopyComponent: component=pd, version=v3.0.9, remote=172.21.141.61:/apps/tidb/test_cluster/deploy/pd-2385

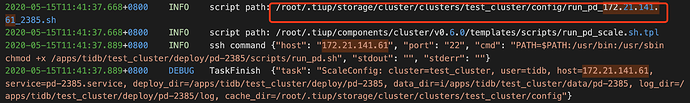

+ [ Serial ] - ScaleConfig: cluster=test_cluster, user=tidb, host=172.21.141.61, service=pd-2385.service, deploy_dir=/apps/tidb/test_cluster/deploy/pd-2385, data_dir=i/apps/tidb/test_cluster/data/pd-2385, log_dir=/apps/tidb/test_cluster/deploy/pd-2385/log, cache_dir=

script path: /root/.tiup/storage/cluster/clusters/test_cluster/config/run_pd_172.21.141.61_2385.sh

script path: /root/.tiup/components/cluster/v0.6.0/templates/scripts/run_pd_scale.sh.tpl

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}

Starting component pd

Starting instance pd 172.21.141.61:2383

Starting instance pd 172.21.141.61:2379

Starting instance pd 172.21.141.61:2381

Start pd 172.21.141.61:2383 success

Start pd 172.21.141.61:2381 success

Start pd 172.21.141.61:2379 success

Starting component node_exporter

Starting instance 172.21.141.61

Start 172.21.141.61 success

Starting component blackbox_exporter

Starting instance 172.21.141.61

Start 172.21.141.61 success

Starting component tikv

Starting instance tikv 172.21.141.61:20163

Starting instance tikv 172.21.141.61:20160

Starting instance tikv 172.21.141.61:20161

Starting instance tikv 172.21.141.61:20162

Start tikv 172.21.141.61:20161 success

Start tikv 172.21.141.61:20163 success

Start tikv 172.21.141.61:20162 success

Start tikv 172.21.141.61:20160 success

Starting component tidb

Starting instance tidb 172.21.141.61:4001

Starting instance tidb 172.21.141.61:4000

Start tidb 172.21.141.61:4001 success

Start tidb 172.21.141.61:4000 success

Starting component prometheus

Starting instance prometheus 172.21.141.61:9090

Start prometheus 172.21.141.61:9090 success

Starting component grafana

Starting instance grafana 172.21.141.61:3000

Start grafana 172.21.141.61:3000 success

Checking service state of pd

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:29:52 CST; 11min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:29:50 CST; 11min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:29:48 CST; 11min ago

Checking service state of tikv

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:29:57 CST; 11min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:30:58 CST; 10min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:29:59 CST; 11min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:30:01 CST; 11min ago

Checking service state of tidb

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:31:01 CST; 10min ago

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:30:59 CST; 10min ago

Checking service state of prometheus

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:31:02 CST; 10min ago

Checking service state of grafana

172.21.141.61 Active: active (running) since Fri 2020-05-15 11:31:02 CST; 10min ago

+ [Parallel] - UserSSH: user=tidb, host=172.21.141.61

+ [ Serial ] - save meta

+ [ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}

Starting component pd

Starting instance pd 172.21.141.61:2385

pd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance

Error: failed to start: failed to start pd: pd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance: timed out waiting for port 2385 to be started after 1m0s

Verbose debug logs has been written to /apps/tiup/logs/tiup-cluster-debug-2020-05-15-11-42-42.log.

Error: run `/root/.tiup/components/cluster/v0.6.0/cluster` (wd:/root/.tiup/data/Rz1bA32) failed: exit status 1

Error details from the debug log

2020-05-15T11:42:42.409+0800 ERROR pd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance

2020-05-15T11:42:42.409+0800 DEBUG TaskFinish {"task": "ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}", "error": "failed to start: failed to start pd: \tpd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance: timed out waiting for port 2385 to be started after 1m0s", "errorVerbose": "timed out waiting for port 2385 to be started after 1m0s\

github.com/pingcap-incubator/tiup-cluster/pkg/module.(*WaitFor).Execute

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/module/wait_for.go:89

github.com/pingcap-incubator/tiup-cluster/pkg/meta.PortStarted

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/meta/logic.go:106

github.com/pingcap-incubator/tiup-cluster/pkg/meta.(*instance).Ready

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/meta/logic.go:135

github.com/pingcap-incubator/tiup-cluster/pkg/operation.startInstance

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/operation/action.go:421

github.com/pingcap-incubator/tiup-cluster/pkg/operation.StartComponent.func1

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/operation/action.go:454

golang.org/x/sync/errgroup.(*Group).Go.func1

\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20190911185100-cd5d95a43a6e/errgroup/errgroup.go:57

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357

\tpd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance

failed to start pd

failed to start"}

2020-05-15T11:42:42.409+0800 INFO Execute command finished {"code": 1, "error": "failed to start: failed to start pd: \tpd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance: timed out waiting for port 2385 to be started after 1m0s", "errorVerbose": "timed out waiting for port 2385 to be started after 1m0s

github.com/pingcap-incubator/tiup-cluster/pkg/module.(*WaitFor).Execute

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/module/wait_for.go:89

github.com/pingcap-incubator/tiup-cluster/pkg/meta.PortStarted

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/meta/logic.go:106

github.com/pingcap-incubator/tiup-cluster/pkg/meta.(*instance).Ready

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/meta/logic.go:135

github.com/pingcap-incubator/tiup-cluster/pkg/operation.startInstance

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/operation/action.go:421

github.com/pingcap-incubator/tiup-cluster/pkg/operation.StartComponent.func1

\t/home/jenkins/agent/workspace/tiup-cluster-release/pkg/operation/action.go:454

golang.org/x/sync/errgroup.(*Group).Go.func1

\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20190911185100-cd5d95a43a6e/errgroup/errgroup.go:57

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357

\tpd 172.21.141.61:2385 failed to start: timed out waiting for port 2385 to be started after 1m0s, please check the log of the instance

failed to start pd

failed to start"}
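The failure is tiup's readiness check: per the stack trace, `meta.PortStarted` calls `module.(*WaitFor).Execute`, which gives up after 1m0s when port 2385 never comes up. The idea can be approximated by polling a TCP port until it accepts a connection or a timeout expires. This is only a sketch: it connects from wherever it runs and need not match how tiup checks the port internally, and `wait_for_port` is a hypothetical helper.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as a connection is accepted, False on timeout.
    The default of 60 seconds mirrors the "after 1m0s" in the tiup error.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Each attempt is bounded by `interval` so a filtered port cannot hang the loop.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Run on or near the PD host, `wait_for_port("172.21.141.61", 2385)` returning False corresponds to the timeout above. As the error message says, the next place to look is the instance log, which for this instance is under /apps/tidb/test_cluster/deploy/pd-2385/log (the log_dir in the ScaleConfig line).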

问题分析

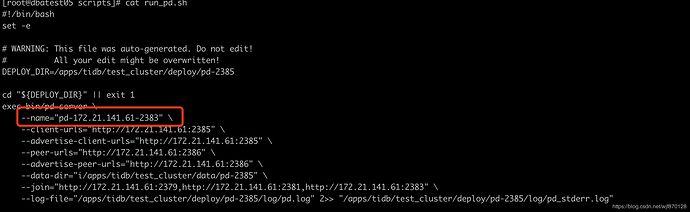

查看部署路径/apps/tidb/test_cluster/deploy/pd-2385已经包含,pd无法启动起来。排查问题发现/apps/tidb/test_cluster/deploy/pd-2385/scripts中的run_pd.sh脚本有问题

问题解决

1)将该name改为pd-172.21.141.61-2385,执行脚本/apps/tidb/test_cluster/deploy/pd-2385/scripts/run_pd.sh 即可成功启动。

2)tiup cluster edit-config test_cluster 手工添加pd-2385的配置信息

- host: 172.21.141.61
  ssh_port: 22
  name: pd-172.21.141.61-2385
  client_port: 2385
  peer_port: 2386
  deploy_dir: /apps/tidb/test_cluster/deploy/pd-2385
  data_dir: i/apps/tidb/test_cluster/data/pd-2385

- Then reload the cluster: tiup cluster reload test_cluster
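Step 1 can also be scripted instead of edited by hand. A minimal sketch, assuming run_pd.sh passes the member name to pd-server as a single `--name=...` argument (optionally quoted); the real script content is only visible in the screenshot, so check it before relying on this. `fix_pd_name`, `expected_pd_name` and `patch_run_pd` are hypothetical helpers, and the name format is the `pd-<host>-<client_port>` form used in the workaround.

```python
import re
from pathlib import Path

# Matches --name=value or --name="value"; the value stops at a quote,
# whitespace or a line-continuation backslash.
NAME_FLAG = re.compile(r'--name=("?)[^"\s\\]+\1')


def expected_pd_name(host, client_port):
    """Name format used in the workaround: pd-<host>-<client_port>."""
    return f"pd-{host}-{client_port}"


def fix_pd_name(script_text, new_name):
    """Return script_text with every --name=... argument set to new_name."""
    # A replacement function avoids re-interpreting new_name as a regex template.
    return NAME_FLAG.sub(lambda _m: f'--name="{new_name}"', script_text)


def patch_run_pd(path, host, client_port):
    """Rewrite --name in run_pd.sh in place, keeping a .bak copy of the original."""
    script = Path(path)
    original = script.read_text()
    script.with_name(script.name + ".bak").write_text(original)
    script.write_text(fix_pd_name(original, expected_pd_name(host, client_port)))
```

For this cluster the call would be `patch_run_pd("/apps/tidb/test_cluster/deploy/pd-2385/scripts/run_pd.sh", "172.21.141.61", 2385)`, run as a user allowed to write that file, after which the instance is started as in step 1.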

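Follow-up notes

1) Scaling out TiDB and TiKV on the same single machine did not hit this problem.

2) I suspect the script has a problem when it generates the PD name.

3) Manually specifying the PD name in the scale-out topology file fails with the same error. I suspect the name in run_pd.sh is generated automatically from the PD peer port (the port PD members use to sync data), which results in duplicate names. This looks like a bug in tiup itself.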
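The suspicion in points 2 and 3 comes down to whether two PD members end up with the same name. A small sketch for checking a naming rule against the four PD members on this host, assuming each member is identified by (host, client_port, peer_port) from the topology above. `name_from_client_port` follows the format used in the workaround; `name_from_host` is a deliberately bad hypothetical rule, included only to show what a collision looks like. Neither is claimed to be the rule tiup v0.6.0 actually uses.

```python
from collections import Counter

# PD members on the single host as (host, client_port, peer_port), from the topology above.
PD_MEMBERS = [
    ("172.21.141.61", 2379, 2380),
    ("172.21.141.61", 2381, 2382),
    ("172.21.141.61", 2383, 2384),
    ("172.21.141.61", 2385, 2386),  # the new member from scale_out_pd.yaml
]


def name_from_client_port(host, client_port, peer_port):
    """Format used in the workaround: pd-<host>-<client_port>."""
    return f"pd-{host}-{client_port}"


def name_from_host(host, client_port, peer_port):
    """Hypothetical rule that ignores ports; only illustrates a collision."""
    return f"pd-{host}"


def duplicate_names(members, rule):
    """Return the names produced by `rule` that more than one member would share."""
    counts = Counter(rule(*member) for member in members)
    return sorted(name for name, n in counts.items() if n > 1)


print(duplicate_names(PD_MEMBERS, name_from_client_port))  # []
print(duplicate_names(PD_MEMBERS, name_from_host))  # ['pd-172.21.141.61']
```

Any candidate rule (for example one derived from a port, as suspected in point 3) can be passed as `rule` to see whether it collides for these four members.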