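- First, find the node_exporter.service file on another node, under /etc/systemd/system/, and check whether the sh script it references (similar to the one below) exists on this machine. If it does, copy the service file from the other node and change the paths to match this machine.

ExecStart=/home/tidb/lqh-clusters/root_test/deploy02/monitor-19100/scripts/run_node_exporter.sh

- If it does not exist and there is no directory like monitor-19100, follow the scale-out documentation and scale out a node exporter.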
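The check described above can be sketched in shell. This is only a minimal illustration, assuming the unit file has already been copied to a local path; the function names `exec_start_path` and `check_unit_script` and the `/tmp` path are hypothetical, not part of tiup.

```shell
#!/usr/bin/env bash
# Print the executable path from the first ExecStart= line of a unit file.
exec_start_path() {
  local unit_file="$1"
  # Drop optional systemd prefix characters (-, @, +, !, :) and keep only
  # the first word after "ExecStart=".
  sed -n 's/^ExecStart=[-@+!:]*\([^[:space:]]*\).*/\1/p' "$unit_file" | head -n 1
}

# Report whether the script referenced by the unit file exists on this host.
check_unit_script() {
  local unit_file="$1" script
  script="$(exec_start_path "$unit_file")"
  if [ -n "$script" ] && [ -e "$script" ]; then
    echo "exists: $script"
  else
    echo "missing: $script"
    return 1
  fi
}

# Example (hypothetical local copy of the unit file from another node):
# check_unit_script /tmp/node_exporter-9100.service
```

If the script is reported missing, the second suggestion above (scaling out a node exporter) is the path to follow.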

There are no monitoring packages on it at all. This node probably never had monitoring installed, even before the upgrade.

I tried adding the following to scale-out.yaml:

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

Even so, the monitoring still cannot be deployed onto that node. I just ran it again and it reports the same error.

- Please post the output of tiup cluster display cluster-name.

- Did the scale-out succeed? Is there a service file under /etc/systemd/system now? Did the scale-out report any errors?

- What is currently running on this node? pd, tidb, tikv?

- The tikv currently on 48 was imported from ansible, right?

- Please scale out a tidb on 48 and then scale it in again; after that the monitoring should be in place.

- Sorry, there is currently no way to add monitoring on its own. We will discuss this with the product team. Thanks.

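Yes, it was imported. I just scaled out a TiDB on its own and still got the error that the monitoring cannot be set up. I also tried scaling out a TiKV, and it got stuck at the same point.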

[tidb@sit2-tidb-mysql-v-l-01:/home/tidb]$tiup cluster scale-out test-cluster scale-out.yaml

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v0.6.0/cluster scale-out test-cluster scale-out.yaml

Please confirm your topology:

TiDB Cluster: test-cluster

TiDB Version: v4.0.0-rc.1

Type Host Ports Directories

---- ---- ----- -----------

tidb 10.204.54.48 4000/10080 /data/TIDB1

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: y

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub

- Download blackbox_exporter:v0.12.0 ... Done

+ [ Serial ] - UserSSH: user=tidb, host=10.204.54.48

+ [ Serial ] - Mkdir: host=10.204.54.48, directories='/data/TIDB1','','/data/TIDB1/log','/data/TIDB1/bin','/data/TIDB1/conf','/data/TIDB1/scripts'

+ [ Serial ] - CopyComponent: component=tidb, version=v4.0.0-rc.1, remote=10.204.54.48:/data/TIDB1

+ [ Serial ] - ScaleConfig: cluster=test-cluster, user=tidb, host=10.204.54.48, service=tidb-4000.service, deploy_dir=/data/TIDB1, data_dir=, log_dir=/data/TIDB1/log, cache_dir=

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.78

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.86

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.85

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.48

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.78

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.78

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.86

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.78

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.86

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.86

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.78

+ [Parallel] - UserSSH: user=tidb, host=10.204.54.85

+ [ Serial ] - ClusterOperate: operation=StartOperation, options={Roles:[] Nodes:[] Force:false Timeout:0}

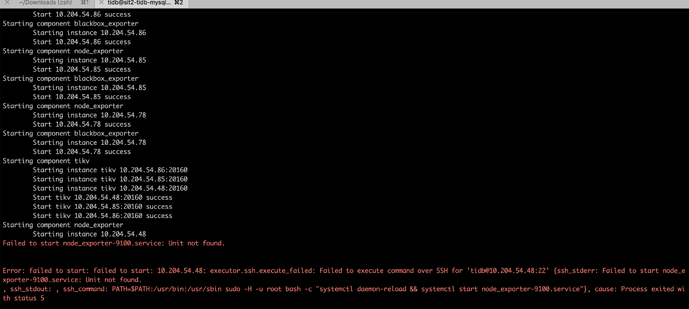

Starting component pd

Starting instance pd 10.204.54.78:2379

Starting instance pd 10.204.54.86:2379

Starting instance pd 10.204.54.85:2379

Start pd 10.204.54.78:2379 success

Start pd 10.204.54.85:2379 success

Start pd 10.204.54.86:2379 success

Starting component node_exporter

Starting instance 10.204.54.86

Start 10.204.54.86 success

Starting component blackbox_exporter

Starting instance 10.204.54.86

Start 10.204.54.86 success

Starting component node_exporter

Starting instance 10.204.54.85

Start 10.204.54.85 success

Starting component blackbox_exporter

Starting instance 10.204.54.85

Start 10.204.54.85 success

Starting component node_exporter

Starting instance 10.204.54.78

Start 10.204.54.78 success

Starting component blackbox_exporter

Starting instance 10.204.54.78

Start 10.204.54.78 success

Starting component tikv

Starting instance tikv 10.204.54.86:20160

Starting instance tikv 10.204.54.48:20160

Starting instance tikv 10.204.54.85:20160

Start tikv 10.204.54.85:20160 success

Start tikv 10.204.54.48:20160 success

Start tikv 10.204.54.86:20160 success

Starting component node_exporter

Starting instance 10.204.54.48

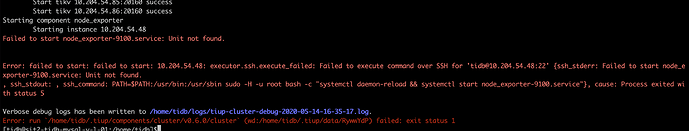

Failed to start node_exporter-9100.service: Unit not found.

Error: failed to start: failed to start: 10.204.54.48: executor.ssh.execute_failed: Failed to execute command over SSH for 'tidb@10.204.54.48:22' {ssh_stderr: Failed to start node_exporter-9100.service: Unit not found.

, ssh_stdout: , ssh_command: PATH=$PATH:/usr/bin:/usr/sbin sudo -H -u root bash -c "systemctl daemon-reload && systemctl start node_exporter-9100.service"}, cause: Process exited with status 5

Verbose debug logs has been written to /home/tidb/logs/tiup-cluster-debug-2020-05-14-16-59-36.log.

Error: run `/home/tidb/.tiup/components/cluster/v0.6.0/cluster` (wd:/home/tidb/.tiup/data/Ryx2fWa) failed: exit status 1
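The "Unit not found" message in the log above means systemd has no unit file by that name on 10.204.54.48. A minimal sketch for confirming this on the node follows; the helper name `unit_file_present` is hypothetical, and the blackbox unit name `blackbox_exporter-9115.service` is an assumption derived from the port in scale-out.yaml, not something shown in the log.

```shell
#!/usr/bin/env bash
# Return 0 if a unit file with the given name exists in the given directory
# (default: /etc/systemd/system).
unit_file_present() {
  local unit="$1" dir="${2:-/etc/systemd/system}"
  [ -f "$dir/$unit" ]
}

# node_exporter-9100.service is the name from the error message;
# blackbox_exporter-9115.service is an assumed name.
for unit in node_exporter-9100.service blackbox_exporter-9115.service; do
  if unit_file_present "$unit"; then
    echo "$unit: unit file present"
  else
    echo "$unit: unit file missing, systemctl start will report 'Unit not found'"
  fi
done
```

Running the same check on a node where monitoring works (for example 10.204.54.86) gives a baseline to compare against.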

One moment, we will look into this further.

OK, no problem. This is a test environment, so I will just rebuild it later.

Is it urgent? If possible, please hold off on rebuilding. If we cannot solve it, we will ask you to rebuild then. Thanks.

OK, I will wait for your reply.

Do your other nodes have this monitoring in place?

Yes, they do. This one node without it was probably left out of the monitor group when the cluster was deployed with ansible.

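- For now, you could copy the monitoring files over from another node:

From /etc/systemd/system/: the blackbox_exporter xx.service and node_exporter-9100.service files. From /home/tidb/deploy/ (your deploy directory): copy all the directories over. Then compare the contents and adjust them for this machine.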

- If this is a test environment, it is probably easier to tear it down and reinstall.

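- This problem is most likely because ansible did not install monitoring on this node at the time. We will also think about how to avoid it. Thanks.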
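For the copy-from-another-node suggestion above, the path adjustment could be sketched roughly as follows. The function `rewrite_deploy_dir` and the placeholders `PEER`, `OLD_DIR`, and `NEW_DIR` are hypothetical; the scripts under the deploy directory would need the same treatment, and the result should be compared by hand before starting the service.

```shell
#!/usr/bin/env bash
# Print a unit file with every occurrence of the peer's deploy directory
# replaced by the local deploy directory.
rewrite_deploy_dir() {
  local unit_file="$1" old_dir="$2" new_dir="$3"
  # "|" as the sed delimiter avoids escaping the slashes in the paths.
  # Note: old_dir is treated as a regular expression, so "." matches any
  # character; that is acceptable for a quick one-off edit.
  sed "s|$old_dir|$new_dir|g" "$unit_file"
}

# Hypothetical usage on the node that lacks monitoring:
# scp "tidb@$PEER:/etc/systemd/system/node_exporter-9100.service" /tmp/
# rewrite_deploy_dir /tmp/node_exporter-9100.service "$OLD_DIR" "$NEW_DIR" \
#   | sudo tee /etc/systemd/system/node_exporter-9100.service
# sudo systemctl daemon-reload
```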

I also have cases where different clusters share one server. As a result, cluster A can monitor that machine but cluster B cannot.

You can try deploying the monitoring for each cluster on different ports.
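Before picking different ports, it may help to see which exporter ports are already taken on the shared machine. A minimal sketch, assuming `ss` is available on the host; the helper `port_listening` is hypothetical, 19100 is taken from the monitor-19100 directory name mentioned earlier, and 19115 is only an illustrative guess.

```shell
#!/usr/bin/env bash
# Read listening-socket lines (for example from `ss -ltn`) on stdin and
# succeed if any local address in column 4 ends in ":PORT".
port_listening() {
  local port="$1"
  awk -v p=":$port" '$4 ~ (p "$") { found = 1 } END { exit !found }'
}

# Example: probe the default exporter ports and one alternative pair.
# for port in 9100 9115 19100 19115; do
#   if ss -ltn | port_listening "$port"; then
#     echo "$port in use"
#   else
#     echo "$port free"
#   fi
# done
```

Ports reported as free could then be used for node_exporter_port and blackbox_exporter_port in the second cluster's monitored section.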
