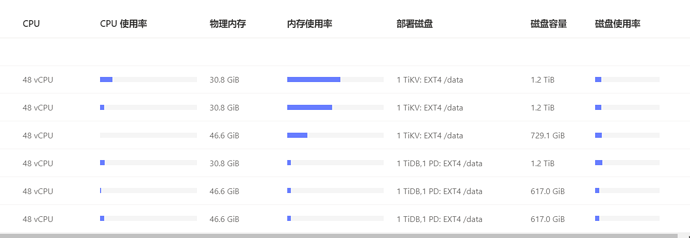

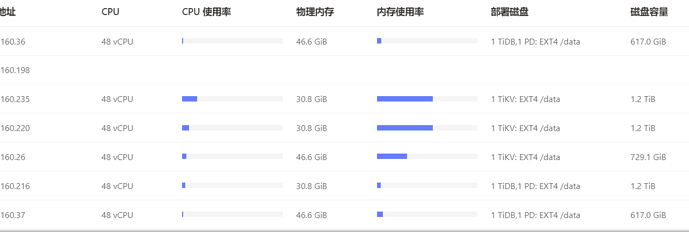

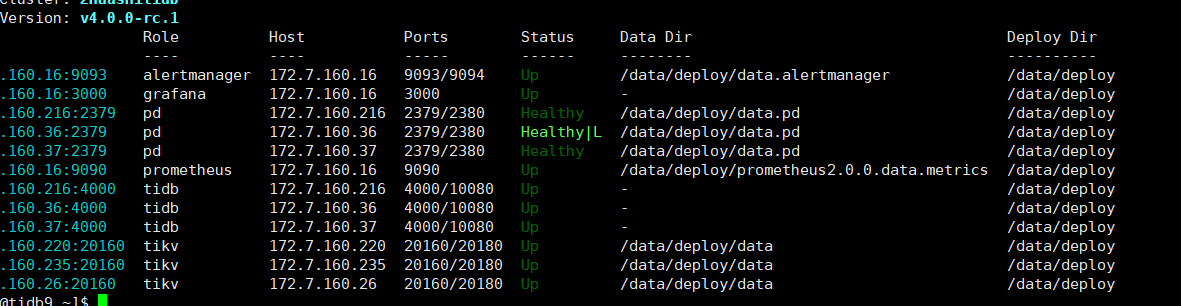

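The topology is shown in the screenshots above. Scaling in one TiKV node failed with the error shown below, and the log is attached. What is the cause?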

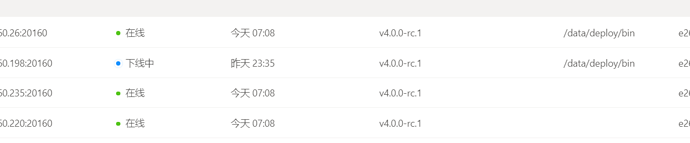

Afterwards I ran `tiup cluster display` with the cluster name, and the node appears to have gone offline anyway.

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v0.6.0/cluster scale-in zhuashitidb --node 172.7.160.198:20160

This operation will delete the 172.7.160.198:20160 nodes in `zhuashitidb` and all their data.

Do you want to continue? [y/N]: y

Scale-in nodes...

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.37

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.36

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.220

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.36

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.198

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.26

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.216

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.235

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.37

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.216

+ [ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[172.7.160.198:20160] Force:false Timeout:300}

The component `tikv` will be destroyed when display cluster info when it become tombstone, maybe exists in several minutes or hours

+ [ Serial ] - UpdateMeta: cluster=zhuashitidb, deleted=`''`

+ [ Serial ] - Download: component=alertmanager, version=v0.17.0

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.37, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - CopyComponent: component=alertmanager, version=v0.17.0, remote=172.7.160.16:/data/deploy

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.37, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - Download: component=prometheus, version=v4.0.0-rc.1

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.235, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - CopyComponent: component=prometheus, version=v4.0.0-rc.1, remote=172.7.160.16:/data/deploy

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.26, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.216, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.36, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.36, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - Download: component=grafana, version=v4.0.0-rc.1

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.220, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - CopyComponent: component=grafana, version=v4.0.0-rc.1, remote=172.7.160.16:/data/deploy

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.216, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/alertmanager-9093.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.alertmanager, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/prometheus-9090.service, deploy_dir=/data/deploy, data_dir=/data/deploy/prometheus2.0.0.data.metrics, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/grafana-3000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

Error: Process exited with status 1

Verbose debug logs has been written to /home/tidb/logs/tiup-cluster-debug-2020-05-09-07-04-01.log.

Error: run `/home/tidb/.tiup/components/cluster/v0.6.0/cluster` (wd:/home/tidb/.tiup/data/RyRNuoC) failed: exit status 1

tiup-cluster-debug-2020-05-09-07-04-01.log (69.2 KB)

After this error, reloading the cluster also fails:

[tidb@tidb9 ~]$ tiup cluster reload zhuashitidb

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v0.6.0/cluster reload zhuashitidb

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.37

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.235

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.26

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.216

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.36

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.220

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.216

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.37

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.16

+ [Parallel] - UserSSH: user=tidb, host=172.7.160.36

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.220

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.235

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.16

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.37

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.235, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.36

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.220, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.36

+ [ Serial ] - Download: component=alertmanager, version=v0.17.0

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.26

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.37

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.37, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.216

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.16

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.16

+ [ Serial ] - Download: component=grafana, version=v4.0.0-rc.1

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.216, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - UserSSH: user=tidb, host=172.7.160.216

+ [ Serial ] - CopyComponent: component=alertmanager, version=v0.17.0, remote=172.7.160.16:/data/deploy

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.216, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.36, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tidb-4000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.37, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.36, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/pd-2379.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.pd, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - Download: component=prometheus, version=v4.0.0-rc.1

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.26, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/tikv-20160.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - CopyComponent: component=prometheus, version=v4.0.0-rc.1, remote=172.7.160.16:/data/deploy

+ [ Serial ] - CopyComponent: component=grafana, version=v4.0.0-rc.1, remote=172.7.160.16:/data/deploy

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/alertmanager-9093.service, deploy_dir=/data/deploy, data_dir=/data/deploy/data.alertmanager, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/prometheus-9090.service, deploy_dir=/data/deploy, data_dir=/data/deploy/prometheus2.0.0.data.metrics, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

+ [ Serial ] - InitConfig: cluster=zhuashitidb, user=tidb, host=172.7.160.16, path=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config/grafana-3000.service, deploy_dir=/data/deploy, data_dir=, log_dir=/data/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/zhuashitidb/config

Error: Process exited with status 1

Verbose debug logs has been written to /home/tidb/logs/tiup-cluster-debug-2020-05-09-07-37-23.log.

Error: run `/home/tidb/.tiup/components/cluster/v0.6.0/cluster` (wd:/home/tidb/.tiup/data/RyRWQmy) failed: exit status 1