【TiDB version】:

4.0 RC

【Problem description】:

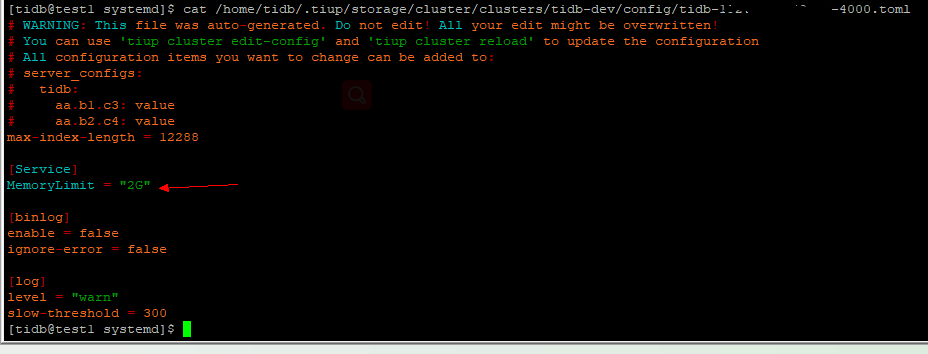

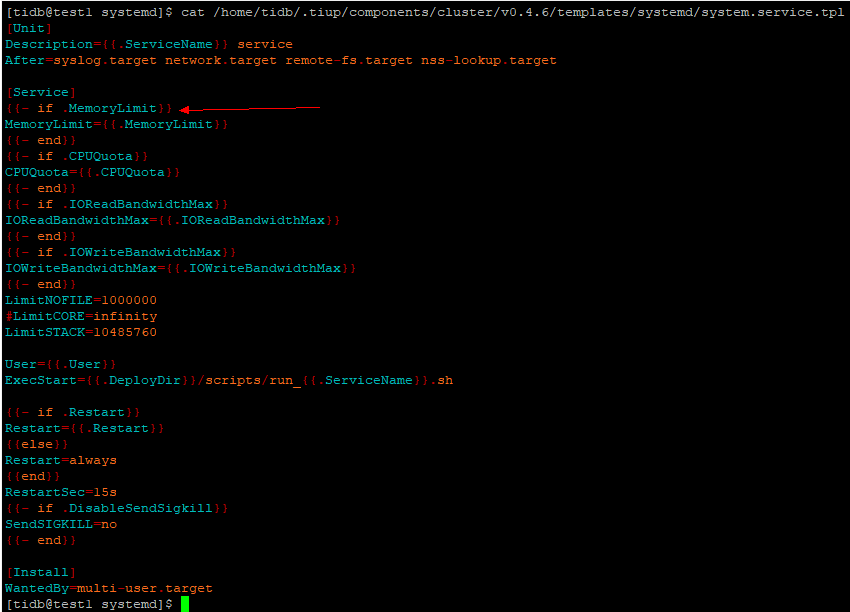

`tiup cluster edit-config tidb-dev`:

```yaml
server_configs:
  tidb:
    binlog.enable: false
    binlog.ignore-error: false
    log.level: warn
    log.slow-threshold: 300
    max-index-length: 12288
    max-memory: 25G     # adding this has no effect
    memory-limit: 25G   # adding this has no effect either
    MemoryLimit: 25G    # adding this has no effect either
```

For comparison, the `inventory.ini` file used with 3.0.11:

```ini
## TiDB Cluster Part
[tidb_servers]
177.6.192.31
177.6.192.32
#177.6.180.58
#177.6.180.59

[tikv_servers]
#177.6.192.34
#177.6.192.35
#177.6.192.36
177.6.181.110
177.6.181.111
177.6.181.112

[pd_servers]
177.6.192.31
177.6.192.32
177.6.192.33

[spark_master]

[spark_slaves]

[lightning_server]

[importer_server]

## Monitoring Part
# prometheus and pushgateway servers
[monitoring_servers]
177.6.192.33

[grafana_servers]
177.6.192.33

# node_exporter and blackbox_exporter servers
[monitored_servers]
177.6.192.31
177.6.192.32
177.6.192.33
#177.6.180.58
#177.6.180.59
#177.6.192.34
#177.6.192.35
#177.6.192.36
177.6.181.110
177.6.181.111
177.6.181.112

[alertmanager_servers]
177.6.192.33

[kafka_exporter_servers]

## Binlog Part
[pump_servers]

[drainer_servers]

## Group variables
[pd_servers:vars]
# location_labels = ["zone","rack","host"]

## Global variables
[all:vars]
deploy_dir = /home/tidb/deploy

## Connection
# ssh via normal user
ansible_user = tidb

cluster_name = test-cluster

tidb_version = v3.0.11

# process supervision, [systemd, supervise]
process_supervision = systemd

timezone = Asia/Shanghai

enable_firewalld = False
# check NTP service
enable_ntpd = True
set_hostname = False

## binlog trigger
enable_binlog = False

# kafka cluster address for monitoring, example:
# kafka_addrs = "177.6.0.11:9092,177.6.0.12:9092,177.6.0.13:9092"
kafka_addrs = ""

# zookeeper address of kafka cluster for monitoring, example:
# zookeeper_addrs = "177.6.0.11:2181,177.6.0.12:2181,177.6.0.13:2181"
zookeeper_addrs = ""

# enable TLS authentication in the TiDB cluster
enable_tls = False

# KV mode
deploy_without_tidb = False

# wait for region replication complete before start tidb-server.
wait_replication = True

# Optional: Set if you already have a alertmanager server.
# Format: alertmanager_host:alertmanager_port
alertmanager_target = ""

grafana_admin_user = "admin"
grafana_admin_password = "admin"

### Collect diagnosis
collect_log_recent_hours = 2

enable_bandwidth_limit = True
# default: 10Mb/s, unit: Kbit/s
collect_bandwidth_limit = 10000

MemoryLimit = 58G
```
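To put the 3.0.11 value (58G) next to the 25G being attempted in TiUP, here is a minimal Python sketch that reads the inventory with the standard-library `configparser` and converts `MemoryLimit` to bytes. It assumes, as the variable name suggests, that the value is a systemd-style size (systemd reads K/M/G/T suffixes as base 1024); that mapping is an assumption, not something this post confirms. The helpers `to_bytes` and `load_inventory` are hypothetical, and the inventory text is trimmed to the relevant sections.

```python
import configparser

# Trimmed copy of the 3.0.11 inventory above; the full file parses the same way.
INVENTORY = """
[tidb_servers]
177.6.192.31
177.6.192.32
#177.6.180.58

[all:vars]
deploy_dir = /home/tidb/deploy
tidb_version = v3.0.11
MemoryLimit = 58G
"""

# Assumption: sizes follow systemd's convention, where K/M/G/T are base 1024.
_SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size: str) -> int:
    """Convert a size such as '58G' (or a plain byte count) to an int."""
    size = size.strip()
    unit = size[-1].upper()
    if unit in _SUFFIXES:
        return int(size[:-1]) * _SUFFIXES[unit]
    return int(size)


def load_inventory(text: str) -> configparser.ConfigParser:
    # Host lines have no value, so allow_no_value is needed; only '=' is a
    # delimiter, and optionxform=str keeps 'MemoryLimit' from being lowercased.
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.optionxform = str
    parser.read_string(text)
    return parser


inv = load_inventory(INVENTORY)
tidb_hosts = list(inv["tidb_servers"])  # commented-out hosts are skipped
limit = inv["all:vars"]["MemoryLimit"]
print(tidb_hosts, limit, to_bytes(limit))
# prints: ['177.6.192.31', '177.6.192.32'] 58G 62277025792
```

By the same conversion, the 25G tried in `edit-config` would be `to_bytes("25G") == 26843545600` bytes.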