
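- TiDB version: 3.0.5

- Problem description:

DM replication was interrupted by a SQL error, so replication into the TiDB database is paused.

No connection is currently writing data to the TiDB database, and queries against the corresponding table keep returning no rows.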

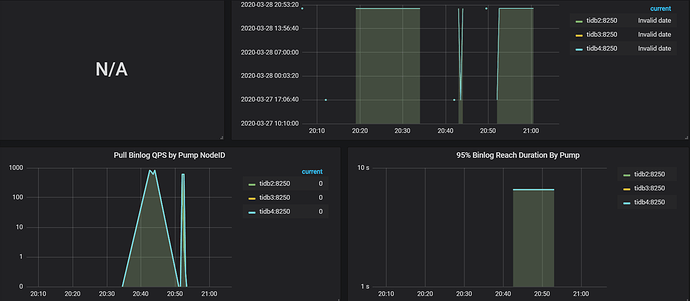

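Drainer keeps writing data to Kafka, and the message count has already passed 10,000, yet no data has been written to the corresponding database.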

drainer_error.log

goroutine 262 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc004684030, 0xc0022e0ee0)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:52:06 Connected to 192.168.10.203:2181

2020/03/27 18:52:06 Authenticated: id=216314377583788199, timeout=40000

2020/03/27 18:52:06 Re-submitting `0` credentials after reconnect

2020/03/27 18:52:06 Recv loop terminated: err=EOF

2020/03/27 18:52:06 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 260 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc004e00000, 0xc004da8ee0)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:52:27 Connected to 192.168.10.204:2181

2020/03/27 18:52:27 Authenticated: id=288371984454123681, timeout=40000

2020/03/27 18:52:27 Re-submitting `0` credentials after reconnect

2020/03/27 18:52:27 Recv loop terminated: err=EOF

2020/03/27 18:52:27 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 211 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc005010000, 0xc004ff8770)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:52:48 Connected to 192.168.10.203:2181

2020/03/27 18:52:48 Authenticated: id=216314377583788200, timeout=40000

2020/03/27 18:52:48 Re-submitting `0` credentials after reconnect

2020/03/27 18:52:48 Recv loop terminated: err=EOF

2020/03/27 18:52:48 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 236 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc004be2000, 0xc004acdf10)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:53:09 Connected to 192.168.10.202:2181

2020/03/27 18:53:09 Authenticated: id=144262481109385386, timeout=40000

2020/03/27 18:53:09 Re-submitting `0` credentials after reconnect

2020/03/27 18:53:09 Recv loop terminated: err=EOF

2020/03/27 18:53:09 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 260 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc004a44000, 0xc003c20a80)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:53:31 Connected to 192.168.10.203:2181

2020/03/27 18:53:31 Authenticated: id=216314377583788201, timeout=40000

2020/03/27 18:53:31 Re-submitting `0` credentials after reconnect

2020/03/27 18:53:31 Recv loop terminated: err=EOF

2020/03/27 18:53:31 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 254 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc004ea0310, 0xc003b768c0)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:53:51 Connected to 192.168.10.201:2181

2020/03/27 18:53:51 Authenticated: id=72204371365855368, timeout=40000

2020/03/27 18:53:51 Re-submitting `0` credentials after reconnect

2020/03/27 18:53:51 Recv loop terminated: err=EOF

2020/03/27 18:53:51 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 266 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc0045e6040, 0xc0021d57a0)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:54:12 Connected to 192.168.10.202:2181

2020/03/27 18:54:12 Authenticated: id=144262481109385387, timeout=40000

2020/03/27 18:54:12 Re-submitting `0` credentials after reconnect

2020/03/27 18:54:12 Recv loop terminated: err=EOF

2020/03/27 18:54:12 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.

goroutine 191 [running]:

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run.func2(0xc0019cc010, 0xc003eaaa80)

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:188 +0xa9

created by github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

/home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:184 +0xd0

2020/03/27 18:54:33 Connected to 192.168.10.202:2181

2020/03/27 18:54:33 Authenticated: id=144262481109385388, timeout=40000

2020/03/27 18:54:33 Re-submitting `0` credentials after reconnect

2020/03/27 18:54:33 Recv loop terminated: err=EOF

2020/03/27 18:54:33 Send loop terminated: err=<nil>

panic: kafka: Failed to produce message to topic kafka_binlog: kafka server: Message was too large, server rejected it to avoid allocation error.
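
The log above shows the same panic recurring about every 21 seconds, which would be consistent with Drainer restarting and failing on the same oversized message each time. To confirm that from the full file, a small script can count the panic messages and note the last timestamp seen before each one. This is only a sketch that assumes the log format shown above; `summarize_panics` and both regular expressions are illustrative names, not part of TiDB Binlog.

```python
import re
from collections import Counter

# Matches timestamped lines such as
# "2020/03/27 18:52:06 Connected to 192.168.10.203:2181".
TIMESTAMP_RE = re.compile(r"^(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) ")
# Matches Go panic lines such as "panic: kafka: Failed to produce message ...".
PANIC_RE = re.compile(r"^panic: (.*)$")


def summarize_panics(log_text):
    """Count each distinct panic message and remember the most recent
    timestamp that appeared in the log before it."""
    counts = Counter()
    last_seen = {}
    current_ts = None
    for raw_line in log_text.splitlines():
        line = raw_line.strip()
        ts_match = TIMESTAMP_RE.match(line)
        if ts_match:
            current_ts = ts_match.group(1)
            continue
        panic_match = PANIC_RE.match(line)
        if panic_match:
            message = panic_match.group(1)
            counts[message] += 1
            last_seen[message] = current_ts
    return counts, last_seen
```

It could be run as `counts, last_seen = summarize_panics(open("drainer_error.log").read())`. A single message with a high count and steadily advancing timestamps would point to one binlog entry that keeps exceeding the Kafka message size limit, rather than to several unrelated failures.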