[root@master tidb-cluster]# kubectl describe nodes node1

Name: node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=node1

kubernetes.io/os=linux

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"be:3b:19:d9:ac:ba"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 172.30.0.154

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 01 Mar 2020 12:00:41 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: node1

AcquireTime: <unset>

RenewTime: Tue, 17 Mar 2020 11:30:38 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

MemoryPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:01:42 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.7.10.154

Hostname: node1

Capacity:

cpu: 8

ephemeral-storage: 51175Mi

hugepages-2Mi: 0

memory: 20607492Ki

pods: 110

Allocatable:

cpu: 8

ephemeral-storage: 48294789041

hugepages-2Mi: 0

memory: 20505092Ki

pods: 110

System Info:

Machine ID: b2d71c4f4af44ca09bbd58f3a38cb0ae

System UUID: 564DB120-B5BF-C553-92B9-9CDE4A16197C

Boot ID: 1442ebad-b76b-44c5-b973-1df392753eb7

Kernel Version: 3.10.0-862.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.6

Kubelet Version: v1.17.3

Kube-Proxy Version: v1.17.3

PodCIDR: 10.244.1.0/24

PodCIDRs: 10.244.1.0/24

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

default itswk-deployment-f96f5f7b4-k7lbt 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d13h

kube-system kube-flannel-ds-amd64-nxwfd 100m (1%) 100m (1%) 50Mi (0%) 50Mi (0%) 15d

kube-system kube-proxy-vwtsh 0 (0%) 0 (0%) 0 (0%) 0 (0%) 15d

kube-system local-volume-provisioner-rnjz8 100m (1%) 100m (1%) 100Mi (0%) 100Mi (0%) 115m

kube-system tiller-deploy-6d8dfbb696-89bvl 0 (0%) 0 (0%) 0 (0%) 0 (0%) 5d17h

kubernetes-dashboard kubernetes-dashboard-866f987876-l9s7d 0 (0%) 0 (0%) 0 (0%) 0 (0%) 12d

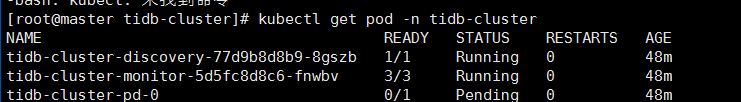

tidb-cluster tidb-cluster-discovery-77d9b8d8b9-8gszb 80m (1%) 250m (3%) 50Mi (0%) 150Mi (0%) 70m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

cpu 280m (3%) 450m (5%)

memory 200Mi (0%) 300Mi (1%)

ephemeral-storage 0 (0%) 0 (0%)

Events: <none>

[root@master tidb-cluster]# clear

[root@master tidb-cluster]# kubectl describe nodes node1

Name: node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=node1

kubernetes.io/os=linux

Annotations: flannel.alpha.coreos.com/backend-data: {"VtepMAC":"be:3b:19:d9:ac:ba"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 172.30.0.154

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 01 Mar 2020 12:00:41 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: node1

AcquireTime: <unset>

RenewTime: Tue, 17 Mar 2020 11:31:38 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

MemoryPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:00:41 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Tue, 17 Mar 2020 11:26:46 +0800 Sun, 01 Mar 2020 12:01:42 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.7.10.154

Hostname: node1

Capacity:

cpu: 8

ephemeral-storage: 51175Mi

hugepages-2Mi: 0

memory: 20607492Ki

pods: 110

Allocatable:

cpu: 8

ephemeral-storage: 48294789041

hugepages-2Mi: 0

memory: 20505092Ki

pods: 110

System Info:

Machine ID: b2d71c4f4af44ca09bbd58f3a38cb0ae

System UUID: 564DB120-B5BF-C553-92B9-9CDE4A16197C

Boot ID: 1442ebad-b76b-44c5-b973-1df392753eb7

Kernel Version: 3.10.0-862.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.6

Kubelet Version: v1.17.3

Kube-Proxy Version: v1.17.3

PodCIDR: 10.244.1.0/24

PodCIDRs: 10.244.1.0/24

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

default itswk-deployment-f96f5f7b4-k7lbt 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d14h

kube-system kube-flannel-ds-amd64-nxwfd 100m (1%) 100m (1%) 50Mi (0%) 50Mi (0%) 15d

kube-system kube-proxy-vwtsh 0 (0%) 0 (0%) 0 (0%) 0 (0%) 15d

kube-system local-volume-provisioner-rnjz8 100m (1%) 100m (1%) 100Mi (0%) 100Mi (0%) 116m

kube-system tiller-deploy-6d8dfbb696-89bvl 0 (0%) 0 (0%) 0 (0%) 0 (0%) 5d17h

kubernetes-dashboard kubernetes-dashboard-866f987876-l9s7d 0 (0%) 0 (0%) 0 (0%) 0 (0%) 12d

tidb-cluster tidb-cluster-discovery-77d9b8d8b9-8gszb 80m (1%) 250m (3%) 50Mi (0%) 150Mi (0%) 71m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

cpu 280m (3%) 450m (5%)

memory 200Mi (0%) 300Mi (1%)

ephemeral-storage 0 (0%) 0 (0%)

Events: <none>