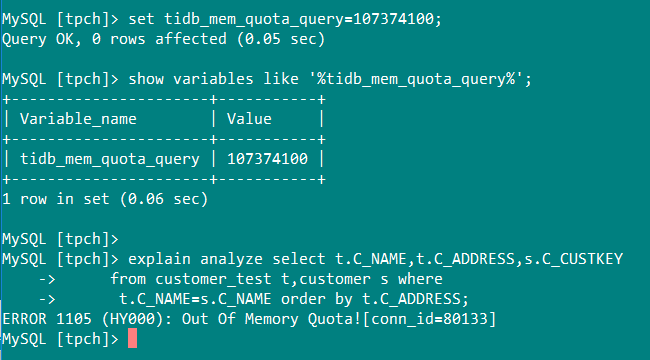

在做《08课-22课_TiDB_系统管理基础_实验手册.pdf》这个实验的时候,报错

MySQL [tpch]> explain analyze select t.C_NAME,t.C_ADDRESS,s.C_CUSTKEY

-> from customer_test t,customer s where

-> t.C_NAME=s.C_NAME order by t.C_ADDRESS;

ERROR 1105 (HY000): Out Of Memory Quota![conn_id=80133]

MySQL [tpch]>

有什么处理办法吗?

1 个赞

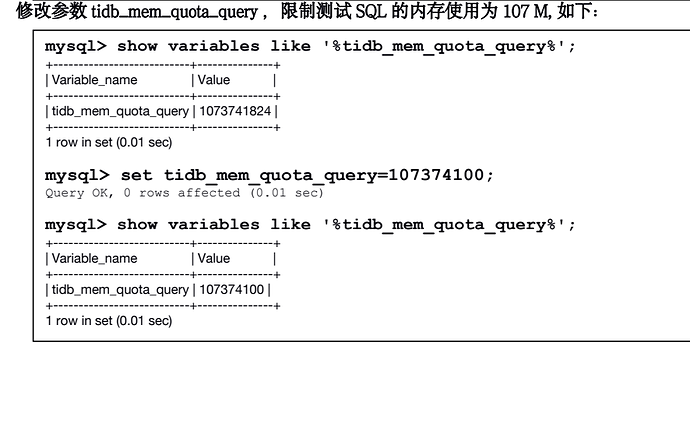

可以先放大 session 级的 mem-quota-query 参数

set tidb_mem_quota_query=16073741824;

如果想让参数持久化生效的话,再改下配置。

1 个赞

MySQL [tpch]> explain select t.C_NAME,t.C_ADDRESS,s.C_CUSTKEY

-> from customer_test t,customer s where

-> t.C_NAME=s.C_NAME order by t.C_ADDRESS;

+------------------------------------+-----------+-----------+----------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| id | estRows | task | access object | operator info |

+------------------------------------+-----------+-----------+----------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Sort_9 | 187500.00 | root | | tpch.customer_test.c_address |

| └─Projection_12 | 187500.00 | root | | tpch.customer_test.c_name, tpch.customer_test.c_address, tpch.customer.c_custkey |

| └─IndexJoin_16 | 187500.00 | root | | inner join, inner:IndexLookUp_15, outer key:tpch.customer.c_name, inner key:tpch.customer_test.c_name, equal cond:eq(tpch.customer.c_name, tpch.customer_test.c_name) |

| ├─TableReader_28(Build) | 150000.00 | root | | data:TableFullScan_27 |

| │ └─TableFullScan_27 | 150000.00 | cop[tikv] | table:s | keep order:false, stats:pseudo |

| └─IndexLookUp_15(Probe) | 1.25 | root | | |

| ├─IndexRangeScan_13(Build) | 1.25 | cop[tikv] | table:t, index:idx_cname(C_NAME) | range: decided by [eq(tpch.customer_test.c_name, tpch.customer.c_name)], keep order:false, stats:pseudo |

| └─TableRowIDScan_14(Probe) | 1.25 | cop[tikv] | table:t | keep order:false, stats:pseudo |

+------------------------------------+-----------+-----------+----------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------+

8 rows in set (0.05 sec)

1 个赞

db_user

2022 年1 月 5 日 02:38

9

看下你这个参数的配置是什么 oom-action

MySQL [tpcc]> show config where name like '%oom-action%';

+------+-----------------+------------+--------+

| Type | Instance | Name | Value |

+------+-----------------+------------+--------+

| tidb | 172.17.0.4:4000 | oom-action | cancel |

| tidb | 172.17.0.4:4001 | oom-action | cancel |

+------+-----------------+------------+--------+

2 rows in set (0.07 sec)

db_user

2022 年1 月 5 日 02:53

11

那就是了,value为log表示超过值记录日志,为cancel则表示超过值回滚,改成log就行了

2 个赞

Kongdom

2022 年1 月 5 日 03:02

14

2 个赞

像我这种的情况,如何修改呢?

[root@lhrtidb .tiup]# tiup cluster list

Starting component `cluster`: /root/.tiup/components/cluster/v1.8.1/tiup-cluster list

Name User Version Path PrivateKey

---- ---- ------- ---- ----------

[root@lhrtidb .tiup]# ps -ef|grep tiup

root 1587 199 0 Jan01 pts/0 00:00:28 tiup playground v5.3.0 --db 2 --pd 3 --kv 3 --host=0.0.0.0

root 1598 1587 0 Jan01 pts/0 00:00:37 /root/.tiup/components/playground/v1.6.1/tiup-playground v5.3.0 --db 2 --pd 3 --kv 3 --host=0.0.0.0

root 1618 1598 5 Jan01 pts/0 04:56:31 /root/.tiup/components/prometheus/v5.3.0/prometheus/prometheus --config.file=/root/.tiup/data/StFki1x/prometheus/prometheus.yml --web.external-url=http://0.0.0.0:9090 --web.listen-address=0.0.0.0:9090 --storage.tsdb.path=/root/.tiup/data/StFki1x/prometheus/data

root 1652 1598 4 Jan01 pts/0 04:29:05 /root/.tiup/components/grafana/v5.3.0/bin/grafana-server --homepath /root/.tiup/data/StFki1x/grafana --config /root/.tiup/data/StFki1x/grafana/conf/custom.ini cfg:default.paths.logs=/root/.tiup/data/StFki1x/grafana/log

root 1662 1598 12 Jan01 pts/0 11:10:55 /root/.tiup/components/pd/v5.3.0/pd-server --name=pd-0 --data-dir=/root/.tiup/data/StFki1x/pd-0/data --peer-urls=http://172.17.0.4:2380 --advertise-peer-urls=http://172.17.0.4:2380 --client-urls=http://172.17.0.4:2379 --advertise-client-urls=http://172.17.0.4:2379 --log-file=/root/.tiup/data/StFki1x/pd-0/pd.log --initial-cluster=pd-0=http://172.17.0.4:2380,pd-1=http://172.17.0.4:2381,pd-2=http://172.17.0.4:2383

root 1673 1598 4 Jan01 pts/0 03:43:42 /root/.tiup/components/pd/v5.3.0/pd-server --name=pd-1 --data-dir=/root/.tiup/data/StFki1x/pd-1/data --peer-urls=http://172.17.0.4:2381 --advertise-peer-urls=http://172.17.0.4:2381 --client-urls=http://172.17.0.4:2382 --advertise-client-urls=http://172.17.0.4:2382 --log-file=/root/.tiup/data/StFki1x/pd-1/pd.log --initial-cluster=pd-0=http://172.17.0.4:2380,pd-1=http://172.17.0.4:2381,pd-2=http://172.17.0.4:2383

root 1682 1598 4 Jan01 pts/0 03:43:36 /root/.tiup/components/pd/v5.3.0/pd-server --name=pd-2 --data-dir=/root/.tiup/data/StFki1x/pd-2/data --peer-urls=http://172.17.0.4:2383 --advertise-peer-urls=http://172.17.0.4:2383 --client-urls=http://172.17.0.4:2384 --advertise-client-urls=http://172.17.0.4:2384 --log-file=/root/.tiup/data/StFki1x/pd-2/pd.log --initial-cluster=pd-0=http://172.17.0.4:2380,pd-1=http://172.17.0.4:2381,pd-2=http://172.17.0.4:2383

root 1699 1598 3 Jan01 pts/0 03:26:36 /root/.tiup/components/tikv/v5.3.0/tikv-server --addr=172.17.0.4:20160 --advertise-addr=172.17.0.4:20160 --status-addr=172.17.0.4:20180 --pd=http://172.17.0.4:2379,http://172.17.0.4:2382,http://172.17.0.4:2384 --config=/root/.tiup/data/StFki1x/tikv-0/tikv.toml --data-dir=/root/.tiup/data/StFki1x/tikv-0/data --log-file=/root/.tiup/data/StFki1x/tikv-0/tikv.log

root 1703 1598 3 Jan01 pts/0 03:06:01 /root/.tiup/components/tikv/v5.3.0/tikv-server --addr=172.17.0.4:20161 --advertise-addr=172.17.0.4:20161 --status-addr=172.17.0.4:20181 --pd=http://172.17.0.4:2379,http://172.17.0.4:2382,http://172.17.0.4:2384 --config=/root/.tiup/data/StFki1x/tikv-1/tikv.toml --data-dir=/root/.tiup/data/StFki1x/tikv-1/data --log-file=/root/.tiup/data/StFki1x/tikv-1/tikv.log

root 1706 1598 3 Jan01 pts/0 03:27:34 /root/.tiup/components/tikv/v5.3.0/tikv-server --addr=172.17.0.4:20162 --advertise-addr=172.17.0.4:20162 --status-addr=172.17.0.4:20182 --pd=http://172.17.0.4:2379,http://172.17.0.4:2382,http://172.17.0.4:2384 --config=/root/.tiup/data/StFki1x/tikv-2/tikv.toml --data-dir=/root/.tiup/data/StFki1x/tikv-2/data --log-file=/root/.tiup/data/StFki1x/tikv-2/tikv.log

root 1711 1598 6 Jan01 pts/0 05:33:09 /root/.tiup/components/tidb/v5.3.0/tidb-server -P 4000 --store=tikv --host=172.17.0.4 --status=10080 --path=172.17.0.4:2379,172.17.0.4:2382,172.17.0.4:2384 --log-file=/root/.tiup/data/StFki1x/tidb-0/tidb.log

root 1747 1598 4 Jan01 pts/0 04:27:55 /root/.tiup/components/tidb/v5.3.0/tidb-server -P 4001 --store=tikv --host=172.17.0.4 --status=10081 --path=172.17.0.4:2379,172.17.0.4:2382,172.17.0.4:2384 --log-file=/root/.tiup/data/StFki1x/tidb-1/tidb.log

root 2308 1598 13 Jan01 pts/0 12:10:23 /root/.tiup/components/tiflash/v5.3.0/tiflash/tiflash server --config-file=/root/.tiup/data/StFki1x/tiflash-0/tiflash.toml

root 19028 32213 0 11:06 pts/6 00:00:00 grep --color=auto tiup

1 个赞

db_user

2022 年1 月 5 日 03:13

17

试下在线修改集群配置,目前还是实验特性,不过你这种环境无所谓了com-action=‘log’

Kongdom

2022 年1 月 5 日 03:14

18

嗯,这种方式创建的集群,不适用tiup cluster edit-config的方式

1 个赞

命令不支持哟,如下:

MySQL [tpcc]> set config tidb oom-action='log';

ERROR 1105 (HY000): TiDB doesn't support to change configs online, please use SQL variables