To help us work efficiently, please provide the following information. A clear problem description gets resolved faster:

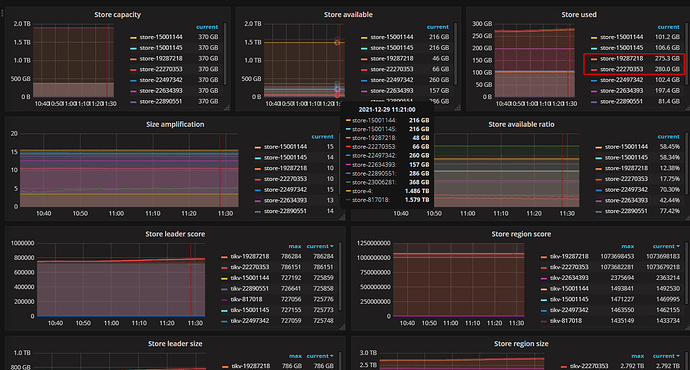

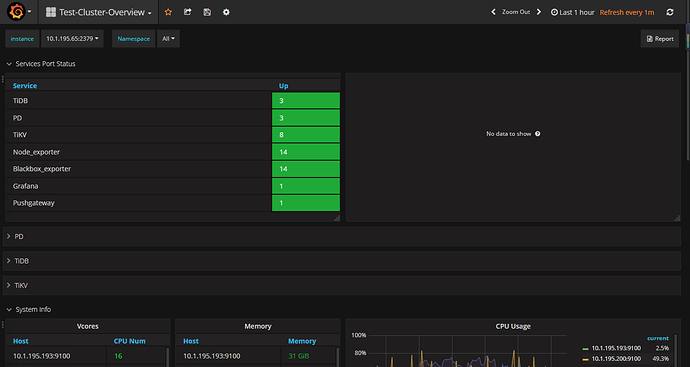

[TiDB environment] 3 TiDB, 3 PD, and 8 TiKV nodes, all using the official configuration. Data disks: 500 GB for TiDB, 200 GB for PD, 350 GB for TiKV, all SSD.

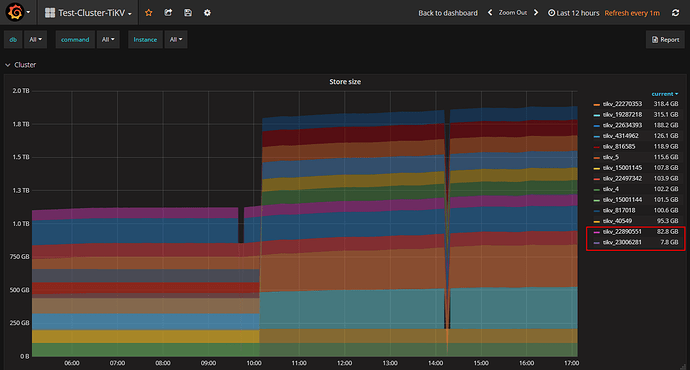

[Overview] Two TiKV nodes hold far more data than the other nodes.

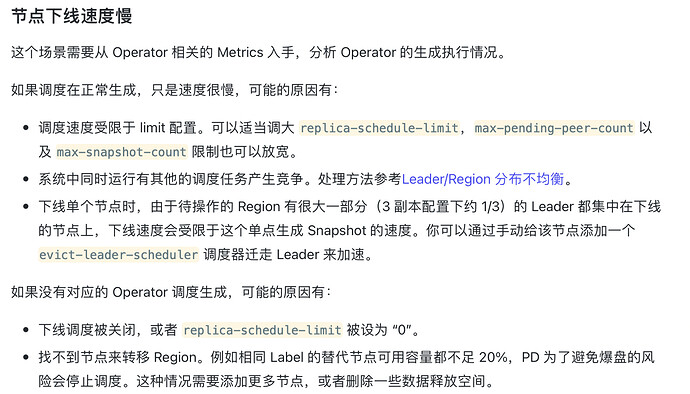

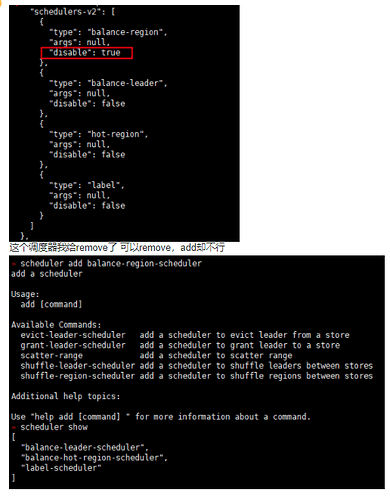

[Background] One TiKV node was brought online today, and two TiKV nodes are currently being taken offline.

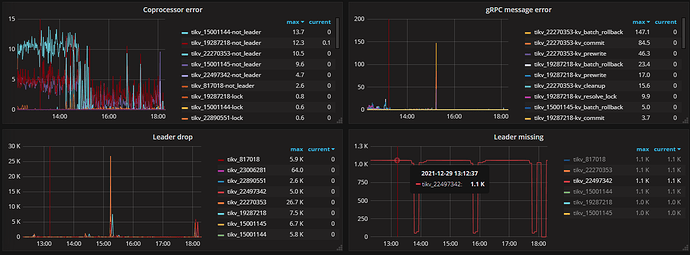

[Symptom] The data synchronization workload hits `region unavailable` errors.

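[Business impact] Because two TiKV nodes are being taken offline, there are now two TiKV nodes whose disk usage is approaching 300 GB, and I am worried their disks will fill up. The other TiKV nodes still have plenty of free space, and usage on the newly added node is also low.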

[TiDB version] 2.1.9

[Attachments]

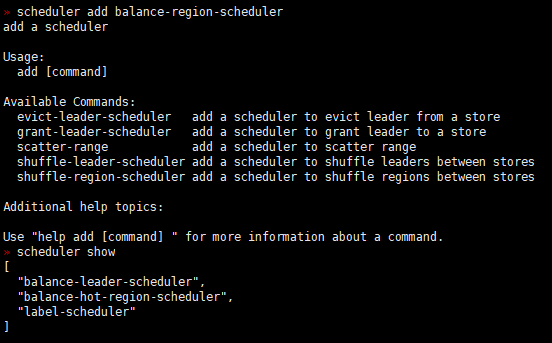

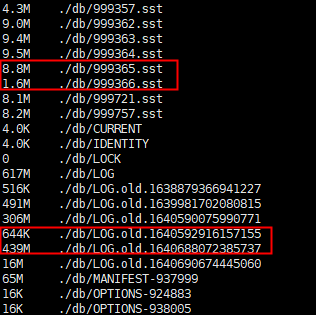

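- Relevant logs and monitoring

- TiKV disk space information

- PD dashboard information

- TiDB-Overview monitoring

- Logs from the affected components (covering one hour before and after the issue)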