为提高效率,请提供以下信息,问题描述清晰能够更快得到解决:

【 TiDB 使用环境】

centos

【现象】 业务和数据库现象

drainer异常宕机

【TiDB 版本】

v4.0.7

【附件】

- 相关日志、配置文件、Grafana 监控(https://metricstool.pingcap.com/)

- TiUP Cluster Display 信息

- TiUP CLuster Edit config 信息

- TiDB-Overview 监控

- 对应模块的 Grafana 监控(如有 BR、TiDB-binlog、TiCDC 等)

- 对应模块日志(包含问题前后 1 小时日志)

drainer同步到Kafka,今早四点一台drainer异常宕机

主要日志如下:

[2021/12/22 04:07:53.936 +08:00] [INFO] [async_producer.go:813] [“[sarama] producer/broker/0 maximum request accumulated, waiting for space

“]

[2021/12/22 04:07:58.490 +08:00] [INFO] [async_producer.go:918] [”[sarama] producer/broker/0 state change to [retrying] on bi2b_tidb_yecai/0 because kafka server: Request exceeded the user-specified time limit in the request.

“]

[2021/12/22 04:07:58.492 +08:00] [INFO] [async_producer.go:578] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [retrying-1]

“]

[2021/12/22 04:07:58.492 +08:00] [INFO] [async_producer.go:588] [”[sarama] producer/leader/bi2b_tidb_yecai/0 abandoning broker 0

“]

[2021/12/22 04:07:58.492 +08:00] [INFO] [async_producer.go:743] [”[sarama] producer/broker/0 state change to [closed] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:07:58.492 +08:00] [INFO] [async_producer.go:717] [”[sarama] producer/broker/0 input chan closed

“]

[2021/12/22 04:07:58.493 +08:00] [INFO] [async_producer.go:801] [”[sarama] producer/broker/0 shut down

“]

[2021/12/22 04:07:58.993 +08:00] [INFO] [client.go:772] [”[sarama] client/metadata fetching metadata for [bi2b_tidb_yecai] from broker kfk_ip:9092

“]

[2021/12/22 04:07:58.995 +08:00] [INFO] [async_producer.go:711] [”[sarama] producer/broker/0 starting up

“]

[2021/12/22 04:07:58.995 +08:00] [INFO] [async_producer.go:727] [”[sarama] producer/broker/0 state change to [open] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:07:58.995 +08:00] [INFO] [async_producer.go:570] [”[sarama] producer/leader/bi2b_tidb_yecai/0 selected broker 0

“]

[2021/12/22 04:07:58.997 +08:00] [INFO] [async_producer.go:813] [”[sarama] producer/broker/0 maximum request accumulated, waiting for space

“]

[2021/12/22 04:08:08.999 +08:00] [INFO] [async_producer.go:918] [”[sarama] producer/broker/0 state change to [retrying] on bi2b_tidb_yecai/0 because kafka server: Request exceeded the user-specified time limit in the request.

“]

[2021/12/22 04:08:09.000 +08:00] [INFO] [async_producer.go:594] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [flushing-1]

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:616] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [normal]

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:578] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [retrying-2]

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:588] [”[sarama] producer/leader/bi2b_tidb_yecai/0 abandoning broker 0

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:743] [”[sarama] producer/broker/0 state change to [closed] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:717] [”[sarama] producer/broker/0 input chan closed

“]

[2021/12/22 04:08:09.001 +08:00] [INFO] [async_producer.go:801] [”[sarama] producer/broker/0 shut down

“]

[2021/12/22 04:08:09.501 +08:00] [INFO] [client.go:772] [”[sarama] client/metadata fetching metadata for [bi2b_tidb_yecai] from broker kfk_ip:9092

“]

[2021/12/22 04:08:09.503 +08:00] [INFO] [async_producer.go:711] [”[sarama] producer/broker/0 starting up

“]

[2021/12/22 04:08:09.504 +08:00] [INFO] [async_producer.go:727] [”[sarama] producer/broker/0 state change to [open] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:08:09.504 +08:00] [INFO] [async_producer.go:570] [”[sarama] producer/leader/bi2b_tidb_yecai/0 selected broker 0

“]

[2021/12/22 04:08:09.505 +08:00] [INFO] [async_producer.go:813] [”[sarama] producer/broker/0 maximum request accumulated, waiting for space

“]

[2021/12/22 04:08:19.127 +08:00] [INFO] [syncer.go:257] [“write save point”] [ts=429946901167341601]

[2021/12/22 04:08:19.129 +08:00] [ERROR] [syncer.go:457] [“Failed to close syncer”] [error=“fail to push msg to kafka after 30s, check if kafka is up and working”] [errorVerbose=“fail to push msg to kafka after 30s, check if kafka is up and working

github.com/pingcap/tidb-binlog/drainer/sync.(*KafkaSyncer).run

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/sync/kafka.go:236

runtime.goexit

\t/usr/local/go/src/runtime/asm_amd64.s:1357”]

[2021/12/22 04:08:19.507 +08:00] [INFO] [async_producer.go:918] [”[sarama] producer/broker/0 state change to [retrying] on bi2b_tidb_yecai/0 because kafka server: Request exceeded the user-specified time limit in the request.

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:594] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [flushing-2]

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:616] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [normal]

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:578] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [retrying-3]

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:588] [”[sarama] producer/leader/bi2b_tidb_yecai/0 abandoning broker 0

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:743] [”[sarama] producer/broker/0 state change to [closed] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:08:19.509 +08:00] [INFO] [async_producer.go:717] [”[sarama] producer/broker/0 input chan closed

“]

[2021/12/22 04:08:19.511 +08:00] [INFO] [async_producer.go:801] [”[sarama] producer/broker/0 shut down

“]

[2021/12/22 04:08:20.009 +08:00] [INFO] [client.go:772] [”[sarama] client/metadata fetching metadata for [bi2b_tidb_yecai] from broker ip:9092

“]

[2021/12/22 04:08:20.012 +08:00] [INFO] [async_producer.go:711] [”[sarama] producer/broker/0 starting up

“]

[2021/12/22 04:08:20.012 +08:00] [INFO] [async_producer.go:727] [”[sarama] producer/broker/0 state change to [open] on bi2b_tidb_yecai/0

“]

[2021/12/22 04:08:20.012 +08:00] [INFO] [async_producer.go:570] [”[sarama] producer/leader/bi2b_tidb_yecai/0 selected broker 0

“]

[2021/12/22 04:08:20.013 +08:00] [INFO] [async_producer.go:813] [”[sarama] producer/broker/0 maximum request accumulated, waiting for space

“]

[2021/12/22 04:08:20.018 +08:00] [INFO] [async_producer.go:594] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [flushing-3]

“]

[2021/12/22 04:08:20.018 +08:00] [INFO] [async_producer.go:616] [”[sarama] producer/leader/bi2b_tidb_yecai/0 state change to [normal]

“]

[2021/12/22 04:08:29.129 +08:00] [INFO] [server.go:451] [“begin to close drainer server”]

[2021/12/22 04:08:29.130 +08:00] [ERROR] [util.go:65] [“Recovered from panic”] [err=”"Waiting too long for Syncer.run to quit."”] [“real stack”=“github.com/pingcap/tidb-binlog/drainer.(*taskGroup).start.func1.1

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/util.go:67

runtime.gopanic

\t/usr/local/go/src/runtime/panic.go:679

github.com/pingcap/tidb-binlog/drainer.(*Syncer).run

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/syncer.go:463

github.com/pingcap/tidb-binlog/drainer.(*Syncer).Start

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/syncer.go:132

github.com/pingcap/tidb-binlog/drainer.(*Server).Start.func4

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/server.go:288

github.com/pingcap/tidb-binlog/drainer.(*taskGroup).start.func1

\t/home/jenkins/agent/workspace/build_binlog_multi_branch_v4.0.7/go/src/github.com/pingcap/tidb-binlog/drainer/util.go:75”] [name=syncer]

[2021/12/22 04:08:29.130 +08:00] [INFO] [util.go:72] [Exit] [name=syncer]

[2021/12/22 04:08:29.131 +08:00] [INFO] [server.go:416] [“has already update status”] [id=ip:8249]

[2021/12/22 04:08:29.131 +08:00] [INFO] [server.go:455] [“commit status done”]

[2021/12/22 04:08:29.131 +08:00] [INFO] [collector.go:135] [“publishBinlogs quit”]

[2021/12/22 04:08:29.131 +08:00] [INFO] [pump.go:77] [“pump is closing”] [id=ip:8250]

[2021/12/22 04:08:29.131 +08:00] [INFO] [util.go:72] [Exit] [name=heartbeat]

[2021/12/22 04:08:29.131 +08:00] [INFO] [pump.go:77] [“pump is closing”] [id=ip:8250]

[2021/12/22 04:08:29.131 +08:00] [INFO] [pump.go:77] [“pump is closing”] [id=ip:8250]

[2021/12/22 04:08:29.134 +08:00] [INFO] [util.go:72] [Exit] [name=collect]

[2021/12/22 04:08:29.134 +08:00] [INFO] [main.go:73] [“drainer exit”]

[2021/12/22 04:08:29.134 +08:00] [INFO] [server.go:470] [“drainer exit”]

[2021/12/22 04:08:29.134 +08:00] [INFO] [server.go:322] [“drainer http server stopped”] [error=“mux: listener closed”]

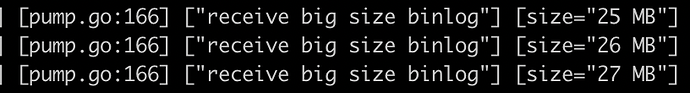

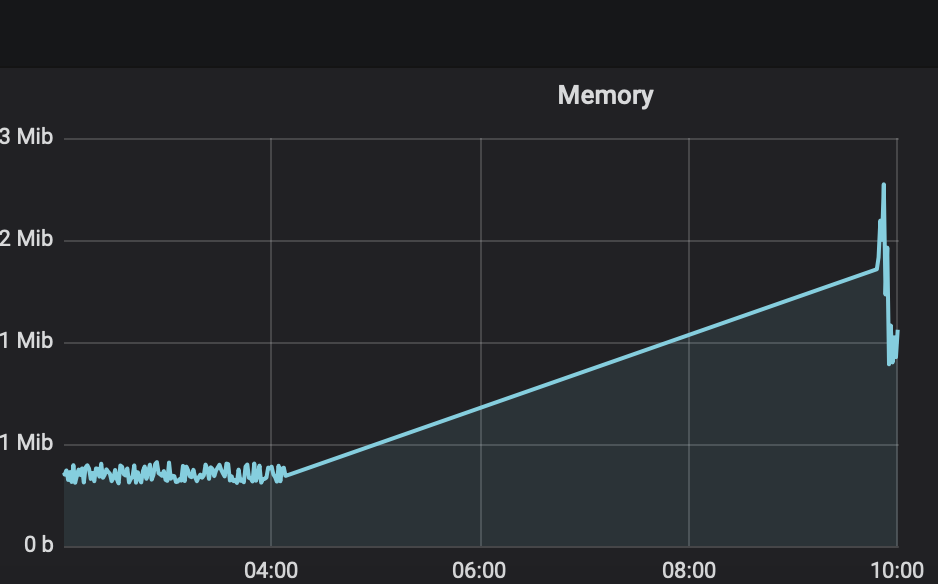

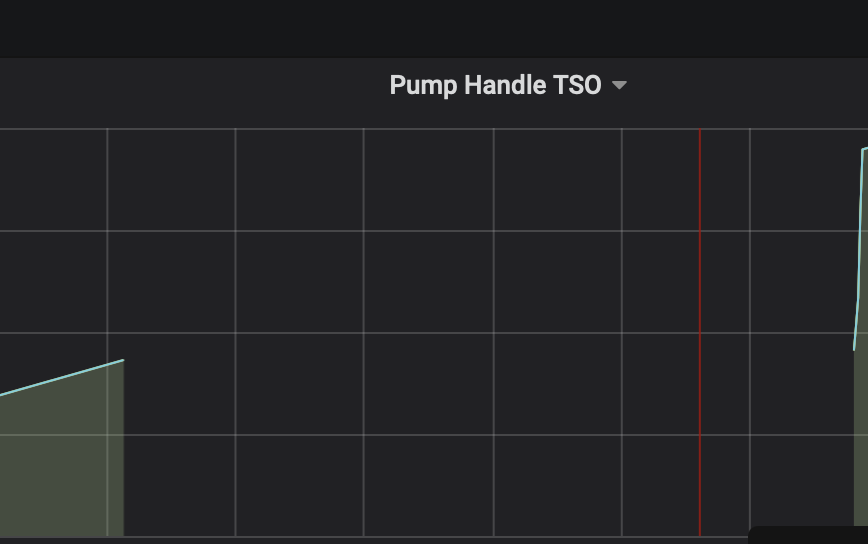

重要监控如下

看内存监控没有oom,只是kafka消费不起,不明白为啥会造成drainer的宕机,看了gc大佬的帖子,采用的解决办法是换成cdc,不知道binlog该怎么解决这个问题

若提问为性能优化、故障排查类问题,请下载脚本运行。终端输出的打印结果,请务必全选并复制粘贴上传。