【TiDB 版本】:

3.0.5

【问题描述】:

tikv.log

[2020/01/08 22:10:30.691 +08:00] [INFO] [raft.rs:723] ["[region 12857] 86225 became follower at term 219"]

[2020/01/08 22:10:30.691 +08:00] [INFO] [raft.rs:1108] ["[region 12857] 86225 [logterm: 218, index: 350, vote: 0] cast MsgRequestVote for 86229 [logterm: 218, index: 350] at term 219"]

[2020/01/08 22:10:30.701 +08:00] [INFO] [raft.rs:924] ["[region 37578] 82636 [term: 117] received a MsgRequestVote message with higher term from 82691 [term: 118]"]

[2020/01/08 22:10:30.701 +08:00] [INFO] [raft.rs:723] ["[region 37578] 82636 became follower at term 118"]

[2020/01/08 22:10:30.701 +08:00] [INFO] [raft.rs:1108] ["[region 37578] 82636 [logterm: 117, index: 130, vote: 0] cast MsgRequestVote for 82691 [logterm: 117, index: 130] at term 118"]

[2020/01/08 22:11:00.004 +08:00] [ERROR] [endpoint.rs:454] [error-response] [err="region message: \"peer is not leader for region 88025, leader may Some(id: 88028 store_id: 33067)\" not_leader { region_id: 88025 leader { id: 88028 store_id: 33067 } }"]

[2020/01/08 22:11:00.196 +08:00] [INFO] [process.rs:188] ["get snapshot failed"] [err="Request(message: \"peer is not leader for region 392, leader may Some(id: 65811 store_id: 33067)\" not_leader { region_id: 392 leader { id: 65811 store_id: 33067 } })"] [cid=178420]

[2020/01/08 22:11:01.885 +08:00] [INFO] [process.rs:188] ["get snapshot failed"] [err="Request(message: \"peer is not leader for region 2372, leader may Some(id: 35678 store_id: 33067)\" not_leader { region_id: 2372 leader { id: 35678 store_id: 33067 } })"] [cid=178584]

[2020/01/08 22:11:02.596 +08:00] [INFO] [process.rs:188] ["get snapshot failed"] [err="Request(message: \"peer is not leader for region 2920, leader may Some(id: 63219 store_id: 33067)\" not_leader { region_id: 2920 leader { id: 63219 store_id: 33067 } })"] [cid=178654]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:26] ["Welcome to TiKV."]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] []

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] ["Release Version: 3.0.5"]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] ["Git Commit Hash: 01c872bf105dc68dda346ceda087e994c56e2702"]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] ["Git Commit Branch: HEAD"]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] ["UTC Build Time: 2019-10-25 01:03:08"]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:28] ["Rust Version: rustc 1.37.0-nightly (0e4a56b4b 2019-06-13)"]

[2020/01/08 22:13:24.351 +08:00] [INFO] [mod.rs:30] []

@

系统日志

Jan 8 22:01:01 dev18 systemd: Removed slice User Slice of root.

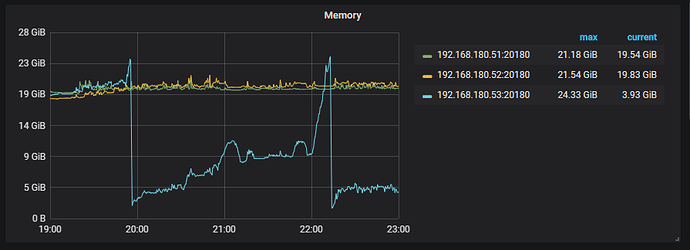

Jan 8 22:13:08 dev18 kernel: cop-low2 invoked oom-killer: gfp_mask=0x6000c0(GFP_KERNEL), order=0, oom_score_adj=0

Jan 8 22:13:08 dev18 kernel: CPU: 0 PID: 26175 Comm: cop-low2 Not tainted 5.0.3-1.el7.elrepo.x86_64 #1

Jan 8 22:13:08 dev18 kernel: Hardware name: Gigabyte Technology Co., Ltd. Z390 UD/Z390 UD, BIOS F3 08/29/2018

Jan 8 22:13:08 dev18 kernel: Call Trace:

Jan 8 22:13:08 dev18 kernel: dump_stack+0x63/0x88

Jan 8 22:13:08 dev18 kernel: dump_header+0x56/0x314

Jan 8 22:13:08 dev18 kernel: ? mem_cgroup_scan_tasks+0x9c/0xf0

Jan 8 22:13:08 dev18 kernel: oom_kill_process+0x26b/0x290

Jan 8 22:13:08 dev18 kernel: out_of_memory+0x140/0x4b0

Jan 8 22:13:08 dev18 kernel: mem_cgroup_out_of_memory+0x4b/0x80

Jan 8 22:13:08 dev18 kernel: try_charge+0x6e2/0x750

Jan 8 22:13:08 dev18 kernel: ? __alloc_pages_nodemask+0x133/0x2e0

Jan 8 22:13:08 dev18 kernel: mem_cgroup_try_charge+0x8c/0x1e0

Jan 8 22:13:08 dev18 kernel: mem_cgroup_try_charge_delay+0x22/0x50

Jan 8 22:13:08 dev18 kernel: do_anonymous_page+0x11a/0x650

Jan 8 22:13:08 dev18 kernel: __handle_mm_fault+0xc1d/0xee0

Jan 8 22:13:08 dev18 kernel: ? __switch_to_asm+0x40/0x70

Jan 8 22:13:08 dev18 kernel: handle_mm_fault+0x102/0x220

Jan 8 22:13:08 dev18 kernel: __do_page_fault+0x1f9/0x480

Jan 8 22:13:08 dev18 kernel: do_page_fault+0x37/0x140

Jan 8 22:13:08 dev18 kernel: ? page_fault+0x8/0x30

Jan 8 22:13:08 dev18 kernel: page_fault+0x1e/0x30

Jan 8 22:13:08 dev18 kernel: RIP: 0033:0x7f367e5cc822

Jan 8 22:13:08 dev18 kernel: Code: e0 f3 0f 6f 5e d0 f3 0f 6f 66 c0 f3 0f 6f 6e b0 f3 0f 6f 76 a0 f3 0f 6f 7e 90 f3 44 0f 6f 46 80 66 0f 7f 4f f0 66 0f 7f 57 e0 <66> 0f 7f 5f d0 66 0f 7f 67 c0 66 0f 7f 6f b0 66 0f 7f 77 a0 66 0f

Jan 8 22:13:08 dev18 kernel: RSP: 002b:00007f36060531f8 EFLAGS: 00010202

Jan 8 22:13:08 dev18 kernel: RAX: 00007f2a00f11007 RBX: 00007f3606053420 RCX: 000000000007af19

Jan 8 22:13:08 dev18 kernel: RDX: 000000000003af19 RSI: 00007f35cb89f919 RDI: 00007f2a00f4c020

Jan 8 22:13:08 dev18 kernel: RBP: 000000000007af25 R08: 00007f2a00f8bf1c R09: 000000000000000c

Jan 8 22:13:08 dev18 kernel: R10: 00007f3606057290 R11: 00007f367e603b80 R12: 00007f35cb864900

Jan 8 22:13:08 dev18 kernel: R13: 00007f3606053420 R14: 00007f3606053278 R15: 000000000007af25

Jan 8 22:13:08 dev18 kernel: memory: usage 26214400kB, limit 26214400kB, failcnt 1086825

Jan 8 22:13:08 dev18 kernel: memory+swap: usage 26214400kB, limit 9007199254740988kB, failcnt 0

Jan 8 22:13:08 dev18 kernel: kmem: usage 66828kB, limit 9007199254740988kB, failcnt 0

Jan 8 22:13:08 dev18 kernel: Memory cgroup stats for /system.slice/tikv-20160.service: cache:288KB rss:26147272KB rss_huge:12978176KB shmem:0KB mapped_file:0KB dirty:396KB writeback:0KB swap:0KB inactive_anon:0KB active_anon:26147520KB inactive_file:0KB active_file:4KB unevictable:0KB

Jan 8 22:13:08 dev18 kernel: Tasks state (memory values in pages):

Jan 8 22:13:08 dev18 kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Jan 8 22:13:08 dev18 kernel: [ 26096] 1000 26096 13355166 6539613 60739584 0 0 tikv-server

Jan 8 22:13:08 dev18 kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,oom_memcg=/system.slice/tikv-20160.service,task_memcg=/system.slice/tikv-20160.service,task=tikv-server,pid=26096,uid=1000

Jan 8 22:13:08 dev18 kernel: Memory cgroup out of memory: Kill process 26096 (tikv-server) score 1000 or sacrifice child

Jan 8 22:13:08 dev18 kernel: Killed process 26096 (tikv-server) total-vm:53420664kB, anon-rss:26138716kB, file-rss:19736kB, shmem-rss:0kB

Jan 8 22:13:09 dev18 kernel: oom_reaper: reaped process 26096 (tikv-server), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB

Jan 8 22:13:09 dev18 systemd: tikv-20160.service: main process exited, code=killed, status=9/KILL

Jan 8 22:13:09 dev18 systemd: Unit tikv-20160.service entered failed state.

Jan 8 22:13:09 dev18 systemd: tikv-20160.service failed.

Jan 8 22:13:24 dev18 systemd: tikv-20160.service holdoff time over, scheduling restart.

Jan 8 22:13:24 dev18 systemd: Stopped tikv-20160 service.

Jan 8 22:13:24 dev18 systemd: Started tikv-20160 service.

Jan 8 22:13:24 dev18 run_tikv.sh: sync ...

Jan 8 22:13:24 dev18 run_tikv.sh: real#0110m0.003s

Jan 8 22:13:24 dev18 run_tikv.sh: user#0110m0.001s

Jan 8 22:13:24 dev18 run_tikv.sh: sys#0110m0.000s

Jan 8 22:13:24 dev18 run_tikv.sh: ok

Jan 8 23:01:02 dev18 systemd: Created slice User Slice of root.