config show all

```
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 config show all
{
  "client-urls": "http://192.168.181.56:2379",
  "peer-urls": "http://192.168.181.56:2380",
  "advertise-client-urls": "http://192.168.181.56:2379",
  "advertise-peer-urls": "http://192.168.181.56:2380",
  "name": "pd_dev27",
  "data-dir": "/home/tidb/deploy/data.pd",
  "force-new-cluster": false,
  "enable-grpc-gateway": true,
  "initial-cluster": "pd_dev11=http://192.168.180.33:2380,pd_dev26=http://192.168.181.55:2380,pd_dev27=http://192.168.181.56:2380",
  "initial-cluster-state": "new",
  "join": "",
  "lease": 3,
  "log": {
    "level": "info",
    "format": "text",
    "disable-timestamp": false,
    "file": {
      "filename": "/home/tidb/deploy/log/pd.log",
      "log-rotate": true,
      "max-size": 300,
      "max-days": 0,
      "max-backups": 0
    },
    "development": false,
    "disable-caller": false,
    "disable-stacktrace": false,
    "disable-error-verbose": true,
    "sampling": null
  },
  "log-file": "",
  "log-level": "",
  "tso-save-interval": "3s",
  "metric": {
    "job": "pd_dev27",
    "address": "",
    "interval": "15s"
  },
  "schedule": {
    "max-snapshot-count": 3,
    "max-pending-peer-count": 16,
    "max-merge-region-size": 2,
    "max-merge-region-keys": 2000,
    "split-merge-interval": "1h0m0s",
    "enable-one-way-merge": "false",
    "patrol-region-interval": "10ms",
    "max-store-down-time": "30m0s",
    "leader-schedule-limit": 4,
    "region-schedule-limit": 4,
    "replica-schedule-limit": 8,
    "merge-schedule-limit": 16,
    "hot-region-schedule-limit": 4,
    "hot-region-cache-hits-threshold": 3,
    "store-balance-rate": 15,
    "tolerant-size-ratio": 5,
    "low-space-ratio": 0.8,
    "high-space-ratio": 0.6,
    "scheduler-max-waiting-operator": 3,
    "disable-raft-learner": "false",
    "disable-remove-down-replica": "false",
    "disable-replace-offline-replica": "false",
    "disable-make-up-replica": "false",
    "disable-remove-extra-replica": "false",
    "disable-location-replacement": "false",
    "disable-namespace-relocation": "false",
    "schedulers-v2": [
      {
        "type": "balance-region",
        "args": null,
        "disable": false
      },
      {
        "type": "balance-leader",
        "args": null,
        "disable": false
      },
      {
        "type": "hot-region",
        "args": null,
        "disable": false
      },
      {
        "type": "label",
        "args": null,
        "disable": false
      }
    ]
  },
  "replication": {
    "max-replicas": 3,
    "location-labels": "",
    "strictly-match-label": "false"
  },
  "namespace": {},
  "pd-server": {
    "use-region-storage": "true"
  },
  "cluster-version": "3.0.5",
  "quota-backend-bytes": "0B",
  "auto-compaction-mode": "periodic",
  "auto-compaction-retention-v2": "1h",
  "TickInterval": "500ms",
  "ElectionInterval": "3s",
  "PreVote": true,
  "security": {
    "cacert-path": "",
    "cert-path": "",
    "key-path": ""
  },
  "label-property": {},
  "WarningMsgs": null,
  "namespace-classifier": "table",
  "LeaderPriorityCheckInterval": "1m0s"
}
[tidb@dev10 ~]$
```
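The merge-related options live in the `schedule` section of this dump. `max-merge-region-size` (in MiB) and `max-merge-region-keys` are the limits above which PD will not merge a Region into a neighbor. `merge-schedule-limit` caps concurrent merge operators. Some boolean-looking options in this output are quoted strings, for example `"enable-one-way-merge": "false"`, while `force-new-cluster` is a real JSON `false`.

Below is a minimal sketch for pulling those settings out of a saved `config show all` dump. It assumes the JSON has already been loaded into a dict. The helper names `as_bool` and `merge_settings` are hypothetical, and the sample is an excerpt of the output above.

```python
import json

# Excerpt of the "schedule" section from the `config show all` output above.
SAMPLE = """{"schedule": {"max-merge-region-size": 2, "max-merge-region-keys": 2000,
  "split-merge-interval": "1h0m0s", "merge-schedule-limit": 16,
  "enable-one-way-merge": "false"}}"""


def as_bool(value):
    """Accept a real JSON boolean or the "true"/"false" strings seen in this dump."""
    if isinstance(value, bool):
        return value
    return str(value).lower() == "true"


def merge_settings(config):
    """Hypothetical helper: return the Region-merge options from a parsed config dict."""
    schedule = config["schedule"]
    return {
        "max_merge_region_size_mib": schedule["max-merge-region-size"],
        "max_merge_region_keys": schedule["max-merge-region-keys"],
        "split_merge_interval": schedule["split-merge-interval"],
        "merge_schedule_limit": schedule["merge-schedule-limit"],
        # Normalise the quoted "false" into a Python bool.
        "one_way_merge": as_bool(schedule["enable-one-way-merge"]),
    }


if __name__ == "__main__":
    print(merge_settings(json.loads(SAMPLE)))
```

Normalizing the string booleans up front avoids the easy mistake of treating the non-empty string `"false"` as truthy.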

store

```
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 store
{
  "count": 3,
  "stores": [
    {
      "store": {
        "id": 72015,
        "address": "192.168.180.51:20160",
        "version": "3.0.5",
        "state_name": "Up"
      },
      "status": {
        "capacity": "392.7GiB",
        "available": "249.9GiB",
        "leader_count": 242,
        "leader_weight": 1,
        "leader_score": 8344,
        "leader_size": 8344,
        "region_count": 6202,
        "region_weight": 1,
        "region_score": 24883,
        "region_size": 24883,
        "start_ts": "2020-01-07T17:53:36+08:00",
        "last_heartbeat_ts": "2020-01-08T16:02:22.388962962+08:00",
        "uptime": "22h8m46.388962962s"
      }
    },
    {
      "store": {
        "id": 33067,
        "address": "192.168.180.52:20160",
        "version": "3.0.5",
        "state_name": "Up"
      },
      "status": {
        "capacity": "392.7GiB",
        "available": "345.1GiB",
        "leader_count": 2641,
        "leader_weight": 1,
        "leader_score": 8250,
        "leader_size": 8250,
        "region_count": 6202,
        "region_weight": 1,
        "region_score": 24883,
        "region_size": 24883,
        "start_ts": "2020-01-07T17:52:21+08:00",
        "last_heartbeat_ts": "2020-01-08T16:02:25.188217127+08:00",
        "uptime": "22h10m4.188217127s"
      }
    },
    {
      "store": {
        "id": 72014,
        "address": "192.168.180.53:20160",
        "version": "3.0.5",
        "state_name": "Up"
      },
      "status": {
        "capacity": "392.7GiB",
        "available": "230.5GiB",
        "leader_count": 3319,
        "leader_weight": 1,
        "leader_score": 8289,
        "leader_size": 8289,
        "region_count": 6202,
        "region_weight": 1,
        "region_score": 24883,
        "region_size": 24883,
        "start_ts": "2020-01-07T17:54:28+08:00",
        "last_heartbeat_ts": "2020-01-08T16:02:30.092476143+08:00",
        "uptime": "22h8m2.092476143s"
      }
    }
  ]
}
[tidb@dev10 ~]$
```
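The three stores report the same `region_count` (6202) and nearly equal `leader_score`, which equals `leader_size` on every store here. Their `leader_count` values, however, range from 242 to 3319, and their free space differs noticeably.

Below is a minimal sketch that puts those per-store figures side by side. It assumes the `store` output has been parsed into a dict and that sizes use binary suffixes such as `GiB`, as in this output. The function names are hypothetical, and the sample copies only a few fields from above.

```python
import re

# Binary unit multipliers for the size strings pd-ctl prints, e.g. "392.7GiB".
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def parse_size(text):
    """Convert a string such as "249.9GiB" to bytes (only the units in UNITS)."""
    match = re.fullmatch(r"([0-9.]+)\s*([A-Za-z]+)", text)
    if not match or match.group(2) not in UNITS:
        raise ValueError(f"unrecognised size: {text!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def summarize_stores(store_output):
    """Yield (address, leader_count, leader_score, region_count, used_fraction) per store."""
    for entry in store_output["stores"]:
        status = entry["status"]
        capacity = parse_size(status["capacity"])
        available = parse_size(status["available"])
        yield (
            entry["store"]["address"],
            status["leader_count"],
            status["leader_score"],
            status["region_count"],
            (capacity - available) / capacity,
        )


# Values copied from the `store` output above (other fields omitted).
SAMPLE = {"stores": [
    {"store": {"address": "192.168.180.51:20160"},
     "status": {"capacity": "392.7GiB", "available": "249.9GiB",
                "leader_count": 242, "leader_score": 8344, "region_count": 6202}},
    {"store": {"address": "192.168.180.52:20160"},
     "status": {"capacity": "392.7GiB", "available": "345.1GiB",
                "leader_count": 2641, "leader_score": 8250, "region_count": 6202}},
    {"store": {"address": "192.168.180.53:20160"},
     "status": {"capacity": "392.7GiB", "available": "230.5GiB",
                "leader_count": 3319, "leader_score": 8289, "region_count": 6202}},
]}

if __name__ == "__main__":
    for addr, leaders, score, regions, used in summarize_stores(SAMPLE):
        print(f"{addr}  leaders={leaders:5d}  leader_score={score}  "
              f"regions={regions}  used={used:.1%}")
```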

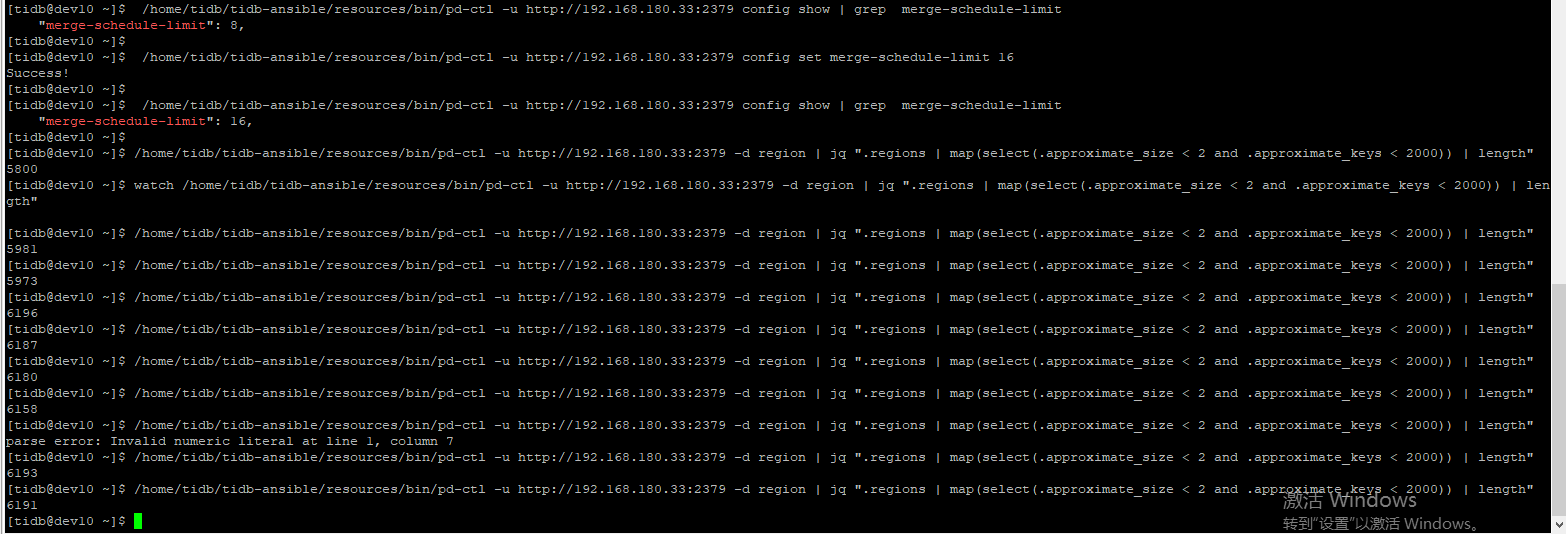

region count

```
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 -d region | jq ".regions | map(select(.approximate_size < 2 and .approximate_keys < 2000)) | length"
5792
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 -d region | jq ".regions | map(select(.approximate_size < 2 and .approximate_keys < 2000)) | length"
5792
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 -d region | jq ".regions | map(select(.approximate_size < 2 and .approximate_keys < 2000)) | length"
5792
[tidb@dev10 ~]$ /home/tidb/tidb-ansible/resources/bin/pd-ctl -u http://192.168.180.33:2379 -d region | jq ".regions | map(select(.approximate_size < 2 and .approximate_keys < 2000)) | length"
5792
[tidb@dev10 ~]$
```
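The `jq` filter above counts Regions whose `approximate_size` (MiB) is below 2 and whose `approximate_keys` is below 2000. These are the same numbers as `max-merge-region-size` and `max-merge-region-keys` in the `schedule` config.

Below is a minimal Python sketch of the same count. It assumes the `pd-ctl -d region` output has been parsed into a dict with a top-level `regions` list, as the jq filter implies. The sample Region records and the function name are hypothetical.

```python
import json


def count_small_regions(region_output, max_size_mib=2, max_keys=2000):
    """Count Regions strictly below both thresholds, mirroring
    `map(select(.approximate_size < 2 and .approximate_keys < 2000)) | length`."""
    return sum(
        1
        for region in region_output["regions"]
        # jq turns a missing field into null, and null sorts below every number,
        # so defaulting a missing field to 0 gives the same comparison result.
        if region.get("approximate_size", 0) < max_size_mib
        and region.get("approximate_keys", 0) < max_keys
    )


# Hypothetical Region records; real entries carry many more fields.
SAMPLE = json.loads("""{"count": 3, "regions": [
  {"id": 1, "approximate_size": 1, "approximate_keys": 10},
  {"id": 2, "approximate_size": 96, "approximate_keys": 850000},
  {"id": 3}
]}""")

if __name__ == "__main__":
    print(count_small_regions(SAMPLE))
```

For this cluster, take the 6202 Regions that each store reports as the total (three stores with `max-replicas` 3, so each store holds one replica of every Region). Then 5792 / 6202 ≈ 0.934, meaning roughly 93% of Regions sit below both merge thresholds, and the count stays at 5792 across the repeated runs.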