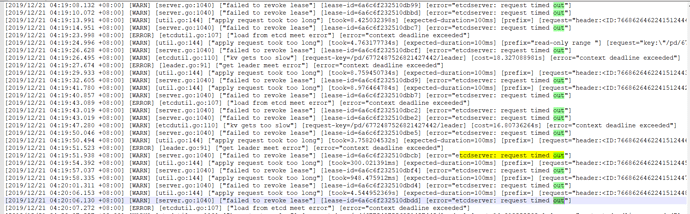

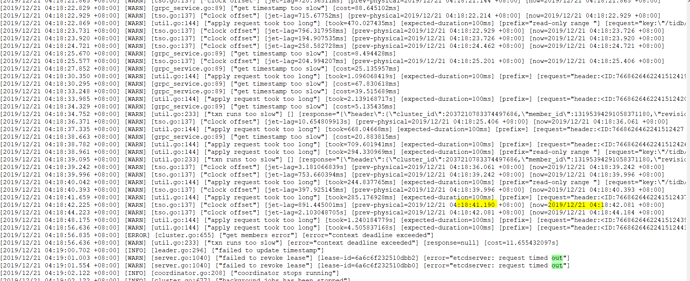

Importing data with sysbench fails with the following errors:

[2019/12/04 08:52:00.786 +08:00] [INFO] [region_cache.go:401] ["switch region peer to next due to send request fail"] [current="region ID: 894, meta: id:894 start_key:"t\200\000\000\000\000\000\0002_i\200\000\000\000\000\000\000\001\003\200\000\000\000\000e\330Z\003\200\000\000\000\000].\317" end_key:"t\200\000\000\000\000\000\0002_i\200\000\000\000\000\000\000\001\003\200\000\000\000\000qhT\003\200\000\000\000\0009\354>" region_epoch:<conf_ver:1 version:37 > peers:<id:895 store_id:1 > , peer: id:895 store_id:1 , addr: 127.0.0.1:20160, idx: 0"] [needReload=true] [error="no available connections"] [errorVerbose="no available connections github.com/pingcap/tidb/store/tikv.(*batchConn).getClientAndSend /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:472 github.com/pingcap/tidb/store/tikv.(*batchConn).batchSendLoop /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:451 runtime.goexit /usr/local/go/src/runtime/asm_amd64.s:1357"]

[2019/12/04 08:52:00.799 +08:00] [INFO] [region_cache.go:295] ["invalidate current region, because others failed on same store"] [region=714] [store=127.0.0.1:20160]

[2019/12/04 08:52:00.801 +08:00] [WARN] [client_batch.go:469] ["no available connections"] [target=127.0.0.1:20160]

[2019/12/04 08:52:00.801 +08:00] [INFO] [region_cache.go:902] ["mark store's regions need be refill"] [store=127.0.0.1:20160]

[2019/12/04 08:52:00.801 +08:00] [INFO] [region_cache.go:401] ["switch region peer to next due to send request fail"] [current="region ID: 714, meta: id:714 start_key:"t\200\000\000\000\000\000\0002_r\200\000\000\000\000\270\314\031" end_key:"t\200\000\000\000\000\000\0002_r\200\000\000\000\000\276z\345" region_epoch:<conf_ver:1 version:62 > peers:<id:715 store_id:1 > , peer: id:715 store_id:1 , addr: 127.0.0.1:20160, idx: 0"] [needReload=true] [error="no available connections"] [errorVerbose="no available connections github.com/pingcap/tidb/store/tikv.(*batchConn).getClientAndSend /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:472 github.com/pingcap/tidb/store/tikv.(*batchConn).batchSendLoop /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:451 runtime.goexit /usr/local/go/src/runtime/asm_amd64.s:1357"]

[2019/12/04 08:52:00.802 +08:00] [INFO] [region_cache.go:295] ["invalidate current region, because others failed on same store"] [region=4] [store=127.0.0.1:20160]

[2019/12/04 08:52:00.811 +08:00] [WARN] [client_batch.go:469] ["no available connections"] [target=127.0.0.1:20160]

[2019/12/04 08:52:00.811 +08:00] [INFO] [region_cache.go:902] ["mark store's regions need be refill"] [store=127.0.0.1:20160]

[2019/12/04 08:52:00.811 +08:00] [INFO] [region_cache.go:401] ["switch region peer to next due to send request fail"] [current="region ID: 4, meta: id:4 end_key:"t\200\000\000\000\000\000\000\005" region_epoch:<conf_ver:1 version:2 > peers:<id:5 store_id:1 > , peer: id:5 store_id:1 , addr: 127.0.0.1:20160, idx: 0"] [needReload=true] [error="no available connections"] [errorVerbose="no available connections github.com/pingcap/tidb/store/tikv.(*batchConn).getClientAndSend /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:472 github.com/pingcap/tidb/store/tikv.(*batchConn).batchSendLoop /home/jenkins/agent/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/client_batch.go:451 runtime.goexit /usr/local/go/src/runtime/asm_amd64.s:1357"]
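In case it helps with triage, here is a minimal sketch of how the TiDB log could be tallied by level, source location, and message, to see how often "no available connections" shows up compared with the region cache messages. It is not part of TiDB or sysbench. `ENTRY_RE` and `summarize` are hypothetical names of my own, and the entry layout (`[timestamp] [LEVEL] [file.go:line] ["message"]`) is only assumed from the excerpt in this post.

```python
import re
from collections import Counter

# Assumed entry header, based only on the log excerpt in this post:
#   [2019/12/04 08:52:00.801 +08:00] [WARN] [client_batch.go:469] ["message"]
# Both straight and typographic quotes are accepted, because forum
# software sometimes rewrites the quotes in pasted logs.
ENTRY_RE = re.compile(
    r'\[(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3} [+-]\d{2}:\d{2})\] '
    r'\[(?P<level>[A-Z]+)\] '
    r'\[(?P<src>[\w.]+:\d+)\] '
    r'\[["“](?P<msg>[^"”]*)["”]\]'
)


def summarize(log_text):
    """Count log entries grouped by (level, source location, message)."""
    counts = Counter()
    for match in ENTRY_RE.finditer(log_text):
        counts[(match["level"], match["src"], match["msg"])] += 1
    return counts


# Small hand-made sample in the same layout as the excerpt above.
sample = (
    '[2019/12/04 08:52:00.801 +08:00] [WARN] [client_batch.go:469] '
    '["no available connections"] [target=127.0.0.1:20160] '
    '[2019/12/04 08:52:00.802 +08:00] [INFO] [region_cache.go:295] '
    '["invalidate current region, because others failed on same store"] '
    '[region=4] [store=127.0.0.1:20160]'
)

for (level, src, msg), n in summarize(sample).most_common():
    print(n, level, src, msg)
```

Entries do not need to be on separate lines, since the pattern is matched anywhere in the text. Passing the full contents of the TiDB log file to `summarize` instead of `sample` gives the totals for the whole import run.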

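System: 16 cores, 32 GB RAM. The failure occurs while importing 50 GB of data. TiDB version: 3.0.6. The same problem also occurs on a TiDB alpha version. This is a single-machine deployment, installed from the tidb-v3.0.6 package.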