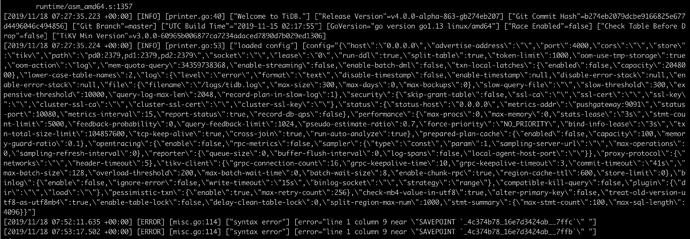

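I switched Hive's metastore database back to MySQL and captured the following execution log on the MySQL side while creating the partitioned table `dam.inn_part_table`: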

| event_time | user_host | thread_id | server_id | command_type | argument |
| --- | --- | --- | --- | --- | --- |
| 2019-11-18 13:33:59.271821 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TBL_PRIVS` (`TBL_GRANT_ID`,`CREATE_TIME`,`GRANT_OPTION`,`GRANTOR`,`GRANTOR_TYPE`,`PRINCIPAL_NAME`,`PRINCIPAL_TYPE`,`TBL_PRIV`,`TBL_ID`) VALUES (18,1574055239,1,'root','USER','root','USER','DELETE',7) |
| 2019-11-18 13:33:59.271336 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TBL_PRIVS` (`TBL_GRANT_ID`,`CREATE_TIME`,`GRANT_OPTION`,`GRANTOR`,`GRANTOR_TYPE`,`PRINCIPAL_NAME`,`PRINCIPAL_TYPE`,`TBL_PRIV`,`TBL_ID`) VALUES (17,1574055239,1,'root','USER','root','USER','UPDATE',7) |
| 2019-11-18 13:33:59.270776 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TBL_PRIVS` (`TBL_GRANT_ID`,`CREATE_TIME`,`GRANT_OPTION`,`GRANTOR`,`GRANTOR_TYPE`,`PRINCIPAL_NAME`,`PRINCIPAL_TYPE`,`TBL_PRIV`,`TBL_ID`) VALUES (16,1574055239,1,'root','USER','root','USER','SELECT',7) |
| 2019-11-18 13:33:59.267988 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TBL_PRIVS` (`TBL_GRANT_ID`,`CREATE_TIME`,`GRANT_OPTION`,`GRANTOR`,`GRANTOR_TYPE`,`PRINCIPAL_NAME`,`PRINCIPAL_TYPE`,`TBL_PRIV`,`TBL_ID`) VALUES (15,1574055239,1,'root','USER','root','USER','INSERT',7) |
| 2019-11-18 13:33:59.267256 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `PARTITION_KEYS` (`TBL_ID`,`PKEY_COMMENT`,`PKEY_NAME`,`PKEY_TYPE`,`INTEGER_IDX`) VALUES (7,null,'hour','string',1) |
| 2019-11-18 13:33:59.266913 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `PARTITION_KEYS` (`TBL_ID`,`PKEY_COMMENT`,`PKEY_NAME`,`PKEY_TYPE`,`INTEGER_IDX`) VALUES (7,null,'day','string',0) |
| 2019-11-18 13:33:59.266469 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TABLE_PARAMS` (`PARAM_VALUE`,`TBL_ID`,`PARAM_KEY`) VALUES ('1574055239',7,'transient_lastDdlTime') |
| 2019-11-18 13:33:59.265732 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `TBLS` (`TBL_ID`,`CREATE_TIME`,`DB_ID`,`LAST_ACCESS_TIME`,`OWNER`,`RETENTION`,`IS_REWRITE_ENABLED`,`SD_ID`,`TBL_NAME`,`TBL_TYPE`,`VIEW_EXPANDED_TEXT`,`VIEW_ORIGINAL_TEXT`) VALUES (7,1574055239,11,0,'root',0,0,7,'inn_part_table','MANAGED_TABLE',null,null) |
| 2019-11-18 13:33:59.265301 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `SDS` (`SD_ID`,`CD_ID`,`INPUT_FORMAT`,`IS_COMPRESSED`,`IS_STOREDASSUBDIRECTORIES`,`LOCATION`,`NUM_BUCKETS`,`OUTPUT_FORMAT`,`SERDE_ID`) VALUES (7,7,'org.apache.hadoop.mapred.TextInputFormat',0,0,'hdfs://hadoop-test/dam/warehouse/inn_part_table',-1,'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat',7) |
| 2019-11-18 13:33:59.265042 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'cluster','string',6) |
| 2019-11-18 13:33:59.264720 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'dst','string',5) |
| 2019-11-18 13:33:59.264459 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,'IP Address of the User','ip','string',4) |
| 2019-11-18 13:33:59.264144 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'src','string',3) |
| 2019-11-18 13:33:59.263791 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'ugi','string',2) |
| 2019-11-18 13:33:59.263424 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'cmd','string',1) |
| 2019-11-18 13:33:59.263058 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `COLUMNS_V2` (`CD_ID`,`COMMENT`,`COLUMN_NAME`,`TYPE_NAME`,`INTEGER_IDX`) VALUES (7,null,'time','bigint',0) |
| 2019-11-18 13:33:59.262606 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `CDS` (`CD_ID`) VALUES (7) |
| 2019-11-18 13:33:59.262032 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `SERDE_PARAMS` (`PARAM_VALUE`,`SERDE_ID`,`PARAM_KEY`) VALUES (' ',7,'field.delim') |
| 2019-11-18 13:33:59.261775 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `SERDE_PARAMS` (`PARAM_VALUE`,`SERDE_ID`,`PARAM_KEY`) VALUES (' ',7,'serialization.format') |
| 2019-11-18 13:33:59.260703 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | INSERT INTO `SERDES` (`SERDE_ID`,`NAME`,`SLIB`) VALUES (7,null,'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe') |
| 2019-11-18 13:33:59.215706 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 772 | 0 | Query | SELECT DISTINCT 'org.apache.hadoop.hive.metastore.model.MTable' AS `NUCLEUS_TYPE`,`A0`.`CREATE_TIME`,`A0`.`LAST_ACCESS_TIME`,`A0`.`OWNER`,`A0`.`RETENTION`,`A0`.`IS_REWRITE_ENABLED`,`A0`.`TBL_NAME`,`A0`.`TBL_TYPE`,`A0`.`TBL_ID` FROM `TBLS` `A0` LEFT OUTER JOIN `DBS` `B0` ON `A0`.`DB_ID` = `B0`.`DB_ID` WHERE `A0`.`TBL_NAME` = 'inn_part_table' AND `B0`.`NAME` = 'dam' |
| 2019-11-18 13:33:59.201805 | root[root] @ qdc-qtt-bigdata-emrtest-02 [10.0.2.46] | 776 | 0 | Query | insert into HIVE_LOCKS (hl_lock_ext_id, hl_lock_int_id, hl_txnid, hl_db, hl_table, hl_partition,hl_lock_state, hl_lock_type, hl_last_heartbeat, hl_user, hl_host, hl_agent_info) values(11, 1,0, 'dam', null, null, 'w', 'r', 1574055239000, 'root', 'qdc-qtt-bigdata-emrtest-02', 'root_20191118133359_3bfb0046-1378-4e2c-af66-beff3cd6a56d') |
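The log shows that one `CREATE TABLE` is spread across several metastore tables that are linked only by ids: `TBLS.SD_ID = 7` points at `SDS`, `SDS.CD_ID = 7` points at the `COLUMNS_V2` rows, `SDS.SERDE_ID = 7` points at `SERDES`, and `PARTITION_KEYS` hangs off `TBL_ID = 7`. The sketch below replays a trimmed version of those INSERTs into an in-memory SQLite database and joins them back into a table definition. It is a minimal illustration under stated assumptions, not Hive's own code: the schema is a simplified subset (not the real Hive metastore DDL), SQLite stands in for MySQL, `DB_ID = 11` being the `dam` database is inferred from the log, and `load_logged_rows` / `describe` are hypothetical helpers.

```python
import sqlite3

# Simplified subset of the Hive metastore schema, limited to tables/columns
# that appear in the captured log (DBS is only joined against there).
# Types and constraints are simplified; this is NOT the real Hive DDL.
SCHEMA = """
CREATE TABLE DBS (DB_ID INTEGER PRIMARY KEY, NAME TEXT);
CREATE TABLE SERDES (SERDE_ID INTEGER PRIMARY KEY, NAME TEXT, SLIB TEXT);
CREATE TABLE CDS (CD_ID INTEGER PRIMARY KEY);
CREATE TABLE COLUMNS_V2 (CD_ID INTEGER, COMMENT TEXT, COLUMN_NAME TEXT,
                         TYPE_NAME TEXT, INTEGER_IDX INTEGER);
CREATE TABLE SDS (SD_ID INTEGER PRIMARY KEY, CD_ID INTEGER, INPUT_FORMAT TEXT,
                  LOCATION TEXT, OUTPUT_FORMAT TEXT, SERDE_ID INTEGER);
CREATE TABLE TBLS (TBL_ID INTEGER PRIMARY KEY, DB_ID INTEGER, SD_ID INTEGER,
                   TBL_NAME TEXT, TBL_TYPE TEXT);
CREATE TABLE PARTITION_KEYS (TBL_ID INTEGER, PKEY_NAME TEXT, PKEY_TYPE TEXT,
                             INTEGER_IDX INTEGER);
"""


def load_logged_rows(conn):
    """Hypothetical helper: replay a trimmed version of the logged INSERTs."""
    # Assumption: DB_ID 11 is `dam` (TBLS uses DB_ID 11, and the existence
    # check SELECT looks the table up under database name 'dam').
    conn.execute("INSERT INTO DBS VALUES (11, 'dam')")
    conn.execute("INSERT INTO SERDES VALUES (7, NULL, "
                 "'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe')")
    conn.execute("INSERT INTO CDS VALUES (7)")
    columns = [("time", "bigint"), ("cmd", "string"), ("ugi", "string"),
               ("src", "string"), ("ip", "string"), ("dst", "string"),
               ("cluster", "string")]
    for idx, (name, typ) in enumerate(columns):
        comment = "IP Address of the User" if name == "ip" else None
        conn.execute("INSERT INTO COLUMNS_V2 VALUES (7, ?, ?, ?, ?)",
                     (comment, name, typ, idx))
    conn.execute("INSERT INTO SDS VALUES (7, 7, "
                 "'org.apache.hadoop.mapred.TextInputFormat', "
                 "'hdfs://hadoop-test/dam/warehouse/inn_part_table', "
                 "'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat', 7)")
    conn.execute("INSERT INTO TBLS VALUES (7, 11, 7, 'inn_part_table', 'MANAGED_TABLE')")
    conn.executemany("INSERT INTO PARTITION_KEYS VALUES (7, ?, 'string', ?)",
                     [("day", 0), ("hour", 1)])


def describe(conn, db, table):
    """Hypothetical helper: rebuild a definition via TBLS -> SDS -> CDS/SERDES."""
    base = ("FROM TBLS t JOIN DBS d ON t.DB_ID = d.DB_ID "
            "JOIN SDS s ON t.SD_ID = s.SD_ID ")
    where = "WHERE d.NAME = ? AND t.TBL_NAME = ? "
    cols = conn.execute(
        "SELECT c.COLUMN_NAME, c.TYPE_NAME " + base +
        "JOIN COLUMNS_V2 c ON c.CD_ID = s.CD_ID " + where +
        "ORDER BY c.INTEGER_IDX", (db, table)).fetchall()
    pkeys = conn.execute(
        "SELECT p.PKEY_NAME, p.PKEY_TYPE " + base +
        "JOIN PARTITION_KEYS p ON p.TBL_ID = t.TBL_ID " + where +
        "ORDER BY p.INTEGER_IDX", (db, table)).fetchall()
    location, serde = conn.execute(
        "SELECT s.LOCATION, se.SLIB " + base +
        "JOIN SERDES se ON se.SERDE_ID = s.SERDE_ID " + where,
        (db, table)).fetchone()
    return {"columns": cols, "partition_keys": pkeys,
            "location": location, "serde": serde}


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
load_logged_rows(conn)
info = describe(conn, "dam", "inn_part_table")
print(info["columns"])
print(info["partition_keys"])  # [('day', 'string'), ('hour', 'string')]
```

Note that the column list is reached through `SDS.CD_ID` rather than directly from `TBLS`, which is why `COLUMNS_V2` rows carry `CD_ID = 7` and no `TBL_ID`.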
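Because the log is listed newest-first, the write order is easier to see once the rows are sorted by `event_time`. The sketch below does that with the timestamps and thread ids from the log (statement text trimmed to its leading `INSERT INTO`/`FROM` part for brevity) and collapses consecutive rows that hit the same table. The `write_order` helper and the regex used to pull out the table name are illustrative assumptions, not part of any Hive or MySQL tooling.

```python
import re
from datetime import datetime
from itertools import groupby

# (event_time, thread_id, statement) from the log; statements are trimmed.
LOG = [
    ("2019-11-18 13:33:59.271821", 772, "INSERT INTO `TBL_PRIVS` ..."),  # DELETE
    ("2019-11-18 13:33:59.271336", 772, "INSERT INTO `TBL_PRIVS` ..."),  # UPDATE
    ("2019-11-18 13:33:59.270776", 772, "INSERT INTO `TBL_PRIVS` ..."),  # SELECT
    ("2019-11-18 13:33:59.267988", 772, "INSERT INTO `TBL_PRIVS` ..."),  # INSERT
    ("2019-11-18 13:33:59.267256", 772, "INSERT INTO `PARTITION_KEYS` ..."),  # hour
    ("2019-11-18 13:33:59.266913", 772, "INSERT INTO `PARTITION_KEYS` ..."),  # day
    ("2019-11-18 13:33:59.266469", 772, "INSERT INTO `TABLE_PARAMS` ..."),
    ("2019-11-18 13:33:59.265732", 772, "INSERT INTO `TBLS` ..."),
    ("2019-11-18 13:33:59.265301", 772, "INSERT INTO `SDS` ..."),
    ("2019-11-18 13:33:59.265042", 772, "INSERT INTO `COLUMNS_V2` ..."),  # cluster
    ("2019-11-18 13:33:59.264720", 772, "INSERT INTO `COLUMNS_V2` ..."),  # dst
    ("2019-11-18 13:33:59.264459", 772, "INSERT INTO `COLUMNS_V2` ..."),  # ip
    ("2019-11-18 13:33:59.264144", 772, "INSERT INTO `COLUMNS_V2` ..."),  # src
    ("2019-11-18 13:33:59.263791", 772, "INSERT INTO `COLUMNS_V2` ..."),  # ugi
    ("2019-11-18 13:33:59.263424", 772, "INSERT INTO `COLUMNS_V2` ..."),  # cmd
    ("2019-11-18 13:33:59.263058", 772, "INSERT INTO `COLUMNS_V2` ..."),  # time
    ("2019-11-18 13:33:59.262606", 772, "INSERT INTO `CDS` ..."),
    ("2019-11-18 13:33:59.262032", 772, "INSERT INTO `SERDE_PARAMS` ..."),
    ("2019-11-18 13:33:59.261775", 772, "INSERT INTO `SERDE_PARAMS` ..."),
    ("2019-11-18 13:33:59.260703", 772, "INSERT INTO `SERDES` ..."),
    ("2019-11-18 13:33:59.215706", 772, "SELECT DISTINCT ... FROM `TBLS` `A0` ..."),
    ("2019-11-18 13:33:59.201805", 776, "insert into HIVE_LOCKS (...) values(...)"),
]

# First table named after INTO or FROM, with or without MySQL backticks.
TARGET = re.compile(r"\b(?:into|from)\s+`?(\w+)`?", re.IGNORECASE)


def write_order(rows):
    """Sort rows oldest-first and collapse runs that hit the same table."""
    fmt = "%Y-%m-%d %H:%M:%S.%f"
    ordered = sorted(rows, key=lambda r: datetime.strptime(r[0], fmt))
    steps = []
    for _ts, thread, stmt in ordered:
        verb = stmt.split()[0].upper()
        table = TARGET.search(stmt).group(1)
        steps.append((thread, verb, table))
    # groupby merges consecutive equal steps; keep each step with its run length
    return [(step, len(list(run))) for step, run in groupby(steps)]


for (thread, verb, table), count in write_order(LOG):
    print(f"thread {thread}: {verb:<6} {table} x{count}")
```

Sorted this way, the lock row on thread 776 comes first, then the existence check on `TBLS`, then `SERDES`/`SERDE_PARAMS`, `CDS`, `COLUMNS_V2`, `SDS`, `TBLS`, and finally `TABLE_PARAMS`, `PARTITION_KEYS` and `TBL_PRIVS`. Rows that are referenced by id are inserted before the rows that reference them, which is consistent with the foreign keys between these metastore tables.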