To help us work efficiently, please provide the following information. A clear problem description gets resolved faster:

[TiDB environment]

[Overview] Scenario and problem summary

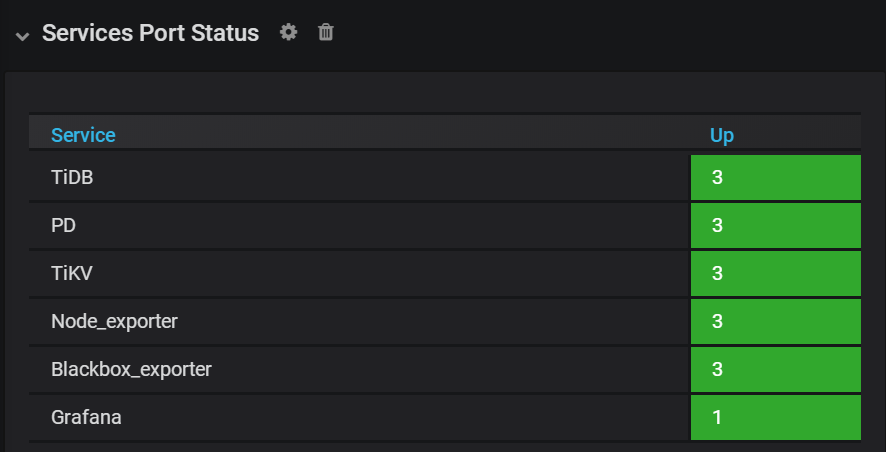

The cluster has three machines, but the System Info dashboard shows IO charts for only one of them.

The exporter log file is over 3 GB: more node_exporter.log

time="2021-08-20T12:35:18+08:00" level=error msg="error encoding and sending metric family: write tcp 20.100.6.72:9100->20.100.6.72:58790: write: broken pipe\n" source="log.go:172"

[Background] Operations performed

Restarted the service on port 9100.

I ran into a similar problem on v4.0.9; things went back to normal after the exporter was started.

[Symptoms] Application and database behavior

[Business impact]

[TiDB version]

V5.1.0

[Attachments]

- TiUP Cluster Display output

[root@CRM-tidb2 log]# tiup cluster display tidb-prod

Starting component cluster: /root/.tiup/components/cluster/v1.5.2/tiup-cluster display tidb-prod

Cluster type: tidb

Cluster name: tidb-prod

Cluster version: v5.1.0

Deploy user: root

SSH type: builtin

Dashboard URL: http://20.100.6.72:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

20.100.6.73:9093 alertmanager 20.100.6.73 9093/9094 linux/x86_64 Up /home/ti_deploy/TI_Data/alertmanager-9093 /home/ti_deploy/TI_Deploy/alertmanager-9093

20.100.6.71:3000 grafana 20.100.6.71 3000 linux/x86_64 Up - /home/ti_deploy/TI_Deploy/grafana-3000

20.100.6.71:2379 pd 20.100.6.71 2379/2380 linux/x86_64 Up|L /home/ti_deploy/TI_Data/pd-2379 /home/ti_deploy/TI_Deploy/pd-2379

20.100.6.72:2379 pd 20.100.6.72 2379/2380 linux/x86_64 Up|UI /home/ti_deploy/TI_Data/pd-2379 /home/ti_deploy/TI_Deploy/pd-2379

20.100.6.73:2379 pd 20.100.6.73 2379/2380 linux/x86_64 Up /home/ti_deploy/TI_Data/pd-2379 /home/ti_deploy/TI_Deploy/pd-2379

20.100.6.72:9090 prometheus 20.100.6.72 9090 linux/x86_64 Up /home/ti_deploy/TI_Data/prometheus-9090 /home/ti_deploy/TI_Deploy/prometheus-9090

20.100.6.71:4000 tidb 20.100.6.71 4000/10080 linux/x86_64 Up - /home/ti_deploy/TI_Deploy/tidb-4000

20.100.6.72:4000 tidb 20.100.6.72 4000/10080 linux/x86_64 Up - /home/ti_deploy/TI_Deploy/tidb-4000

20.100.6.73:4000 tidb 20.100.6.73 4000/10080 linux/x86_64 Up - /home/ti_deploy/TI_Deploy/tidb-4000

20.100.6.71:20160 tikv 20.100.6.71 20160/20180 linux/x86_64 Up /home/ti_deploy/TI_Data/tikv-20160 /home/ti_deploy/TI_Deploy/tikv-20160

20.100.6.72:20160 tikv 20.100.6.72 20160/20180 linux/x86_64 Up /home/ti_deploy/TI_Data/tikv-20160 /home/ti_deploy/TI_Deploy/tikv-20160

20.100.6.73:20160 tikv 20.100.6.73 20160/20180 linux/x86_64 Up /home/ti_deploy/TI_Data/tikv-20160 /home/ti_deploy/TI_Deploy/tikv-20160

Total nodes: 12

- TiUP Cluster Edit Config output

- TiDB Overview monitoring

xfworld

(魔幻之翼)

2

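Please describe the problem more precisely.

9100 is a port. Which services did you restart? Did the restart cause this behavior?

Is this environment production or test?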

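1. Production environment.

2. It was already like this a few hours after the cluster was deployed; I did not notice at the time.

3. This had no effect: tiup cluster restart tidb-prod -N 20.100.6.71:9100

4. This changed nothing either: tiup cluster restart tidb-prod -R grafana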

spc_monkey

(carry@pingcap.com)

7

I don't quite understand the problem. Are you saying the node_exporter service is misbehaving on some nodes?

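I also think the exporter service is misbehaving. Running the commands below reports a port conflict, so the service is clearly already running: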

./run_node_exporter.sh &

./run_blackbox_exporter.sh &

Running the following command had no effect either:

tiup cluster restart tidb-prod -N 20.100.6.71:9100

The server with no chart data has produced a 3.4 GB log file (node_exporter.log).
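To see what is filling a log of that size, one option is to count entries by level and by message prefix. The following is a minimal sketch, assuming the file uses the `level=... msg="..."` layout seen in the excerpt above; `summarize` is a hypothetical helper, and the path in the usage note is taken from the systemctl output later in this thread.

```python
import re
from collections import Counter

LEVEL_RE = re.compile(r"\blevel=(\w+)")
# Capture the message text up to the first colon or closing quote,
# so that per-connection details (addresses, ports) are grouped together.
MSG_RE = re.compile(r'msg="([^":]*)')


def summarize(lines):
    """Count log entries by level and by message prefix.

    `lines` can be any iterable of strings, including an open file,
    so a multi-gigabyte log is streamed rather than loaded into memory.
    """
    levels = Counter()
    messages = Counter()
    for line in lines:
        level = LEVEL_RE.search(line)
        if not level:
            continue  # continuation line or unrelated output
        levels[level.group(1)] += 1
        msg = MSG_RE.search(line)
        if msg:
            messages[msg.group(1).strip()] += 1
    return levels, messages
```

Usage would look like `with open("/home/ti_deploy/TI_Deploy/monitor-9100/log/node_exporter.log", errors="replace") as f: levels, messages = summarize(f)`, followed by `messages.most_common(5)` to list the dominant messages.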

spc_monkey

(carry@pingcap.com)

9

1. One question: in tiup cluster restart tidb-prod -N 20.100.6.71:9100, where did you get 20.100.6.71:9100 from? tiup cluster display should not list it.

2. Log in to the affected server and check the status of the run_node_exporter service: systemctl status xxx

It is running and looks normal:

[root@CRM-tidb2 init.d]# systemctl status node_exporter-9100.service

● node_exporter-9100.service - node_exporter service

Loaded: loaded (/etc/systemd/system/node_exporter-9100.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2021-08-20 11:26:18 CST; 2 weeks 5 days ago

Main PID: 14009 (node_exporter)

Tasks: 53

CGroup: /system.slice/node_exporter-9100.service

├─14009 bin/node_exporter/node_exporter --web.listen-address=:9100 --collector.tcpstat --collector.systemd --collector.mountstats --collector.m…

├─14020 /bin/bash /home/ti_deploy/TI_Deploy/monitor-9100/scripts/run_node_exporter.sh

└─14031 tee -i -a /home/ti_deploy/TI_Deploy/monitor-9100/log/node_exporter.log

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: time="2021-09-08T11:39:47+08:00" level=error msg="error encoding and sending metric family…go:172"

9月 08 11:39:47 CRM-tidb2 run_node_exporter.sh[14009]: 2021/09/08 11:39:47 http: multiple response.WriteHeader calls

Hint: Some lines were ellipsized, use -l to show in full.

spc_monkey

(carry@pingcap.com)

11

So what exactly is the problem? Is it that the Grafana monitoring display is wrong?

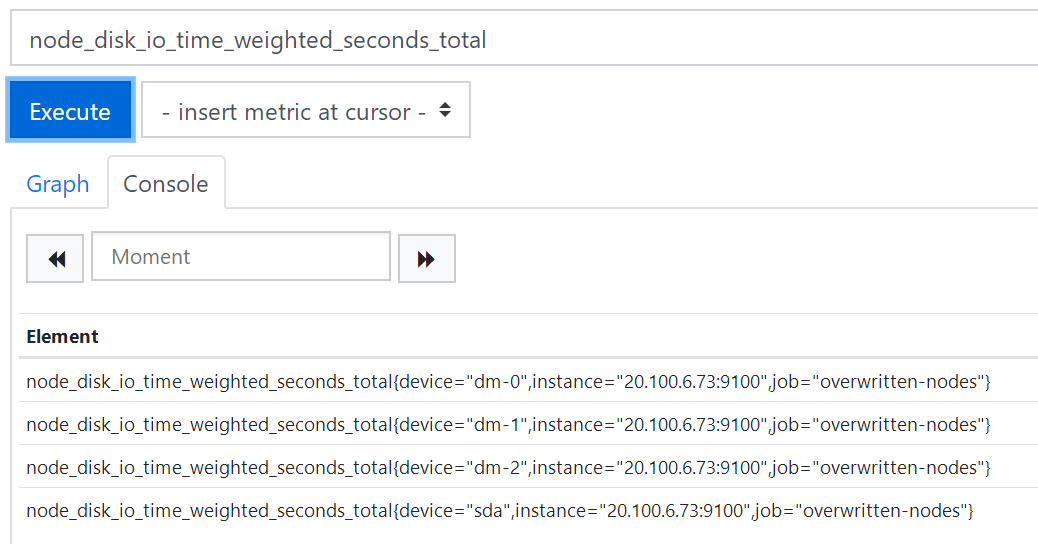

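1. Take the expression behind the affected Grafana panel, open the Prometheus web UI, run that expression there, and check the result. (You reach the Prometheus UI the same way as Grafana, just with the Prometheus port instead.)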
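The same check can also be scripted against the Prometheus HTTP API instead of the web UI. The sketch below is a hedged example, not the method used in this thread: it queries the built-in `up` metric through `/api/v1/query` and reports which expected node_exporter targets are not being scraped successfully. The Prometheus address and the three hosts come from the display output above; the assumption that the `instance` label has the form host:9100, and the helper names, are mine.

```python
import json
import urllib.parse
import urllib.request

PROMETHEUS = "http://20.100.6.72:9090"  # Prometheus node from the display output
# Assumption: node_exporter targets are labelled as host:9100.
EXPECTED = {"20.100.6.71:9100", "20.100.6.72:9100", "20.100.6.73:9100"}


def instances_up(payload):
    """Return the instance labels whose `up` sample equals 1.

    `payload` is the decoded JSON body of an instant query for `up`.
    """
    healthy = set()
    for series in payload["data"]["result"]:
        # Each instant-vector sample is [timestamp, "value-as-string"].
        if series["value"][1] == "1":
            healthy.add(series["metric"].get("instance", ""))
    return healthy


def missing_targets(payload, expected=EXPECTED):
    """Return expected targets that are absent or reported as down."""
    return sorted(set(expected) - instances_up(payload))


def fetch_up(base_url=PROMETHEUS):
    """Run the instant query `up` against the Prometheus HTTP API."""
    query = urllib.parse.urlencode({"query": "up"})
    with urllib.request.urlopen(f"{base_url}/api/v1/query?{query}", timeout=5) as resp:
        return json.load(resp)
```

Calling `missing_targets(fetch_up())` from a machine that can reach Prometheus would list the hosts whose exporter data is not arriving, which separates a scrape problem from a Grafana display problem.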

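I tried it. The data in Prometheus is indeed incomplete, which matches the charts.

The query returns data for only one machine; monitoring data for the other two machines in the cluster is missing.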

[root@CRM-tidb2 log]# more node_exporter.log

time="2021-09-08T09:24:21+08:00" level=info msg="Starting node_exporter (version=0.17.0, branch=HEAD, revision=f6f6194a436b9a63d0439abc585c76b19a206b21)" source="node_exporter.go:82"

time="2021-09-08T09:24:21+08:00" level=info msg="Build context (go=go1.11.2, user=root@322511e06ced, date=20181130-15:51:33)" source="node_exporter.go:83"

time="2021-09-08T09:24:21+08:00" level=info msg="Enabled collectors:" source="node_exporter.go:90"

time="2021-09-08T09:24:21+08:00" level=info msg=" - arp" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - bcache" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - bonding" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - buddyinfo" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - conntrack" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - cpu" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - diskstats" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - edac" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - entropy" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - filefd" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - filesystem" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - hwmon" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - infiniband" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - interrupts" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - ipvs" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - loadavg" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - mdadm" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - meminfo" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - meminfo_numa" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - mountstats" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - netclass" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - netdev" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - netstat" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - nfs" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - nfsd" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - sockstat" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - stat" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - systemd" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - tcpstat" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - textfile" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - time" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2021-09-08T09:24:21+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"

time="2021-09-08T09:24:21+08:00" level=fatal msg="listen tcp :9100: bind: address already in use" source="node_exporter.go:114"

I suspect that node_exporter on some nodes is not delivering its data to Prometheus.

spc_monkey

(carry@pingcap.com)

14

Check how many node_exporter services there are under /etc/systemd/system.

Queried on the control machine:

[root@CRM-tidb2 system]# ls |grep ex

blackbox_exporter-9115.service

node_exporter-9100.service

spc_monkey

(carry@pingcap.com)

18

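1. Confirm that the service started normally (already confirmed), and that the port it listens on is the expected one (consistent with what TiUP deployed).

2. In the Prometheus configuration file, look in the relevant section and check whether the relevant IPs are present.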
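The second check can be sketched as a small script, assuming the Prometheus configuration lists scrape targets as host:port strings. `exporter_targets` and `missing_hosts` are hypothetical helpers, and the configuration path in the usage note is an assumption based on the Prometheus deploy directory shown in the display output; adjust it to wherever the file actually lives.

```python
import re

# Hosts taken from the cluster display output earlier in the thread.
EXPECTED_HOSTS = {"20.100.6.71", "20.100.6.72", "20.100.6.73"}


def exporter_targets(config_text, port=9100):
    """Return the IPv4 hosts that appear as host:<port> in the config text."""
    pattern = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):%d\b" % port)
    return set(pattern.findall(config_text))


def missing_hosts(config_text, expected=EXPECTED_HOSTS, port=9100):
    """Return expected hosts that have no host:<port> entry in the config."""
    return sorted(set(expected) - exporter_targets(config_text, port))
```

For example, reading `/home/ti_deploy/TI_Deploy/prometheus-9090/conf/prometheus.yml` (assumed path) and passing its text to `missing_hosts` would return an empty list if all three machines are configured as targets on port 9100. This is a plain text scan rather than a YAML parse, so it only shows whether the addresses are mentioned, not which job they belong to.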

1. Check results:

[root@CRM-tidb2 system]# netstat -an|grep 9100

tcp 0 0 20.100.6.72:52978 20.100.6.72:9100 TIME_WAIT

tcp 0 0 20.100.6.72:53306 20.100.6.73:9100 ESTABLISHED

tcp 0 0 20.100.6.72:47918 20.100.6.73:9100 TIME_WAIT

tcp 0 0 20.100.6.72:59560 20.100.6.71:9100 TIME_WAIT

tcp 0 0 20.100.6.72:59812 20.100.6.71:9100 TIME_WAIT

tcp 0 0 20.100.6.72:53230 20.100.6.72:9100 TIME_WAIT

tcp 0 0 20.100.6.72:48168 20.100.6.73:9100 TIME_WAIT

tcp6 0 0 :::9100 :::* LISTEN

tcp6 0 0 20.100.6.72:20160 20.100.6.73:49100 ESTABLISHED

[root@CRM-tidb2 system]# netstat -an|grep 9115

tcp 0 0 20.100.6.72:35788 20.100.6.73:9115 ESTABLISHED

tcp 0 0 20.100.6.72:58774 20.100.6.73:9115 TIME_WAIT

tcp 0 0 20.100.6.72:45178 20.100.6.71:9115 TIME_WAIT

tcp 0 0 20.100.6.72:50618 20.100.6.71:9115 ESTABLISHED

tcp 0 0 20.100.6.72:59022 20.100.6.73:9115 TIME_WAIT

tcp 0 0 20.100.6.72:45426 20.100.6.71:9115 TIME_WAIT

tcp 0 0 20.100.6.72:33272 20.100.6.72:9115 TIME_WAIT

tcp 0 0 20.100.6.72:43934 20.100.6.72:9115 ESTABLISHED

tcp 0 0 20.100.6.72:33024 20.100.6.72:9115 TIME_WAIT

tcp 0 0 20.100.6.72:57152 20.100.6.72:9115 ESTABLISHED

tcp6 0 0 :::9115 :::* LISTEN

tcp6 0 0 20.100.6.72:9115 20.100.6.72:43934 ESTABLISHED

tcp6 0 0 20.100.6.72:9115 20.100.6.72:57152 ESTABLISHED

2. I don't know how to do this. The values match the charts: data for some nodes is missing.

xfworld

(魔幻之翼)

20

time="2021-09-08T09:24:21+08:00" level=fatal msg="listen tcp :9100: bind: address already in use" source="node_exporter.go:114"

The log you posted contains this line. The port is already in use, so it cannot bind?
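The "address already in use" condition can be reproduced with a short probe that tries to bind the port and reports whether the operating system refuses. This is only an illustrative sketch; `port_in_use` is a hypothetical helper, and it says nothing about which process holds the port.

```python
import errno
import socket


def port_in_use(port, host="0.0.0.0"):
    """Return True if binding host:port fails with EADDRINUSE."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # a different failure, e.g. insufficient permissions
    return False
```

On the affected machine, `port_in_use(9100)` returning True would be consistent with the fatal log line: a second node_exporter cannot start while another process is already listening on 9100. A tool such as `ss -ltnp` can then show the owning process.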
