honor100

October 12, 2021, 01:40

21

Wow, still online this late. Thanks for your hard work.

tiup ctl:v5.2.0 pd -u 172.21.11.59:2379 region store 89241

Starting component `ctl`: /home/tidb/.tiup/components/ctl/v5.2.0/ctl pd -u 172.21.11.59:2379 region store 89241

这道题我不会

October 12, 2021, 07:06

22

honor100:

103455

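From the information above, store 89241 has not finished going offline because two regions (region IDs 18201 and 18277) still have replicas on it, and neither region has a leader. We need to find out why. Could you provide the logs that involve these two regions?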
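As a rough illustration of the check described above, here is a minimal Python sketch that scans the JSON printed by `region store <store_id>` for regions without a leader. The field names (`regions`, `peers`, `leader`, `store_id`) are assumptions about the pd-ctl output shape, the peer IDs are made up, and the function name `leaderless_regions` is hypothetical.

```python
import json

# Hypothetical sample shaped like the JSON printed by `region store 89241`.
# Field names are assumed; peer IDs are invented for illustration only.
SAMPLE = """
{
  "count": 2,
  "regions": [
    {"id": 18201,
     "peers": [{"id": 1, "store_id": 89241},
               {"id": 2, "store_id": 103455},
               {"id": 3, "store_id": 135592}]},
    {"id": 18277,
     "peers": [{"id": 4, "store_id": 89241},
               {"id": 5, "store_id": 103455},
               {"id": 6, "store_id": 135592}],
     "leader": {}}
  ]
}
"""


def leaderless_regions(output):
    """Return (region_id, peer_store_ids) for every region with no leader.

    A missing "leader" key and an empty "leader" object are both treated
    as "no leader", since the exact representation is an assumption.
    """
    result = []
    for region in output.get("regions", []):
        leader = region.get("leader") or {}
        if not leader.get("store_id"):
            stores = [peer["store_id"] for peer in region.get("peers", [])]
            result.append((region["id"], stores))
    return result


if __name__ == "__main__":
    for region_id, stores in leaderless_regions(json.loads(SAMPLE)):
        print(f"region {region_id} has no leader; peers on stores {stores}")
```

In practice the input would be the real command output saved to a file and loaded with `json.load`, rather than the inline sample.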

honor100

October 14, 2021, 02:21

23

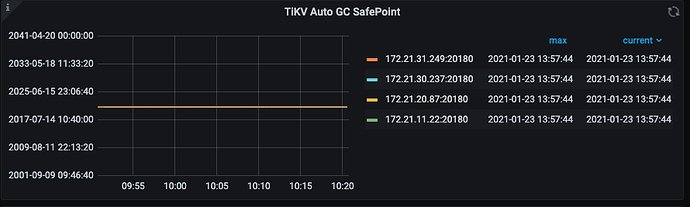

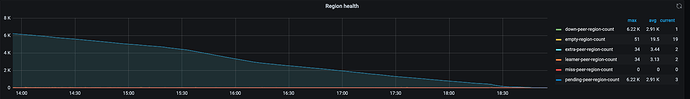

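I've been firefighting for the past two days. AWS ran routine maintenance on our machines. We had added new nodes and were migrating data to them, but the old machines were shut down before the migration finished. Because they use local NVMe disks, all the data on them was lost. On top of that, I found that the GC task had been stopped for half a year, so I'm now running it by hand with /home/tidb/tikv-ctl --host 127.0.0.1:20160 compact &. When it rains, it pours. As for those 2 regions, I'll see whether I can force-delete them. I don't need them anymore.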

honor100

October 14, 2021, 02:42

25

Yeah, it's a big-data workload. Sigh.

tiup ctl:v5.2.0 pd -u 172.21.11.59:2379 operator add remove-peer 18201 103455

Starting component `ctl`: /home/tidb/.tiup/components/ctl/v5.2.0/ctl pd -u 172.21.11.59:2379 operator add remove-peer 18201 103455

这道题我不会

October 14, 2021, 02:52

26

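The replicas of these two regions sit on "store_id": 103455 and "store_id": 135592. Are those stores part of the current cluster? If it's convenient, please still provide the logs that involve these two regions. We need to analyze why no leader was elected, and an unsafe recover may be needed.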
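One way to answer the question above is to look the two store IDs up in the JSON printed by pd-ctl's `store` command. The sketch below assumes each entry carries `store.id` and `store.state_name`; the function name `store_states` is hypothetical, and the states in the sample are invented, not taken from this cluster.

```python
def store_states(store_output, wanted_ids):
    """Map each wanted store ID to its state_name, or None if PD does not list it."""
    known = {}
    for item in store_output.get("stores", []):
        info = item.get("store", {})
        known[info.get("id")] = info.get("state_name")
    return {store_id: known.get(store_id) for store_id in wanted_ids}


# Hypothetical sample; the real states must come from the cluster itself.
SAMPLE_STORES = {
    "count": 2,
    "stores": [
        {"store": {"id": 103455, "state_name": "Up"}},
        {"store": {"id": 89241, "state_name": "Offline"}},
    ],
}

if __name__ == "__main__":
    for store_id, state in store_states(SAMPLE_STORES, [103455, 135592]).items():
        if state is None:
            print(f"store {store_id} is not known to PD")
        else:
            print(f"store {store_id} is {state}")
```

A `None` result means the store ID does not appear in the output at all, which is a different situation from a store that is listed but not Up.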

honor100

October 14, 2021, 02:58

27

OK, thanks.

[2021/10/14 03:00:18.099 +00:00] [INFO] [endpoint.rs:382] ["deregister observe region"] [observe_id=ObserveID(33779)] [region_id=1427722] [store_id=Some(103455)]

honor100

October 14, 2021, 03:10

28

honor100:

89241

On September 3, when I opened this thread, the region count on store 89241 was already 0.

这道题我不会

October 14, 2021, 03:24

29

Could you check region 18201 and region 103455 with pd-ctl now? Do they still contain any leftover information about store 89241?

honor100

October 14, 2021, 03:35

31

It feels like I'm stuck in an endless loop.

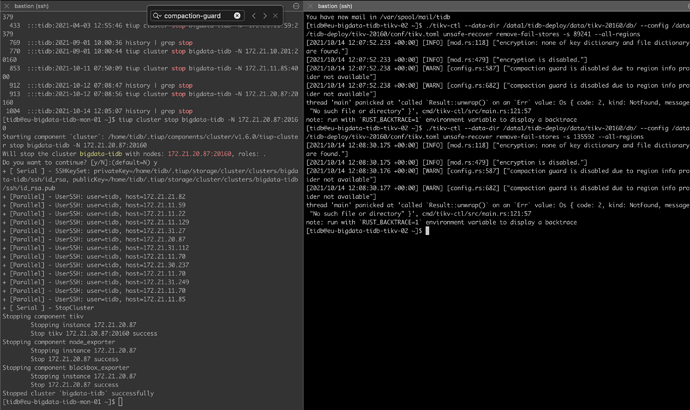

./tikv-ctl --data-dir /data1/tidb-deploy/data/tikv-20160/db/ --config /data1/tidb-deploy/tikv-20160/conf/tikv.toml unsafe-recover remove-fail-stores -s 89241 --all-regions

[2021/10/14 03:34:42.293 +00:00] [WARN]

Result::unwrap() on an Err value: Os { code: 2, kind: NotFound, message: "No such file or directory" }', cmd/tikv-ctl/src/main.rs:121:57

RUST_BACKTRACE=1 environment variable to display a backtrace

这道题我不会

October 14, 2021, 05:41

32

So at this point you have already stopped TiKV and run unsafe recover on every TiKV node?

honor100

October 14, 2021, 06:10

33

The TiKV version is 5.2.1. At a conference in Beijing in July, Tang Liu (唐刘) said this could be repaired online.

这道题我不会

October 14, 2021, 06:38

34

Uh, online unsafe recover is not supported yet. The feature is still under development, so the TiKV nodes have to be stopped...

honor100

October 14, 2021, 07:11

35

Shutting them down has a fairly large impact. Is there a graceful way to do the shutdown?

这道题我不会

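October 14, 2021, 07:31

36

Not at the moment. Apart from the replica on the unavailable store 89241, region 18201 and region 103455 each have two more replicas, on "store_id": 103455 and "store_id": 135592. If those two stores are in the Up state, they normally satisfy the majority rule and can elect a leader, yet no leader has been elected so far. So first confirm that the store states are normal, then collect the complete region logs from TiKV so we can analyze why no leader can be elected.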
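The majority argument above comes down to simple arithmetic, sketched here in Python. The function name `has_quorum` is hypothetical, every peer is assumed to be a voting replica, and which stores are actually Up is an input you would have to confirm on the cluster.

```python
def has_quorum(peer_store_ids, up_store_ids):
    """Return True if the peers on Up stores form a strict majority of all peers."""
    total = len(peer_store_ids)
    alive = sum(1 for store_id in peer_store_ids if store_id in up_store_ids)
    return alive >= total // 2 + 1


if __name__ == "__main__":
    peers = [89241, 103455, 135592]  # replica placement described in the thread
    # Assumed for illustration: both remaining stores are Up.
    print(has_quorum(peers, {103455, 135592}))
    # Assumed for illustration: only one remaining store is Up.
    print(has_quorum(peers, {103455}))
```

With three replicas the majority is two, so losing only store 89241 should still leave a quorum. A region that stays leaderless despite a quorum points to a cause other than replica count, which is why the logs are needed.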

honor100

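October 14, 2021, 11:55

37

OK. I'm now going to move the leaders away, shut the nodes down, and run unsafe recover.

Sigh. A few days ago, during the AWS maintenance, one of the machines with local NVMe disks went down. For the past two days regions have been migrating to the newly added nodes, and the migration was only mostly finished tonight. Some regions are still left over, so I'll run unsafe recover on those as well.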

honor100

October 14, 2021, 12:19

39

export RUST_BACKTRACE=full

Result::unwrap() on an Err value: Os { code: 2, kind: NotFound, message: "No such file or directory" }', cmd/tikv-ctl/src/main.rs:121:57

[config.rs:682]

这道题我不会

October 15, 2021, 02:59

40