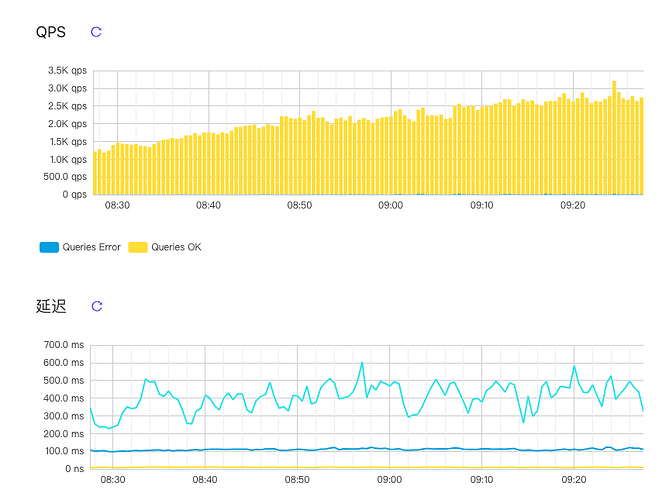

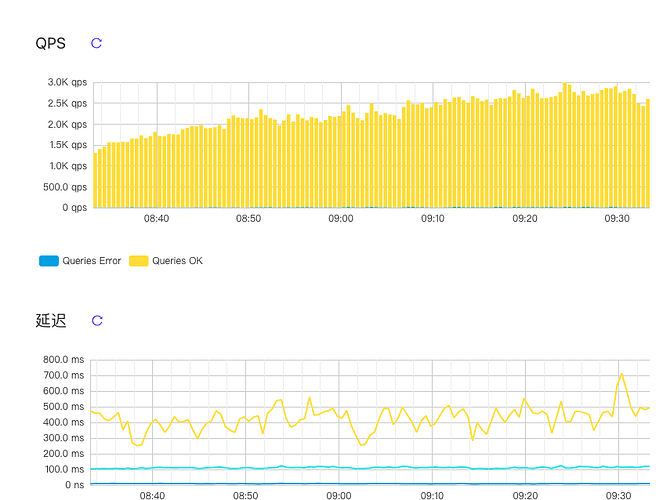

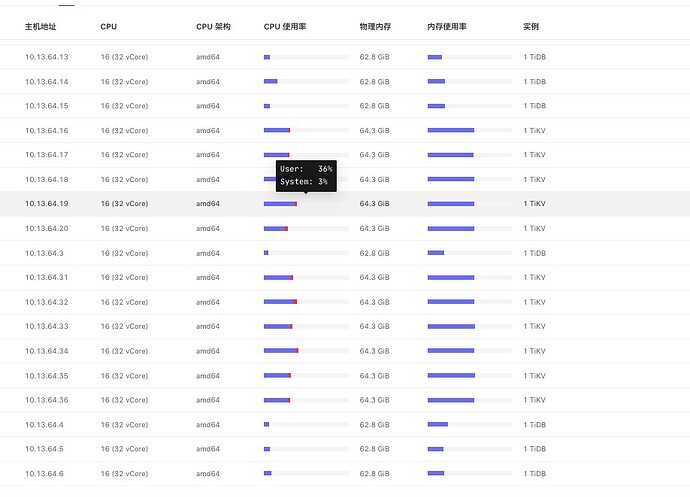

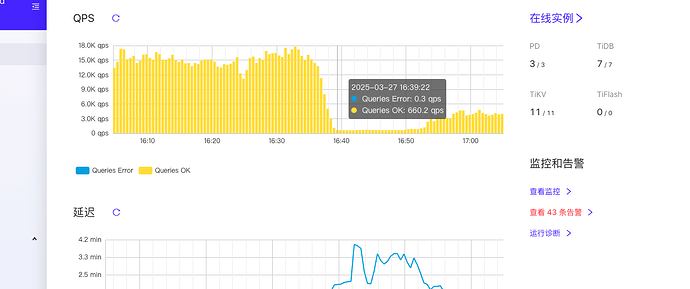

问题描述:

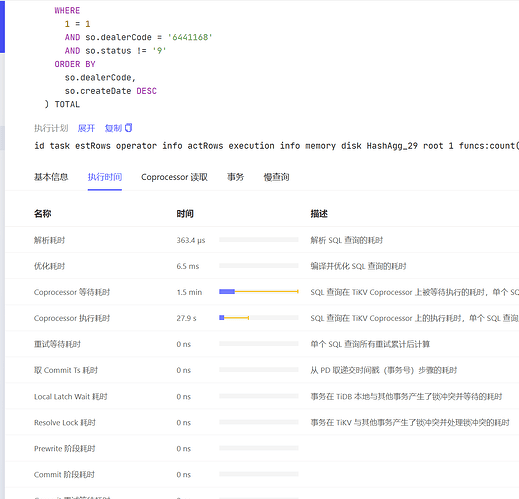

我使用的版本是5.2.1。 近期数据库很不稳定,有时候几天卡一次,有时候几个小时内连续卡多次,通过dashboard分析sql,sql执行时间都很长,但是查看索引执行计划都是正常的。看不到有问题的sql。 以下是其中一个sql的执行计划: id task estRows operator info actRows execution info memory disk

HashAgg_20 root 1 funcs:count(1)->Column#655 0 time:22.8s, loops:1, partial_worker:{wall_time:22.81680262s, concurrency:5, task_num:0, tot_wait:1m54.083899119s, tot_exec:0s, tot_time:1m54.083903907s, max:22.816785352s, p95:22.816785352s}, final_worker:{wall_time:22.816820353s, concurrency:5, task_num:0, tot_wait:1m54.084008738s, tot_exec:7.661µs, tot_time:1m54.084019576s, max:22.816808352s, p95:22.816808352s} 13.9 KB N/A

└─Sort_22 root 381.84 svc.svc_service_order.dealercode, svc.svc_service_order.createdate:desc 0 time:22.8s, loops:1 0 Bytes 0 Bytes

└─HashJoin_26 root 381.84 left outer join, equal:[eq(svc.svc_service_order.serviceordercode, svc.svc_service_order.serviceordercode) eq(svc.svc_service_order.dealercode, svc.svc_service_order.dealercode)] 0 time:22.8s, loops:1, build_hash_table:{total:22.8s, fetch:22.8s, build:0s} 0 Bytes 0 Bytes

├─HashJoin_69 root 381.84 left outer join, equal:[eq(svc.svc_service_order.serviceordercode, svc.svc_service_order.serviceordercode) eq(svc.svc_service_order.dealercode, svc.svc_service_order.dealercode)] 0 time:22.8s, loops:1, build_hash_table:{total:22.8s, fetch:22.8s, build:0s} 0 Bytes 0 Bytes

│ ├─IndexLookUp_88 root 381.84 0 time:22.8s, loops:1, index_task: {total_time: 3.5s, fetch_handle: 45.2ms, build: 5.62µs, wait: 3.45s}, table_task: {total_time: 1m52.4s, num: 8, concurrency: 5} 1.40 MB N/A

│ │ ├─IndexRangeScan_85 cop[tikv] 517.46 table:so, index:dealerCode_serviceOrderCode_index(dealerCode, serviceOrderCode, createDate), range:[“6531138”,“6531139”), keep order:false 90219 time:41.9ms, loops:91, cop_task: {num: 1, max: 41.6ms, proc_keys: 90219, tot_proc: 39ms, rpc_num: 1, rpc_time: 41.6ms, copr_cache_hit_ratio: 0.00}, tikv_task:{time:36ms, loops:93}, scan_detail: {total_process_keys: 90219, total_keys: 90220, rocksdb: {delete_skipped_count: 0, key_skipped_count: 90219, block: {cache_hit_count: 105, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ │ └─Selection_87 cop[tikv] 381.84 ge(svc.svc_service_order.settlementdate, 2025-04-01 00:00:00.000000), le(svc.svc_service_order.settlementdate, 2025-04-13 23:59:59.000000), ne(svc.svc_service_order.status, 9) 770 time:1m52.1s, loops:10, cop_task: {num: 1420, max: 4.7s, min: 486.2µs, avg: 206.4ms, p95: 1.82s, max_proc_keys: 209, p95_proc_keys: 135, tot_proc: 1m9.6s, tot_wait: 3m42s, rpc_num: 1420, rpc_time: 4m53s, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:9ms, min:0s, p80:3ms, p95:5ms, iters:2771, tasks:1420}, scan_detail: {total_process_keys: 86776, total_keys: 86776, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 1036322, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ │ └─TableRowIDScan_86 cop[tikv] 517.46 table:so, keep order:false 86776 tikv_task:{proc max:9ms, min:0s, p80:3ms, p95:5ms, iters:2771, tasks:1420} N/A N/A

│ └─HashAgg_89 root 49726.36 group by:svc.svc_service_order.dealercode, svc.svc_service_order.serviceordercode, funcs:firstrow(svc.svc_service_order.dealercode)->svc.svc_service_order.dealercode, funcs:firstrow(svc.svc_service_order.serviceordercode)->svc.svc_service_order.serviceordercode, funcs:count(1)->Column#658 0 time:22.8s, loops:1, partial_worker:{wall_time:22.816687421s, concurrency:5, task_num:10, tot_wait:1m54.077484488s, tot_exec:5.33879ms, tot_time:1m54.083186431s, max:22.816667291s, p95:22.816667291s}, final_worker:{wall_time:22.816877288s, concurrency:5, task_num:5, tot_wait:1m54.083130861s, tot_exec:965.158µs, tot_time:1m54.084100167s, max:22.816861682s, p95:22.816861682s} 1.28 MB N/A

│ └─IndexHashJoin_97 root 144358.11 left outer join, inner:IndexReader_94, outer key:svc.svc_service_order.id, inner key:svc.svc_service_order_part.serviceorderid, equal cond:eq(svc.svc_service_order.id, svc.svc_service_order_part.serviceorderid) 10240 time:22.8s, loops:11, inner:{total:178.2ms, concurrency:5, task:6, construct:2.53ms, fetch:167.1ms, build:638.4µs, join:8.47ms} 541.8 KB N/A

│ ├─IndexLookUp_122 root 62617.31 4064 time:22.8s, loops:8, index_task: {total_time: 3.56s, fetch_handle: 53.6ms, build: 14.5µs, wait: 3.51s}, table_task: {total_time: 1m52.5s, num: 12, concurrency: 5} 6.36 MB N/A

│ │ ├─Selection_121 cop[tikv] 62617.31 not(isnull(svc.svc_service_order.serviceordercode)) 90219 time:49.5ms, loops:95, cop_task: {num: 1, max: 49.3ms, proc_keys: 90219, tot_proc: 45ms, tot_wait: 1ms, rpc_num: 1, rpc_time: 49.3ms, copr_cache_hit_ratio: 0.00}, tikv_task:{time:44ms, loops:93}, scan_detail: {total_process_keys: 90219, total_keys: 90220, rocksdb: {delete_skipped_count: 0, key_skipped_count: 90219, block: {cache_hit_count: 105, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ │ │ └─IndexRangeScan_119 cop[tikv] 62617.31 table:so, index:dealerCode_serviceOrderCode_index(dealerCode, serviceOrderCode, createDate), range:[“6531138”,“6531139”), keep order:false 90219 tikv_task:{time:35ms, loops:93} N/A N/A

│ │ └─TableRowIDScan_120 cop[tikv] 62617.31 table:so, keep order:false 86651 time:1m52.2s, loops:97, cop_task: {num: 1470, max: 4.73s, min: 442µs, avg: 210.9ms, p95: 1.83s, max_proc_keys: 209, p95_proc_keys: 135, tot_proc: 1m16.6s, tot_wait: 3m51.9s, rpc_num: 1470, rpc_time: 5m10s, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:10ms, min:0s, p80:3ms, p95:5ms, iters:2813, tasks:1470}, scan_detail: {total_process_keys: 86461, total_keys: 86461, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 1032763, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ └─IndexReader_94 root 2.88 index:Selection_93 13255 time:164.5ms, loops:22, cop_task: {num: 19, max: 101.8ms, min: 1.02ms, avg: 16.8ms, p95: 101.8ms, max_proc_keys: 1831, p95_proc_keys: 1831, tot_proc: 166ms, tot_wait: 123ms, rpc_num: 19, rpc_time: 318.2ms, copr_cache_hit_ratio: 0.00} 2.28 KB N/A

│ └─Selection_93 cop[tikv] 2.88 not(isnull(svc.svc_service_order_part.serviceorderid)) 13255 tikv_task:{proc max:19ms, min:0s, p80:13ms, p95:19ms, iters:83, tasks:19}, scan_detail: {total_process_keys: 13255, total_keys: 23199, rocksdb: {delete_skipped_count: 0, key_skipped_count: 19167, block: {cache_hit_count: 46650, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ └─IndexRangeScan_92 cop[tikv] 2.88 table:sp, index:idx_svc_service_order_part_serviceOrderId(serviceOrderId), range: decided by [eq(svc.svc_service_order_part.serviceorderid, svc.svc_service_order.id)], keep order:false 13255 tikv_task:{proc max:18ms, min:0s, p80:13ms, p95:18ms, iters:83, tasks:19} N/A N/A

└─HashAgg_125 root 49726.36 group by:svc.svc_service_order.dealercode, svc.svc_service_order.serviceordercode, funcs:firstrow(svc.svc_service_order.dealercode)->svc.svc_service_order.dealercode, funcs:firstrow(svc.svc_service_order.serviceordercode)->svc.svc_service_order.serviceordercode, funcs:count(1)->Column#659 0 time:22.8s, loops:1, partial_worker:{wall_time:22.816801246s, concurrency:5, task_num:5, tot_wait:1m54.079172349s, tot_exec:4.054589ms, tot_time:1m54.083692896s, max:22.816779147s, p95:22.816779147s}, final_worker:{wall_time:22.817043827s, concurrency:5, task_num:6, tot_wait:1m54.083613674s, tot_exec:1.205728ms, tot_time:1m54.084823667s, max:22.81701947s, p95:22.81701947s} 1.07 MB N/A

└─IndexHashJoin_133 root 80725.24 left outer join, inner:IndexReader_130, outer key:svc.svc_service_order.id, inner key:svc.svc_service_order_item.serviceorderid, equal cond:eq(svc.svc_service_order.id, svc.svc_service_order_item.serviceorderid) 5120 time:22.8s, loops:6, inner:{total:101.7ms, concurrency:5, task:6, construct:2.46ms, fetch:94.9ms, build:570.2µs, join:4.32ms} 495.7 KB N/A

├─IndexLookUp_158 root 62617.31 4064 time:22.8s, loops:8, index_task: {total_time: 3.56s, fetch_handle: 58.9ms, build: 12.5µs, wait: 3.5s}, table_task: {total_time: 1m52.5s, num: 12, concurrency: 5} 6.34 MB N/A

│ ├─Selection_157 cop[tikv] 62617.31 not(isnull(svc.svc_service_order.serviceordercode)) 90219 time:54.4ms, loops:95, cop_task: {num: 1, max: 54.2ms, proc_keys: 90219, tot_proc: 49ms, tot_wait: 1ms, rpc_num: 1, rpc_time: 54.2ms, copr_cache_hit_ratio: 0.00}, tikv_task:{time:48ms, loops:93}, scan_detail: {total_process_keys: 90219, total_keys: 90220, rocksdb: {delete_skipped_count: 0, key_skipped_count: 90219, block: {cache_hit_count: 105, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

│ │ └─IndexRangeScan_155 cop[tikv] 62617.31 table:so, index:dealerCode_serviceOrderCode_index(dealerCode, serviceOrderCode, createDate), range:[“6531138”,“6531139”), keep order:false 90219 tikv_task:{time:42ms, loops:93} N/A N/A

│ └─TableRowIDScan_156 cop[tikv] 62617.31 table:so, keep order:false 86651 time:1m52.1s, loops:97, cop_task: {num: 1470, max: 4.72s, min: 430.3µs, avg: 214.9ms, p95: 1.87s, max_proc_keys: 209, p95_proc_keys: 132, tot_proc: 1m21.4s, tot_wait: 3m53.1s, rpc_num: 1470, rpc_time: 5m15.9s, copr_cache_hit_ratio: 0.03}, tikv_task:{proc max:11ms, min:0s, p80:3ms, p95:5ms, iters:2813, tasks:1470}, scan_detail: {total_process_keys: 81671, total_keys: 81671, rocksdb: {delete_skipped_count: 0, key_skipped_count: 0, block: {cache_hit_count: 975983, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

└─IndexReader_130 root 1.61 index:Selection_129 7221 time:91.9ms, loops:17, cop_task: {num: 13, max: 26.8ms, min: 848.1µs, avg: 11.3ms, p95: 26.8ms, max_proc_keys: 1356, p95_proc_keys: 1356, tot_proc: 127ms, tot_wait: 7ms, rpc_num: 13, rpc_time: 146.6ms, copr_cache_hit_ratio: 0.00} 1.38 KB N/A

└─Selection_129 cop[tikv] 1.61 not(isnull(svc.svc_service_order_item.serviceorderid)) 7221 tikv_task:{proc max:24ms, min:1ms, p80:20ms, p95:24ms, iters:53, tasks:13}, scan_detail: {total_process_keys: 7221, total_keys: 12299, rocksdb: {delete_skipped_count: 0, key_skipped_count: 9179, block: {cache_hit_count: 46019, read_count: 0, read_byte: 0 Bytes}}} N/A N/A

└─IndexRangeScan_128 cop[tikv] 1.61 table:si, index:idx_svc_service_order_item_serviceOrderId(serviceOrderId), range: decided by [eq(svc.svc_service_order_item.serviceorderid, svc.svc_service_order.id)], keep order:false 7221 tikv_task:{proc max:24ms, min:1ms, p80:20ms, p95:24ms, iters:53, tasks:13} N/A N/A

但是Coprocessor 等待耗时 和 Coprocessor 执行耗时 时间很长。

有没有大佬给一些排查思路,或者协助排查。 +V gengquan2020 (有偿,可以谈。)