templey

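[TiDB environment] Production / Test / PoC

[TiDB version] v8.5.1

[Operating system] CentOS 7.9

[Deployment method] Machine deployment

[Cluster data volume]

[Number of cluster nodes] 3

[Steps to reproduce] Which operations led to the problem

[Problem encountered: symptoms and impact] tiup cluster deploy tidb-prod v8.5.1 …/to.yaml --user tidb -i /home/tidb/.ssh/id_rsa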

- Detect CPU Arch Name
  - Detecting node 10.2.4.184 Arch info … Done
  - Detecting node 10.2.4.185 Arch info … Done
  - Detecting node 10.2.4.186 Arch info … Done
- Detect CPU OS Name
  - Detecting node 10.2.4.184 OS info … Done
  - Detecting node 10.2.4.185 OS info … Done
  - Detecting node 10.2.4.186 OS info … Done

Please confirm your topology:
Cluster type: tidb
Cluster name: tidb-prod
Cluster version: v8.5.1

Role          Host        Ports                            OS/Arch       Directories
pd            10.2.4.184  2379/2380                        linux/x86_64  /data2/tidb-deploy/pd-184,/data2/tidb-data/pd-184
pd            10.2.4.185  2379/2380                        linux/x86_64  /data2/tidb-deploy/pd-185,/data2/tidb-data/pd-185
pd            10.2.4.186  2379/2380                        linux/x86_64  /data2/tidb-deploy/pd-186,/data2/tidb-data/pd-186
tikv          10.2.4.184  20160/20180                      linux/x86_64  /data1/tidb-deploy/tikv-184,/data1/tidb-data/tikv-184
tikv          10.2.4.185  20160/20180                      linux/x86_64  /data1/tidb-deploy/tikv-185,/data1/tidb-data/tikv-185
tikv          10.2.4.186  20160/20180                      linux/x86_64  /data1/tidb-deploy/tikv-186,/data1/tidb-data/tikv-186
tidb          10.2.4.184  4000/10080                       linux/x86_64  /data2/tidb-deploy/tidb-184
tidb          10.2.4.185  4000/10080                       linux/x86_64  /data2/tidb-deploy/tidb-185
tidb          10.2.4.186  4000/10080                       linux/x86_64  /data2/tidb-deploy/tidb-186
tiflash       10.2.4.184  9000/3930/20170/20292/8234/8123  linux/x86_64  /data2/tidb-deploy/tiflash-184,/data2/tidb-data/tiflash-184
tiflash       10.2.4.185  9000/3930/20170/20292/8234/8123  linux/x86_64  /data2/tidb-deploy/tiflash-185,/data2/tidb-data/tiflash-185
tiflash       10.2.4.186  9000/3930/20170/20292/8234/8123  linux/x86_64  /data2/tidb-deploy/tiflash-186,/data2/tidb-data/tiflash-186
tikv-cdc      10.2.4.185  8600                             linux/x86_64  /data2/tidb-deploy/tikv-cdc-8600,/data2/tidb-data/tikv-cdc-185
tikv-cdc      10.2.4.186  8600                             linux/x86_64  /data2/tidb-deploy/tikv-cdc-8600,/data2/tidb-data/tikv-cdc-186
prometheus    10.2.4.184  9090/12020                       linux/x86_64  /data2/tidb-deploy/prometheus-184,/data2/tidb-data/prometheus-184
grafana       10.2.4.185  3000                             linux/x86_64  /data2/tidb-deploy/grafana-185
alertmanager  10.2.4.186  9093/9094                        linux/x86_64  /data2/tidb-deploy/alertmanager-186,/data2/tidb-data/alertmanager-186

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

- Generate SSH keys … Done
- Download TiDB components
  - Download pd:v8.5.1 (linux/amd64) … Done
  - Download tikv:v8.5.1 (linux/amd64) … Done
  - Download tidb:v8.5.1 (linux/amd64) … Done
  - Download tiflash:v8.5.1 (linux/amd64) … Done
  - Download tikv-cdc: (linux/amd64) … Error
  - Download prometheus:v8.5.1 (linux/amd64) … Done
  - Download grafana:v8.5.1 (linux/amd64) … Done
  - Download alertmanager: (linux/amd64) … Done
  - Download node_exporter: (linux/amd64) … Done
  - Download blackbox_exporter: (linux/amd64) … Done

Error: unknown component

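[Resource configuration] Go to TiDB Dashboard > Cluster Info > Hosts and attach a screenshot of that page

[Copy and paste the ERROR log]

[Other attachments: screenshots / logs / monitoring]

There are no port conflicts, and the directories have not been created yet.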

Kongdom

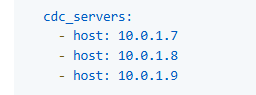

tikv-cdc? What is that? It should probably be cdc_servers.

templey

global:
  user: "tidb"
  group: "tidb"
  ssh_port: 22
  deploy_dir: "/data2/tidb-deploy"
  data_dir: "/data2/tidb-data"
  listen_host: 0.0.0.0
  arch: "amd64"
  resource_control:
    memory_limit: "2G"

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: "/data2/tidb-deploy/monitored-9100"
  data_dir: "/data2/tidb-data/monitored-9100"
  log_dir: "/data2/tidb-deploy/monitored-9100/log"

pd_servers:
  - host: 10.2.4.184
    ssh_port: 22
    name: "pd-184"
    client_port: 2379
    peer_port: 2380
    deploy_dir: "/data2/tidb-deploy/pd-184"
    data_dir: "/data2/tidb-data/pd-184"
    log_dir: "/data2/tidb-deploy/pd-184/log"
  - host: 10.2.4.185
    ssh_port: 22
    name: "pd-185"
    client_port: 2379
    peer_port: 2380
    deploy_dir: "/data2/tidb-deploy/pd-185"
    data_dir: "/data2/tidb-data/pd-185"
    log_dir: "/data2/tidb-deploy/pd-185/log"
  - host: 10.2.4.186
    ssh_port: 22
    name: "pd-186"
    client_port: 2379
    peer_port: 2380
    deploy_dir: "/data2/tidb-deploy/pd-186"
    data_dir: "/data2/tidb-data/pd-186"
    log_dir: "/data2/tidb-deploy/pd-186/log"

tidb_servers:
  - host: 10.2.4.184
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: "/data2/tidb-deploy/tidb-184"
    log_dir: "/data2/tidb-deploy/tidb-184/log"
  - host: 10.2.4.185
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: "/data2/tidb-deploy/tidb-185"
    log_dir: "/data2/tidb-deploy/tidb-185/log"
  - host: 10.2.4.186
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: "/data2/tidb-deploy/tidb-186"
    log_dir: "/data2/tidb-deploy/tidb-186/log"

tikv_servers:
  - host: 10.2.4.184
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: "/data1/tidb-deploy/tikv-184"
    data_dir: "/data1/tidb-data/tikv-184"
    log_dir: "/data1/tidb-deploy/tikv-184/log"
  - host: 10.2.4.185
    port: 20160
    status_port: 20180
    deploy_dir: "/data1/tidb-deploy/tikv-185"
    data_dir: "/data1/tidb-data/tikv-185"
    log_dir: "/data1/tidb-deploy/tikv-185/log"
  - host: 10.2.4.186
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: "/data1/tidb-deploy/tikv-186"
    data_dir: "/data1/tidb-data/tikv-186"
    log_dir: "/data1/tidb-deploy/tikv-186/log"

tiflash_servers:
  - host: 10.2.4.184
    ssh_port: 22
    tcp_port: 9000
    flash_service_port: 3930
    flash_proxy_port: 20170
    flash_proxy_status_port: 20292
    metrics_port: 8234
    deploy_dir: /data2/tidb-deploy/tiflash-184
    data_dir: /data2/tidb-data/tiflash-184
    log_dir: /data2/tidb-deploy/tiflash-184/log
  - host: 10.2.4.185
    ssh_port: 22
    tcp_port: 9000
    flash_service_port: 3930
    flash_proxy_port: 20170
    flash_proxy_status_port: 20292
    metrics_port: 8234
    deploy_dir: /data2/tidb-deploy/tiflash-185
    data_dir: /data2/tidb-data/tiflash-185
    log_dir: /data2/tidb-deploy/tiflash-185/log
  - host: 10.2.4.186
    ssh_port: 22
    tcp_port: 9000
    flash_service_port: 3930
    flash_proxy_port: 20170
    flash_proxy_status_port: 20292
    metrics_port: 8234
    deploy_dir: /data2/tidb-deploy/tiflash-186
    data_dir: /data2/tidb-data/tiflash-186
    log_dir: /data2/tidb-deploy/tiflash-186/log

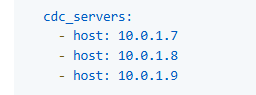

kvcdc_servers:
  - host: 10.2.4.185
    ssh_port: 22
    port: 8600
    data_dir: "/data2/tidb-data/tikv-cdc-185"
    log_dir: "/data2/tidb-deploy/tikv-cdc-185/log"
  - host: 10.2.4.186
    ssh_port: 22
    port: 8600
    data_dir: "/data2/tidb-data/tikv-cdc-186"
    log_dir: "/data2/tidb-deploy/tikv-cdc-186/log"

monitoring_servers:
  - host: 10.2.4.184
    ssh_port: 22
    port: 9090
    ng_port: 12020
    deploy_dir: "/data2/tidb-deploy/prometheus-184"
    data_dir: "/data2/tidb-data/prometheus-184"
    log_dir: "/data2/tidb-deploy/prometheus-184/log"
    rule_dir: /data2/prometheus_rule

grafana_servers:
  - host: 10.2.4.185
    port: 3000
    deploy_dir: /data2/tidb-deploy/grafana-185
    dashboard_dir: /data2/dashboards

alertmanager_servers:
  - host: 10.2.4.186
    ssh_port: 22
    listen_host: 0.0.0.0
    web_port: 9093
    cluster_port: 9094
    deploy_dir: "/data2/tidb-deploy/alertmanager-186"
    data_dir: "/data2/tidb-data/alertmanager-186"
    log_dir: "/data2/tidb-deploy/alertmanager-186/log"
    config_file: "/data2/tidb-deploy/alertmanager-186/bin/alertmanager/alertmanager.yml"

小龙虾爱大龙虾 (Minghao Ren)

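These are not two ways of writing the same thing; they are two different components. kvcdc is a replication tool for RawKV. If yours is an ordinary cluster, just use cdc_servers.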
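
Because `kvcdc_servers` and `cdc_servers` deploy different components, it can help to list which sections a topology file actually declares before deploying. The following is a minimal sketch, not something posted in this thread: the helper name `list_server_sections` and the file path in the usage comment are hypothetical, and it assumes top-level keys start in the first column of the file.

```shell
#!/bin/sh
# Hypothetical helper: print the top-level "*_servers" sections declared in a
# TiUP topology file, one per line, so that an unintended section such as
# kvcdc_servers stands out. Assumes top-level keys are not indented.
list_server_sections() {
    grep -E '^[a-z_]+_servers:' "$1" | sed 's/:.*$//'
}

# Usage (path is an assumption):
#   list_server_sections ./topology.yaml
```

If `kvcdc_servers` shows up in the output for a cluster that does not use RawKV, that section is the one to replace or remove.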

templey

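Which component package does kvcdc use? I would like to find out what went wrong. If it were only a matter of getting rid of the error, I could simply skip installing this component for now.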

templey

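Thanks, I found the problem: the offline mirror does not contain this component. Switching to the online mirror fixed it. Thank you, and your approach works too.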
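
The diagnosis above (the offline mirror did not contain the component) can be checked before running a deployment. The following is a minimal sketch, not part of the original thread: it assumes the offline mirror is a local directory whose package files are named `<component>-v<version>-<os>-<arch>.tar.gz`, and the helper name `mirror_has_component` and the path in the usage comment are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a local TiUP mirror directory holds at least
# one package tarball for the given component.
# Assumption: tarballs are named <component>-v<version>-<os>-<arch>.tar.gz,
# so "tikv" does not match "tikv-cdc-v..." files.
mirror_has_component() {
    mirror_dir=$1
    component=$2
    for f in "$mirror_dir"/"$component"-v*.tar.gz; do
        # When the glob matches nothing, $f is the literal pattern and the
        # existence check fails.
        if [ -e "$f" ]; then
            return 0
        fi
    done
    return 1
}

# Usage (path is an assumption):
#   mirror_has_component /path/to/offline-mirror tikv-cdc \
#       || echo "tikv-cdc is missing from this mirror"
```

On the TiUP side, `tiup mirror show` prints the mirror currently in use and `tiup mirror set <mirror-address>` switches to another one, which is the kind of change that resolved the error here.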
