【TiDB Environment】Test

【TiDB Version】

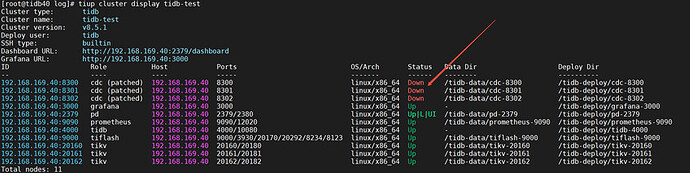

TiDB v8.5.1 single-machine cluster, with TiCDC upgraded to v9.0.0

【Operating System】

Rocky Linux 9.5

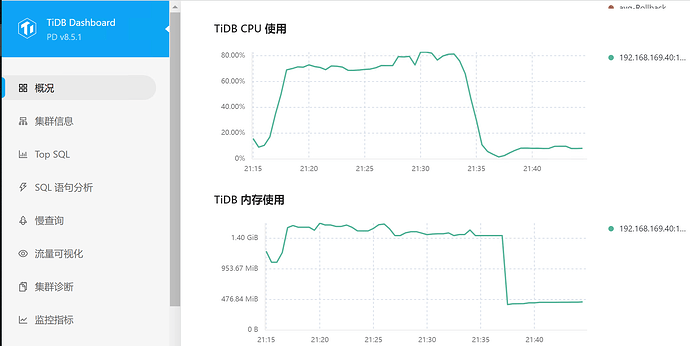

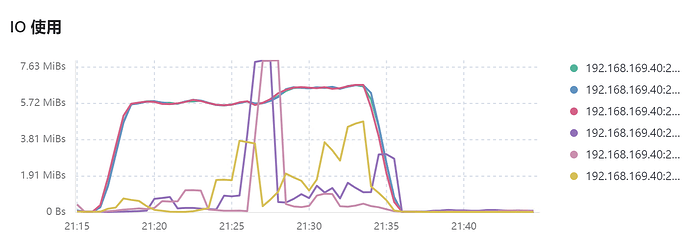

128 GB RAM, 16 CPUs, SSD

【Deployment】

Single-machine cluster

【Steps to Reproduce】What operations led to the problem

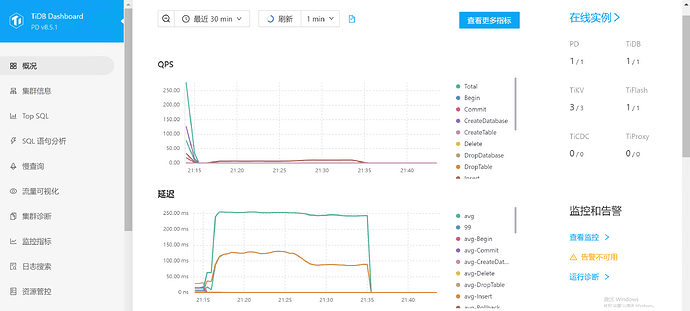

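I configured TiCDC to replicate data from TiDB 8.5.1 to MySQL 5.7.40.

Replication of small batches of data worked normally in testing.

Errors occurred while replicating a large bulk data load generated with: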

`# tiup bench tpcc -H192.168.169.40 -P4000 -D tpcc -Uroot -ptidb --warehouses 40 --parts 4 prepare`

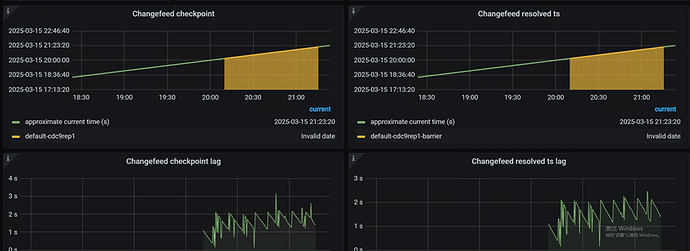

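【Problem Encountered: Symptoms and Impact】

TiCDC went down. When I refreshed the cluster status afterwards, TiCDC kept switching between Up and Down, and after several rounds it stayed Down permanently.

After restarting the TiDB cluster, tiup reported that TiCDC started successfully, but its status is still Down.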

[root@tidb40 log]# tiup cluster start tidb-test

Starting cluster tidb-test...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.40

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.169.40:2379

Start instance 192.168.169.40:2379 success

Starting component tikv

Starting instance 192.168.169.40:20162

Starting instance 192.168.169.40:20160

Starting instance 192.168.169.40:20161

Start instance 192.168.169.40:20162 success

Start instance 192.168.169.40:20160 success

Start instance 192.168.169.40:20161 success

Starting component tidb

Starting instance 192.168.169.40:4000

Start instance 192.168.169.40:4000 success

Starting component tiflash

Starting instance 192.168.169.40:9000

Start instance 192.168.169.40:9000 success

Starting component cdc

Starting instance 192.168.169.40:8302

Starting instance 192.168.169.40:8300

Starting instance 192.168.169.40:8301

Start instance 192.168.169.40:8302 success

Start instance 192.168.169.40:8300 success

Start instance 192.168.169.40:8301 success

Starting component prometheus

Starting instance 192.168.169.40:9090

Start instance 192.168.169.40:9090 success

Starting component grafana

Starting instance 192.168.169.40:3000

Start instance 192.168.169.40:3000 success

Starting component node_exporter

Starting instance 192.168.169.40

Start 192.168.169.40 success

Starting component blackbox_exporter

Starting instance 192.168.169.40

Start 192.168.169.40 success

+ [ Serial ] - UpdateTopology: cluster=tidb-test

Started cluster `tidb-test` successfully

【Copy and Paste the ERROR Logs】

The CDC stderr log shows a panic:

[root@tidb40 log]# pwd

/tidb-deploy/cdc-8302/log

[root@tidb40 log]# more cdc_stderr.log

panic: should not reach here

goroutine 932 [running]:

go.uber.org/zap/zapcore.CheckWriteAction.OnWrite(0x1?, 0x1?, {0x0?, 0x0?, 0xc00b0ceac0?})

go.uber.org/zap@v1.27.0/zapcore/entry.go:196 +0x54

go.uber.org/zap/zapcore.(*CheckedEntry).Write(0xc0144420d0, {0xc0058f5340, 0x1, 0x1})

go.uber.org/zap@v1.27.0/zapcore/entry.go:262 +0x24e

go.uber.org/zap.(*Logger).Panic(0x0?, {0x58e273d?, 0xc0058f5340?}, {0xc0058f5340, 0x1, 0x1})

go.uber.org/zap@v1.27.0/logger.go:285 +0x51

github.com/pingcap/log.Panic({0x58e273d?, 0x5726f40?}, {0xc0058f5340?, 0x407838?, 0x55b11b?})

github.com/pingcap/log@v1.1.1-0.20241212030209-7e3ff8601a2a/global.go:54 +0x85

github.com/pingcap/ticdc/logservice/schemastore.extractTableInfoFuncForDropTable(0x4ea1fc0?, 0xc003499aa0?)

github.com/pingcap/ticdc/logservice/schemastore/persist_storage_ddl_handlers.go:1352 +0xc9

github.com/pingcap/ticdc/logservice/schemastore.(*versionedTableInfoStore).doApplyDDL(0xc008de6960, 0xc00dd8e380)

github.com/pingcap/ticdc/logservice/schemastore/multi_version.go:205 +0x5ad

github.com/pingcap/ticdc/logservice/schemastore.(*versionedTableInfoStore).applyDDL(0xc008de6960, 0xc003b7e360?)

github.com/pingcap/ticdc/logservice/schemastore/multi_version.go:187 +0x1b6

github.com/pingcap/ticdc/logservice/schemastore.(*persistentStorage).handleDDLJob.func1({0xc005922640, 0x4, 0xc00557b818?})

github.com/pingcap/ticdc/logservice/schemastore/persist_storage.go:704 +0x19e

github.com/pingcap/ticdc/logservice/schemastore.iterateEventTablesForSingleTableDDL(0xc00dd8e380, 0xc00b0ceb60)

github.com/pingcap/ticdc/logservice/schemastore/persist_storage_ddl_handlers.go:1208 +0x16e

github.com/pingcap/ticdc/logservice/schemastore.(*persistentStorage).handleDDLJob(0xc0045022c0, 0xc00019a4e0)

github.com/pingcap/ticdc/logservice/schemastore/persist_storage.go:694 +0x3dd

github.com/pingcap/ticdc/logservice/schemastore.(*schemaStore).updateResolvedTsPeriodically.func1()

github.com/pingcap/ticdc/logservice/schemastore/schema_store.go:210 +0x133b

github.com/pingcap/ticdc/logservice/schemastore.(*schemaStore).updateResolvedTsPeriodically(0xc00413c2d0, {0x63cc6a0, 0xc0036ea230})

github.com/pingcap/ticdc/logservice/schemastore/schema_store.go:231 +0xe8

github.com/pingcap/ticdc/logservice/schemastore.(*schemaStore).Run.func2()

github.com/pingcap/ticdc/logservice/schemastore/schema_store.go:140 +0x1f

golang.org/x/sync/errgroup.(*Group).Go.func1()

golang.org/x/sync@v0.10.0/errgroup/errgroup.go:78 +0x50

created by golang.org/x/sync/errgroup.(*Group).Go in goroutine 1035

golang.org/x/sync@v0.10.0/errgroup/errgroup.go:75 +0x96

panic: should not reach here

The TiCDC logs and stderr (error) logs from all 3 CDC nodes are attached below:

8302cdc_stderr.zip (5.7 KB)

8302cdc.zip (739.8 KB)

8301cdc.zip (923.3 KB)

8300cdc_stderr.zip (6.6 KB)

8300cdc.zip (839.9 KB)

8301cdc_stderr.zip (7.0 KB)
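To compare the panics on the three CDC nodes side by side, a small helper like the following could be run on 192.168.169.40. It is a hypothetical sketch (the function name `summarize_cdc_panics` is made up; it is not part of tiup or TiCDC), and it assumes that cdc-8300 and cdc-8301 use the same `/tidb-deploy/cdc-<port>/log/cdc_stderr.log` layout shown above for cdc-8302.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of tiup or TiCDC): print the first panic
# message and the first TiCDC stack frame from each CDC node's stderr log.
# Assumption: every node uses <deploy_dir>/cdc-<port>/log/cdc_stderr.log,
# as seen for cdc-8302; ports 8300-8302 match this cluster's topology.
summarize_cdc_panics() {
  local deploy_dir="${1:-/tidb-deploy}"
  local port log msg frame
  for port in 8300 8301 8302; do
    log="${deploy_dir}/cdc-${port}/log/cdc_stderr.log"
    if [ ! -f "$log" ]; then
      echo "cdc-${port}: no stderr log at ${log}"
      continue
    fi
    # First panic line, e.g. "panic: should not reach here"
    msg=$(grep -m1 '^panic:' "$log")
    # First frame inside the TiCDC code base (skips the zap and pingcap/log frames)
    frame=$(grep -m1 '^github.com/pingcap/ticdc/' "$log")
    echo "cdc-${port}: ${msg:-no panic found} | ${frame:-no TiCDC frame found}"
  done
}

summarize_cdc_panics "${DEPLOY_DIR:-/tidb-deploy}"
```

If the cluster was deployed somewhere other than `/tidb-deploy`, pass that directory as the first argument (or set `DEPLOY_DIR`). For the cdc-8302 log above, the reported frame would be `extractTableInfoFuncForDropTable` in the `schemastore` package.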

【Other Attachments: Screenshots/Logs/Monitoring】