【TiDB Environment】Test

【TiDB Version】v8.5.1

【Operating System】

[root@tidbcluster log]# cat /etc/centos-release

CentOS Linux release 7.9.2009 (Core)

[root@tidbcluster log]# hostnamectl

Static hostname: tidbcluster

Icon name: computer-vm

Chassis: vm

Machine ID: 17b2ead1d66b43f3b633fb09f016042e

Boot ID: eaa877c9baa243f280cf66a8e02f4055

Virtualization: vmware

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-1160.118.1.el7.x86_64

Architecture: x86-64

【Deployment Method】Cloud deployment (which cloud) / on-premises machines (machine specs, disk type)

VMware platform, a single VM: 16 CPUs, 128 GB memory, SAS SSD.

Target (downstream) database: MySQL 5.7.40.

The cluster was deployed by following the official guide "Simulate production deployment on a single machine":

https://docs.pingcap.com/zh/tidb/stable/quick-start-with-tidb/

The TiCDC new architecture was deployed by following:

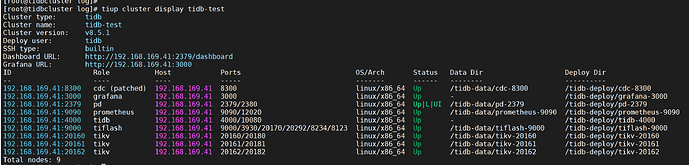

The deployed cluster status is as follows:

【TiCDC Upgrade Process】

The upgrade completed without errors:

[root@tidbcluster soft]# tiup cluster patch tidb-test ./cdc-v9.0.0-alpha-nightly-linux-amd64.tar.gz -R cdc --overwrite

Will patch the cluster tidb-test with package path is ./cdc-v9.0.0-alpha-nightly-linux-amd64.tar.gz, nodes: , roles: cdc.

Do you want to continue? [y/N]:(default=N) y

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [Parallel] - UserSSH: user=tidb, host=192.168.169.41

+ [ Serial ] - BackupComponent: component=cdc, currentVersion=v8.5.1, remote=192.168.169.41:/tidb-deploy/cdc-8300

+ [ Serial ] - InstallPackage: srcPath=./cdc-v9.0.0-alpha-nightly-linux-amd64.tar.gz, remote=192.168.169.41:/tidb-deploy/cdc-8300

+ [ Serial ] - UpgradeCluster

Upgrading component cdc

Restarting instance 192.168.169.41:8300

Restart instance 192.168.169.41:8300 success

Stopping component node_exporter

Stopping instance 192.168.169.41

Stop 192.168.169.41 success

Stopping component blackbox_exporter

Stopping instance 192.168.169.41

Stop 192.168.169.41 success

Starting component node_exporter

Starting instance 192.168.169.41

Start 192.168.169.41 success

Starting component blackbox_exporter

Starting instance 192.168.169.41

Start 192.168.169.41 success

Modify the TiCDC configuration to enable the new architecture

Edit the cluster configuration and add the following:

`tiup cluster edit-config <cluster-name>`

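In the vim session that opens, add `newarch: true` under the `config` key of the CDC instance in `cdc_servers`, so the structure is `cdc_servers` > `config` > `newarch: true`.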

Reload the configuration:

[root@tidbcluster soft]# tiup cluster reload tidb-test

Confirm that the configuration has taken effect:

[root@tidbcluster soft]# tiup cluster show-config tidb-test

………………………………

cdc_servers:

- host: 192.168.169.41

ssh_port: 22

patched: true

port: 8300

deploy_dir: /tidb-deploy/cdc-8300

data_dir: /tidb-data/cdc-8300

log_dir: /tidb-deploy/cdc-8300/log

gc-ttl: 86400

ticdc_cluster_id: ""

config:

newarch: true

arch: amd64

os: linux

Check the CDC version:

[root@tidbcluster bin]# pwd

/tidb-deploy/cdc-8300/bin

[root@tidbcluster bin]# ./cdc version

Release Version: v9.0.0-alpha-143-gfe0e19a

Git Commit Hash: fe0e19ac8286a77e02b51707cbaa82a364f56e27

Git Branch: HEAD

UTC Build Time: 2025-03-11 01:51:50

Go Version: go1.23.7

Failpoint Build: false

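【TiCDC Changefeed Configuration】

Both changefeed creation commands below ended up with the same problem.

Original command used to create the changefeed: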

# ./cdc cli changefeed create --pd=http://192.168.169.41:2379 --sink-uri="mysql://root:Abcd_1234@192.168.169.121:3307/" --changefeed-id="cdc9rep1" --sort-engine="unified"

After noticing the problem, I removed the changefeed:

# ./cdc cli changefeed remove --server=http://192.168.169.41:8300 --changefeed-id cdc9rep1

Then I recreated it (this time without `--sort-engine`):

# ./cdc cli changefeed create --pd=http://192.168.169.41:2379 --sink-uri="mysql://root:Abcd_1234@192.168.169.121:3307/" --changefeed-id="cdc9rep2"

Querying the changefeed status shows it as normal, with no errors:

[root@tidbcluster bin]# ./cdc cli changefeed query --pd=http://192.168.169.41:2379 --changefeed-id=cdc9rep2

{

"upstream_id": 7475530864378024323,

"namespace": "default",

"id": "cdc9rep2",

"sink_uri": "mysql://root:xxxxx@192.168.169.121:3307/",

"config": {

"memory_quota": 1073741824,

"case_sensitive": false,

"force_replicate": false,

"ignore_ineligible_table": false,

"check_gc_safe_point": true,

"enable_sync_point": false,

"enable_table_monitor": false,

"bdr_mode": false,

"sync_point_interval": 600000000000,

"sync_point_retention": 86400000000000,

"filter": {

"rules": [

"*.*"

]

},

"mounter": {

"worker_num": 16

},

"sink": {

"csv": {

"delimiter": ",",

"quote": "\"",

"null": "\\N",

"include_commit_ts": false,

"binary_encoding_method": "base64",

"output_old_value": false,

"output_handle_key": false

},

"encoder_concurrency": 32,

"terminator": "\r\n",

"date_separator": "day",

"enable_partition_separator": true,

"enable_kafka_sink_v2": false,

"only_output_updated_columns": false,

"delete_only_output_handle_key_columns": false,

"content_compatible": false,

"advance_timeout": 150,

"send_bootstrap_interval_in_sec": 120,

"send_bootstrap_in_msg_count": 10000,

"send_bootstrap_to_all_partition": true,

"send-all-bootstrap-at-start": false,

"debezium_disable_schema": false,

"debezium": {

"output_old_value": true

},

"open": {

"output_old_value": true

}

},

"consistent": {

"level": "none",

"max_log_size": 64,

"flush_interval": 2000,

"meta_flush_interval": 200,

"encoding_worker_num": 16,

"flush_worker_num": 8,

"use_file_backend": false,

"memory_usage": {

"memory_quota_percentage": 50

}

},

"scheduler": {

"enable_table_across_nodes": false,

"region_threshold": 100000,

"write_key_threshold": 0

},

"integrity": {

"integrity_check_level": "none",

"corruption_handle_level": "warn"

},

"changefeed_error_stuck_duration": 1800000000000,

"synced_status": {

"synced_check_interval": 300,

"checkpoint_interval": 15

}

},

"create_time": "2025-03-14 19:40:13.007",

"start_ts": 456642373350588418,

"resolved_ts": 456642409067708422,

"target_ts": 0,

"checkpoint_tso": 456642409067708422,

"checkpoint_time": "2025-03-14 19:42:29.239",

"state": "normal",

"creator_version": "v9.0.0-alpha-143-gfe0e19a"

}

[root@tidbcluster bin]# ./cdc cli changefeed list --pd=http://192.168.169.41:2379

[

{

"id": "cdc9rep2",

"namespace": "default",

"summary": {

"state": "normal",

"tso": 456642411177705474,

"checkpoint": "2025-03-14 19:42:37.288",

"error": null

}

}

]
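The `start_ts`, `checkpoint_tso`, `resolved_ts`, and `tso` values above are TiDB TSOs: the high bits hold a physical timestamp in milliseconds since the Unix epoch and the low 18 bits hold a logical counter. A minimal bash sketch for decoding them follows; the function names are hypothetical helpers (not part of TiCDC or TiUP), and `tso_to_utc` assumes GNU `date`, as shipped with CentOS 7. Decoding the TSOs makes it easy to confirm that the checkpoint keeps advancing even though table data never reaches MySQL.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for decoding TiDB TSOs.
# Layout: TSO = (physical_ms << 18) | logical, with 18 logical bits.

# Physical part: milliseconds since the Unix epoch.
tso_physical_ms() { echo $(( $1 >> 18 )); }

# Logical part: the low 18 bits.
tso_logical() { echo $(( $1 & ((1 << 18) - 1) )); }

# Format a TSO as a UTC timestamp with milliseconds (requires GNU date).
tso_to_utc() {
  local ms
  ms=$(tso_physical_ms "$1")
  printf '%s.%03d UTC\n' \
    "$(date -u -d "@$(( ms / 1000 ))" '+%Y-%m-%d %H:%M:%S')" \
    $(( ms % 1000 ))
}

# Difference in milliseconds between the physical parts of two TSOs.
tso_diff_ms() { echo $(( $(tso_physical_ms "$1") - $(tso_physical_ms "$2") )); }
```

For example, `tso_to_utc 456642409067708422` prints `2025-03-14 11:42:29.239 UTC`. That matches `checkpoint_time` (19:42:29.239) shifted by 8 hours, so the host appears to be on UTC+8. `tso_diff_ms 456642411177705474 456642409067708422` prints `8049`, the roughly 8-second advance between the `query` and `list` outputs.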

【Problem Encountered: Symptoms and Impact】

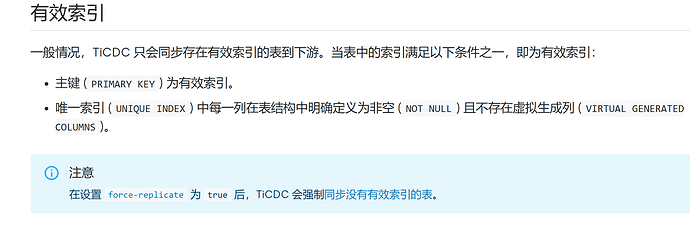

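With the new TiCDC architecture, `CREATE DATABASE` statements are replicated, but tables created in those databases, and the data inserted into them, are not.

Example:

1. On the upstream TiDB, run `create database t1;`. The `t1` schema appears on the downstream MySQL immediately.

2. On the upstream, create table `t11` in `t1`. `t11` is not replicated to the downstream.

3. Manually create the same table `t11` on the downstream, then insert data into `t11` on the upstream. The data does not appear on the downstream.

4. On the upstream, create another schema `t2`. `t2` quickly appears on the downstream.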

【Resource Configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page

【Other Attachments: Screenshots/Logs/Monitoring】

TiCDC log:

cdc日志.log (225.1 KB)