【TiDB Environment】

[tidb@rocky ~]$ uname -a

Linux rocky 5.14.0-503.14.1.el9_5.x86_64 #1 SMP PREEMPT_DYNAMIC Fri Nov 15 12:04:32 UTC 2024 x86_64 x86_64 x86_64 GNU/Linux

【TiDB Version】 8.5.1

【Steps to Reproduce】 cluster deploy

【Problem: Symptoms and Impact】 Deployment always fails with an SSH error, even though passwordless SSH is working normally.

【Resource Configuration】 Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

【Copy and Paste the ERROR Log】

[tidb@rocky ~]$ tiup cluster deploy tidb-test v8.5.1 ./topology_v2.yaml

- Detect CPU Arch Name

- Detecting node 192.168.58.142 Arch info … Done

- Detecting node 192.168.58.143 Arch info … Done

- Detecting node 192.168.58.144 Arch info … Done

- Detect CPU OS Name

- Detecting node 192.168.58.142 OS info … Done

- Detecting node 192.168.58.143 OS info … Done

- Detecting node 192.168.58.144 OS info … Done

Please confirm your topology:

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v8.5.1

Role Host Ports OS/Arch Directories

pd 192.168.58.142 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

pd 192.168.58.143 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

pd 192.168.58.144 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

tikv 192.168.58.142 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tikv 192.168.58.143 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tikv 192.168.58.144 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tidb 192.168.58.142 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000

tidb 192.168.58.143 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000

tidb 192.168.58.144 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000

tiflash 192.168.58.142 9000/3930/20170/20292/8234/8123 linux/x86_64 /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000

prometheus 192.168.58.142 9090/12020 linux/x86_64 /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090

grafana 192.168.58.142 3000 linux/x86_64 /tidb-deploy/grafana-3000

alertmanager 192.168.58.142 9093/9094 linux/x86_64 /tidb-deploy/alertmanager-9093,/tidb-data/alertmanager-9093

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) Y

- Generate SSH keys … Done

- Download TiDB components

- Download pd:v8.5.1 (linux/amd64) … Done

- Download tikv:v8.5.1 (linux/amd64) … Done

- Download tidb:v8.5.1 (linux/amd64) … Done

- Download tiflash:v8.5.1 (linux/amd64) … Done

- Download prometheus:v8.5.1 (linux/amd64) … Done

- Download grafana:v8.5.1 (linux/amd64) … Done

- Download alertmanager: (linux/amd64) … Done

- Download node_exporter: (linux/amd64) … Done

- Download blackbox_exporter: (linux/amd64) … Done

- Initialize target host environments

- Prepare 192.168.58.144:22 … Done

- Prepare 192.168.58.142:22 … Done

- Prepare 192.168.58.143:22 … Done

- Deploy TiDB instance

- Copy pd → 192.168.58.142 … Done

- Copy pd → 192.168.58.143 … Done

- Copy pd → 192.168.58.144 … Done

- Copy tikv → 192.168.58.142 … Done

- Copy tikv → 192.168.58.143 … Done

- Copy tikv → 192.168.58.144 … Done

- Copy tidb → 192.168.58.142 … Done

- Copy tidb → 192.168.58.143 … Done

- Copy tidb → 192.168.58.144 … Done

- Copy tiflash → 192.168.58.142 … Done

- Copy prometheus → 192.168.58.142 … Done

- Copy grafana → 192.168.58.142 … Done

- Copy alertmanager → 192.168.58.142 … Done

- Deploy node_exporter → 192.168.58.144 … Done

- Deploy node_exporter → 192.168.58.142 … Done

- Deploy node_exporter → 192.168.58.143 … Done

- Deploy blackbox_exporter → 192.168.58.143 … Done

- Deploy blackbox_exporter → 192.168.58.144 … Done

- Deploy blackbox_exporter → 192.168.58.142 … Done

- Copy certificate to remote host

- Init instance configs

- Generate config pd → 192.168.58.142:2379 … Done

- Generate config pd → 192.168.58.143:2379 … Done

- Generate config pd → 192.168.58.144:2379 … Done

- Generate config tikv → 192.168.58.142:20160 … Done

- Generate config tikv → 192.168.58.143:20160 … Done

- Generate config tikv → 192.168.58.144:20160 … Done

- Generate config tidb → 192.168.58.142:4000 … Done

- Generate config tidb → 192.168.58.143:4000 … Done

- Generate config tidb → 192.168.58.144:4000 … Done

- Generate config tiflash → 192.168.58.142:9000 … Done

- Generate config prometheus → 192.168.58.142:9090 … Done

- Generate config grafana → 192.168.58.142:3000 … Done

- Generate config alertmanager → 192.168.58.142:9093 … Done

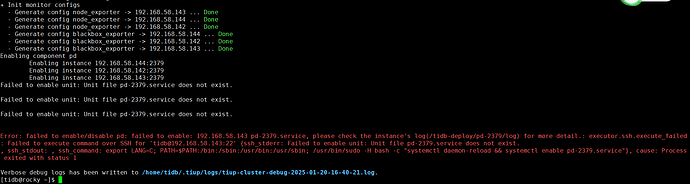

- Init monitor configs

- Generate config node_exporter → 192.168.58.143 … Done

- Generate config node_exporter → 192.168.58.144 … Done

- Generate config node_exporter → 192.168.58.142 … Done

- Generate config blackbox_exporter → 192.168.58.144 … Done

- Generate config blackbox_exporter → 192.168.58.142 … Done

- Generate config blackbox_exporter → 192.168.58.143 … Done

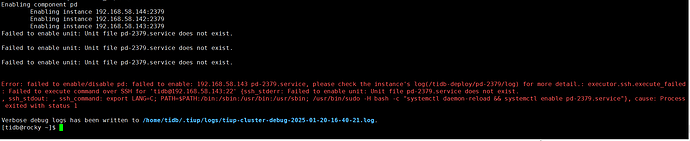

Enabling component pd

Enabling instance 192.168.58.144:2379

Enabling instance 192.168.58.142:2379

Enabling instance 192.168.58.143:2379

Failed to enable unit: Unit file pd-2379.service does not exist.

Failed to enable unit: Unit file pd-2379.service does not exist.

Failed to enable unit: Unit file pd-2379.service does not exist.

Error: failed to enable/disable pd: failed to enable: 192.168.58.143 pd-2379.service, please check the instance's log(/tidb-deploy/pd-2379/log) for more detail.: executor.ssh.execute_failed: Failed to execute command over SSH for 'tidb@192.168.58.143:22' {ssh_stderr: Failed to enable unit: Unit file pd-2379.service does not exist.

, ssh_stdout: , ssh_command: export LANG=C; PATH=$PATH:/bin:/sbin:/usr/bin:/usr/sbin; /usr/bin/sudo -H bash -c "systemctl daemon-reload && systemctl enable pd-2379.service"}, cause: Process exited with status 1

Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2025-01-20-16-40-21.log.
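The stderr in the error above comes from `systemctl enable` on the remote host, not from the SSH connection itself, so one possible next step is to check on each PD host whether the unit file is actually there. The following is only a minimal sketch: `check_unit` is a hypothetical helper, and the default directory `/etc/systemd/system` is an assumption about where the unit file is expected, which the log does not confirm.

```shell
# Hypothetical helper (not part of tiup): report whether a systemd unit
# file exists in a given directory.
#   $1 = unit name, e.g. pd-2379.service (taken from the error above)
#   $2 = directory to look in; defaults to /etc/systemd/system, which is
#        an ASSUMPTION about where the unit file should have been placed
check_unit() {
  unit="$1"
  unit_dir="${2:-/etc/systemd/system}"
  path="$unit_dir/$unit"

  if [ -f "$path" ]; then
    echo "present: $path"
    return 0
  fi

  echo "missing: $path"
  return 1
}
```

For example, running `check_unit pd-2379.service` on 192.168.58.143 would print either `present: ...` or `missing: ...`. If the file turns out to be present while `systemctl` still reports that it does not exist, the file's ownership, permissions, or security labels may be worth looking at, but that is a guess and not something the log shows.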

【Other Attachments: Screenshots/Logs/Monitoring】

topology_v2.yaml (10.7 KB)