After scaling in from 3 TiKV nodes to 1 TiKV node, one store has stayed in Pending Offline status for 3-4 days.

The log is as follows:

[root@zz-pw-db-4 ~]# tiup cluster scale-in tidb-test --node 10.188.110.31:20161

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster scale-in tidb-test --node 10.188.110.31:20161

This operation will delete the 10.188.110.31:20161 nodes in tidb-test and all their data.

Do you want to continue? [y/N]:(default=N) y

The component [tikv] will become tombstone, maybe exists in several minutes or hours, after that you can use the prune command to clean it

Do you want to continue? [y/N]:(default=N) y

Scale-in nodes…

- [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

- [Parallel] - UserSSH: user=tidb, host=10.188.110.31

- [Parallel] - UserSSH: user=tidb, host=10.188.110.31

- [Parallel] - UserSSH: user=tidb, host=10.188.110.31

- [Parallel] - UserSSH: user=tidb, host=10.188.110.31

- [ Serial ] - ClusterOperate: operation=DestroyOperation, options={Roles: Nodes:[10.188.110.31:20161] Force:false SSHTimeout:5 OptTimeout:120 APITimeout:600 IgnoreConfigCheck:false NativeSSH:false SSHType: Concurrency:5 SSHProxyHost: SSHProxyPort:22 SSHProxyUser:root SSHProxyIdentity:/root/.ssh/id_rsa SSHProxyUsePassword:false SSHProxyTimeout:5 SSHCustomScripts:{BeforeRestartInstance:{Raw:} AfterRestartInstance:{Raw:}} CleanupData:false CleanupLog:false CleanupAuditLog:false RetainDataRoles: RetainDataNodes: DisplayMode:default Operation:StartOperation}

The component `[tikv]` will become tombstone, maybe exists in several minutes or hours, after that you can use the prune command to clean it

- [ Serial ] - UpdateMeta: cluster=tidb-test, deleted=`''`

- [ Serial ] - UpdateTopology: cluster=tidb-test

- Refresh instance configs

- Generate config pd -> 10.188.110.31:2379 ... Done

- Generate config tikv -> 10.188.110.31:20160 ... Done

- Generate config tidb -> 10.188.110.31:4000 ... Done

- Reload prometheus and grafana

Scaled cluster `tidb-test` in successfully

[root@zz-pw-db-4 ~]#

The Region distribution is as follows:

mysql> select t1.store_id,sum(case when t1.is_leader=1 then 1 else 0 end) leader_cnt,count(t1.peer_id) region_cnt from INFORMATION_SCHEMA.tikv_region_peers t1 group by t1.store_id;

+----------+------------+------------+

| store_id | leader_cnt | region_cnt |

+----------+------------+------------+

| 2 | 96 | 96 |

| 1 | 0 | 3 |

+----------+------------+------------+

2 rows in set (0.09 sec)

mysql>

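With 3 replicas you need at least 3 TiKV nodes; otherwise there is nowhere to place the replacement replicas. You have to scale out first and then scale in.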
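The rule in this reply can be written as a quick pre-check: after the scale-in, the number of remaining TiKV stores should be at least `max-replicas`. A minimal sketch in plain shell; the function name `can_scale_in` and its arguments are made up for illustration and are not part of tiup or pd-ctl.

```shell
# Hypothetical helper: check whether a scale-in leaves enough TiKV stores
# to hold every replica.
# Usage: can_scale_in <current_stores> <stores_to_remove> <max_replicas>
can_scale_in() {
  remaining=$(( $1 - $2 ))
  if [ "$remaining" -ge "$3" ]; then
    echo "ok: $remaining store(s) remain for $3 replica(s)"
    return 0
  fi
  echo "blocked: $remaining store(s) remain but $3 replica(s) are required"
  return 1
}
```

For the cluster in this thread, `can_scale_in 3 2 3` reports the scale-in as blocked, while `can_scale_in 3 2 1` (after `max-replicas` is set to 1) reports it as ok.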

The steps I took are as follows:

(1) Use pd-ctl to change the replica count from 3 to 1

tiup ctl:v7.5.0 pd -i -u http://127.0.0.1:2379

» config show replication

{

"max-replicas": 3,

"location-labels": "host",

"strictly-match-label": "false",

"enable-placement-rules": "true",

"enable-placement-rules-cache": "false",

"isolation-level": ""

}

» config set max-replicas 1

Success!

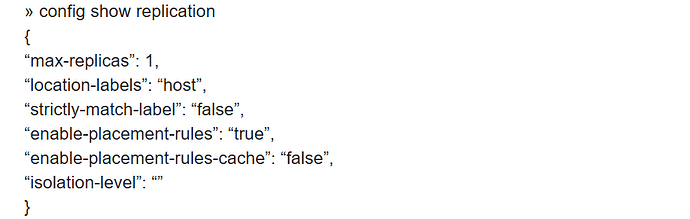

» config show replication

{

"max-replicas": 1,

"location-labels": "host",

"strictly-match-label": "false",

"enable-placement-rules": "true",

"enable-placement-rules-cache": "false",

"isolation-level": ""

}

(2) Scale in the TiKV nodes

tiup cluster scale-in tidb-test --node 10.188.110.31:20162

tiup cluster scale-in tidb-test --node 10.188.110.31:20161

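(3) Of the two nodes,

20162 finished scaling in quickly, but 20161 has been stuck in this state for 3-4 days.

Is there any way to fix this?

The default is 3 replicas, but I have already changed it to 1 replica.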

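Check the PD monitoring to see whether scheduling is working normally. From what you posted earlier, only 3 Regions have not been migrated away. If that does not help, add an operator manually with pd-ctl.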
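For the "add an operator manually" suggestion, here is a minimal sketch that only prints the pd-ctl commands for review instead of running them. It assumes the stuck store has ID 1 (as in the query output earlier) and that the Region IDs have already been looked up, for example with `region store 1` in pd-ctl. The Region IDs 101, 102 and 103 are placeholders, and `print_remove_peer_cmds` is a made-up helper name.

```shell
# Hypothetical dry-run helper: print one `operator add remove-peer`
# command per Region that still has a peer on the store going offline.
# Usage: print_remove_peer_cmds <pd_url> <store_id> <region_id>...
print_remove_peer_cmds() {
  pd_url=$1
  store_id=$2
  shift 2
  for region_id in "$@"; do
    # pd-ctl syntax: operator add remove-peer <region_id> <from_store_id>
    echo "tiup ctl:v7.5.0 pd -u $pd_url operator add remove-peer $region_id $store_id"
  done
}

# Placeholder Region IDs; replace them with the real ones before use.
print_remove_peer_cmds http://127.0.0.1:2379 1 101 102 103
```

Printing the commands first makes it easy to confirm the Region and store IDs before anything is sent to PD.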

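How did you change it, and did it take effect?

show config where name like 'replication.max-replicas'

Run this to check.

What about restarting this TiKV instance process? Then check whether the PD server log and this TiKV's log contain any errors.

Aren't there still 3 Regions left on it?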

store weight 1 0 0

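Set this: change both the leader weight and the Region weight of this store ID to 0, and the Regions will be moved away automatically.

It is possible that Regions keep being transferred onto this node.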

[root@zz-pw-db-4 ~]# tiup ctl:v7.5.0 pd -i -u http://127.0.0.1:2379

Starting component ctl: /root/.tiup/components/ctl/v7.5.0/ctl pd -i -u http://127.0.0.1:2379

» store weight 1 0 0

Success! The store’s label is updated."

» exit

[root@zz-pw-db-4 ~]#

Running this did not help either.

After I restarted this TiKV instance, the problem was resolved.

[root@zz-pw-db-4 ~]# tiup cluster stop tidb-test -N 10.188.110.31:20161

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster stop tidb-test -N 10.188.110.31:20161

Will stop the cluster tidb-test with nodes: 10.188.110.31:20161, roles: .

Do you want to continue? [y/N]:(default=N) y

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [ Serial ] - StopCluster

Stopping component tikv

Stopping instance 10.188.110.31

Stop tikv 10.188.110.31:20161 success

Stopping component node_exporter

Stopping component blackbox_exporter

Stopped cluster `tidb-test` successfully

[root@zz-pw-db-4 ~]#

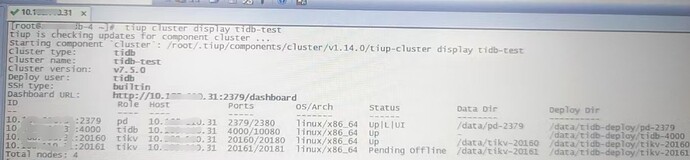

[root@zz-pw-db-4 ~]# tiup cluster display tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v7.5.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://10.188.110.31:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

10.188.110.31:2379 pd 10.188.110.31 2379/2380 linux/x86_64 Up|L|UI /data/pd-2379 /data/tidb-deploy/pd-2379

10.188.110.31:4000 tidb 10.188.110.31 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

10.188.110.31:20160 tikv 10.188.110.31 20160/20180 linux/x86_64 Up /data/tikv-20160 /data/tidb-deploy/tikv-20160

10.188.110.31:20161 tikv 10.188.110.31 20161/20181 linux/x86_64 Pending Offline /data/tikv-20161 /data/tidb-deploy/tikv-20161

Total nodes: 4

[root@zz-pw-db-4 ~]# tiup cluster start tidb-test -N 10.188.110.31:20161

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster start tidb-test -N 10.188.110.31:20161

Starting cluster tidb-test...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [ Serial ] - StartCluster

Starting component tikv

Starting instance 10.188.110.31:20161

Start instance 10.188.110.31:20161 success

Starting component node_exporter

Starting instance 10.188.110.31

Start 10.188.110.31 success

Starting component blackbox_exporter

Starting instance 10.188.110.31

Start 10.188.110.31 success

+ [ Serial ] - UpdateTopology: cluster=tidb-test

Started cluster `tidb-test` successfully

[root@zz-pw-db-4 ~]# tiup cluster display tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v7.5.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://10.188.110.31:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

10.188.110.31:2379 pd 10.188.110.31 2379/2380 linux/x86_64 Up|L|UI /data/pd-2379 /data/tidb-deploy/pd-2379

10.188.110.31:4000 tidb 10.188.110.31 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

10.188.110.31:20160 tikv 10.188.110.31 20160/20180 linux/x86_64 Up /data/tikv-20160 /data/tidb-deploy/tikv-20160

10.188.110.31:20161 tikv 10.188.110.31 20161/20181 linux/x86_64 Pending Offline /data/tikv-20161 /data/tidb-deploy/tikv-20161

Total nodes: 4

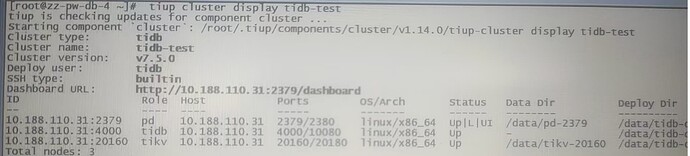

[root@zz-pw-db-4 ~]# tiup cluster display tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v7.5.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://10.188.110.31:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

10.188.110.31:2379 pd 10.188.110.31 2379/2380 linux/x86_64 Up|L|UI /data/pd-2379 /data/tidb-deploy/pd-2379

10.188.110.31:4000 tidb 10.188.110.31 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

10.188.110.31:20160 tikv 10.188.110.31 20160/20180 linux/x86_64 Up /data/tikv-20160 /data/tidb-deploy/tikv-20160

10.188.110.31:20161 tikv 10.188.110.31 20161/20181 linux/x86_64 Tombstone /data/tikv-20161 /data/tidb-deploy/tikv-20161

Total nodes: 4

There are some nodes can be pruned:

Nodes: [10.188.110.31:20161]

You can destroy them with the command: `tiup cluster prune tidb-test`

[root@zz-pw-db-4 ~]#
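The change from Pending Offline to Tombstone in the display output above can also be checked from a script rather than by eye. A minimal sketch, assuming the column layout shown in this thread (Status sits between OS/Arch and the last two columns); `node_status` is a made-up helper name, not a tiup command.

```shell
# Hypothetical helper: read `tiup cluster display` output on stdin and
# print the Status column for one node ID.
# Status may contain a space ("Pending Offline"), so join every field
# between OS/Arch (field 5) and the last two fields (Data Dir, Deploy Dir).
node_status() {
  awk -v id="$1" '
    $1 == id {
      status = $6
      for (i = 7; i <= NF - 2; i++) status = status " " $i
      print status
    }'
}
```

Usage would be along the lines of `tiup cluster display tidb-test | node_status 10.188.110.31:20161`; once it prints `Tombstone`, the node can be cleaned up with `tiup cluster prune tidb-test`.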

mysql> select t1.store_id,sum(case when t1.is_leader=1 then 1 else 0 end) leader_cnt,count(t1.peer_id) region_cnt from INFORMATION_SCHEMA.tikv_region_peers t1 group by t1.store_id;

+----------+------------+------------+

| store_id | leader_cnt | region_cnt |

+----------+------------+------------+

| 2 | 91 | 91 |

+----------+------------+------------+

1 row in set (0.00 sec)

mysql>

[root@zz-pw-db-4 ~]# tiup cluster prune tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster prune tidb-test

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [Parallel] - UserSSH: user=tidb, host=10.188.110.31

+ [ Serial ] - FindTomestoneNodes

Will destroy these nodes: [10.188.110.31:20161]

Do you confirm this action? [y/N]:(default=N) y

Start destroy Tombstone nodes: [10.188.110.31:20161] ...

+ [ Serial ] - ClusterOperate: operation=ScaleInOperation, options={Roles:[] Nodes:[] Force:true SSHTimeout:5 OptTimeout:120 APITimeout:600 IgnoreConfigCheck:true NativeSSH:false SSHType: Concurrency:5 SSHProxyHost: SSHProxyPort:22 SSHProxyUser:root SSHProxyIdentity:/root/.ssh/id_rsa SSHProxyUsePassword:false SSHProxyTimeout:5 SSHCustomScripts:{BeforeRestartInstance:{Raw:} AfterRestartInstance:{Raw:}} CleanupData:false CleanupLog:false CleanupAuditLog:false RetainDataRoles:[] RetainDataNodes:[] DisplayMode:default Operation:StartOperation}

Stopping component tikv

Stopping instance 10.188.110.31

Stop tikv 10.188.110.31:20161 success

Destroying component tikv

Destroying instance 10.188.110.31

Destroy 10.188.110.31 finished

- Destroy tikv paths: [/data/tikv-20161 /data/tidb-deploy/tikv-20161/log /data/tidb-deploy/tikv-20161 /etc/systemd/system/tikv-20161.service]

+ [ Serial ] - UpdateMeta: cluster=tidb-test, deleted=`'10.188.110.31:20161'`

+ [ Serial ] - UpdateTopology: cluster=tidb-test

+ Refresh instance configs

- Generate config pd -> 10.188.110.31:2379 ... Done

- Generate config tikv -> 10.188.110.31:20160 ... Done

- Generate config tidb -> 10.188.110.31:4000 ... Done

+ Reload prometheus and grafana

Destroy success

[root@zz-pw-db-4 ~]# tiup cluster display tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.14.0/tiup-cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v7.5.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://10.188.110.31:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

10.188.110.31:2379 pd 10.188.110.31 2379/2380 linux/x86_64 Up|L|UI /data/pd-2379 /data/tidb-deploy/pd-2379

10.188.110.31:4000 tidb 10.188.110.31 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

10.188.110.31:20160 tikv 10.188.110.31 20160/20180 linux/x86_64 Up /data/tikv-20160 /data/tidb-deploy/tikv-20160

Total nodes: 3

[root@zz-pw-db-4 ~]#

Then it was probably just stuck.

Why did a restart fix it, though?
