【TiDB Environment】Production

【TiDB Version】7.5.4

At the moment, the drainer in this cluster only writes binlog to local files.

I've run into two problems.

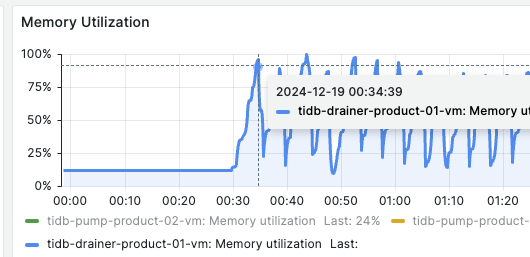

1. According to the log, after drainer hits a "table does not exist" error, it restarts roughly every 4 minutes because its memory fills up.

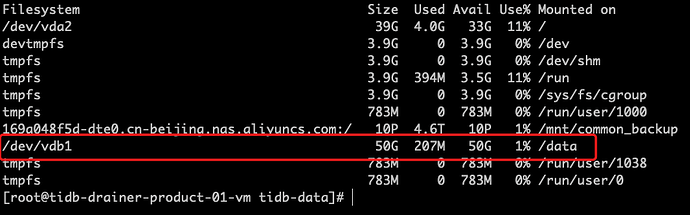

The drainer node has 4 CPU cores and 8 GB of memory, plus a dedicated 50 GB data disk.

[2024/12/19 00:30:03.876 +08:00] [ERROR] [server.go:311] ["syncer exited abnormal"] [error="skipDMLEvent failed: not found table id: 117727"] [errorVerbose="not found table id: 117727\ngithub.com/pingcap/tidb-binlog/drainer.skipDMLEvent\n\t/workspace/source/tidb-binlog/drainer/syncer.go:601\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).run\n\t/workspace/source/tidb-binlog/drainer/syncer.go:412\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).Start\n\t/workspace/source/tidb-binlog/drainer/syncer.go:152\ngithub.com/pingcap/tidb-binlog/drainer.(*Server).Start.func5\n\t/workspace/source/tidb-binlog/drainer/server.go:310\ngithub.com/pingcap/tidb-binlog/drainer.(*taskGroup).start.func1\n\t/workspace/source/tidb-binlog/drainer/util.go:82\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650\nskipDMLEvent failed"]

[2024/12/19 00:30:03.876 +08:00] [INFO] [util.go:79] [Exit] [name=syncer]

[2024/12/19 00:30:03.876 +08:00] [INFO] [server.go:485] ["begin to close drainer server"]

[2024/12/19 00:30:03.880 +08:00] [INFO] [server.go:450] ["has already update status"] [id=192.168.3.212:8249]

[2024/12/19 00:30:03.880 +08:00] [INFO] [server.go:489] ["commit status done"]

[2024/12/19 00:30:03.880 +08:00] [INFO] [collector.go:136] ["publishBinlogs quit"]

[2024/12/19 00:30:03.880 +08:00] [INFO] [util.go:79] [Exit] [name=heartbeat]

[2024/12/19 00:30:03.880 +08:00] [INFO] [pump.go:79] ["pump is closing"] [id=192.168.3.210:8250]

[2024/12/19 00:30:03.880 +08:00] [INFO] [pump.go:79] ["pump is closing"] [id=192.168.3.209:8250]

[2024/12/19 00:30:03.880 +08:00] [INFO] [util.go:79] [Exit] [name=collect]

[2024/12/19 00:30:03.880 +08:00] [ERROR] [main.go:69] ["start drainer server failed"] [error="skipDMLEvent failed: not found table id: 117727"] [errorVerbose="not found table id: 117727\ngithub.com/pingcap/tidb-binlog/drainer.skipDMLEvent\n\t/workspace/source/tidb-binlog/drainer/syncer.go:601\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).run\n\t/workspace/source/tidb-binlog/drainer/syncer.go:412\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).Start\n\t/workspace/source/tidb-binlog/drainer/syncer.go:152\ngithub.com/pingcap/tidb-binlog/drainer.(*Server).Start.func5\n\t/workspace/source/tidb-binlog/drainer/server.go:310\ngithub.com/pingcap/tidb-binlog/drainer.(*taskGroup).start.func1\n\t/workspace/source/tidb-binlog/drainer/util.go:82\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650\nskipDMLEvent failed"]

[2024/12/19 00:30:19.111 +08:00] [INFO] [version.go:50] ["Welcome to Drainer"] ["Release Version"=v7.5.3] ["Git Commit Hash"=35b674dea9c4f3184a7e86800ab83b9c5504fc3d] ["Build TS"="2024-06-13 04:38:28"] ["Go Version"=go1.21.10] ["Go OS/Arch"=linux/amd64]

[2024/12/19 00:30:19.111 +08:00] [INFO] [main.go:46] ["start drainer..."] [config="{\"log-level\":\"info\",\"node-id\":\"192.168.3.212:8249\",\"addr\":\"http://192.168.3.212:8249\",\"advertise-addr\":\"http://192.168.3.212:8249\",\"data-dir\":\"/data/tidb-data/drainer-8249\",\"detect-interval\":5,\"pd-urls\":\"http://192.168.3.143:2379,http://192.168.3.144:2379,http://192.168.3.145:2379\",\"log-file\":\"/opt/app/tidb-deploy/drainer-8249/log/drainer.log\",\"initial-commit-ts\":-1,\"sycner\":{\"sql-mode\":null,\"ignore-txn-commit-ts\":null,\"ignore-schemas\":\"INFORMATION_SCHEMA,PERFORMANCE_SCHEMA,mysql\",\"ignore-table\":null,\"txn-batch\":20,\"loopback-control\":false,\"sync-ddl\":true,\"channel-id\":0,\"worker-count\":1,\"to\":{\"host\":\"\",\"user\":\"\",\"password\":\"\",\"security\":{\"ssl-ca\":\"\",\"ssl-cert\":\"\",\"ssl-key\":\"\",\"cert-allowed-cn\":null},\"encrypted_password\":\"\",\"sync-mode\":0,\"port\":0,\"checkpoint\":{\"type\":\"\",\"schema\":\"\",\"table\":\"\",\"host\":\"\",\"user\":\"\",\"password\":\"\",\"encrypted_password\":\"\",\"port\":0,\"security\":{\"ssl-ca\":\"\",\"ssl-cert\":\"\",\"ssl-key\":\"\",\"cert-allowed-cn\":null},\"oracle-service-name\":\"\",\"oracle-connect-string\":\"\"},\"dir\":\"/data/tidb-data/drainer-8249\",\"retention-time\":0,\"params\":null,\"read-timeout\":\"\",\"merge\":false,\"zookeeper-addrs\":\"\",\"kafka-addrs\":\"\",\"kafka-version\":\"\",\"kafka-max-messages\":0,\"kafka-client-id\":\"\",\"kafka-max-message-size\":0,\"topic-name\":\"\",\"oracle-service-name\":\"\",\"oracle-connect-string\":\"\"},\"replicate-do-table\":null,\"replicate-do-db\":null,\"db-type\":\"file\",\"relay\":{\"log-dir\":\"\",\"max-file-size\":10485760},\"disable-dispatch-flag\":null,\"enable-dispatch-flag\":null,\"disable-dispatch\":null,\"enable-dispatch\":null,\"safe-mode\":false,\"disable-detect-flag\":null,\"enable-detect-flag\":null,\"disable-detect\":null,\"enable-detect\":null,\"load-schema-snapshot\":false,\"case-sensitive\":false,\"table-migrate-rule\":null},\"security\":{\"ssl-ca\":\"\",\"ssl-cert\":\"\",\"ssl-key\":\"\",\"cert-allowed-cn\":null},\"synced-check-time\":5,\"compressor\":\"\",\"EtcdTimeout\":5000000000,\"MetricsAddr\":\"\",\"MetricsInterval\":15}"]

[2024/12/19 00:30:19.112 +08:00] [INFO] [client.go:289] ["[pd] create pd client with endpoints"] [pd-address="[http://192.168.3.143:2379,http://192.168.3.144:2379,http://192.168.3.145:2379]"]

[2024/12/19 00:30:19.124 +08:00] [INFO] [base_client.go:487] ["[pd] switch leader"] [new-leader=http://192.168.3.145:2379] [old-leader=]

[2024/12/19 00:30:19.124 +08:00] [INFO] [base_client.go:164] ["[pd] init cluster id"] [cluster-id=7414376980477572603]

[2024/12/19 00:30:19.124 +08:00] [INFO] [tso_request_dispatcher.go:287] ["[pd/tso] tso dispatcher created"] [dc-location=global]

[2024/12/19 00:30:19.124 +08:00] [INFO] [server.go:123] ["get cluster id from pd"] [id=7414376980477572603]

[2024/12/19 00:30:19.125 +08:00] [INFO] [server.go:132] ["set InitialCommitTS"] [ts=454699101487104001]

[2024/12/19 00:30:19.125 +08:00] [INFO] [base_client.go:398] ["[pd] cannot update member from this address"] [address=http://192.168.3.143:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY"]

[2024/12/19 00:30:19.125 +08:00] [ERROR] [base_client.go:203] ["[pd] failed to update member"] [error="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY"] [errorVerbose="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:192.168.3.143:2379 status:READY\ngithub.com/tikv/pd/client.(*pdBaseClient).updateMember\n\t/root/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20230301094509-c82b237672a0/base_client.go:403\ngithub.com/tikv/pd/client.(*pdBaseClient).memberLoop\n\t/root/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20230301094509-c82b237672a0/base_client.go:202\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650"]

[2024/12/19 00:30:19.126 +08:00] [INFO] [checkpoint.go:69] ["initialize checkpoint"] [type=file] [checkpoint=454699097423872390] [version=462898] [cfg="{\"CheckpointType\":\"file\",\"Db\":null,\"Schema\":\"\",\"Table\":\"\",\"ClusterID\":7414376980477572603,\"InitialCommitTS\":454699101487104001,\"dir\":\"/data/tidb-data/drainer-8249/savepoint\"}"]

[2024/12/19 00:30:19.126 +08:00] [INFO] [store.go:76] ["new store"] [path="tikv://192.168.3.143:2379,192.168.3.144:2379,192.168.3.145:2379?disableGC=true"]

[2024/12/19 00:30:19.126 +08:00] [INFO] [client.go:289] ["[pd] create pd client with endpoints"] [pd-address="[192.168.3.143:2379,192.168.3.144:2379,192.168.3.145:2379]"]

[2024/12/19 00:30:19.126 +08:00] [INFO] [tso_request_dispatcher.go:368] ["[pd/tso] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2024/12/19 00:30:19.126 +08:00] [INFO] [tso_request_dispatcher.go:305] ["[pd/tso] exit tso dispatcher"] [dc-location=global]

[2024/12/19 00:30:19.135 +08:00] [INFO] [base_client.go:487] ["[pd] switch leader"] [new-leader=http://192.168.3.145:2379] [old-leader=]

[2024/12/19 00:30:19.135 +08:00] [INFO] [base_client.go:164] ["[pd] init cluster id"] [cluster-id=7414376980477572603]

[2024/12/19 00:30:19.135 +08:00] [INFO] [tso_request_dispatcher.go:287] ["[pd/tso] tso dispatcher created"] [dc-location=global]

[2024/12/19 00:30:19.135 +08:00] [INFO] [tikv_driver.go:191] ["using API V1."]

[2024/12/19 00:30:19.136 +08:00] [INFO] [store.go:82] ["new store with retry success"]

[2024/12/19 00:34:40.751 +08:00] [INFO] [binlogger.go:93] ["open binlogger"] [directory=/data/tidb-data/drainer-8249]

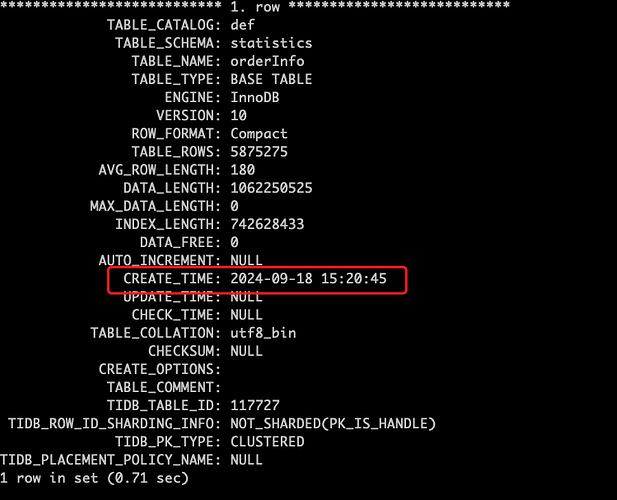

As far as I can see, this table does exist.

The second problem:
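To put numbers on the restart loop in problem 1, a small script along these lines could scan drainer.log. It is only a sketch and assumes each log entry sits on one line in the real file, in the format shown above. It treats every `Welcome to Drainer` line as a restart, collects the table IDs from `not found table id` errors, and decodes the checkpoint TSO into wall-clock time, assuming the usual TiDB TSO layout (physical milliseconds in the high bits, an 18-bit logical counter in the low bits). The function names are hypothetical.

```python
import re
import sys
from datetime import datetime, timedelta, timezone

# Prefix of every drainer log entry, e.g. "[2024/12/19 00:30:19.111 +08:00]".
TS_RE = re.compile(r"^\[(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) ([+-]\d{2}):(\d{2})\]")
# Table ID reported by skipDMLEvent, e.g. "not found table id: 117727".
MISSING_TABLE_RE = re.compile(r"not found table id: (\d+)")
# Checkpoint TSO logged at startup, e.g. "[checkpoint=454699097423872390]".
CHECKPOINT_RE = re.compile(r'"initialize checkpoint".*?\[checkpoint=(\d+)\]')


def parse_ts(line):
    """Return a timezone-aware datetime for a log line, or None if it has no timestamp prefix."""
    match = TS_RE.match(line)
    if not match:
        return None
    stamp, hours, minutes = match.groups()
    sign = -1 if hours.startswith("-") else 1
    tz = timezone(timedelta(hours=int(hours), minutes=sign * int(minutes)))
    return datetime.strptime(stamp, "%Y/%m/%d %H:%M:%S.%f").replace(tzinfo=tz)


def tso_to_datetime(tso):
    """Decode a TSO (assumed layout: physical ms << 18 | logical counter) into a UTC datetime."""
    return datetime.fromtimestamp((tso >> 18) / 1000, tz=timezone.utc)


def summarize(lines):
    """Collect restart times, seconds between restarts, missing table IDs and checkpoint TSOs."""
    stamps = (parse_ts(line) for line in lines if "Welcome to Drainer" in line)
    restarts = [t for t in stamps if t is not None]
    gaps = [(b - a).total_seconds() for a, b in zip(restarts, restarts[1:])]
    missing = sorted({int(hit.group(1)) for line in lines for hit in MISSING_TABLE_RE.finditer(line)})
    checkpoints = [int(m.group(1)) for line in lines if (m := CHECKPOINT_RE.search(line))]
    return {"restarts": restarts, "gaps_s": gaps, "missing_table_ids": missing, "checkpoints": checkpoints}


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        report = summarize(f.readlines())
    for gap in report["gaps_s"]:
        print(f"restart gap: {gap:.0f} s")
    print("missing table ids:", report["missing_table_ids"])
    for tso in report["checkpoints"]:
        print(f"checkpoint {tso} -> {tso_to_datetime(tso).isoformat()}")
```

It would be run against the log file named in the config, e.g. `python3 scan_drainer_log.py /opt/app/tidb-deploy/drainer-8249/log/drainer.log` (the script name is arbitrary). Under the layout assumed above, the checkpoint 454699097423872390 from this log decodes to about 2024-12-18 16:30 UTC, i.e. 00:30 on 12-19 in +08:00, which is the same minute the syncer exited.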

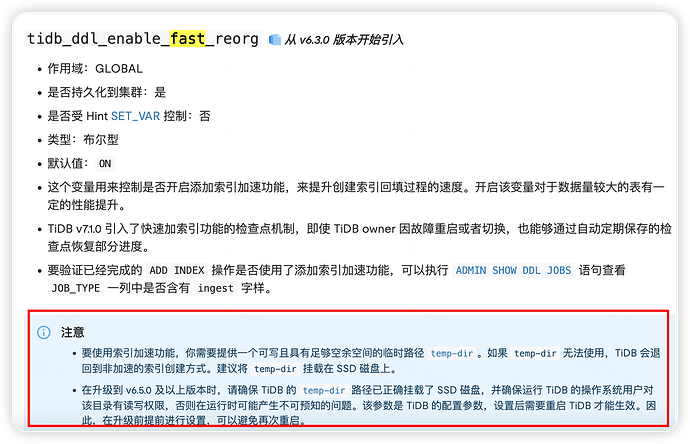

There is a warning saying "no enough space in /tmp/tidb/tmp_ddl-4002",

but the 50 GB data disk on this host is barely used.

[2024/12/19 15:19:58.264 +08:00] [WARN] [schema.go:303] ["ddl job schema version is less than current version, skip this ddl job"] [job="ID:45905, Type:add index, State:cancelled, SchemaState:none, SchemaID:5779, TableID:5977, RowCount:0, ArgLen:0, start time: 2024-10-24 22:40:39.282 +0800 CST, Err:[ddl:8256]Check ingest environment failed: no enough space in /tmp/tidb/tmp_ddl-4002, ErrCount:1, SnapshotVersion:0, UniqueWarnings:0"] [currentVersion=0]
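Judging from this log line, the message is stored in an ADD INDEX DDL job (ID 45905, started 2024-10-24, state cancelled) that drainer is only skipping, so the space check was presumably performed on the TiDB server that ran the job rather than on the drainer host. If `/tmp/tidb/tmp_ddl-4002` sits on a different filesystem from the 50 GB data disk (for example a small root partition), an empty data disk would not help. A quick way to compare the two locations on that host is a sketch like the one below; the default paths come from this post, and it assumes the nearest existing parent directory lives on the same filesystem the missing directory would be created on.

```python
import os
import shutil
import sys


def nearest_existing(path):
    """Walk up from `path` to the closest directory that exists (at worst the filesystem root)."""
    p = os.path.abspath(path)
    while not os.path.exists(p):
        parent = os.path.dirname(p)
        if parent == p:  # reached the root; stop instead of looping forever
            break
        p = parent
    return p


def report(paths):
    """For each path, return (path, checked_dir, device_id, free_GiB) for the filesystem holding it."""
    rows = []
    for path in paths:
        checked = nearest_existing(path)
        free_gib = shutil.disk_usage(checked).free / 2**30
        rows.append((path, checked, os.stat(checked).st_dev, free_gib))
    return rows


if __name__ == "__main__":
    # Defaults: the temp dir from the warning and the data dir from the drainer config.
    targets = sys.argv[1:] or ["/tmp/tidb/tmp_ddl-4002", "/data/tidb-data"]
    for path, checked, dev, free in report(targets):
        print(f"{path}: checked {checked}, st_dev={dev}, {free:.1f} GiB free")
```

Different `st_dev` values mean the two paths are on different filesystems, so the free space reported for `/tmp` is the figure that matters for that directory.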

Has anyone run into these problems?