[tidb@4 log]$ tail -25000 tidb.log|more

[2024/12/16 09:53:07.414 +08:00] [WARN] [pd.go:152] ["get timestamp too slow"] ["cost time"=110.245855ms]

[2024/12/16 09:53:07.489 +08:00] [WARN] [2pc.go:1803] ["schemaLeaseChecker is not set for this transaction"] [conn=3911621278] [session_alias=] [sessionID=3911621278] [startTS=454640005928714244] [checkTS=454640005928714251]

[2024/12/16 09:53:08.048 +08:00] [WARN] [2pc.go:1803] ["schemaLeaseChecker is not set for this transaction"] [conn=3911621068] [session_alias=] [sessionID=3911621068] [startTS=454640006072631315] [checkTS=454640006072631323]

[2024/12/16 09:53:08.066 +08:00] [WARN] [2pc.go:1803] ["schemaLeaseChecker is not set for this transaction"] [conn=3911621068] [session_alias=] [sessionID=3911621068] [startTS=454640006072631326] [checkTS=454640006086000642]

[2024/12/16 09:53:08.220 +08:00] [WARN] [2pc.go:1803] ["schemaLeaseChecker is not set for this transaction"] [conn=3911621048] [session_alias=] [sessionID=3911621048] [startTS=454640006125322245] [checkTS=454640006125322248]

[2024/12/16 09:53:08.339 +08:00] [WARN] [2pc.go:1803] ["schemaLeaseChecker is not set for this transaction"] [conn=3911621048] [session_alias=] [sessionID=3911621048] [startTS=454640006153109514] [checkTS=454640006153109516]

[2024/12/16 09:53:10.116 +08:00] [INFO] [coprocessor.go:1343] ["[TIME_COP_WAIT] resp_time:1.73551262s txnStartTS:454640006153109517 region_id:136001 store_addr:192.168.6.34:20160 kv_process_ms:0 kv_wait_ms:0 kv_read_ms:0 processed_versions:16 total_versions:16 rocksdb_delete_skipped_count:0 rocksdb_key_skipped_count:0 rocksdb_cache_hit_count:67 rocksdb_read_count:0 rocksdb_read_byte:0"] [conn=3911621266] [session_alias=]

[2024/12/16 09:53:10.117 +08:00] [INFO] [coprocessor.go:1343] ["[TIME_COP_WAIT] resp_time:1.743043426s txnStartTS:454640006138953748 region_id:136005 store_addr:192.168.6.31:20160 kv_process_ms:40 kv_wait_ms:0 kv_read_ms:40 processed_versions:2052 total_versions:2325 rocksdb_delete_skipped_count:0 rocksdb_key_skipped_count:939 rocksdb_cache_hit_count:7443 rocksdb_read_count:0 rocksdb_read_byte:0"] [conn=3911620702] [session_alias=]

[2024/12/16 09:53:10.117 +08:00] [INFO] [coprocessor.go:1343] ["[TIME_COP_PROCESS] resp_time:1.821191036s txnStartTS:454640006138953743 region_id:108149 store_addr:192.168.6.31:20160 kv_process_ms:107 kv_wait_ms:0 kv_read_ms:100 processed_versions:80204 total_versions:80257 rocksdb_delete_skipped_count:14 rocksdb_key_skipped_count:80270 rocksdb_cache_hit_count:301 rocksdb_read_count:0 rocksdb_read_byte:0"] [conn=3911621280] [session_alias=]

[2024/12/16 09:56:04.334 +08:00] [WARN] [client_batch.go:376] ["no available connections"] [target=192.168.6.34:20160]

[2024/12/16 09:56:04.334 +08:00] [WARN] [client_batch.go:376] ["no available connections"] [target=192.168.6.34:20160]

[2024/12/16 09:56:04.334 +08:00] [INFO] [region_cache.go:2954] ["[health check] check health error"] [store=192.168.6.34:20160] [error="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing: dial tcp 192.168.6.34:20160: connect: connection refused\""]

[2024/12/16 09:56:04.334 +08:00] [INFO] [region_request.go:1131] ["mark store's regions need be refill"] [id=1] [addr=192.168.6.34:20160] [error="no available connections"]

[2024/12/16 09:56:04.334 +08:00] [INFO] [region_cache.go:2682] ["change store resolve state"] [store=1] [addr=192.168.6.34:20160] [from=resolved] [to=needCheck] [liveness-state=unknown]

[2024/12/16 09:56:04.335 +08:00] [INFO] [region_cache.go:2682] ["change store resolve state"] [store=1] [addr=192.168.6.34:20160] [from=needCheck] [to=resolved] [liveness-state=unknown]

[2024/12/16 09:56:04.335 +08:00] [WARN] [client_batch.go:376] ["no available connections"] [target=192.168.6.34:20160]

[2024/12/16 09:56:04.335 +08:00] [WARN] [client_batch.go:376] ["no available connections"] [target=192.168.6.34:20160]

[2024/12/16 09:56:04.335 +08:00] [WARN] [client_batch.go:376] ["no available connections"] [target=192.168.6.34:20160]
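To see at a glance when each kind of message first appears in a long excerpt like the one above, the entries could be tallied by level and source location. The following is only a rough sketch: it assumes every entry starts with `[timestamp] [LEVEL] [file:line]` as in the lines shown here, and the names `summarize` and `LINE_RE` are made up for illustration.

```python
import re
import sys
from collections import Counter

# Assumed prefix of each log entry: [timestamp] [LEVEL] [file:line] ...
LINE_RE = re.compile(r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<src>[^\]]+)\]')


def summarize(lines):
    """Count entries per (level, file:line) and remember the first timestamp of each."""
    counts = Counter()
    first_seen = {}
    for line in lines:
        m = LINE_RE.match(line)
        if m is None:
            continue  # skip blank lines and wrapped continuation fragments
        key = (m["level"], m["src"])
        counts[key] += 1
        first_seen.setdefault(key, m["ts"])
    return counts, first_seen


if __name__ == "__main__":
    # Usage (hypothetical script name): python summarize_log.py tidb.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        counts, first_seen = summarize(f)
    for key, n in counts.most_common():
        level, src = key
        print(f"{n:8d}  {level:5s} {src:28s} first at {first_seen[key]}")
```

Run against the full tidb.log, this would show, for example, the first time `client_batch.go:376` reported "no available connections", which can then be compared with the TiKV log on 192.168.6.34 around the same moment.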

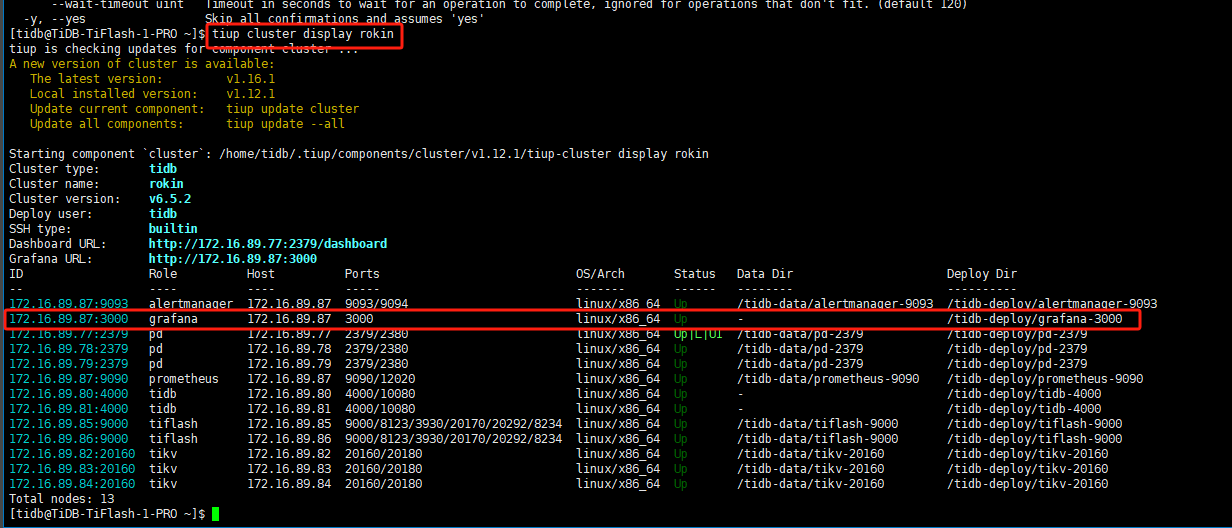

[tidb@1 ~]$ tiup cluster display ti-yfhis

Checking updates for component cluster... Timedout (after 2s)

Cluster type: tidb

Cluster name: ti-yfhis

Cluster version: v7.5.1

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.6.34:2379/dashboard

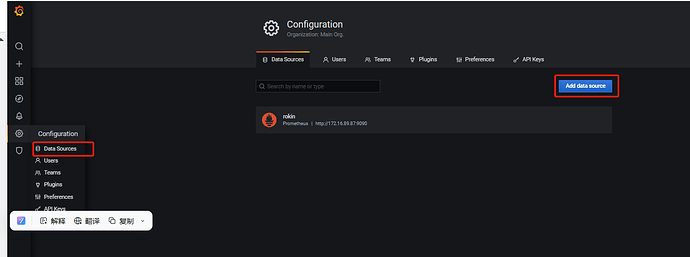

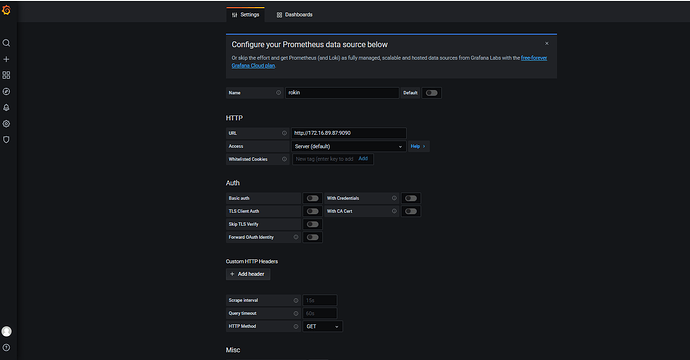

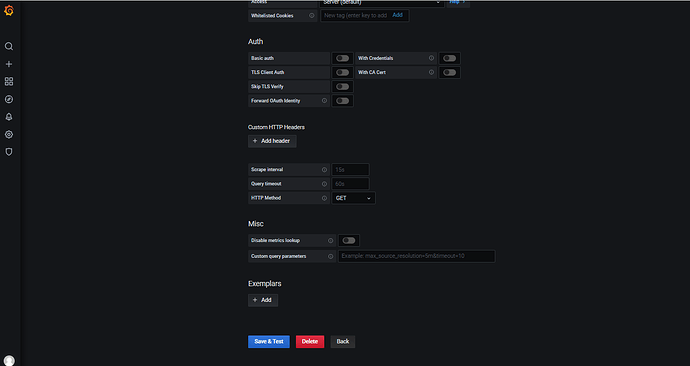

Grafana URL: http://192.168.6.32:3000

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 192.168.6.32:9093 | alertmanager | 192.168.6.32 | 9093/9094 | linux/aarch64 | Up | /data/tidb-data/alertmanager-9093 | /data/tidb-deploy/alertmanager-9093 |
| 192.168.6.32:3000 | grafana | 192.168.6.32 | 3000 | linux/aarch64 | Up | - | /data/tidb-deploy/grafana-3000 |
| 192.168.6.31:2379 | pd | 192.168.6.31 | 2379/2380 | linux/aarch64 | Up\|L | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 192.168.6.34:2379 | pd | 192.168.6.34 | 2379/2380 | linux/aarch64 | Up\|UI | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 192.168.6.35:2379 | pd | 192.168.6.35 | 2379/2380 | linux/aarch64 | Up | /data/tidb-data/pd-2379 | /data/tidb-deploy/pd-2379 |
| 192.168.6.32:9088 | prometheus | 192.168.6.32 | 9088/12020 | linux/aarch64 | Up | /data/tidb-data/prometheus-9088 | /data/tidb-deploy/prometheus-9088 |
| 192.168.6.31:4000 | tidb | 192.168.6.31 | 4000/10080 | linux/aarch64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 192.168.6.34:4000 | tidb | 192.168.6.34 | 4000/10080 | linux/aarch64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 192.168.6.35:4000 | tidb | 192.168.6.35 | 4000/10080 | linux/aarch64 | Up | - | /data/tidb-deploy/tidb-4000 |
| 192.168.6.33:9000 | tiflash | 192.168.6.33 | 9000/8123/3930/20170/20292/8234 | linux/aarch64 | Up | /data/tidb-data/tiflash-9000 | /data/tidb-deploy/tiflash-9000 |
| 192.168.6.31:20160 | tikv | 192.168.6.31 | 20160/20180 | linux/aarch64 | Up | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |
| 192.168.6.34:20160 | tikv | 192.168.6.34 | 20160/20180 | linux/aarch64 | Up | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |
| 192.168.6.35:20160 | tikv | 192.168.6.35 | 20160/20180 | linux/aarch64 | Up | /data/tidb-data/tikv-20160 | /data/tidb-deploy/tikv-20160 |

Total nodes: 13

[tidb@1 ~]$

[tidb@4 log]$ netstat -nltp |grep 20160

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp6 0 0 :::20160 :::* LISTEN 1000654/bin/tikv-se
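The netstat output only shows that tikv-server was listening on 20160 at the moment the command ran, while the log reports "connection refused" at 09:56:04. To check reachability from one of the TiDB hosts instead of on the TiKV host itself, a plain TCP probe could be used. This is a minimal sketch, not a TiKV health check: `can_connect` is a hypothetical helper name, and a successful connect only proves the port accepts connections.

```python
import socket


def can_connect(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Addresses taken from the tiup cluster display output above.
    for host in ("192.168.6.31", "192.168.6.34", "192.168.6.35"):
        state = "reachable" if can_connect(host, 20160) else "NOT reachable"
        print(f"{host}:20160 {state}")
```

If the probe succeeds now but the log still shows refusals at 09:56:04, that would be consistent with the tikv-server process on 192.168.6.34 having restarted around that time, which the TiKV log on that host could confirm.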

tidb_error.txt (21.0 KB)