【 TiDB 使用环境】生产环境 /测试/ Poc

【 TiDB 版本】v7.5.0

【复现路径】做过哪些操作出现的问题

【遇到的问题:问题现象及影响】

checkpoint一直等于start,从来不更新,只有gap值越来越大。

[root@tidb ~]# /root/.tiup/components/br/v7.5.0/br log status

Detail BR log in /tmp/br.log.2024-12-05T19.28.42+0800

● Total 1 Tasks.

#1 <

name: e0bd0d4eb2f811ef8000005056a4b903

status: ● NORMAL

start: 2024-12-05 19:05:47.211 +0800

end: 2090-11-18 22:07:45.624 +0800

storage: s3://bucket-f4f2ffb2/e0bd0d4eb2f811ef8000005056a4b903

speed(est.): 0.00 ops/s

checkpoint[global]: 2024-12-05 19:05:47.211 +0800; gap=22m57s

[root@tidb ~]#

[root@tidb ~]#

[root@tidb ~]# /root/.tiup/components/br/v7.5.0/br log status --json

Detail BR log in /tmp/br.log.2024-12-05T19.29.11+0800

[{“name”:“e0bd0d4eb2f811ef8000005056a4b903”,“start_ts”:454399556900880387,“end_ts”:999999999999999999,“table_filter”:[“.”],“progress”:,“storage”:“s3://bucket-f4f2ffb2/e0bd0d4eb2f811ef8000005056a4b903”,“checkpoint”:454399556900880387,“estimate_qps”:0,“last_errors”:}]

[root@tidb ~]# /root/.tiup/components/br/v7.5.0/br log status

Detail BR log in /tmp/br.log.2024-12-05T19.30.02+0800

● Total 1 Tasks.

#1 <

name: e0bd0d4eb2f811ef8000005056a4b903

status: ● NORMAL

start: 2024-12-05 19:05:47.211 +0800

end: 2090-11-18 22:07:45.624 +0800

storage: s3://bucket-f4f2ffb2/e0bd0d4eb2f811ef8000005056a4b903

speed(est.): 0.00 ops/s

checkpoint[global]: 2024-12-05 19:05:47.211 +0800; gap=24m17s

[root@tidb ~]# /root/.tiup/components/br/v7.5.0/br log status --json

Detail BR log in /tmp/br.log.2024-12-05T19.34.17+0800

[{“name”:“e0bd0d4eb2f811ef8000005056a4b903”,“start_ts”:454399556900880387,“end_ts”:999999999999999999,“table_filter”:[“.”],“progress”:,“storage”:“s3://bucket-f4f2ffb2/e0bd0d4eb2f811ef8000005056a4b903”,“checkpoint”:454399556900880387,“estimate_qps”:0,“last_errors”:}]

[root@tidb ~]# /root/.tiup/components/br/v7.5.0/br log status

Detail BR log in /tmp/br.log.2024-12-06T10.27.19+0800

● Total 1 Tasks.

#1 <

name: e0bd0d4eb2f811ef8000005056a4b903

status: ● NORMAL

start: 2024-12-05 19:05:47.211 +0800

end: 2090-11-18 22:07:45.624 +0800

storage: s3://bucket-f4f2ffb2/e0bd0d4eb2f811ef8000005056a4b903

speed(est.): 0.00 ops/s

checkpoint[global]: 2024-12-05 19:05:47.211 +0800; gap=15h21m33s

[root@tidb ~]# vi /tmp/br.log.2024-12-06T10.27.19+0800

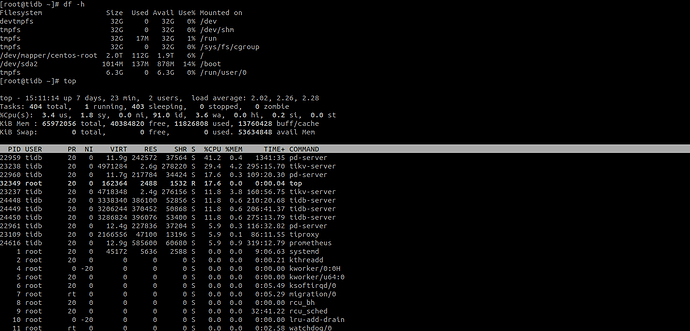

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

【附件:截图/日志/监控】

你说的资源指的是内存和磁盘吗?

我认为日志数据写不下去主要受到磁盘影响吧,这里磁盘空间还有很多空闲的。

你这里说的日志信息指的是pd节点和kv的日志信息吗,我查看了下,是存在有报错信息的。

其中pd节点的报错是这样的:

[2024/12/06 15:27:56.584 +08:00] [WARN] [etcdutil.go:147] [“kv gets too slow”] [request-key=/pd/7414019279550161566/config] [cost=1.475659567s]

[2024/12/06 15:27:56.771 +08:00] [INFO] [leadership.go:321] [“current leadership is deleted”] [revision=4481566] [leader-key=/pd/7414019279550161566/leader] [purpose=“leader election”]

[2024/12/06 15:27:56.771 +08:00] [ERROR] [client.go:150] [“region sync with leader meet error”] [error=“[PD:grpc:ErrGRPCRecv]rpc error: code = Canceled desc = context canceled: rpc error: code = Canceled desc = context canceled”]

[2024/12/06 15:27:56.786 +08:00] [INFO] [trace.go:152] [“trace[943044851] linearizableReadLoop”] [detail=“{readStateIndex:4512570; appliedIndex:4512569; }”] [duration=201.02968ms] [start=2024/12/06 15:27:56.585 +08:00] [end=2024/12/06 15:27:56.786 +08:00] [steps=“["trace[943044851] ‘read index received’ (duration: 185.697283ms)","trace[943044851] ‘applied index is now lower than readState.Index’ (duration: 15.331173ms)"]”]

[2024/12/06 15:27:56.786 +08:00] [WARN] [util.go:163] [“apply request took too long”] [took=201.25776ms] [expected-duration=100ms] [prefix=“read-only range “] [request=“key:"/pd/7414019279550161566/member/1859303800845153097/leader_priority" “] [response=“range_response_count:0 size:7”]

[2024/12/06 15:27:56.786 +08:00] [INFO] [trace.go:152] [“trace[1908474156] range”] [detail=”{range_begin:/pd/7414019279550161566/member/1859303800845153097/leader_priority; range_end:; response_count:0; response_revision:4481569; }”] [duration=201.360166ms] [start=2024/12/06 15:27:56.585 +08:00] [end=2024/12/06 15:27:56.786 +08:00] [steps=”["trace[1908474156] ‘agreement among raft nodes before linearized reading’ (duration: 201.191282ms)"]”]

[2024/12/06 15:27:57.772 +08:00] [INFO] [server.go:1605] [“pd leader has changed, try to re-campaign a pd leader”]

[2024/12/06 15:27:57.772 +08:00] [INFO] [server.go:1630] [“skip campaigning of pd leader and check later”] [server-name=pd-192.168.110.238-2379] [etcd-leader-id=10773358837178006005] [member-id=1859303800845153097]

[2024/12/06 15:27:58.692 +08:00] [WARN] [util.go:163] [“apply request took too long”] [took=1.419582997s] [expected-duration=100ms] [prefix=“read-only range “] [request=“key:"/pd/7414019279550161566/member/14515535160256361248/leader_priority" “] [response=“range_response_count:0 size:7”]

[2024/12/06 15:27:58.693 +08:00] [INFO] [trace.go:152] [“trace[153361988] range”] [detail=”{range_begin:/pd/7414019279550161566/member/14515535160256361248/leader_priority; range_end:; response_count:0; response_revision:4481571; }”] [duration=1.419715291s] [start=2024/12/06 15:27:57.273 +08:00] [end=2024/12/06 15:27:58.693 +08:00] [steps=”["trace[153361988] ‘range keys from in-memory index tree’ (duration: 1.41764724s)"]”]

kv节点存在的报错是这样的:

[2024/12/06 15:27:56.756 +08:00] [INFO] [util.rs:279] [“acquire_token_buckets sender and receiver are stale, refreshing …”] [thread_id=0x5]

[2024/12/06 15:27:56.757 +08:00] [INFO] [util.rs:302] [“update pd client”] [via=] [leader=http://192.168.110.238:2381] [prev_via=] [prev_leader=http://192.168.110.238:2381] [thread_id=0x5]

[2024/12/06 15:27:56.757 +08:00] [INFO] [util.rs:434] [“trying to update PD client done”] [spend=6.369478ms] [thread_id=0x5]

[2024/12/06 15:27:56.757 +08:00] [INFO] [tso.rs:162] [“TSO worker terminated”] [receiver_cause=None] [sender_cause=None] [thread_id=0x5]

[2024/12/06 15:27:57.151 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.151 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2379] [thread_id=0x5]

[2024/12/06 15:27:57.153 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2383] [thread_id=0x5]

[2024/12/06 15:27:57.156 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2381] [thread_id=0x5]

[2024/12/06 15:27:57.169 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.170 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.170 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.453 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.738 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.739 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:57.739 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:58.159 +08:00] [ERROR] [util.rs:496] [“request failed, retry”] [err_code=KV:Pd:Grpc] [err=“Grpc(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: "not leader", details: }))”] [thread_id=0x5]

[2024/12/06 15:27:58.159 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2379] [thread_id=0x5]

[2024/12/06 15:27:58.162 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2383] [thread_id=0x5]

[2024/12/06 15:27:58.165 +08:00] [INFO] [util.rs:638] [“connecting to PD endpoint”] [endpoints=http://192.168.110.238:2381] [thread_id=0x5]

WalterWj

(王军 - PingCAP)

9

pitr 重启下试试,感觉夯住了。

版本推荐升级到 7.5.5 我看还是修复了几个 br 相关问题的,但是不确定有没有覆盖你这个情况。