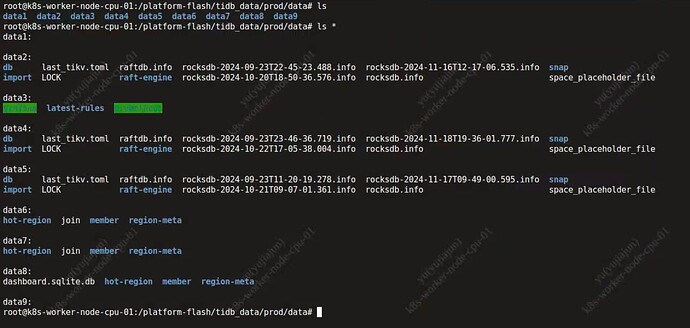

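How should I write the YAML for my PV mount volumes so that the specified disks data1, data2 and data3 are attached to the corresponding TiKV and PD?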

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "local-storage-prod"
provisioner: "kubernetes.io/no-provisioner"
volumeBindingMode: "WaitForFirstConsumer"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-provisioner-config
  namespace: prod
data:
  setPVOwnerRef: "true"
  nodeLabelsForPV: |
    - kubernetes.io/hostname
  storageClassMap: |
    local-storage-prod:
      hostDir: /data/tidb/prod/data
      mountDir: /data
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: local-volume-provisioner
  namespace: prod
  labels:
    app: local-volume-provisioner
spec:
  selector:
    matchLabels:
      app: local-volume-provisioner
  template:
    metadata:
      labels:
        app: local-volume-provisioner
    spec:
      serviceAccountName: local-storage-admin
      containers:
        - image: "quay.io/external_storage/local-volume-provisioner:v2.3.4"
          name: provisioner
          securityContext:
            privileged: true
          env:
            - name: MY_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: MY_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: JOB_CONTAINER_IMAGE
              value: "quay.io/external_storage/local-volume-provisioner:v2.3.4"
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - mountPath: /etc/provisioner/config
              name: provisioner-config
              readOnly: true
            - mountPath: /data
              name: local-disks
              mountPropagation: "HostToContainer"
      volumes:
        - name: provisioner-config
          configMap:
            name: local-provisioner-config
        - name: local-disks
          hostPath:
            path: /data/tidb/prod/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-storage-admin
  namespace: prod
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-pv-binding
  namespace: prod
subjects:
  - kind: ServiceAccount
    name: local-storage-admin
    namespace: prod
roleRef:
  kind: ClusterRole
  name: system:persistent-volume-provisioner
  apiGroup: rbac.authorization.k8s.io
```
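A possible sketch for splitting the disks between PD and TiKV, under assumptions that are not taken from the post: the local-volume-provisioner creates one PV for each mount point it discovers under a class's `hostDir`, and `storageClassMap` can list several classes. Assuming, for illustration only, that data1 is meant for PD and data2/data3 for TiKV, and that each disk is mounted (for example bind-mounted) at a hypothetical path such as `/data/tidb/prod/pd/data1` or `/data/tidb/prod/tikv/data2`, each role can get its own discovery directory and StorageClass. The class names `local-storage-pd`/`local-storage-tikv`, the directory layout and the TidbCluster name `basic` are all hypothetical.

```yaml
# Sketch only: class names, directories and the cluster name are hypothetical.
# Assumed host layout, where every leaf directory is a mount point:
#   /data/tidb/prod/pd/data1    -> disk data1, for PD
#   /data/tidb/prod/tikv/data2  -> disk data2, for TiKV
#   /data/tidb/prod/tikv/data3  -> disk data3, for TiKV
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "local-storage-pd"
provisioner: "kubernetes.io/no-provisioner"
volumeBindingMode: "WaitForFirstConsumer"
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "local-storage-tikv"
provisioner: "kubernetes.io/no-provisioner"
volumeBindingMode: "WaitForFirstConsumer"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-provisioner-config
  namespace: prod
data:
  setPVOwnerRef: "true"
  nodeLabelsForPV: |
    - kubernetes.io/hostname
  # One discovery directory per class: each mount point found under a
  # hostDir becomes a PV of that class.
  storageClassMap: |
    local-storage-pd:
      hostDir: /data/tidb/prod/pd
      mountDir: /data/pd
    local-storage-tikv:
      hostDir: /data/tidb/prod/tikv
      mountDir: /data/tikv
# In the DaemonSet, the existing "local-disks" hostPath would then point at the
# common parent, so both directories appear under /data inside the container:
#   - name: local-disks
#     hostPath:
#       path: /data/tidb/prod
---
# TidbCluster fragment (only the relevant fields): each component requests
# PVs from its own class.
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basic
  namespace: prod
spec:
  pd:
    storageClassName: local-storage-pd
  tikv:
    storageClassName: local-storage-tikv
```

The idea is to separate disks by directory rather than by PV name: PVCs from a no-provisioner class are bound by class, capacity and node, so placing each disk under a role-specific discovery directory is what ties it to PD or TiKV. Which TiKV pod receives data2 versus data3 is still left to the scheduler.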