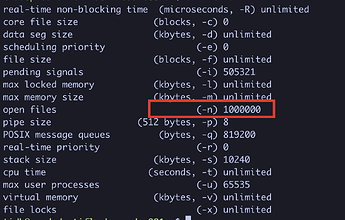

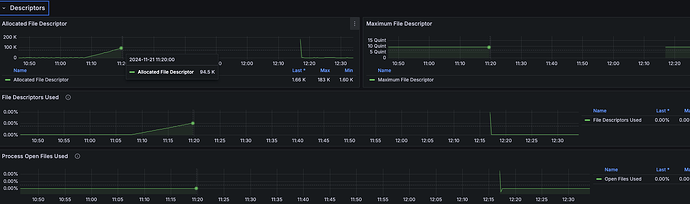

The TiFlash Compute node log reports this error: Download s3_key=s18195849145/data/t_79608/dmf_3917491/6.dat failed: Code: 49, e.displayText() = DB::Exception: Check ostr.good() failed: Write /home/tidb/tidb-data/tiflash-cache/dtfile/s18195849145/data/t_79608/dmf_3917491/6.dat.tmp content_length 419562 failed: Resource temporarily unavailable

The full error entry, including the stack trace:

[2024/11/21 11:21:40.801 +08:00] [ERROR] [Exception.cpp:91] ["Download s3_key=s18195849145/data/t_12366/dmf_4358615/3.dat failed: Code: 49, e.displayText() = DB::Exception: Check ostr.good() failed: Write /home/tidb/tidb-data/tiflash-cache/dtfile/s18195849145/data/t_12366/dmf_4358615/3.dat.tmp content_length 518771 failed: Resource temporarily unavailable, e.what() = DB::Exception, Stack trace:\n\n\n 0x80b3166\tstd::__1::__function::__func<DB::FileCache::bgDownload(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::shared_ptr<DB::FileSegment>&)::$_12, std::__1::allocator<DB::FileCache::bgDownload(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::shared_ptr<DB::FileSegment>&)::$_12>, void ()>::operator()() [tiflash+134951270]\n \t/usr/local/bin/../include/c++/v1/__functional/function.h:345\n 0x1fb3957\tDB::ThreadPoolImpl<DB::ThreadFromGlobalPoolImpl<false> >::worker(std::__1::__list_iterator<DB::ThreadFromGlobalPoolImpl<false>, void*>) [tiflash+33241431]\n \tdbms/src/Common/UniThreadPool.cpp:306\n 0x1fb5993\tstd::__1::__function::__func<DB::ThreadFromGlobalPoolImpl<false>::ThreadFromGlobalPoolImpl<void DB::ThreadPoolImpl<DB::ThreadFromGlobalPoolImpl<false> >::scheduleImpl<void>(std::__1::function<void ()>, long, std::__1::optional<unsigned long>, bool)::'lambda0'()>(void&&)::'lambda'(), std::__1::allocator<DB::ThreadFromGlobalPoolImpl<false>::ThreadFromGlobalPoolImpl<void DB::ThreadPoolImpl<DB::ThreadFromGlobalPoolImpl<false> >::scheduleImpl<void>(std::__1::function<void ()>, long, std::__1::optional<unsigned long>, bool)::'lambda0'()>(void&&)::'lambda'()>, void ()>::operator()() [tiflash+33249683]\n \t/usr/local/bin/../include/c++/v1/__functional/function.h:345\n 0x1fb4a38\tvoid* std::__1::__thread_proxy<std::__1::tuple<std::__1::unique_ptr<std::__1::__thread_struct, std::__1::default_delete<std::__1::__thread_struct> >, void 
DB::ThreadPoolImpl<std::__1::thread>::scheduleImpl<void>(std::__1::function<void ()>, long, std::__1::optional<unsigned long>, bool)::'lambda0'()> >(void*) [tiflash+33245752]\n \t/usr/local/bin/../include/c++/v1/thread:291\n 0x7efee903dac3\t<unknown symbol> [libc.so.6+608963]\n 0x7efee90cf850\t<unknown symbol> [libc.so.6+1206352]"] [source=FileCache] [thread_id=1626]
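To see how often this failure occurs and which files it affects, the matching log lines can be summarized with a small script. The following is a minimal sketch, not a TiFlash tool: the regular expression is an assumption derived only from the log entry quoted above, and the function names `parse_download_failure` and `summarize` are hypothetical helpers introduced for illustration.

```python
import re
from collections import Counter

# Assumption: the pattern is inferred from the single log entry quoted above;
# other TiFlash versions may format this message differently.
DOWNLOAD_FAILURE = re.compile(
    r"Download s3_key=(?P<s3_key>\S+) failed: Code: (?P<code>\d+),"
    r".*?content_length (?P<content_length>\d+) failed: (?P<reason>[^,\"]+)"
)


def parse_download_failure(line):
    """Return the key fields of a FileCache download failure, or None."""
    match = DOWNLOAD_FAILURE.search(line)
    if match is None:
        return None
    return {
        "s3_key": match.group("s3_key"),
        "code": int(match.group("code")),
        "content_length": int(match.group("content_length")),
        "reason": match.group("reason").strip(),
    }


def summarize(lines):
    """Count download failures per failure reason across log lines."""
    counts = Counter()
    for line in lines:
        record = parse_download_failure(line)
        if record is not None:
            counts[record["reason"]] += 1
    return counts
```

Feeding the node's log file to `summarize` (for example `summarize(open(path, encoding="utf-8"))`, where `path` is wherever the TiFlash log lives in your deployment) shows whether every failure carries the same reason, which helps when correlating the errors with the node's resource graphs.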


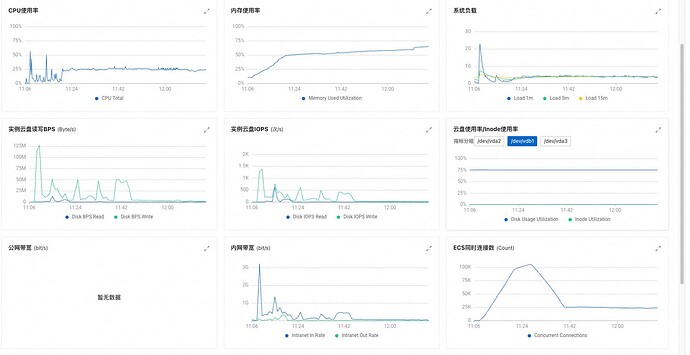

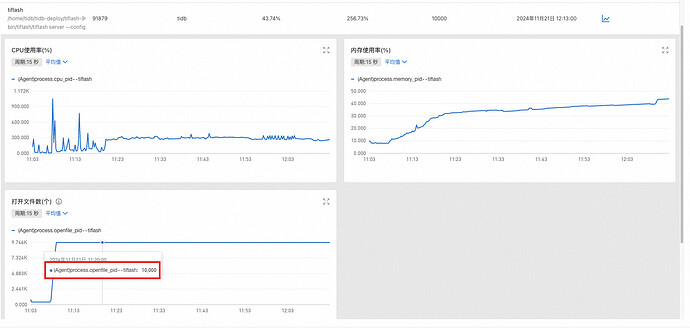

Load on the faulty node: CPU usage is at 3C (3 cores), memory is rising slowly, and disk I/O is very low.


Business impact: every query that is routed to TiFlash is extremely slow, but none of them fails outright with an error.


Recovery: after restarting the faulty node, everything returned to normal.

What causes this problem? Are there any parameters that can be tuned to prevent it from happening again?