【 TiDB 使用环境】生产环境 / 测试环境

【 TiDB 版本】8.1.1

【遇到的问题:问题现象及影响】

分页查询同一单表3000W数据量不走索引的同样情况下,测试环境检索时间7s左右,生产环境需要50秒左右。

【资源配置】

单台物理机配置:40c、256G、机械

测试环境配置:一台物理机(1个tidb-server、1个pd、3个tikv、1个tiflash)

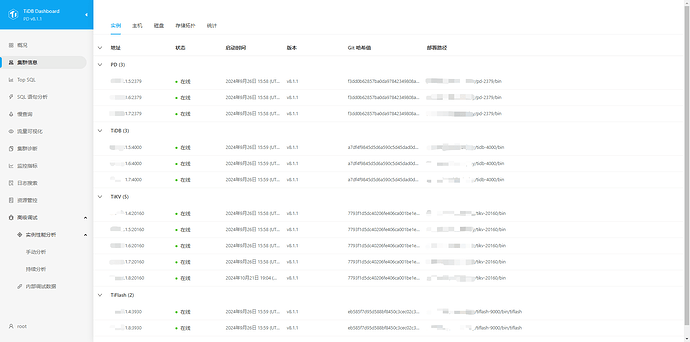

正式环境配置:五台物理机(3个tidb-server、3个pd、5个tikv、2个tiflash、dashboard),具体如下图所示:

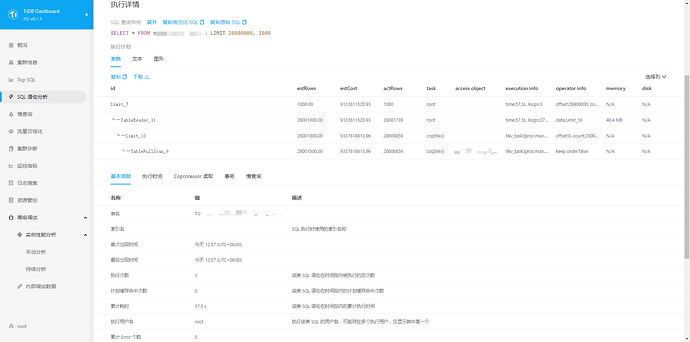

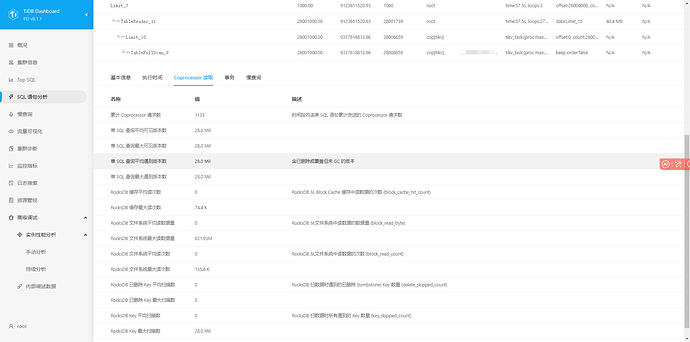

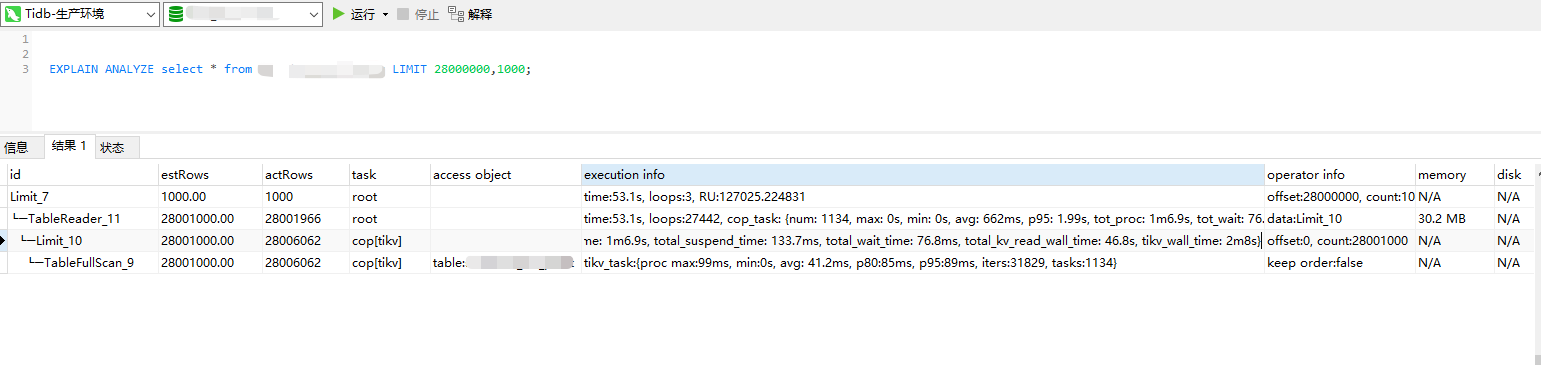

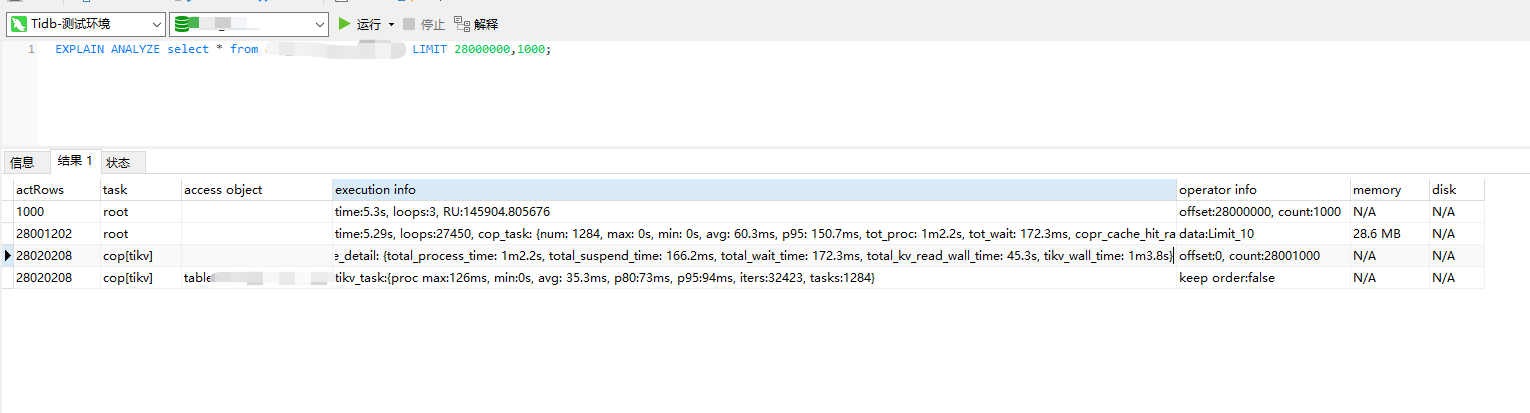

【执行计划截图】

单表分页查询同一条sql的情况下,

1.生产环境:

具体信息:

Limit_7 1000.00 1000 root time:53.1s, loops:3, RU:127025.224831 offset:28000000, count:1000 N/A N/A

└─TableReader_11 28001000.00 28001966 root time:53.1s, loops:27442, cop_task: {num: 1134, max: 0s, min: 0s, avg: 662ms, p95: 1.99s, tot_proc: 1m6.9s, tot_wait: 76.8ms, copr_cache_hit_ratio: 0.01, build_task_duration: 101.2µs, max_distsql_concurrency: 15}, rpc_info:{Cop:{num_rpc:1134, total_time:12m30.6s}} data:Limit_10 30.2 MB N/A

└─Limit_10 28001000.00 28006062 cop[tikv] tikv_task:{proc max:99ms, min:0s, avg: 41.3ms, p80:85ms, p95:89ms, iters:31829, tasks:1134}, scan_detail: {total_process_keys: 27992974, total_process_keys_size: 6828766393, total_keys: 27994101, get_snapshot_time: 55.6ms, rocksdb: {key_skipped_count: 27992974, block: {cache_hit_count: 38872, read_count: 189670, read_byte: 782.7 MB, read_time: 476.6ms}}}, time_detail: {total_process_time: 1m6.9s, total_suspend_time: 133.7ms, total_wait_time: 76.8ms, total_kv_read_wall_time: 46.8s, tikv_wall_time: 2m8s} offset:0, count:28001000 N/A N/A

└─TableFullScan_9 28001000.00 28006062 cop[tikv] table:*** tikv_task:{proc max:99ms, min:0s, avg: 41.2ms, p80:85ms, p95:89ms, iters:31829, tasks:1134} keep order:false N/A N/A

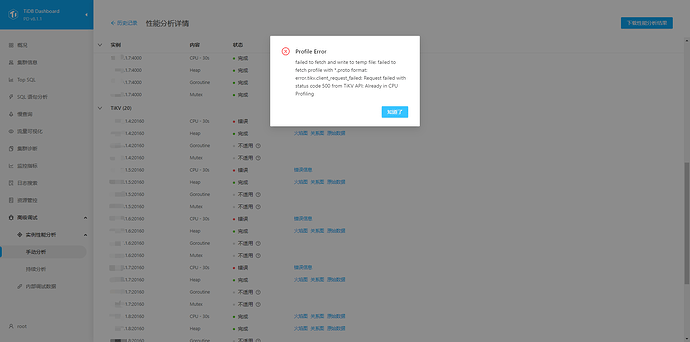

dashboard性能分析提示有错误:

- 测试环境:

具体信息:

|Limit_7|1000.00|1000|root||time:5.19s, loops:3, RU:145453.027595|offset:28000000, count:1000|N/A|N/A|

|—|—|—|—|—|—|—|—|—|

|└─TableReader_11|28001000.00|28001268|root||time:5.19s, loops:27457, cop_task: {num: 1284, max: 0s, min: 0s, avg: 58.7ms, p95: 149.7ms, tot_proc: 1m1.4s, tot_wait: 203.5ms, copr_cache_hit_ratio: 0.03, build_task_duration: 156.8µs, max_distsql_concurrency: 15}, rpc_info:{Cop:{num_rpc:1284, total_time:1m15.4s}}|data:Limit_10|28.6 MB|N/A|

| └─Limit_10|28001000.00|28007822|cop[tikv]||tikv_task:{proc max:151ms, min:0s, avg: 35ms, p80:72ms, p95:95ms, iters:32411, tasks:1284}, scan_detail: {total_process_keys: 27973486, total_process_keys_size: 8150209479, total_keys: 27974731, get_snapshot_time: 156.4ms, rocksdb: {key_skipped_count: 27973486, block: {cache_hit_count: 263687}}}, time_detail: {total_process_time: 1m1.4s, total_suspend_time: 159.5ms, total_wait_time: 203.5ms, total_kv_read_wall_time: 44.8s, tikv_wall_time: 1m2.7s}|offset:0, count:28001000|N/A|N/A|

| └─TableFullScan_9|28001000.00|28007822|cop[tikv]|table:***|tikv_task:{proc max:151ms, min:0s, avg: 35ms, p80:72ms, p95:94ms, iters:32411, tasks:1284}|keep order:false|N/A|N/A|

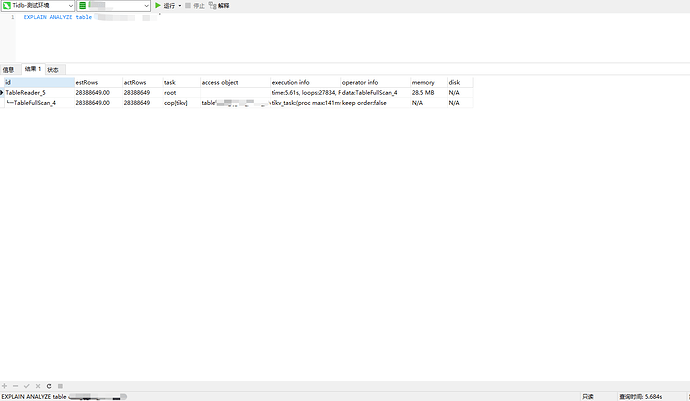

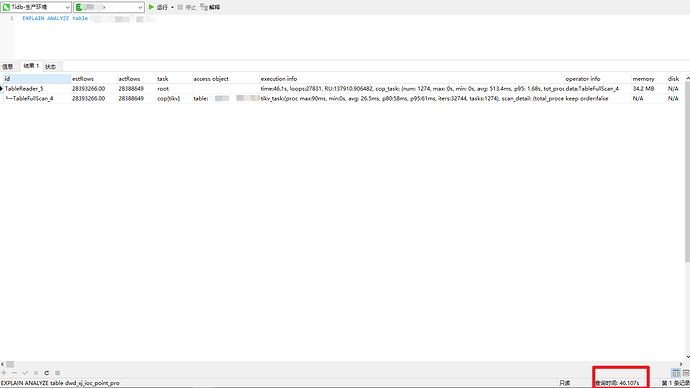

【Analysis Table执行分析】

-

测试环境:

具体信息如下:

|TableReader_5|28388649.00|28388649|root||time:5.61s, loops:27834, RU:147636.168265, cop_task: {num: 1292, max: 0s, min: 0s, avg: 59.4ms, p95: 154.8ms, tot_proc: 1m2.4s, tot_wait: 160.2ms, copr_cache_hit_ratio: 0.00, build_task_duration: 146.9µs, max_distsql_concurrency: 15}, rpc_info:{Cop:{num_rpc:1292, total_time:1m16.7s}}|data:TableFullScan_4|28.5 MB|N/A|

|—|—|—|—|—|—|—|—|—|

|└─TableFullScan_4|28388649.00|28388649|cop[tikv]|table:***|tikv_task:{proc max:141ms, min:0s, avg: 35.4ms, p80:71ms, p95:95ms, iters:32817, tasks:1292}, scan_detail: {total_process_keys: 28388649, total_process_keys_size: 8271171685, total_keys: 28389941, get_snapshot_time: 113.3ms, rocksdb: {key_skipped_count: 28388649, block: {cache_hit_count: 267790}}}, time_detail: {total_process_time: 1m2.4s, total_suspend_time: 167.9ms, total_wait_time: 160.2ms, total_kv_read_wall_time: 45.8s, tikv_wall_time: 1m3.7s}|keep order:false|N/A|N/A| -

生产环境

具体信息如下:

|TableReader_5|28393266.00|28388649|root||time:46.1s, loops:27831, RU:137910.906482, cop_task: {num: 1274, max: 0s, min: 0s, avg: 513.4ms, p95: 1.68s, tot_proc: 50.2s, tot_wait: 91.3ms, copr_cache_hit_ratio: 0.00, build_task_duration: 136.9µs, max_distsql_concurrency: 15}, rpc_info:{Cop:{num_rpc:1274, total_time:10m54.1s}}|data:TableFullScan_4|34.2 MB|N/A|

|—|—|—|—|—|—|—|—|—|

|└─TableFullScan_4|28393266.00|28388649|cop[tikv]|table:***|tikv_task:{proc max:90ms, min:0s, avg: 26.5ms, p80:58ms, p95:61ms, iters:32744, tasks:1274}, scan_detail: {total_process_keys: 28388649, total_process_keys_size: 7902119218, total_keys: 28389923, get_snapshot_time: 67.9ms, rocksdb: {key_skipped_count: 28388649, block: {cache_hit_count: 242669}}}, time_detail: {total_process_time: 50.2s, total_suspend_time: 88.1ms, total_wait_time: 91.3ms, total_kv_read_wall_time: 33.7s, tikv_wall_time: 1m46.1s}|keep order:false|N/A|N/A|