[TiDB environment] Production

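Last month I upgraded the database from 5.4.3 to 6.5.10. On the 6.5.10 cluster I created a CDC changefeed that sends incremental data to Kafka, and every message it produced ended with an extra blank line. The earlier 5.4.3 version did not add a blank line, and after upgrading the database to 7.5.4 just now, the Kafka messages no longer have one either.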

The CDC task configuration was not changed at any point during this period.

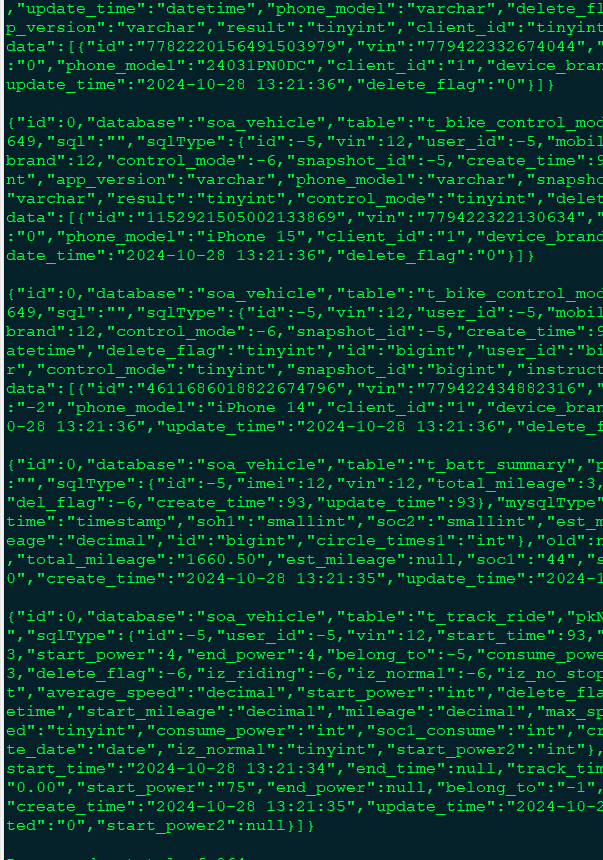

The screenshot shows records that have the blank line.
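To confirm on the consumer side whether a message really ends with a line terminator (rather than the blank line being added by the viewing tool), the raw message bytes can be inspected. The following is a minimal sketch, not part of the original setup: the helper names and the sample payload are hypothetical, and it assumes the Kafka message value is already available as `bytes`.

```python
import json

# Line endings to look for, longest first so b"\r\n" wins over b"\n".
LINE_ENDINGS = (b"\r\n", b"\n", b"\r")


def trailing_terminator(value: bytes) -> bytes:
    """Return the line ending found at the end of value, or b"" if there is none."""
    for ending in LINE_ENDINGS:
        if value.endswith(ending):
            return ending
    return b""


def decode_canal_json(value: bytes) -> dict:
    """Parse one message value after explicitly stripping trailing CR/LF bytes."""
    return json.loads(value.rstrip(b"\r\n"))


# Hypothetical, heavily shortened payload; real canal-json messages carry more fields.
sample = b'{"database":"soa_vehicle","table":"t_bike_info","type":"UPDATE"}\r\n'

print(trailing_terminator(sample))          # which terminator, if any, is present
print(decode_canal_json(sample)["table"])   # payload still parses once stripped
```

Stripping before parsing keeps a consumer working the same way on all three versions, whether or not the terminator is present.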

Here are the changefeed configuration details:

{

"upstream_id": 6947119091400868786,

"namespace": "default",

"id": "kafka-tidb-bike-info",

"sink_uri": "kafka://10.20.20.179:9092,10.20.20.180:9092,10.20.50.247:9092/tidb-bike-info?partition-num=5\u0026enable-old-value=true\u0026max-message-bytes=10000000\u0026replication-factor=3\u0026protocol=canal-json",

"config": {

"memory_quota": 1073741824,

"case_sensitive": true,

"enable_old_value": true,

"force_replicate": false,

"ignore_ineligible_table": false,

"check_gc_safe_point": true,

"enable_sync_point": false,

"bdr_mode": false,

"sync_point_interval": 600000000000,

"sync_point_retention": 86400000000000,

"filter": {

"rules": [

"soa_vehicle.t_bike_info",

"!test.*"

],

"event_filters": null

},

"mounter": {

"worker_num": 4

},

"sink": {

"protocol": "canal-json",

"schema_registry": "",

"csv": {

"delimiter": ",",

"quote": "\"",

"null": "\\N",

"include_commit_ts": false,

"binary_encoding_method": "base64"

},

"dispatchers": [

{

"matcher": [

"*.*"

],

"partition": "",

"topic": ""

}

],

"column_selectors": null,

"transaction_atomicity": "",

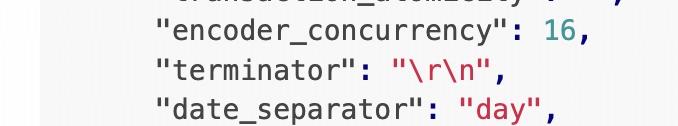

"encoder_concurrency": 16,

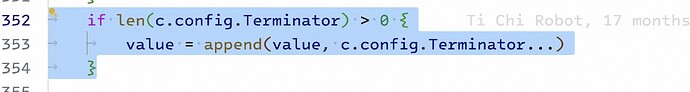

"terminator": "\r\n",

"date_separator": "day",

"enable_partition_separator": true,

"file_index_width": 0,

"kafka_config": null,

"advance_timeout": 150

},

"consistent": {

"level": "none",

"max_log_size": 64,

"flush_interval": 2000,

"meta_flush_interval": 200,

"encoding_worker_num": 16,

"flush_worker_num": 8,

"use_file_backend": false,

"memory_usage": {

"memory_quota_percentage": 50,

"event_cache_percentage": 0

}

},

"changefeed_error_stuck_duration": 1800000000000,

"sql_mode": "ONLY_FULL_GROUP_BY,STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION",

"synced_status": {

"synced_check_interval": 300,

"checkpoint_interval": 15

}

},

"create_time": "2024-09-28 01:03:15.506",

"start_ts": 452841583568486456,

"resolved_ts": 453536950647259837,

"target_ts": 0,

"checkpoint_tso": 453536950647259837,

"checkpoint_time": "2024-10-28 17:02:45.494",

"sort_engine": "unified",

"state": "normal",

"error": null,

"error_history": null,

"creator_version": "v6.5.10",

"task_status": [

{

"capture_id": "c662a571-6e80-4df3-9304-99738cc47e5f",

"table_ids": [

217

],

"table_operations": null

}

]

}
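When comparing changefeeds across versions, it can help to pull only the sink-related fields out of a dump like the one above. The sketch below is illustrative only: `summarize_sink` is a hypothetical helper, and the embedded JSON is a trimmed copy of the relevant fields from this post. The `terminator` value of `"\r\n"` stands out as a candidate for the trailing blank line, but whether v6.5.10 actually applies it to canal-json Kafka messages is an assumption that would need to be verified against the TiCDC source or release notes.

```python
import json


def summarize_sink(changefeed: dict) -> dict:
    """Collect the fields most relevant to how messages are encoded."""
    sink = changefeed["config"]["sink"]
    return {
        "creator_version": changefeed.get("creator_version"),
        "protocol": sink.get("protocol"),
        "terminator": sink.get("terminator"),
    }


# Trimmed copy of the changefeed detail; the raw string keeps the JSON
# escape sequence \r\n intact so that json.loads decodes it to CR LF.
detail = json.loads(r'''
{
  "creator_version": "v6.5.10",
  "config": {
    "sink": {"protocol": "canal-json", "terminator": "\r\n"}
  }
}
''')

summary = summarize_sink(detail)
# repr() makes the otherwise invisible terminator characters readable.
print({key: repr(value) for key, value in summary.items()})
```

Running the same summary against the changefeed detail after the upgrade to 7.5.4 would show whether the stored configuration differs or only the behavior changed.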