vcdog

October 23, 2024 01:53

1

[TiDB environment] Production

[Problem encountered: symptoms and impact]

Upgrade command executed:

```
# tiup cluster upgrade pro-tidb-cluster v7.5.4
```

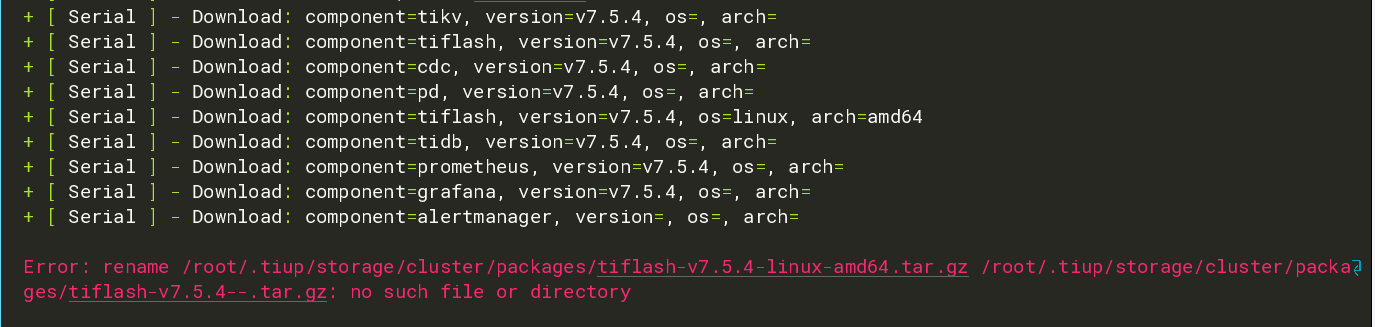

Upgrading the TiDB cluster from v6.5.0 to v7.5.4 fails with the following error:

```
Error: rename /root/.tiup/storage/cluster/packages/tiflash-v7.5.4-linux-amd64.tar.gz /root/.tiup/storage/cluster/packages/tiflash-v7.5.4–.tar.gz: no such file or directory
```

[Resource configuration] *Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page*

[Attachments: screenshots/logs/monitoring]

vcdog

October 23, 2024 01:58

2

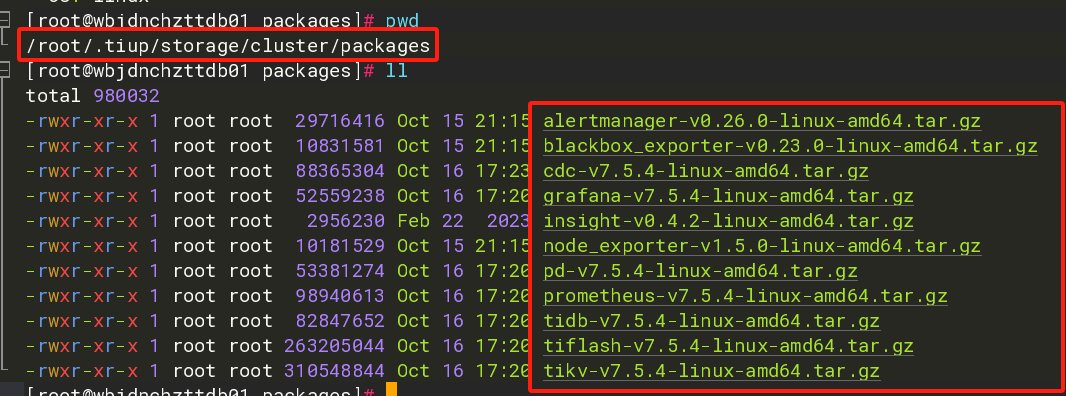

`tiflash-v7.5.4-linux-amd64.tar.gz` and the other package files were copied to the target path in advance.
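Since the tarballs were placed in the package directory by hand, one thing worth ruling out before retrying is a missing or misnamed file. A minimal sketch, assuming the cache directory and the `<component>-<version>-linux-amd64.tar.gz` naming taken from the error message; `pkg_name` and `check_cached_packages` are hypothetical helpers, not TiUP commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of TiUP): report which component tarballs are
# present in a package directory. The "<component>-<version>-linux-amd64.tar.gz"
# naming comes from the error message; assuming it for every component is a guess.

# Print the expected tarball file name for one component.
pkg_name() {
  local component=$1 version=$2
  printf '%s-%s-linux-amd64.tar.gz\n' "$component" "$version"
}

# Usage: check_cached_packages <dir> <version> <component>...
# Prints an OK or MISSING line per component; the exit status is the number
# of missing tarballs, so 0 means all of them are present.
check_cached_packages() {
  local dir=$1 version=$2 component file missing=0
  shift 2
  for component in "$@"; do
    file="$dir/$(pkg_name "$component" "$version")"
    if [[ -f $file ]]; then
      printf 'OK      %s\n' "$file"
    else
      printf 'MISSING %s\n' "$file"
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}
```

Example: `check_cached_packages /root/.tiup/storage/cluster/packages v7.5.4 tidb tikv pd tiflash cdc`.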

vcdog

October 23, 2024 02:14

3

> vcdog:
>
> tiflash-v7.5.4–.tar.gz

The complete upgrade procedure was as follows:

Download:

```shell
cd /acdata/dba_tools/tidb_source
wget https://download.pingcap.org/tidb-community-server-v7.5.4-linux-amd64.tar.gz
wget https://download.pingcap.org/tidb-community-toolkit-v7.5.4-linux-amd64.tar.gz
```
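Both downloads follow the same URL pattern, so they can be parameterized by version. A sketch, assuming the `https://download.pingcap.org/tidb-community-<kind>-<version>-linux-amd64.tar.gz` pattern used in the two `wget` commands also holds for other releases; `offline_pkg_url` and `download_offline_pkgs` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for fetching the offline packages. The URL pattern is
# copied from the wget commands above; that it holds for every release is an
# assumption.

# Print the download URL for one package kind: "server" or "toolkit".
offline_pkg_url() {
  local kind=$1 version=$2
  printf 'https://download.pingcap.org/tidb-community-%s-%s-linux-amd64.tar.gz\n' \
    "$kind" "$version"
}

# Usage: download_offline_pkgs <version> <dest_dir>
# Downloads the server and toolkit packages into dest_dir (wget -P) and stops
# at the first failed download.
download_offline_pkgs() {
  local version=$1 dest=$2 kind
  for kind in server toolkit; do
    wget -P "$dest" "$(offline_pkg_url "$kind" "$version")" || return 1
  done
}
```

Example: `download_offline_pkgs v7.5.4 /acdata/dba_tools/tidb_source`.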

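Following the steps in *Deploy a TiDB Cluster Using TiUP*, download the TiUP offline mirror for the new version and upload it to the control machine. After `local_install.sh` runs, TiUP is upgraded in place by overwriting the existing installation.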

```shell
tar xvfz tidb-community-server-v7.5.4-linux-amd64.tar.gz
sh tidb-community-server-v7.5.4-linux-amd64/local_install.sh
source /root/.bash_profile
tiup --version
```
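After `local_install.sh` and re-sourcing the profile, an optional sanity check is that TiUP's active mirror is now a local directory rather than the online repository. A sketch, assuming `tiup mirror show` prints the mirror address and that a local mirror appears as an absolute path; `is_local_mirror_addr` and `mirror_is_local` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical check: is TiUP's current mirror a local directory?
# Assumes `tiup mirror show` prints the mirror address, and that a local
# mirror appears as an absolute filesystem path (an online one as a URL).

# Decide from an address string; returns 0 for a local path.
is_local_mirror_addr() {
  local addr=$1
  [[ $addr == /* ]]
}

# Query TiUP and report the result; returns 1 for a remote mirror and
# 2 if the query itself fails.
mirror_is_local() {
  local addr
  addr=$(tiup mirror show) || return 2
  if is_local_mirror_addr "$addr"; then
    echo "local mirror: $addr"
  else
    echo "remote mirror: $addr" >&2
    return 1
  fi
}
```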

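After the in-place upgrade completes, merge the server and toolkit offline mirrors: run the following commands to merge the offline components into the server directory.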

```shell
tar xvfz tidb-community-toolkit-v7.5.4-linux-amd64.tar.gz
ls -ld tidb-community-server-v7.5.4-linux-amd64 tidb-community-toolkit-v7.5.4-linux-amd64
cd tidb-community-server-v7.5.4-linux-amd64/
cp -rp keys ~/.tiup/
tiup mirror merge ../tidb-community-toolkit-v7.5.4-linux-amd64
```
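The merge has to run from inside the server directory with the toolkit directory next to it, which is easy to get wrong when the steps are repeated for a new version. A sketch that derives both directory names from the version, assuming the `tidb-community-<kind>-<version>-linux-amd64` layout used in the merge commands; `merge_toolkit`, `run`, and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the merge commands above. With DRY_RUN=1 the
# tar/cp/tiup commands are printed instead of executed (the cd calls still run).

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: merge_toolkit <version> <base_dir>
# base_dir must contain the toolkit tarball and the extracted server directory.
merge_toolkit() {
  local version=$1 base=$2
  local server="tidb-community-server-${version}-linux-amd64"
  local toolkit="tidb-community-toolkit-${version}-linux-amd64"
  (   # subshell, so the caller's working directory is left unchanged
    cd "$base" || exit 1
    run tar xvfz "${toolkit}.tar.gz" || exit 1
    cd "$server" || exit 1
    # Same key copy as in the manual steps, done before merging.
    run cp -rp keys ~/.tiup/ || exit 1
    run tiup mirror merge "../${toolkit}"
  )
}
```

Example: `DRY_RUN=1 merge_toolkit v7.5.4 /acdata/dba_tools/tidb_source` prints the commands; drop `DRY_RUN=1` to run them.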

```
[root@wtj7vpnchtdb05 tidb_source]# tiup update cluster
Updated successfully!

# tiup cluster check pro-sunac-tidb-newfee --cluster
.... ....
10.3.8.193 selinux Pass SELinux is disabled
10.3.8.193 command Pass numactl: policy: default
10.3.8.193 os-version Pass OS is CentOS Linux 7 (Core) 7.9.2009
10.3.8.193 cpu-cores Pass number of CPU cores / threads: 48
10.3.8.193 cpu-governor Warn Unable to determine current CPU frequency governor policy
Checking region status of the cluster pro-sunac-tidb-newfee...
All regions are healthy.

# tiup cluster upgrade pro-sunac-tidb-newfee v7.5.4
```
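In the check output, each result line reads `<host> <check> <result> <message>`. The sketch below turns that into a gate that starts the upgrade only if no check reports `Fail`. The column layout is read off the sample output, the `Fail` keyword is assumed by analogy with `Pass` and `Warn`, and `count_failed_checks` / `upgrade_if_clean` are hypothetical helpers; `Warn` results such as the CPU governor warning are deliberately let through.

```shell
#!/usr/bin/env bash
# Hypothetical pre-upgrade gate built on `tiup cluster check ... --cluster`.
# Assumes result lines look like "<host> <check> <Pass|Warn|Fail> <message>",
# as in the sample output; all other lines are ignored.

# Read check output on stdin; echo failing lines to stderr and print the
# number of failures to stdout.
count_failed_checks() {
  awk '$3 == "Fail" { print > "/dev/stderr"; n++ } END { print n + 0 }'
}

# Usage: upgrade_if_clean <cluster> <version>
upgrade_if_clean() {
  local cluster=$1 version=$2 failures
  failures=$(tiup cluster check "$cluster" --cluster | count_failed_checks) || return 1
  if (( failures > 0 )); then
    echo "not upgrading: $failures check(s) reported Fail" >&2
    return 1
  fi
  tiup cluster upgrade "$cluster" "$version"
}
```

Example: `upgrade_if_clean pro-sunac-tidb-newfee v7.5.4`.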

vcdog

October 23, 2024 02:18

4

The cluster topology configuration is as follows:

```
# tiup cluster show-config pro-sunac-tidb-newfee
```

```yaml
global:
  user: tidb
  ssh_port: 22
  ssh_type: builtin
  deploy_dir: /acdata/tidb-cluster/tidb-deploy
  data_dir: /acdata/tidb-cluster/tidb-data
  os: linux
  systemd_mode: system
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/monitor-9100
  data_dir: /acdata/tidb-cluster/tidb-data/monitor-9100
  log_dir: /acdata/tidb-cluster/tidb-deploy/monitor-9100/log
server_configs:
  tidb:
    log.level: error
    new_collations_enabled_on_first_bootstrap: true
    performance.max-procs: 30
    performance.txn-total-size-limit: 4221225472
    prepared-plan-cache.enabled: true
    tidb_mem_oom_action: CANCEL
    tidb_mem_quota_query: 1073741824
    tmp-storage-path: /acdata/tidb-memory-cache
    tmp-storage-quota: -1
  tikv:
    coprocessor.region-max-size: 384MB
    coprocessor.region-split-size: 256MB
    raftdb.defaultcf.block-cache-size: 3932MB
    raftdb.max-background-jobs: 48
    raftstore.region-split-check-diff: 196MB
    raftstore.store-pool-size: 48
    readpool.coprocessor.use-unified-pool: true
    readpool.storage.use-unified-pool: false
    readpool.unified.max-thread-count: 48
    rocksdb.defaultcf.block-cache-size: 45875MB
    rocksdb.lockcf.block-cache-size: 3932MB
    rocksdb.max-background-jobs: 48
    rocksdb.writecf.block-cache-size: 32768MB
    server.end-point-concurrency: 32
    server.grpc-concurrency: 48
    storage.reserve-space: 0MB
    storage.scheduler-worker-pool-size: 32
  pd: {}
  tso: {}
  scheduling: {}
  tidb_dashboard: {}
  tiflash: {}
  tiproxy: {}
  tiflash-learner: {}
  pump: {}
  drainer: {}
  cdc: {}
  kvcdc: {}
  grafana: {}
tidb_servers:
- host: 10.3.8.193
  ssh_port: 22
  port: 4000
  status_port: 10080
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000/log
- host: 10.3.8.194
  ssh_port: 22
  port: 4000
  status_port: 10080
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000/log
- host: 10.3.8.212
  ssh_port: 22
  port: 4000
  status_port: 10080
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000/log
- host: 10.3.8.237
  ssh_port: 22
  port: 4000
  status_port: 10080
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tidb-4000/log
tikv_servers:
- host: 10.3.8.198
  ssh_port: 22
  port: 20160
  status_port: 20180
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160
  data_dir: /acdata/tidb-cluster/tidb-data/tikv-20160
  log_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160/log
- host: 10.3.8.199
  ssh_port: 22
  port: 20160
  status_port: 20180
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160
  data_dir: /acdata/tidb-cluster/tidb-data/tikv-20160
  log_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160/log
- host: 10.3.8.200
  ssh_port: 22
  port: 20160
  status_port: 20180
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160
  data_dir: /acdata/tidb-cluster/tidb-data/tikv-20160
  log_dir: /acdata/tidb-cluster/tidb-deploy/tikv-20160/log
tiflash_servers:
- host: 10.3.8.201
  ssh_port: 22
  tcp_port: 9000
  http_port: 8123
  flash_service_port: 3930
  flash_proxy_port: 20170
  flash_proxy_status_port: 20292
  metrics_port: 8234
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tiflash-9000
  data_dir: /acdata/tidb-cluster/tidb-data/tiflash-9000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tiflash-9000/log
- host: 10.3.8.202
  ssh_port: 22
  tcp_port: 9000
  http_port: 8123
  flash_service_port: 3930
  flash_proxy_port: 20170
  flash_proxy_status_port: 20292
  metrics_port: 8234
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/tiflash-9000
  data_dir: /acdata/tidb-cluster/tidb-data/tiflash-9000
  log_dir: /acdata/tidb-cluster/tidb-deploy/tiflash-9000/log
  arch: amd64
  os: linux
tiproxy_servers: []
pd_servers:
- host: 10.3.8.244
  ssh_port: 22
  name: pd-10.3.8.244-2379
  client_port: 2379
  peer_port: 2380
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379
  data_dir: /acdata/tidb-cluster/tidb-data/pd-2379
  log_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379/log
- host: 10.3.8.245
  ssh_port: 22
  name: pd-10.3.8.245-2379
  client_port: 2379
  peer_port: 2380
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379
  data_dir: /acdata/tidb-cluster/tidb-data/pd-2379
  log_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379/log
- host: 10.3.8.246
  ssh_port: 22
  name: pd-10.3.8.246-2379
  client_port: 2379
  peer_port: 2380
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379
  data_dir: /acdata/tidb-cluster/tidb-data/pd-2379
  log_dir: /acdata/tidb-cluster/tidb-deploy/pd-2379/log
cdc_servers:
- host: 10.3.8.203
  ssh_port: 22
  port: 8300
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/cdc-8300
  data_dir: /acdata/tidb-cluster/tidb-data/cdc-8300
  log_dir: /acdata/tidb-cluster/tidb-deploy/cdc-8300/log
  gc-ttl: 86400
  ticdc_cluster_id: ""
monitoring_servers:
- host: 10.3.8.194
  ssh_port: 22
  port: 9090
  ng_port: 12020
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/prometheus-9090
  data_dir: /acdata/tidb-cluster/tidb-data/prometheus-9090
  log_dir: /acdata/tidb-cluster/tidb-deploy/prometheus-9090/log
  external_alertmanagers: []
grafana_servers:
- host: 10.3.8.194
  ssh_port: 22
  port: 3000
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/grafana-3000
  username: admin
  password: admin
  anonymous_enable: false
  root_url: ""
  domain: ""
alertmanager_servers:
- host: 10.3.8.194
  ssh_port: 22
  web_port: 9093
  cluster_port: 9094
  deploy_dir: /acdata/tidb-cluster/tidb-deploy/alertmanager-9093
  data_dir: /acdata/tidb-cluster/tidb-data/alertmanager-9093
  log_dir: /acdata/tidb-cluster/tidb-deploy/alertmanager-9093/log
```

1. `tiup cluster edit-config pro-sunac-tidb-newfee`

舞动梦灵

October 23, 2024 07:36

8

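You'd be better off doing it online, upgrading without stopping the cluster. Going the offline route only increases the risk.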