【 TiDB 使用环境】生产环境

【 TiDB 版本】V4.0.5

【遇到的问题:问题现象及影响】

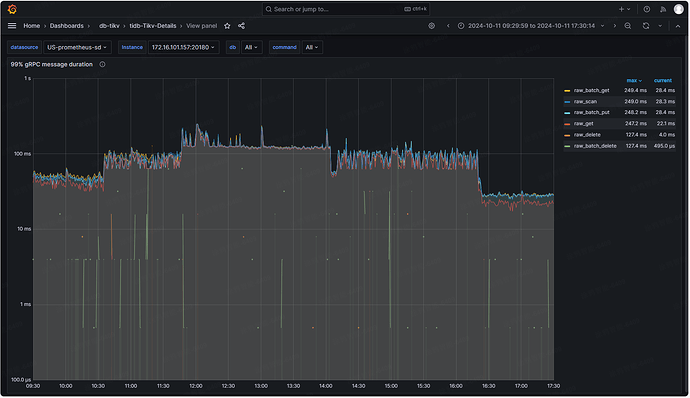

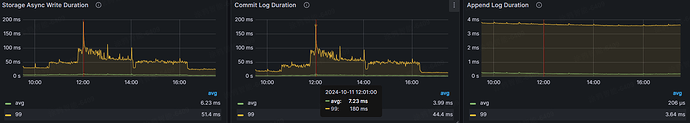

线上业务 gRPC message duration 经常变大,分析下来是 Commit log duration 变大的原因(基本 Commit log duration 涨多少 gRPC message duration 也涨多少),看官方有以下说明:

Commit log duration 是 Raftstore 将 Raft 日志提交到相应 Region 的多数成员所花费的时间。如果该指标的值较高且波动较大,可能的原因有:

- Raftstore 的负载较高

- append log 较慢

- Raft 日志由于网络阻塞无法及时提交

自己分析:raftstore.store-pool-size=8,延迟大时也只有 380% 左右,负载不高。append log 延迟监控中没变化也不是。那大概率是网络阻塞的原因了,另外官方也有说 raft 有流控机制可能会限制 tcp 传输,想请教大佬们有哪个指标或者日志能看到流控相关,如果分析的有不对的地方也请教下

raft commit log 慢。TiKV Grafana 的 Raft I/O 和 commit log duration 比较高(4.x 版本的 Grafana 才有该 metric)。每个 Region 对应一个独立的 Raft group,Raft 本身是有流控机制的,类似 TCP 的滑动窗口机制,通过参数 [raftstore] raft-max-inflight-msgs = 256 来控制滑动窗口的大小,如果有热点写入并且 commit log duration 比较高可以适度调大改参数,比如 1024。

官方文档:

海量 Region 集群调优最佳实践 | TiDB 文档中心 (pingcap.com)

TiDB 磁盘 I/O 过高的处理办法 | TiDB 文档中心 (pingcap.com)

【资源配置】

有猫万事足

2

https://docs.pingcap.com/zh/tidb/stable/grafana-overview-dashboard#system-info

这个面板下面的tcp retrans有异常嘛?网络没问题的话,增大滑动窗口确实对小的网络抖动会容忍度高一些,如果网络的问题不解决,只调大滑动窗口,感觉作用有限。

配置的原因没有加这个面板。应该也不是服务器网络的原因,因为一般出现这种情况时,restart 这个节点就又恢复正常了。还没调这个参数,不清楚效果如何,想先来问问大家有没有碰到过类似问题

commit log 延迟变大时,tikv 的日志如下,看起来是 rcp 连接异常了,但其它时间点也经常有这类错

[2024/10/11 02:34:48.638 +00:00] [WARN] [raft_client.rs:296] [“RPC batch_raft fail”] [err=“Some(RpcFailure(RpcStatus { status: 1-CANCELLED, details: Some("Cancelled") }))”] [sink_err=“Some(RpcFinished(Some(RpcStatus { status: 1-CANC

ELLED, details: Some("Cancelled") })))”] [to_addr=172.16.120.56:20160]

[2024/10/11 02:34:48.638 +00:00] [INFO] [store.rs:2108] [“broadcasting unreachable”] [unreachable_store_id=10659048] [store_id=621378]

[2024/10/11 02:34:48.638 +00:00] [WARN] [raft_client.rs:199] [“send to 172.16.120.56:20160 fail, the gRPC connection could be broken”]

[2024/10/11 02:34:48.639 +00:00] [ERROR] [transport.rs:163] [“send raft msg err”] [err=“Other("[src/server/raft_client.rs:208]: RaftClient send fail")”]

[2024/10/11 02:34:48.639 +00:00] [INFO] [raft_client.rs:48] [“server: new connection with tikv endpoint”] [addr=172.16.120.56:20160]

[2024/10/11 02:34:48.640 +00:00] [INFO] [transport.rs:144] [“resolve store address ok”] [addr=172.16.120.56:20160] [store_id=10659048]

[2024/10/11 02:34:48.654 +00:00] [INFO] [] [“New connected subchannel at 0x7f3c77f1c7d0 for subchannel 0x7f3c84db3e80”]

[2024/10/11 02:34:55.882 +00:00] [INFO] [peer.rs:900] [“failed to schedule peer tick”] [err=“sending on a disconnected channel”] [tick=CHECK_PEER_STALE_STATE] [peer_id=10878347] [region_id=10598774]

[2024/10/11 03:46:30.916 +00:00] [WARN] [raft_client.rs:296] [“RPC batch_raft fail”] [err=“Some(RpcFailure(RpcStatus { status: 1-CANCELLED, details: Some("Cancelled") }))”] [sink_err=“Some(RpcFinished(Some(RpcStatus { status: 1-CANCELLED, details: Some("Cancelled") })))”] [to_addr=172.16.110.110:20160]

[2024/10/11 03:46:30.916 +00:00] [INFO] [store.rs:2108] [“broadcasting unreachable”] [unreachable_store_id=10698785] [store_id=621378]

[2024/10/11 03:46:30.917 +00:00] [WARN] [raft_client.rs:199] [“send to 172.16.110.110:20160 fail, the gRPC connection could be broken”]

[2024/10/11 03:46:30.919 +00:00] [ERROR] [transport.rs:163] [“send raft msg err”] [err=“Other("[src/server/raft_client.rs:208]: RaftClient send fail")”]

[2024/10/11 03:46:30.922 +00:00] [INFO] [transport.rs:144] [“resolve store address ok”] [addr=172.16.110.110:20160] [store_id=10698785]

[2024/10/11 03:46:30.922 +00:00] [INFO] [raft_client.rs:48] [“server: new connection with tikv endpoint”] [addr=172.16.110.110:20160]

[2024/10/11 03:46:30.923 +00:00] [INFO] [transport.rs:144] [“resolve store address ok”] [addr=172.16.110.110:20160] [store_id=10698785]

[2024/10/11 03:46:30.940 +00:00] [INFO] [] [“New connected subchannel at 0x7f3cf7d22cf0 for subchannel 0x7f3c7dccec00”]

[2024/10/11 03:46:52.654 +00:00] [INFO] [size.rs:171] [“approximate size over threshold, need to do split check”] [threshold=150994944] [size=154676072] [region_id=10691942]

[2024/10/11 03:46:53.755 +00:00] [INFO] [split_check.rs:283] [“update approximate size and keys with accurate value”] [keys=2310373] [size=140101646] [region_id=10691942]

[2024/10/11 03:46:53.760 +00:00] [WARN] [mod.rs:89] [“handle task [split check worker] Split Check Task for 10691942, auto_split: true”] [takes=1104]

有猫万事足

5

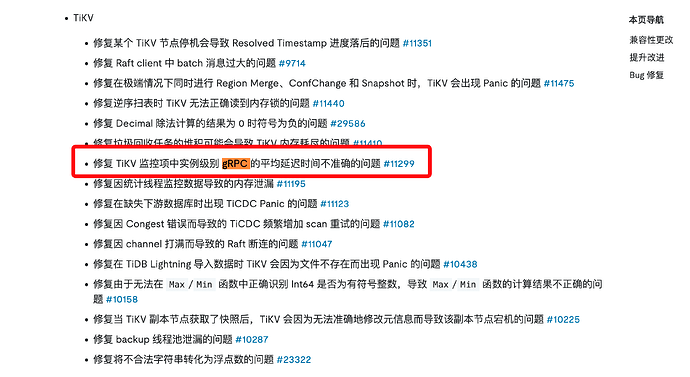

像是bug。最好的办法还是升级,4.0都已经EOL了。实在不行也要尝试升到子版本最新看看。4.0.5还是有点低。

WalterWj

(王军 - PingCAP)

7

看监控,你这 batch get 也不低  。我觉得还是可以先找找慢 sql 优化 优化看看。

。我觉得还是可以先找找慢 sql 优化 优化看看。

版本还是要升级的。 4.0 已经 EOL 半年了。

业务直接写的 tikv,没用 tidb,看不了慢 sql

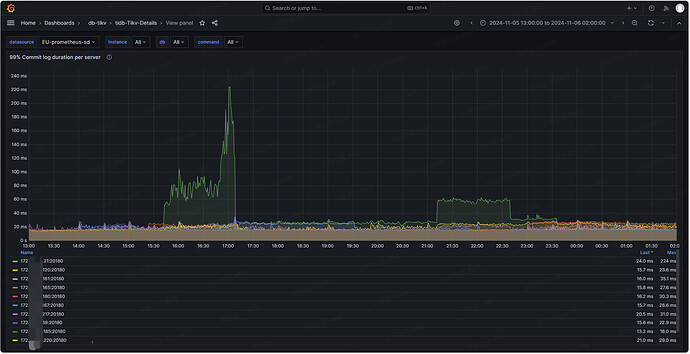

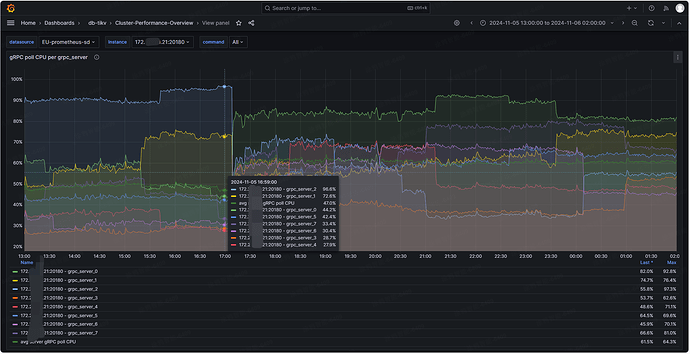

大佬,分析下来,感觉是某个 grpc_server cpu 过高的原因,看监控,只要有其中一个 grpc_server cpu 高于 90% commit log 延迟就变大,再高些延迟更大。但整体 grpc_server cpu 使用率不高,平均在 50% 左右。这个是什么分配策略呢,能不能调整下

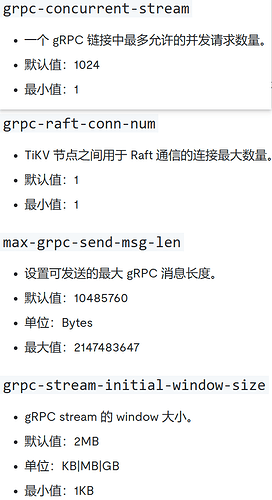

官方 grpc 有这几个参数,但描述的太简单了,不清楚是干吗用的

这个也能复现出来,只要有一个 grpc_server cpu 高了,延迟就会变大