【 TiDB 使用环境】生产环境

【 TiDB 版本】

7.1.5

【复现路径】做过哪些操作出现的问题

【遇到的问题:问题现象及影响】

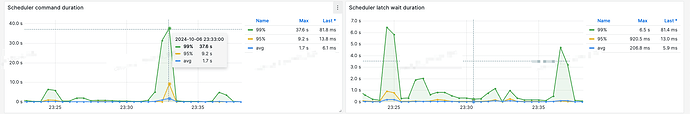

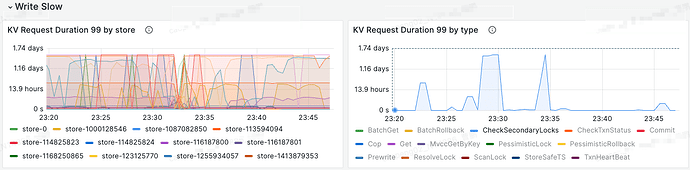

tidb集群抖动频繁,业务经常出现瞬间大批量超时的情况,查看监控日志发现出现问题时scheduler延迟变高、tikv部分类型请求延迟变高

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

【附件:截图/日志/监控】

异常的tikv日志 伴随着大量的 如下日志

[WARN] [scheduler.rs:1132] [“[region 2271758687] scheduler handle command: prewrite, ts: 453102623728076289, details: SchedulerDetails { tracker: TrackerToken { shard_id: 24, seq: 39744222, key: 308 }, stat: Statistics { lock: CfStatistics { processed_keys: 0, get: 1, next: 0, prev: 0, seek: 0, seek_for_prev: 0, over_seek_bound: 0, flow_stats: FlowStatistics { read_keys: 0, read_bytes: 0 }, next_tombstone: 0, prev_tombstone: 0, seek_tombstone: 0, seek_for_prev_tombstone: 0, raw_value_tombstone: 0 }, write: CfStatistics { processed_keys: 0, get: 0, next: 0, prev: 0, seek: 2, seek_for_prev: 0, over_seek_bound: 0, flow_stats: FlowStatistics { read_keys: 0, read_bytes: 0 }, next_tombstone: 0, prev_tombstone: 0, seek_tombstone: 0, seek_for_prev_tombstone: 0, raw_value_tombstone: 0 }, data: CfStatistics { processed_keys: 0, get: 0, next: 0, prev: 0, seek: 0, seek_for_prev: 0, over_seek_bound: 0, flow_stats: FlowStatistics { read_keys: 0, read_bytes: 0 }, next_tombstone: 0, prev_tombstone: 0, seek_tombstone: 0, seek_for_prev_tombstone: 0, raw_value_tombstone: 0 }, processed_size: 0 }, start_process_instant: Monotonic(Timespec { sec: 128641583, nsec: 582539576 }), quota_limit_delay_nanos: 0, flow_control_nanos: 7873000000 }”] [takes=7993]

那个时间点服务器的资源使用呢?

同时也会伴随着如下的日志

[WARN] [prewrite.rs:767] [“commit_ts is too large, fallback to normal 2PC”] [lock=“Lock { lock_type: Put, primary_key: 748000000000081D3D5F6980000000000000010164617933302F7261FF77732F6433307878FF7075622F32303234FF5F31305F30392F39FF6B31397969366468FF676668737236646FFF356261306B347973FF30766B6C3778765FFF302F323936373339FF3036315F32363836FF352E6F7468657200FE010000000000000000F7, start_ts: TimeStamp(453102625681047932), ttl: 3000, short_value: 30, for_update_ts: TimeStamp(0), txn_size: 1, min_commit_ts: TimeStamp(453102625681047933), use_async_commit: true, secondaries: , rollback_ts: , last_change_ts: TimeStamp(0), versions_to_last_change: 0, txn_source: 0 }”] [max_commit_ts=453102626205335932] [min_commit_ts=453102631395793792] [start_ts=453102625681047932] [key=748000000000081DFF3D5F698000000000FF0000020164617933FF302F7261FF77732FFF6433307878FF7075FF622F32303234FF5FFF31305F30392F39FFFF6B31397969366468FFFF67666873723664FF6FFF356261306B34FF7973FF30766B6C37FF78765FFF302F3239FF36373339FF303631FF5F32363836FF352EFF6F7468657200FE04FF72CA737E7F901600FF0380000000000000FF0001000000000000FF0000F70308000000FF828CF6F500000000FB]

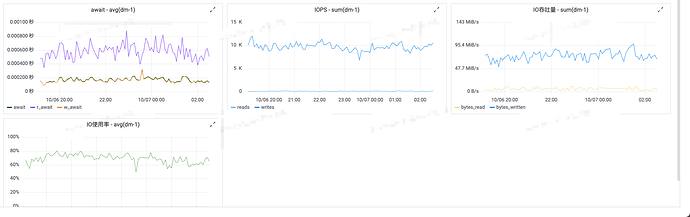

看这个日志像是磁盘读写跟不上了。磁盘有监控吗?

慢日志里面的慢 sql 的耗时分布看下。感觉都是在等 lock?

看下 kv virtualization 是不是有明显的热点region

每个节点的 IO 在同一个时间段的波动都类似么?还是差别很大?

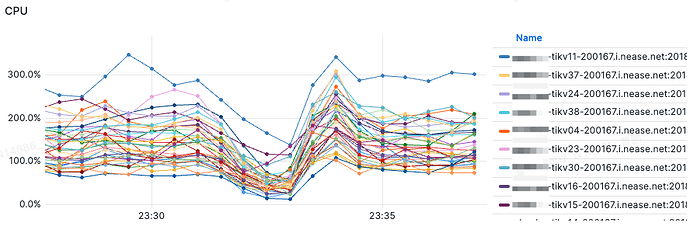

从你提供的图来看,cpu 的使用率很低了,

这儿的 lock 的问题,什么时候出现的?

1、先查看什么情况导致的,系统资源此刻的使用情况;

2、系统日志、数据库日志都看看。

感觉是 1pc 遇到 commit ts too large 然后回退到 2pc。

然后 lock key 是:

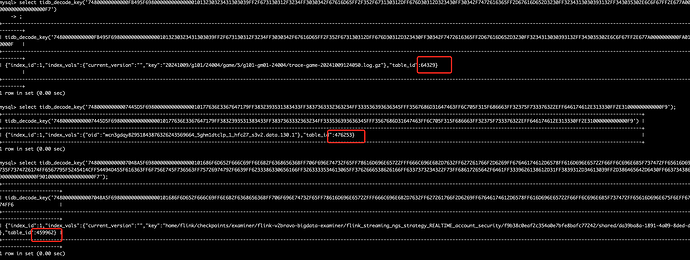

(root@127.0.0.1) [(none)]>select tidb_decode_key('748000000000081D3D5F6980000000000000010164617933302F7261FF77732F6433307878FF7075622F32303234FF5F31305F30392F39FF6B31397969366468FF676668737236646FFF356261306B347973FF30766B6C3778765FFF302F323936373339FF3036315F32363836FF352E6F7468657200FE010000000000000000F7');

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| tidb_decode_key('748000000000081D3D5F6980000000000000010164617933302F7261FF77732F6433307878FF7075622F32303234FF5F31305F30392F39FF6B31397969366468FF676668737236646FFF356261306B347973FF30766B6C3778765FFF302F323936373339FF3036315F32363836FF352E6F7468657200FE0 |

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| {"index_id":1,"index_vals":"day30/raws/d30xxpub/2024_10_09/9k19yi6dhgfhsr6do5ba0k4ys0vkl7xv_0/296739061_26865.other, ","table_id":531773} |

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

(root@127.0.0.1) [(none)]>select tidb_decode_key('748000000000081DFF3D5F698000000000FF0000020164617933FF302F7261FF77732FFF6433307878FF7075FF622F32303234FF5FFF31305F30392F39FFFF6B31397969366468FFFF67666873723664FF6FFF356261306B34FF7973FF30766B6C37FF78765FFF302F3239FF36373339FF303631FF5F32363836FF352EFF6F7468657200FE04FF72CA737E7F901600FF0380000000000000FF0001000000000000FF0000F70308000000FF828CF6F500000000FB');

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| tidb_decode_key('748000000000081DFF3D5F698000000000FF0000020164617933FF302F7261FF77732FFF6433307878FF7075FF622F32303234FF5FFF31305F30392F39FFFF6B31397969366468FFFF67666873723664FF6FFF356261306B34FF7973FF30766B6C37FF78765FFF302F3239FF36373339FF303631FF5F323 |

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| {"index_id":2,"index_vals":"day30/raws/d30xxpub/2024_10_09/9k19yi6dhgfhsr6do5ba0k4ys0vkl7xv_0/296739061_26865.other, 8271550652762560000, 0, , -8646911282361075979","table_id":531773} |

+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

你们自己看看 531773 表,1/2 索引是啥,有没有逻辑上的锁冲突。

那感觉是哪里夯住了。

感觉有io阻塞

看到前面日志有 flow_control_nanos: 7873000000,检查一下 TiKV Details 里面的 Flow Control 行呢?可能是 RocksDB compact 不过来导致触发流控了。