【 TiDB 使用环境】测试

【 TiDB 版本】 v8.1.0

【复现路径】做过哪些操作出现的问题

【遇到的问题:问题现象及影响】

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

【附件:截图/日志/监控】

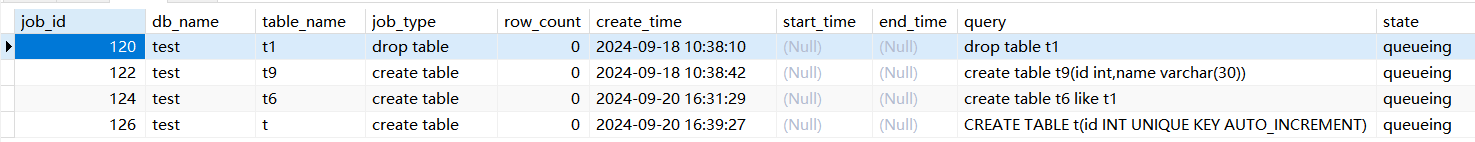

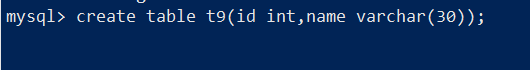

刚刚启动了自己的虚拟机,然后执行如下语句建表,一直处于等待中:这个要如何定位?

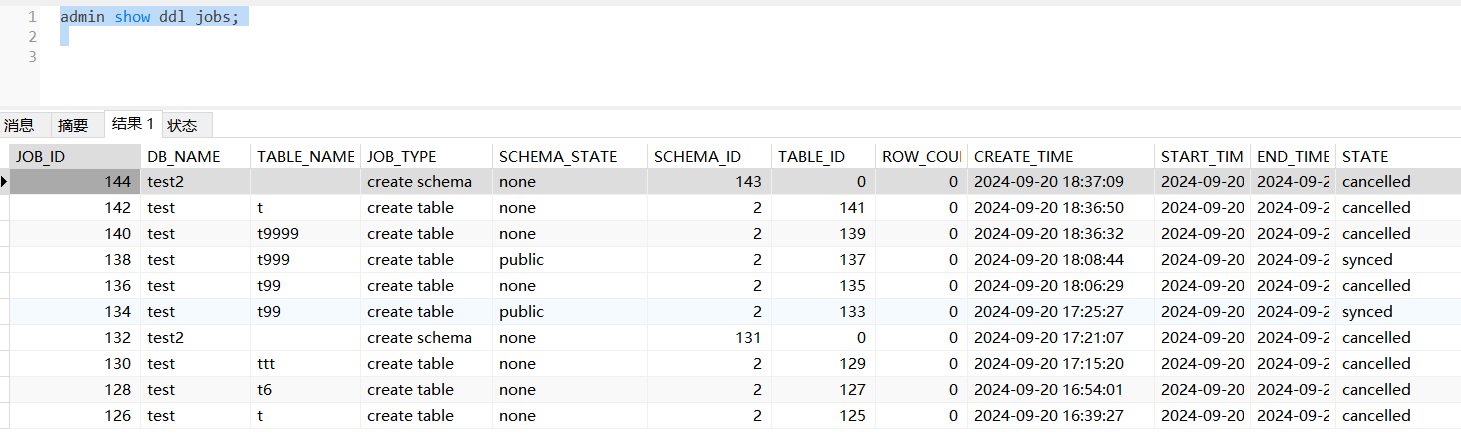

ADMIN SHOW DDL JOBS;

看看DDL队列什么情况先,再看看后台日志,有无明显的报错,集群状态是否正常

- 在正常集群启动后,第一个 DDL 操作的执行时间可能会比较久,一般在 30s 左右,这个原因是刚启动时 TiDB 在竞选处理 DDL 的 leader。

- 看集群状态是否正常。

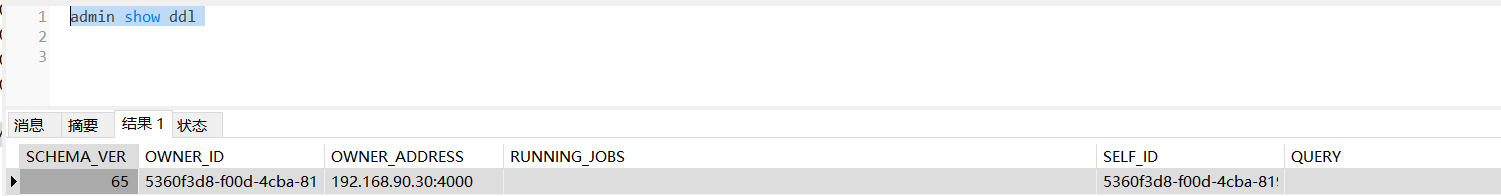

- ADMIN SHOW DDL;查看是否有正常运行的DDL。

- ADMIN SHOW DDL JOBS;查看DDL运行状态。

- 再查看对应段的TiDB日志。

SHOW DDL JOBS

admin show ddl jobs; 然后再看看tidb日志有没有什么错误,集群状态是否异常

我觉得这个不错

我这通过admin cancel ddl jobs 120,122,124,126;中断了这些ddl job后,再次执行建表语句,还是会卡住:create table t6(id int auto_increment primary key,name varchar(30));

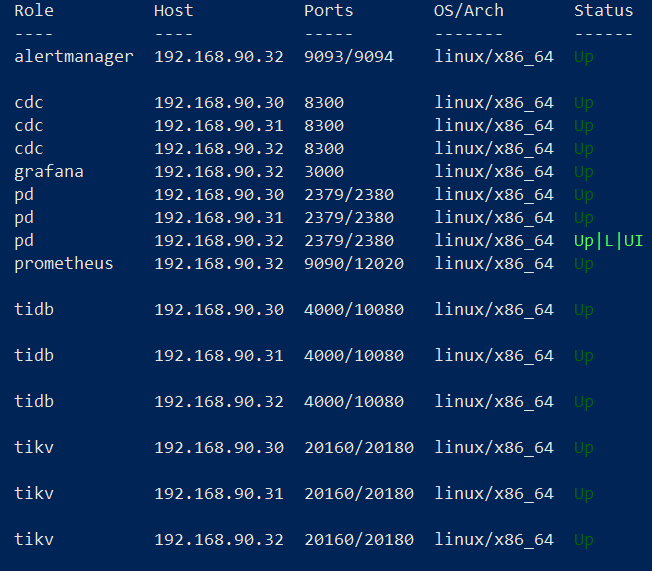

tiup display 看一下集群的状态,以及看一下 tidb.log

集群状态是正常的:

tidb.log的内容:

[2024/09/20 16:51:49.549 +08:00] [INFO] [domain.go:2872] [“refreshServerIDTTL succeed”] [serverID=1616] [“lease id”=2702920d2d457e59]

[2024/09/20 16:52:28.781 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=781.131455637s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:52:31.081 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1261.516178957s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:52:45.396 +08:00] [INFO] [server.go:895] [kill] [conn=3388997848] [query=false]

[2024/09/20 16:53:19.874 +08:00] [INFO] [server.go:895] [kill] [conn=3388997850] [query=false]

[2024/09/20 16:53:28.782 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=841.132391204s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:53:28.793 +08:00] [INFO] [server.go:895] [kill] [conn=3388997850] [query=true]

[2024/09/20 16:53:31.182 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1321.616363632s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:53:31.576 +08:00] [INFO] [server.go:895] [kill] [conn=3388997848] [query=true]

[2024/09/20 16:54:01.011 +08:00] [INFO] [session.go:3894] [“CRUCIAL OPERATION”] [conn=3388997862] [schemaVersion=63] [cur_db=test] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [user=root@%]

[2024/09/20 16:54:01.052 +08:00] [INFO] [ddl_worker.go:260] [“add DDL jobs”] [category=ddl] [“batch count”=1] [jobs="ID:128, Type:create table, State:queueing, SchemaState:none, SchemaID:2, TableID:127, RowCount:0, ArgLen:2, start time: 2024-09-20 16:54:01.013 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, LocalMode: false; "] [table=true]

[2024/09/20 16:54:01.055 +08:00] [INFO] [ddl.go:1181] [“start DDL job”] [category=ddl] [job=“ID:128, Type:create table, State:queueing, SchemaState:none, SchemaID:2, TableID:127, RowCount:0, ArgLen:2, start time: 2024-09-20 16:54:01.013 +0800 CST, Err:, ErrCount:0, SnapshotVersion:0, LocalMode: false”] [query=“create table t6(id int auto_increment primary key,name varchar(30))”]

[2024/09/20 16:54:28.881 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=901.231444853s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:54:31.281 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1381.71586338s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:55:01.081 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=60.070759342s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:55:28.982 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=961.33255519s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:55:31.381 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1441.816035236s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:56:01.081 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=120.070932435s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:56:29.081 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1021.431849561s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:56:31.481 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1501.915771587s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:56:49.548 +08:00] [INFO] [domain.go:2872] [“refreshServerIDTTL succeed”] [serverID=1616] [“lease id”=2702920d2d457e59]

[2024/09/20 16:57:01.181 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=180.170547533s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:57:29.081 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1081.432061163s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:57:31.481 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1561.916059075s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:58:01.182 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=240.171566652s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:58:29.082 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1141.432550775s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:58:31.581 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1622.01556988s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:59:01.281 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=300.270906897s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:59:29.181 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1201.531992107s] [conn=3388997850] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“CREATE TABLE t(id INT UNIQUE KEY AUTO_INCREMENT)”] [session_alias=] [“affected rows”=0]

[2024/09/20 16:59:31.581 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=1682.015948569s] [conn=3388997848] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6 like t1”] [session_alias=] [“affected rows”=0]

[2024/09/20 17:00:01.382 +08:00] [WARN] [expensivequery.go:145] [expensive_query] [cost_time=360.371186813s] [conn=3388997862] [user=root] [database=test] [txn_start_ts=0] [mem_max=“0 Bytes (0 Bytes)”] [sql=“create table t6(id int auto_increment primary key,name varchar(30))”] [session_alias=] [“affected rows”=0]

定位 DDL 执行卡住的问题

- 先排除 DDL 语句通常执行慢的可能原因。

- 使用以下任一方法找出 DDL owner 节点:

-

通过

curl http://{TiDBIP}:10080/info/all获取当前集群的 Owner -

通过监控 DDL > DDL META OPM 查看某个时间段的 Owner

-

如果 Owner 不存在,尝试手动触发 Owner 选举:

curl -X POST http://{TiDBIP}:10080/ddl/owner/resign。 -

如果 Owner 存在,导出 Goroutine 堆栈并检查可能卡住的地方。

我看我这的owner是存在的,不过“导出 Goroutine 堆栈并检查可能卡住的地方”这个操作就不知道怎么操作了,或者说操作后大概率也是看不到这个堆栈信息的

$ curl http://{192.168.90.30}:10080/info/all

{

“servers_num”: 3,

“owner_id”: “44c44aa1-846d-4cb8-b311-d568c6504526”,

“is_all_server_version_consistent”: true,

“all_servers_info”: {

“44c44aa1-846d-4cb8-b311-d568c6504526”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “44c44aa1-846d-4cb8-b311-d568c6504526”,

“ip”: “192.168.90.32”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726798009,

“labels”: {},

“server_id”: 169

},

“6615af9e-9346-41eb-a7b3-0fa7d157e4bc”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “6615af9e-9346-41eb-a7b3-0fa7d157e4bc”,

“ip”: “192.168.90.30”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726798009,

“labels”: {},

“server_id”: 1616

},

“cd275b9d-ed0d-47ad-a2d0-e485ff6ed809”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “cd275b9d-ed0d-47ad-a2d0-e485ff6ed809”,

“ip”: “192.168.90.31”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726803316,

“labels”: {},

“server_id”: 1897

}

}

是不是阻塞了啊? drop table t1一直排队中,是不是在t1表上有什么操作

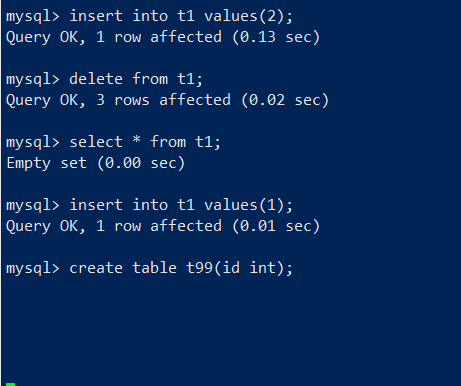

就是哪怕我先把所有queueing排队的ddl job都先cancel了,然后再去建表,都会卡住,即使集群重启了,就卡create table操作

附件是通过:curl -G “http://{192.168.90.30}:10080/debug/pprof/goroutine?debug=2” > goroutine 获取的Goroutine 堆栈信息(在获取的时候,有先执行create table卡在那边

goroutine.txt (182.7 KB)

),当前owner id在192.168.90.30上,刚刚有重启集群,owner id信息如下所示:

{

“servers_num”: 3,

“owner_id”: “d6de42c4-d0c4-47a7-be90-9a3a1fe219f7”,

“is_all_server_version_consistent”: true,

“all_servers_info”: {

“3f6add4e-8f62-4368-8a4e-e9cc6d1a9f87”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “3f6add4e-8f62-4368-8a4e-e9cc6d1a9f87”,

“ip”: “192.168.90.32”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726826671,

“labels”: {},

“server_id”: 89

},

“d6de42c4-d0c4-47a7-be90-9a3a1fe219f7”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “d6de42c4-d0c4-47a7-be90-9a3a1fe219f7”,

“ip”: “192.168.90.30”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726826658,

“labels”: {},

“server_id”: 1063

},

“e85282ad-d2c1-4e61-9735-aaad1c188a4b”: {

“version”: “8.0.11-TiDB-v8.1.0”,

“git_hash”: “945d07c5d5c7a1ae212f6013adfb187f2de24b23”,

“ddl_id”: “e85282ad-d2c1-4e61-9735-aaad1c188a4b”,

“ip”: “192.168.90.31”,

“listening_port”: 4000,

“status_port”: 10080,

“lease”: “45s”,

“binlog_status”: “Off”,

“start_timestamp”: 1726826663,

“labels”: {},

“server_id”: 1592

}

}

当 TiDB 中的 DDL 操作卡住时,可以采取以下步骤来处理[1][2]:

- 首先,使用

ADMIN SHOW DDL命令查看当前正在执行的 DDL 任务状态:

ADMIN SHOW DDL;

- 如果发现 DDL 任务长时间没有进展,可以尝试使用

ADMIN CANCEL DDL JOBS命令取消该 DDL 任务:

ADMIN CANCEL DDL JOBS <job_id>;

其中 <job_id> 是从 ADMIN SHOW DDL 命令输出中获得的任务 ID。

-

如果取消操作不成功,可以考虑重启 TiDB 集群中的 DDL Owner 节点。可以通过

ADMIN SHOW DDL命令查看当前的 DDL Owner 节点。 -

对于物理 DDL 操作(如

ADD INDEX),可能需要更长的执行时间,特别是对于大表。在这种情况下,可以考虑使用 TiDB 的在线 DDL 功能,它允许在 DDL 执行期间继续进行 DML 操作[3]。 -

如果问题仍然存在,可以检查 TiDB 和 TiKV 的日志,查看是否有任何错误信息或异常情况。

-

对于分布式执行的 DDL 任务(如 TiDB v6.2.0 及以后版本中的并行 DDL 框架),可能需要检查多个 TiDB 节点的状态[4]。

看下你 dashboard的 资源呗

目前是恢复了:先全部cancel 当前在的ddl job(这些job也是create table新表的操作),然后重启整个集群。如果只是cancel全部ddl job,不重启集群的话,是不行的,后面我看看是否还会重现这种问题,谢谢大家了