【TiDB environment】Production

【TiDB version】8.1.0

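【Reproduction steps】I started 114 changefeeds. All of them were created successfully, and listing them shows every one in the `normal` state.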

【Problem and impact】However, log files keep piling up under the log directory, and they are full of lines like this:

```
[2024/08/21 09:48:31.344 +08:00] [WARN] [sink.go:488] ["meet nil column data, should not happened in production env"] [cols="[{"column_id":1,"value":2594073387516685639},{"column_id":2,"value":"NDIzZThmMTE5YjU5YzI3MmJkMWNjN2JlZDUxZmYwOGE="},{"column_id":3,"value":"MTMxNTM1NDky"},{"column_id":4,"value":"MjAyMw=="},{"column_id":5,"value":"NDQwMzA2MTE3MDE2NzIz"},{"column_id":6,"value":"OTE0NDAzMDBNQTVESEs2UjQ3"},null,{"column_id":8,"value":"5rex5Zyz5biC5aSn5qKB5Zu95Yib56eR5oqA5pyJ6ZmQ5YWs5Y+4"},{"column_id":9,"value":"MjAyM+W5tOW6puaKpeWRig=="},{"column_id":10,"value":"MjAyNC0wOC0xMg=="},{"column_id":11,"value":null},{"column_id":12,"value":null},{"column_id":13,"value":null},{"column_id":14,"value":"MTM1MTA0OTIzMjE="},{"column_id":15,"value":"MTA0NjQ5NDIwNEBxcS5jb20="},{"column_id":16,"value":null},{"column_id":17,"value":null},{"column_id":18,"value":null},{"column_id":19,"value":null},{"column_id":20,"value":null},{"column_id":21,"value":"5rex5Zyz5biC5a6d5a6J5Yy65rKZ5LqV6KGX6YGT5aOG5bKX5Y2O576O5bGFM+agizIwMDg="},{"column_id":22,"value":"55S15a2Q5Lqn5ZOB5Y+K5ZGo6L656YWN5Lu255qE5oqA5pyv5byA5Y+R5LiO6ZSA5ZSu77yb55S16ISR5ZGo6L656YWN5Lu244CB5omL5py66YWN5Lu244CB56e75Yqo55S15rqQ44CB6Z+z5ZON44CB55S15a2Q5raI6LS55ZOB5Y+K55S15a2Q56S85ZOB55qE6ZSA5ZSu77yb5Zu95YaF6LS45piT77yM6LSn54mp5Y+K5oqA5pyv6L+b5Ye65Y+j44CCKOazleW+i+OAgeihjOaUv+azleinhOOAgeWbveWKoemZouWGs+WumuinhOWumuWcqOeZu+iusOWJjemhu+e7j+aJueWHhueahOmhueebrumZpOWklu+8iQ=="},null,{"column_id":24,"value":"NTE4MDAw"},{"column_id":25,"value":null},null,null,{"column_id":28,"value":null},{"column_id":29,"value":"5ZCm"},{"column_id":30,"value":"5ZCm"},{"column_id":31,"value":"5ZCm"},{"column_id":32,"value":"5piv"},null,{"column_id":34,"value":"5byA5Lia"},null,null,{"column_id":37,"value":null},{"column_id":38,"value":null},null,null,{"column_id":41,"value":null},{"column_id":42,"value":null},{"column_id":43,"value":null},null,{"column_id":45,"value":null},{"column_id":46,"value":null},null,null,null,null,null,{"column_id":52,"value":null},{"column_id":53,"value":null},null,null,null,null,null,null,{"column_id":60,"value":"2024-08-21 09:37:31"},{"column_id":61,"value":"2024-08-21 09:37:31"},{"column_id":62,"value":null},null]"] [tableInfo="TableInfo, ID: 3643, Name:xmcicdata.t_biz_ent_report, ColNum: 63, IdxNum: 5, PKIsHandle: true"]
```

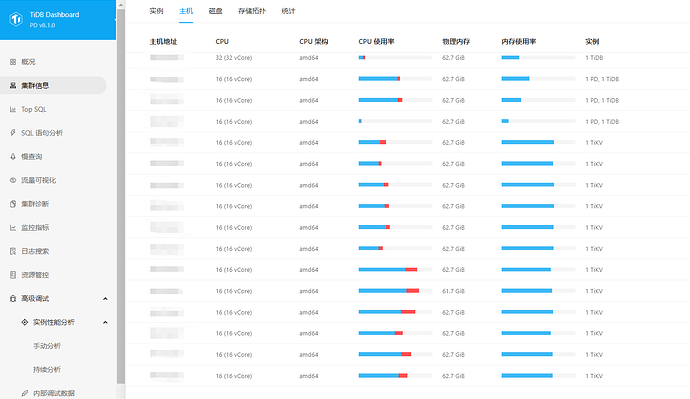

【Resource configuration】Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

【Attachments: screenshots/logs/monitoring】

Why are there so many `null,null,null,null,...` entries? Is the data in the corresponding table xmcicdata.t_biz_ent_report normal?

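Hi, I looked this row up by its primary key and it does have values. The table has 64 columns in total, and I use column-selectors to pick 40 of them to push to Kafka; some of those columns have values and some are NULL. Why does the log show 64 columns?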
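One way to read the `cols` field of that WARN line: it holds two different kinds of "empty" entries. A bare `null` is a slot with no column datum at all, while `{"column_id":N,"value":null}` is a column that is present but holds SQL NULL. Presumably the warning text ("meet nil column data") refers to the bare `null` slots; that is an assumption, the TiCDC source is the authority on the exact condition. Counting the complete array in the log gives 63 slots, matching `ColNum: 63` in `tableInfo`: 22 bare `null`, 20 with `"value":null`, and 21 with a value. The quoted string values look like base64, which is how Go's `encoding/json` renders `[]byte`; for example column 3 decodes to `131535492` and columns 29 to 32 decode to 否/否/否/是. The Python sketch below classifies a shortened excerpt of the array and decodes such values; `COLS_EXCERPT`, `summarize_cols` and `decode_bytes_value` are illustrative names, not part of any TiCDC tooling.

```python
import base64
import json

# Shortened excerpt of the `cols` array from the WARN line (not all 63 slots).
COLS_EXCERPT = (
    '[{"column_id":1,"value":2594073387516685639},'
    '{"column_id":3,"value":"MTMxNTM1NDky"},'
    '{"column_id":4,"value":"MjAyMw=="},'
    'null,'
    '{"column_id":11,"value":null},'
    '{"column_id":29,"value":"5ZCm"},'
    'null,null,'
    '{"column_id":60,"value":"2024-08-21 09:37:31"}]'
)


def summarize_cols(cols_json: str) -> dict:
    """Classify every slot of a `cols` array."""
    cols = json.loads(cols_json)
    # Bare `null`: the slot carries no column datum at all.
    bare_nil = sum(1 for c in cols if c is None)
    # Object with "value": null: the column is present but holds SQL NULL.
    sql_null = sum(1 for c in cols if c is not None and c["value"] is None)
    return {
        "slots": len(cols),
        "bare_nil": bare_nil,
        "sql_null": sql_null,
        "with_value": len(cols) - bare_nil - sql_null,
    }


def decode_bytes_value(value: str) -> str:
    """Decode a base64 value (Go's JSON form of []byte) as UTF-8 text."""
    return base64.b64decode(value).decode("utf-8")


print(summarize_cols(COLS_EXCERPT))
# {'slots': 9, 'bare_nil': 3, 'sql_null': 1, 'with_value': 5}
print(decode_bytes_value("MTMxNTM1NDky"), decode_bytes_value("5ZCm"))
# 131535492 否
```

Pasting the full `cols` array into `summarize_cols` is a quick way to compare the slot count against the column count the changefeed is expected to send.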

Could you post your changefeed configuration?

It's only a WARN-level message. Check whether the changefeeds and the replicated data are fine; if they are, you can ignore it.

Hi, here is my changefeed configuration.

```toml
# Whether the database and table names in this configuration file are case-sensitive
case-sensitive = false

# How long the changefeed may keep retrying automatically after an internal error or exception. Default: 30 minutes.
changefeed-error-stuck-duration = "30m"

[mounter]
worker-num = 16

[filter]
rules = ['xmcicdata.t_base_entity_info']

[[filter.event-filters]]
matcher = ["xmcicdata.t_base_entity_info"]
ignore-event = ["all ddl"]

[sink]
dispatchers = [
    {matcher = ['xmcicdata.t_base_entity_info'], topic = "{table}_topic", partition = "columns", columns = ["rid"]},
]

# column-selectors was introduced in v7.5.0 and only takes effect for the Kafka sink.
# column-selectors selects a subset of columns to replicate.
column-selectors = [
    {matcher = ['xmcicdata.t_base_entity_info'], columns = ['rid','entity_id','entity_name','credit_code','register_code','organization_code','register_date','register_department','organization_type','entity_type_code','entity_type','legal_person','legal_person_type','business_scope','business_start_time','business_end_time','check_date','entity_status','register_capital','actual_capital','currency_code','employee_cnt','revoke_date','revoke_reason','revoke_basis','cancel_date','cancel_reason','province_code','province_code','district_code','cd_create_time','cd_update_time','cd_opt_type']},
]

# delete-only-output-handle-key-columns controls what Delete events output; it only applies to the canal-json and open-protocol protocols. Introduced in v7.2.0.
# It is incompatible with `force-replicate`: setting both to true makes changefeed creation fail with an error.
# Defaults to false, i.e. all columns are output. When set to true, only the primary key columns or unique index columns are output.
delete-only-output-handle-key-columns = false
```

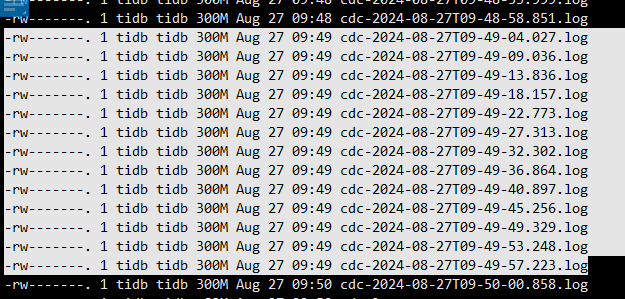
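A quick sanity check on the `columns` list in this `column-selectors` entry: as posted it has 33 entries, and `province_code` appears twice, so it names 32 distinct columns rather than 40. Whether the duplicate is intentional, or whether the 40-column changefeed uses a different config file, only the poster can tell. The Python sketch below simply counts entries and duplicates; the helper name `selector_report` is illustrative.

```python
from collections import Counter

# `columns` from the posted column-selectors entry, copied verbatim.
SELECTED_COLUMNS = [
    'rid', 'entity_id', 'entity_name', 'credit_code', 'register_code',
    'organization_code', 'register_date', 'register_department',
    'organization_type', 'entity_type_code', 'entity_type', 'legal_person',
    'legal_person_type', 'business_scope', 'business_start_time',
    'business_end_time', 'check_date', 'entity_status', 'register_capital',
    'actual_capital', 'currency_code', 'employee_cnt', 'revoke_date',
    'revoke_reason', 'revoke_basis', 'cancel_date', 'cancel_reason',
    'province_code', 'province_code', 'district_code', 'cd_create_time',
    'cd_update_time', 'cd_opt_type',
]


def selector_report(columns: list) -> dict:
    """Count entries, distinct names and duplicated names in a column list."""
    counts = Counter(columns)
    duplicates = sorted(name for name, n in counts.items() if n > 1)
    return {"entries": len(columns), "distinct": len(counts), "duplicates": duplicates}


print(selector_report(SELECTED_COLUMNS))
# {'entries': 33, 'distinct': 32, 'duplicates': ['province_code']}
```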

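Hi, in my tests the data does get replicated. But I have started a lot of changefeeds and I'm worried about losing data, so I'd like to find a fix. The logs also grow extremely fast: roughly 4 GB of log files per minute, all of it this same warning for various tables.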

Does TiDB have a way to limit the log size?
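Since the whole 4 GB per minute is reportedly this one warning for many tables, grouping the WARN lines by the `Name:` field of `tableInfo` shows which tables (and therefore which changefeeds) produce it. A Python sketch, assuming the log line layout shown in the first post; the regex accepts either straight or curly quotes around the field. The second `SAMPLE` line and the shortened `cols` value are made up for illustration.

```python
import re
from collections import Counter

WARN_MSG = "meet nil column data, should not happened in production env"
# Captures the table name in e.g. [tableInfo="TableInfo, ID: 3643, Name:xmcicdata.t_biz_ent_report, ...
TABLE_RE = re.compile(r'\[tableInfo=["\u201c]TableInfo, ID: \d+, Name:([^,]+),')


def count_nil_warnings_by_table(lines):
    """Count 'meet nil column data' WARN lines per table name."""
    counts = Counter()
    for line in lines:
        if "[WARN]" not in line or WARN_MSG not in line:
            continue
        match = TABLE_RE.search(line)
        counts[match.group(1) if match else "<unknown>"] += 1
    return counts


SAMPLE = [
    '[2024/08/21 09:48:31.344 +08:00] [WARN] [sink.go:488] '
    '["meet nil column data, should not happened in production env"] [cols="[null]"] '
    '[tableInfo="TableInfo, ID: 3643, Name:xmcicdata.t_biz_ent_report, ColNum: 63, IdxNum: 5, PKIsHandle: true"]',
    '[2024/08/21 09:48:31.400 +08:00] [INFO] [example.go:1] ["unrelated line"]',
]
print(count_nil_warnings_by_table(SAMPLE))
```

Against a real file this would be `count_nil_warnings_by_table(open(path, encoding="utf-8")).most_common(10)`, with `path` pointing at the TiCDC log.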

The configuration looks fine.

- Is the data you're replicating to Kafka correct?

- Or is it just that the log looks strange?

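Take a look at the post linked in the reply above; the parameters there let you limit the log file size and the number of log files.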
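To put the reported rate in perspective: at about 4 GB per minute, unrotated logs grow by 4 × 60 × 24 = 5760 GB (roughly 5.76 TB) per day, whereas size-based rotation caps disk use at one active file plus the retained backups. A back-of-the-envelope Python sketch; `max_size_mb` and `max_backups` are hypothetical names for the usual size and count limits, so check the TiCDC server configuration reference for the real option names and defaults. It also assumes lumberjack-style semantics, where a backup count of 0 means "keep all rotated files" and therefore gives no bound.

```python
def daily_log_volume_gb(gb_per_minute: float) -> float:
    """Unrotated log growth per day at a constant write rate."""
    return gb_per_minute * 60 * 24


def rotation_disk_bound_mb(max_size_mb: int, max_backups: int):
    """Upper bound on disk use with size-based rotation, or None if unbounded.

    One active file plus `max_backups` rotated files, each at most `max_size_mb`.
    Assumption: max_backups <= 0 means "keep every rotated file" (lumberjack-style).
    """
    if max_backups <= 0:
        return None
    return max_size_mb * (max_backups + 1)


print(daily_log_volume_gb(4))           # 5760 (GB per day without rotation)
print(rotation_disk_bound_mb(300, 10))  # 3300 (MB with these example limits)
```

Age-based cleanup and compression of rotated files are left out; they only lower the bound further.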


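The messages that reach Kafka are fine and can be consumed normally; it's just that the log keeps printing WARN messages, and I don't know why.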

The log size issue is solved, thanks.

OK, thanks for your help.

I'll change the thread type. This does look like something worth optimizing; logging this much isn't reasonable.

Please track this issue for follow-up: The log prints too much when using CDC sync column with nil values to Kafka (Issue #11537, pingcap/tiflow on GitHub).


This configuration is for the table xmcicdata.t_base_entity_info, but the table in the log is xmcicdata.t_biz_ent_report.
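To double-check that point: with the posted `[filter] rules`, the table named in the warning is not selected by this changefeed at all, so the warning presumably comes from one of the other changefeeds. A rough Python check; it approximates TiCDC's table-filter syntax with `fnmatch` wildcards (an assumption: negated `!` rules and quoted names are not handled) and lowercases names because the config sets `case-sensitive = false`. The helper name `table_selected` is illustrative.

```python
from fnmatch import fnmatchcase

# `[filter] rules` from the posted changefeed configuration.
RULES = ['xmcicdata.t_base_entity_info']


def table_selected(rules: list, schema_table: str, case_sensitive: bool = False) -> bool:
    """Rough check of whether "db.table" is selected by any filter rule.

    Assumption: fnmatch-style wildcards (*, ?, [...]) stand in for TiCDC's
    table-filter syntax; negated rules and quoted names are not handled.
    """
    name = schema_table if case_sensitive else schema_table.lower()
    for rule in rules:
        pattern = rule if case_sensitive else rule.lower()
        if fnmatchcase(name, pattern):
            return True
    return False


print(table_selected(RULES, "xmcicdata.t_base_entity_info"))  # True
print(table_selected(RULES, "xmcicdata.t_biz_ent_report"))    # False
```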

Was a column added on the upstream (an ADD COLUMN operation)?

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.