TiDB fanatic:

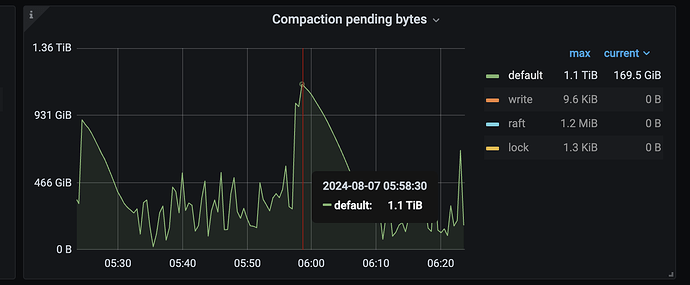

Let me show you some cases where disk performance is extremely poor.

Random read performance

(1),r(1), (2)][100.0%][r=1950MiB/s][r=499k IOPS][eta 00m:00s]
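A quick sanity check on a progress line like the one above: dividing bandwidth by IOPS gives the average I/O size. Here 1950 MiB/s ÷ 499k IOPS works out to about 4 KiB per request, which suggests the test used a 4 KiB block size. This is a minimal sketch. It assumes fio's usual `[r=<bandwidth>][r=<IOPS> IOPS]` progress format (or the `w=` equivalent for writes), binary units for bandwidth, and that the `k` in the IOPS field means 1,000. The function name `avg_io_size_kib` is hypothetical.

```python
import re

# Bandwidth units as printed by fio (binary prefixes), converted to KiB.
_BW_UNITS_KIB = {"KiB/s": 1, "MiB/s": 1024, "GiB/s": 1024 * 1024}
# Assumed decimal multipliers for the IOPS suffix (k = 1,000, M = 1,000,000).
_IOPS_SUFFIX = {"": 1, "k": 1_000, "M": 1_000_000}


def avg_io_size_kib(status_line: str) -> float:
    """Estimate the average I/O size in KiB from a fio progress line.

    Hypothetical helper: expects '[r=<bw>]' and '[r=<iops> IOPS]' fields
    (or 'w=' for write jobs) somewhere in the line.
    """
    bw = re.search(r"\[[rw]=([\d.]+)(KiB/s|MiB/s|GiB/s)\]", status_line)
    iops = re.search(r"\[[rw]=([\d.]+)([kM]?) IOPS\]", status_line)
    if not bw or not iops:
        raise ValueError("no bandwidth/IOPS fields found in status line")
    bw_kib = float(bw.group(1)) * _BW_UNITS_KIB[bw.group(2)]
    iops_val = float(iops.group(1)) * _IOPS_SUFFIX[iops.group(2)]
    return bw_kib / iops_val


line = "[100.0%][r=1950MiB/s][r=499k IOPS][eta 00m:00s]"
print(f"{avg_io_size_kib(line):.2f} KiB")  # prints "4.00 KiB"
```

If the computed size does not match the `bs=` you intended, the bandwidth and IOPS figures are probably coming from different settings than you think.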

Run status group 0 (all jobs):

Disk stats (read/write):

Random write performance

Run status group 0 (all jobs):

Disk stats (read/write):