【TiDB environment】Production / Test / PoC

Production

【TiDB version】

v8.1.0

【Reproduction path】Which operations led to the problem

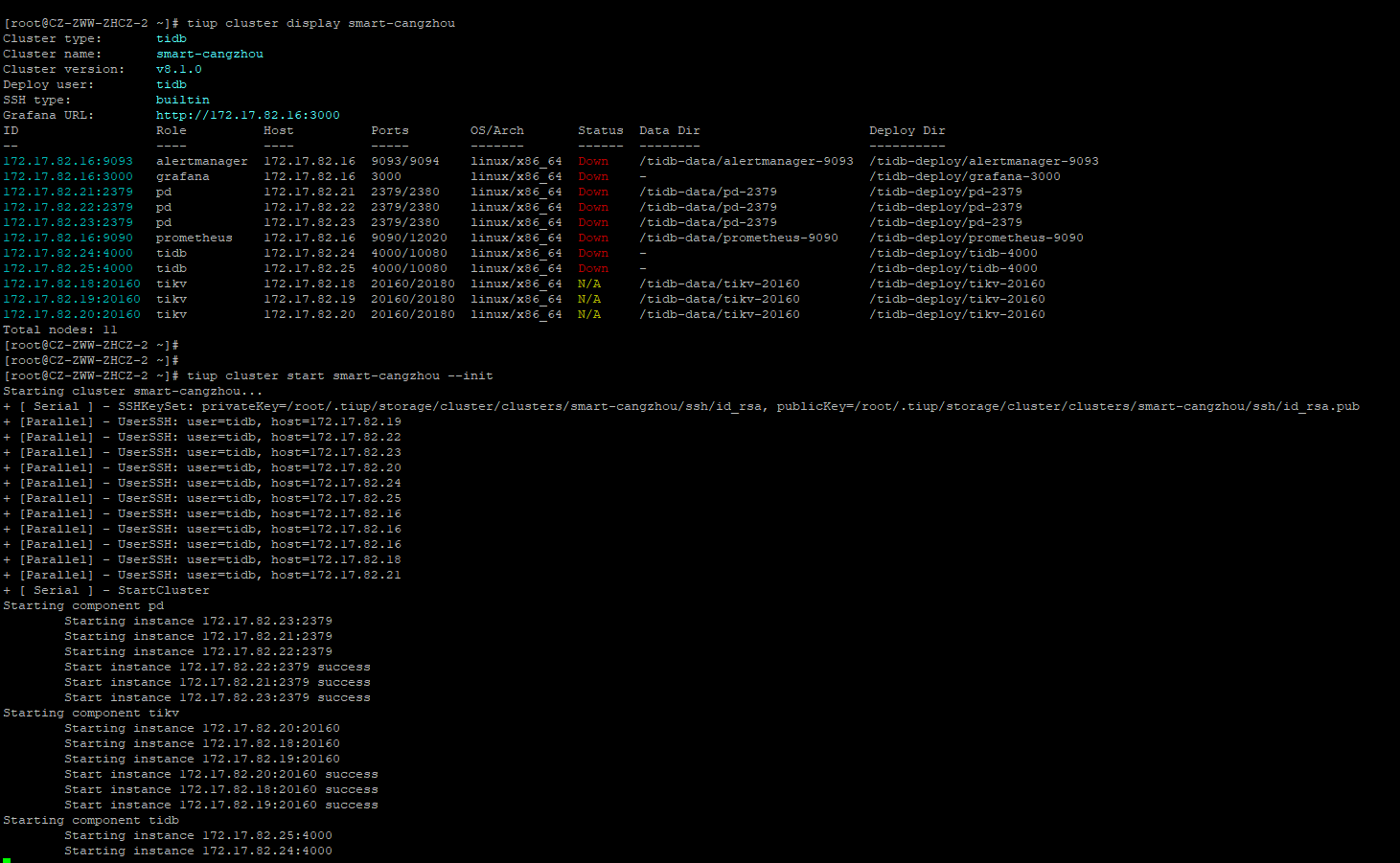

Started the cluster normally with `tiup cluster start tidb-test --init`.

【Problem: symptoms and impact】

The tidb component failed to start.

【Resource configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of this page

【Attachments: screenshots / logs / monitoring】

Configuration attachment:

topology.yaml (3.5 KB)

I destroyed the cluster and reinstalled it, but the problem is the same: startup hangs when it reaches the tidb (db) component.

zhanggame1


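When this problem shows up during an upgrade, you can create the table manually, but I'm not sure how to handle it on a fresh installation. If you installed online, try an offline installation instead.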

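Mine is an offline installation, and it's the first install. Since tidb can't start, there's no way to create the table, right? Is there any solution?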

Is your tiup version 1.6 or 1.16? If it's 1.6, upgrade tiup first...
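A quick way to answer this is `tiup --version`. Note that "1.6" and "1.16" compare the wrong way as plain strings, so the minimal sketch below compares them as version numbers with `sort -V` (GNU coreutils). It assumes the first field of the first line printed by `tiup --version` is the version; `version_ge` and `MIN_TIUP` are hypothetical names used only for illustration. In an offline setup, `tiup update` can only install what the configured mirror already contains.

```shell
#!/usr/bin/env bash
# Minimal sketch: check whether the local tiup is at least 1.16 and upgrade it if not.
# Assumption: the first whitespace-separated field of `tiup --version` is the version number.

MIN_TIUP="1.16.0"  # hypothetical threshold, taken from the versions discussed in this thread

# version_ge A B: succeeds if version A >= version B, comparing each component numerically.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

if command -v tiup >/dev/null 2>&1; then
  current="$(tiup --version 2>/dev/null | head -n1 | awk '{print $1}')"
  current="${current#v}"  # tolerate a leading "v"
  if version_ge "$current" "$MIN_TIUP"; then
    echo "tiup $current is new enough"
  else
    echo "tiup $current is older than $MIN_TIUP; upgrading"
    # Upgrade tiup itself, then the cluster component (offline: the mirror must have the new packages).
    tiup update --self && tiup update cluster
  fi
fi
```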

h5n1


Please post the full output of `tiup cluster display`.
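When a start fails, the interesting rows of `tiup cluster display` are the instances whose Status is not `Up`. The minimal sketch below filters them with awk. It assumes instance rows begin with an ID of the form `host:port` and that Status is the sixth column (ID, Role, Host, Ports, OS/Arch, Status, ...), which is the usual layout but not guaranteed across tiup versions; `non_up_instances` is a hypothetical helper, and `CLUSTER` defaults to `tidb-test` from the start command in the original post.

```shell
#!/usr/bin/env bash
# Minimal sketch: list cluster instances whose Status is not "Up".
# Assumption: instance rows look like "host:port role host ports os/arch status ...",
# so the ID is field 1, the role is field 2 and the status is field 6.

# non_up_instances: read `tiup cluster display` output on stdin, print "ID role status" for non-Up rows.
non_up_instances() {
  awk '$1 ~ /^[0-9.]+:[0-9]+$/ && $6 !~ /^Up/ { print $1, $2, $6 }'
}

if command -v tiup >/dev/null 2>&1; then
  cluster="${CLUSTER:-tidb-test}"
  # The full output is what should be posted; the filtered view highlights the failing instances.
  tiup cluster display "$cluster"
  tiup cluster display "$cluster" | non_up_instances
fi
```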

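I upgraded tiup to the latest version, 1.16, and the cluster component to 1.16 as well, then recreated the cluster, but starting it still hits the same problem.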

h5n1


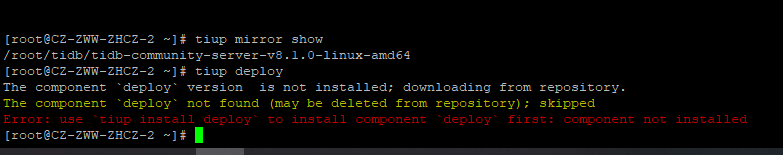

Please also post the output of `tiup mirror show` and of the `tiup deploy` command.
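`tiup mirror show` prints the mirror tiup is currently using. After an offline install it is normally the local directory of the unpacked offline package; an online install normally points at `https://tiup-mirrors.pingcap.com`. The minimal sketch below classifies the address; `mirror_kind` is a hypothetical helper, and treating every `http://` or `https://` address as online is an assumption. `tiup list tidb` then shows whether v8.1.0 is actually available from that mirror.

```shell
#!/usr/bin/env bash
# Minimal sketch: report whether tiup points at a remote (online) or local (offline) mirror.

# mirror_kind ADDRESS: print "online" for http(s) URLs, "offline" for anything else (e.g. a local path).
mirror_kind() {
  case "$1" in
    http://*|https://*) echo online ;;
    *) echo offline ;;
  esac
}

if command -v tiup >/dev/null 2>&1; then
  mirror="$(tiup mirror show)"
  echo "mirror: $mirror ($(mirror_kind "$mirror"))"
  # Check that the target version is listed by this mirror.
  tiup list tidb | grep -F 'v8.1.0'
fi
```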

h5n1


Kongdom


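Yes. Looking at the original post again, that may not be the cause here; the answer marked as best there may have been marked by mistake.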

There is no `tiup deploy` component. This is my first installation and I'm not familiar with it; which component does deploy belong to?

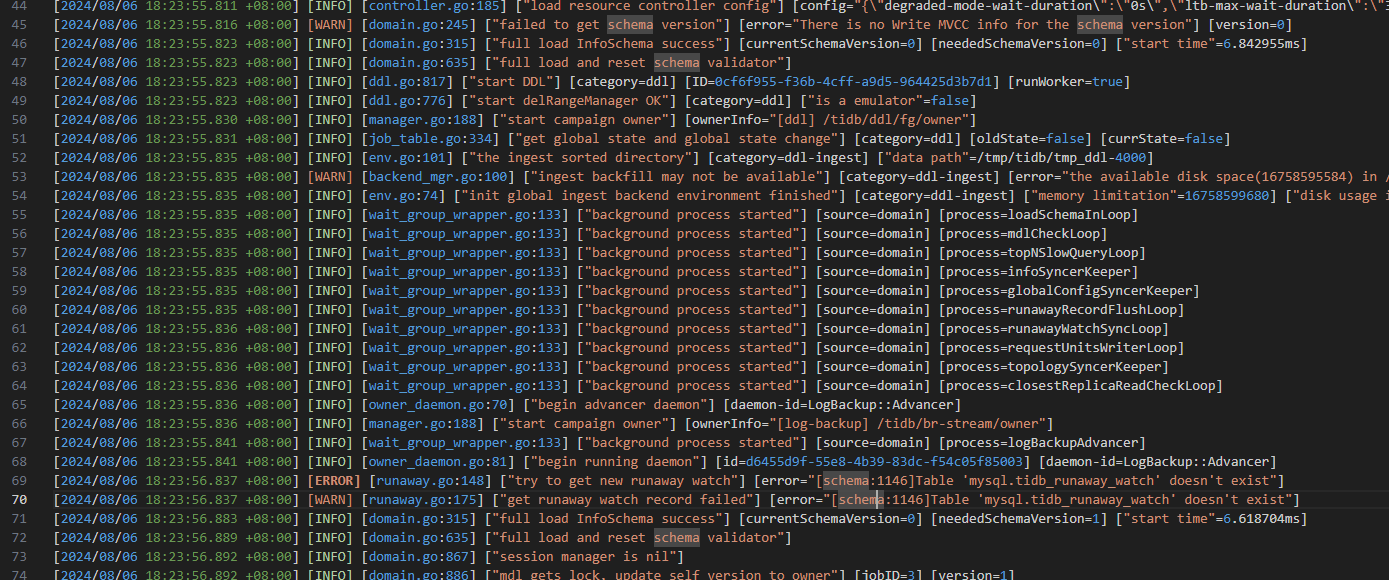

During startup, the tidb service hangs, and the log output says that the system table watch is missing.
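To pin down exactly which system table tidb reports as missing, search the tidb log for "doesn't exist" errors. This minimal sketch assumes the default tiup log location under the tidb deploy directory used in this thread (`/tidb-deploy/tidb-4000/log/tidb.log`) and that the message follows the MySQL-compatible error 1146 wording `Table '...' doesn't exist`; `missing_table_errors`, `TIDB_LOG` and `CLUSTER` are hypothetical names. From the control machine, `tiup cluster exec` runs the same search on every tidb host.

```shell
#!/usr/bin/env bash
# Minimal sketch: extract "Table '...' doesn't exist" errors from a tidb log.
# Assumption: default tiup log path <deploy_dir>/log/tidb.log.

TIDB_LOG="${TIDB_LOG:-/tidb-deploy/tidb-4000/log/tidb.log}"

# missing_table_errors FILE: print each distinct "Table '...' doesn't exist" message once.
missing_table_errors() {
  grep -o "Table '[^']*' doesn't exist" "$1" | sort -u
}

if [ -r "$TIDB_LOG" ]; then
  # Running directly on a tidb host.
  missing_table_errors "$TIDB_LOG"
elif command -v tiup >/dev/null 2>&1; then
  # Running on the control machine: grep the log on every tidb instance's host.
  tiup cluster exec "${CLUSTER:-smart-cangzhou}" -R tidb \
    --command "grep \"doesn't exist\" $TIDB_LOG | tail -n 20"
fi
```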

```
[root@CZ-ZWW-ZHCZ-2 tidb]# tiup cluster deploy smart-cangzhou v8.1.0 ./topology.yaml --user root -p
Input SSH password:

- Detect CPU Arch Name
  - Detecting node 172.17.82.21 Arch info ... Done
  - Detecting node 172.17.82.22 Arch info ... Done
  - Detecting node 172.17.82.23 Arch info ... Done
  - Detecting node 172.17.82.18 Arch info ... Done
  - Detecting node 172.17.82.19 Arch info ... Done
  - Detecting node 172.17.82.20 Arch info ... Done
  - Detecting node 172.17.82.24 Arch info ... Done
  - Detecting node 172.17.82.25 Arch info ... Done
  - Detecting node 172.17.82.16 Arch info ... Done

- Detect CPU OS Name
  - Detecting node 172.17.82.21 OS info ... Done
  - Detecting node 172.17.82.22 OS info ... Done
  - Detecting node 172.17.82.23 OS info ... Done
  - Detecting node 172.17.82.18 OS info ... Done
  - Detecting node 172.17.82.19 OS info ... Done
  - Detecting node 172.17.82.20 OS info ... Done
  - Detecting node 172.17.82.24 OS info ... Done
  - Detecting node 172.17.82.25 OS info ... Done
  - Detecting node 172.17.82.16 OS info ... Done

Please confirm your topology:
Cluster type:    tidb
Cluster name:    smart-cangzhou
Cluster version: v8.1.0
Role          Host          Ports        OS/Arch       Directories
pd            172.17.82.21  2379/2380    linux/x86_64  /tidb-deploy/pd-2379,/tidb-data/pd-2379
pd            172.17.82.22  2379/2380    linux/x86_64  /tidb-deploy/pd-2379,/tidb-data/pd-2379
pd            172.17.82.23  2379/2380    linux/x86_64  /tidb-deploy/pd-2379,/tidb-data/pd-2379
tikv          172.17.82.18  20160/20180  linux/x86_64  /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tikv          172.17.82.19  20160/20180  linux/x86_64  /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tikv          172.17.82.20  20160/20180  linux/x86_64  /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tidb          172.17.82.24  4000/10080   linux/x86_64  /tidb-deploy/tidb-4000
tidb          172.17.82.25  4000/10080   linux/x86_64  /tidb-deploy/tidb-4000
prometheus    172.17.82.16  9090/12020   linux/x86_64  /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090
grafana       172.17.82.16  3000         linux/x86_64  /tidb-deploy/grafana-3000
alertmanager  172.17.82.16  9093/9094    linux/x86_64  /tidb-deploy/alertmanager-9093,/tidb-data/alertmanager-9093
Attention:
    1. If the topology is not what you expected, check your yaml file.
    2. Please confirm there is no port/directory conflicts in same host.
Do you want to continue? [y/N]: (default=N) y

- Generate SSH keys ... Done
- Download TiDB components
  - Download pd:v8.1.0 (linux/amd64) ... Done
  - Download tikv:v8.1.0 (linux/amd64) ... Done
  - Download tidb:v8.1.0 (linux/amd64) ... Done
  - Download prometheus:v8.1.0 (linux/amd64) ... Done
  - Download grafana:v8.1.0 (linux/amd64) ... Done
  - Download alertmanager: (linux/amd64) ... Done
  - Download node_exporter: (linux/amd64) ... Done
  - Download blackbox_exporter: (linux/amd64) ... Done
- Initialize target host environments
  - Prepare 172.17.82.25:22 ... Done
  - Prepare 172.17.82.16:22 ... Done
  - Prepare 172.17.82.22:22 ... Done
  - Prepare 172.17.82.23:22 ... Done
  - Prepare 172.17.82.18:22 ... Done
  - Prepare 172.17.82.19:22 ... Done
  - Prepare 172.17.82.20:22 ... Done
  - Prepare 172.17.82.24:22 ... Done
  - Prepare 172.17.82.21:22 ... Done
- Deploy TiDB instance
  - Copy pd -> 172.17.82.21 ... Done
  - Copy pd -> 172.17.82.22 ... Done
  - Copy pd -> 172.17.82.23 ... Done
  - Copy tikv -> 172.17.82.18 ... Done
  - Copy tikv -> 172.17.82.19 ... Done
  - Copy tikv -> 172.17.82.20 ... Done
  - Copy tidb -> 172.17.82.24 ... Done
  - Copy tidb -> 172.17.82.25 ... Done
  - Copy prometheus -> 172.17.82.16 ... Done
  - Copy grafana -> 172.17.82.16 ... Done
  - Copy alertmanager -> 172.17.82.16 ... Done
  - Deploy node_exporter -> 172.17.82.20 ... Done
  - Deploy node_exporter -> 172.17.82.24 ... Done
  - Deploy node_exporter -> 172.17.82.21 ... Done
  - Deploy node_exporter -> 172.17.82.23 ... Done
  - Deploy node_exporter -> 172.17.82.18 ... Done
  - Deploy node_exporter -> 172.17.82.19 ... Done
  - Deploy node_exporter -> 172.17.82.22 ... Done
  - Deploy node_exporter -> 172.17.82.25 ... Done
  - Deploy node_exporter -> 172.17.82.16 ... Done
  - Deploy blackbox_exporter -> 172.17.82.22 ... Done
  - Deploy blackbox_exporter -> 172.17.82.25 ... Done
  - Deploy blackbox_exporter -> 172.17.82.16 ... Done
  - Deploy blackbox_exporter -> 172.17.82.21 ... Done
  - Deploy blackbox_exporter -> 172.17.82.23 ... Done
  - Deploy blackbox_exporter -> 172.17.82.18 ... Done
  - Deploy blackbox_exporter -> 172.17.82.19 ... Done
  - Deploy blackbox_exporter -> 172.17.82.20 ... Done
  - Deploy blackbox_exporter -> 172.17.82.24 ... Done
- Copy certificate to remote host
- Init instance configs
  - Generate config pd -> 172.17.82.21:2379 ... Done
  - Generate config pd -> 172.17.82.22:2379 ... Done
  - Generate config pd -> 172.17.82.23:2379 ... Done
  - Generate config tikv -> 172.17.82.18:20160 ... Done
  - Generate config tikv -> 172.17.82.19:20160 ... Done
  - Generate config tikv -> 172.17.82.20:20160 ... Done
  - Generate config tidb -> 172.17.82.24:4000 ... Done
  - Generate config tidb -> 172.17.82.25:4000 ... Done
  - Generate config prometheus -> 172.17.82.16:9090 ... Done
  - Generate config grafana -> 172.17.82.16:3000 ... Done
  - Generate config alertmanager -> 172.17.82.16:9093 ... Done
- Init monitor configs
  - Generate config node_exporter -> 172.17.82.22 ... Done
  - Generate config node_exporter -> 172.17.82.25 ... Done
  - Generate config node_exporter -> 172.17.82.16 ... Done
  - Generate config node_exporter -> 172.17.82.19 ... Done
  - Generate config node_exporter -> 172.17.82.20 ... Done
  - Generate config node_exporter -> 172.17.82.24 ... Done
  - Generate config node_exporter -> 172.17.82.21 ... Done
  - Generate config node_exporter -> 172.17.82.23 ... Done
  - Generate config node_exporter -> 172.17.82.18 ... Done
  - Generate config blackbox_exporter -> 172.17.82.24 ... Done
  - Generate config blackbox_exporter -> 172.17.82.21 ... Done
  - Generate config blackbox_exporter -> 172.17.82.23 ... Done
  - Generate config blackbox_exporter -> 172.17.82.18 ... Done
  - Generate config blackbox_exporter -> 172.17.82.19 ... Done
  - Generate config blackbox_exporter -> 172.17.82.20 ... Done
  - Generate config blackbox_exporter -> 172.17.82.22 ... Done
  - Generate config blackbox_exporter -> 172.17.82.25 ... Done
  - Generate config blackbox_exporter -> 172.17.82.16 ... Done
Enabling component pd
    Enabling instance 172.17.82.23:2379
    Enabling instance 172.17.82.21:2379
    Enabling instance 172.17.82.22:2379
    Enable instance 172.17.82.22:2379 success
    Enable instance 172.17.82.23:2379 success
    Enable instance 172.17.82.21:2379 success
Enabling component tikv
    Enabling instance 172.17.82.20:20160
    Enabling instance 172.17.82.18:20160
    Enabling instance 172.17.82.19:20160
    Enable instance 172.17.82.19:20160 success
    Enable instance 172.17.82.18:20160 success
    Enable instance 172.17.82.20:20160 success
Enabling component tidb
    Enabling instance 172.17.82.25:4000
    Enabling instance 172.17.82.24:4000
    Enable instance 172.17.82.25:4000 success
    Enable instance 172.17.82.24:4000 success
Enabling component prometheus
    Enabling instance 172.17.82.16:9090
    Enable instance 172.17.82.16:9090 success
Enabling component grafana
    Enabling instance 172.17.82.16:3000
    Enable instance 172.17.82.16:3000 success
Enabling component alertmanager
    Enabling instance 172.17.82.16:9093
    Enable instance 172.17.82.16:9093 success
Enabling component node_exporter
    Enabling instance 172.17.82.16
    Enabling instance 172.17.82.21
    Enabling instance 172.17.82.20
    Enabling instance 172.17.82.18
    Enabling instance 172.17.82.19
    Enabling instance 172.17.82.22
    Enabling instance 172.17.82.23
    Enabling instance 172.17.82.24
    Enabling instance 172.17.82.25
    Enable 172.17.82.25 success
    Enable 172.17.82.23 success
    Enable 172.17.82.18 success
    Enable 172.17.82.24 success
    Enable 172.17.82.20 success
    Enable 172.17.82.19 success
    Enable 172.17.82.22 success
    Enable 172.17.82.21 success
    Enable 172.17.82.16 success
Enabling component blackbox_exporter
    Enabling instance 172.17.82.16
    Enabling instance 172.17.82.22
    Enabling instance 172.17.82.18
    Enabling instance 172.17.82.23
    Enabling instance 172.17.82.21
    Enabling instance 172.17.82.20
    Enabling instance 172.17.82.19
    Enabling instance 172.17.82.24
    Enabling instance 172.17.82.25
    Enable 172.17.82.25 success
    Enable 172.17.82.23 success
    Enable 172.17.82.18 success
    Enable 172.17.82.19 success
    Enable 172.17.82.24 success
    Enable 172.17.82.20 success
    Enable 172.17.82.16 success
    Enable 172.17.82.22 success
    Enable 172.17.82.21 success
Cluster smart-cangzhou deployed successfully, you can start it with command: tiup cluster start smart-cangzhou --init
```
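The deploy itself finished cleanly, so the next step is the start command tiup suggests, followed by a status check of the tidb instances. The minimal sketch below wraps both in a small dry-run helper so the sequence can be reviewed before it touches the cluster; `run`, `start_and_check` and `DRY_RUN` are hypothetical names. `--init` generates a random root password and shows it only once, so keep that output.

```shell
#!/usr/bin/env bash
# Minimal sketch: start a freshly deployed cluster, then show the status of its tidb instances.
# DRY_RUN=1 only prints the commands instead of executing them.

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# start_and_check NAME: start with --init (one-time root password), then display only the tidb role.
start_and_check() {
  run tiup cluster start "$1" --init || return 1
  run tiup cluster display "$1" -R tidb
}

# Usage: DRY_RUN=1 start_and_check smart-cangzhou   (drop DRY_RUN=1 to actually run it)
```

If the start hangs again at the tidb step, the display output plus the tidb log on 172.17.82.24/25 are what to attach to the thread.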