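【TiDB Environment】Test

【TiDB Version】6.5.0

【Steps to Reproduce】Enable TLS through the tidb-operator tidbcluster.yaml

【Problem Encountered】After deploying the TiDB cluster with tidb-operator, PD fails to start normally

【Resource Configuration】Go to TiDB Dashboard > Cluster Info > Hosts and take a screenshot of that page

【Attachments: Screenshots / Logs / Monitoring】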

1. All Pods can currently be scheduled:

    kubectl -n csc get pod -owide | grep basic
    basic-discovery-7d7f9f8644-kj7lh   1/1   Running     0   4h36m   100.65.108.98    rxx-ipv6-02
    basic-pd-0                         1/1   Running     0   4h36m   100.107.182.85   rxx-ipv6-03
    basic-pd-1                         1/1   Running     0   4h36m   100.64.175.82    rxx-ipv6-01
    basic-pd-2                         1/1   Running     5   4h35m   100.65.108.66    rxx-ipv6-02
    basic-tidb-0                       2/2   Running     0   4h35m   100.107.182.73   rxx-ipv6-03
    basic-tidb-1                       2/2   Running     0   4h35m   100.65.108.103   rxx-ipv6-02
    basic-tidb-2                       2/2   Running     0   4h35m   100.64.175.77    rxx-ipv6-01
    basic-tidb-initializerd-6jh88      0/1   Completed   0   4h49m   100.65.108.93    rxx-ipv6-02
    basic-tikv-0                       1/1   Running     0   4h35m   100.65.108.101   rxx-ipv6-02
    basic-tikv-1                       1/1   Running     0   4h35m   100.64.175.83    rxx-ipv6-01
    basic-tikv-2                       1/1   Running     0   4h35m   100.107.182.74   rxx-ipv6-03

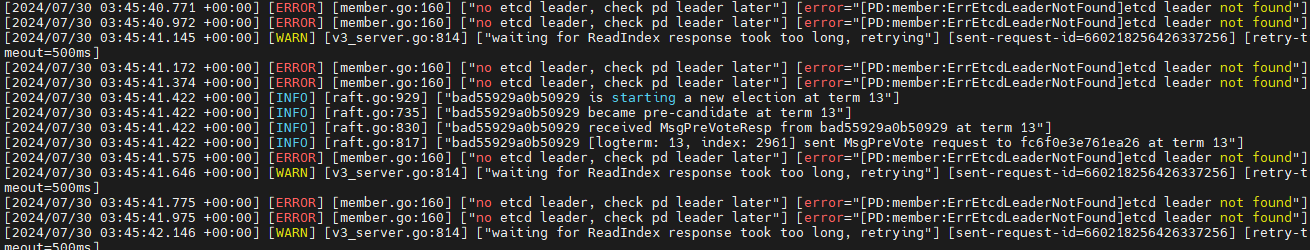
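Although every Pod is scheduled, the restart column is worth a second look. Here is a minimal Python sketch that scans the listing above for Pods that have restarted. It assumes the column order shown (name, ready, status, restarts, age, IP, node); the function name `pods_with_restarts` is only illustrative.

```python
# Pod listing copied from the kubectl output above.
# Assumed column order: name, ready, status, restarts, age, IP, node.
POD_LISTING = """\
basic-discovery-7d7f9f8644-kj7lh 1/1 Running 0 4h36m 100.65.108.98 rxx-ipv6-02
basic-pd-0 1/1 Running 0 4h36m 100.107.182.85 rxx-ipv6-03
basic-pd-1 1/1 Running 0 4h36m 100.64.175.82 rxx-ipv6-01
basic-pd-2 1/1 Running 5 4h35m 100.65.108.66 rxx-ipv6-02
basic-tidb-0 2/2 Running 0 4h35m 100.107.182.73 rxx-ipv6-03
basic-tidb-1 2/2 Running 0 4h35m 100.65.108.103 rxx-ipv6-02
basic-tidb-2 2/2 Running 0 4h35m 100.64.175.77 rxx-ipv6-01
basic-tidb-initializerd-6jh88 0/1 Completed 0 4h49m 100.65.108.93 rxx-ipv6-02
basic-tikv-0 1/1 Running 0 4h35m 100.65.108.101 rxx-ipv6-02
basic-tikv-1 1/1 Running 0 4h35m 100.64.175.83 rxx-ipv6-01
basic-tikv-2 1/1 Running 0 4h35m 100.107.182.74 rxx-ipv6-03
"""


def pods_with_restarts(listing: str) -> dict[str, int]:
    """Return {pod name: restart count} for Pods that restarted at least once."""
    restarted = {}
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or truncated lines
        name, restarts = fields[0], int(fields[3])
        if restarts > 0:
            restarted[name] = restarts
    return restarted


if __name__ == "__main__":
    print(pods_with_restarts(POD_LISTING))
```

On this listing the only Pod it reports is basic-pd-2, with 5 restarts, which matches the pd-2 symptom described in item 4.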

2. TiDB and TiKV report errors in their logs because PD is abnormal.

3. The PD cluster cannot be formed and no leader is elected:

    [2024/07/30 03:45:42.780 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
    [2024/07/30 03:45:42.980 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
    [2024/07/30 03:45:43.148 +00:00] [WARN] [v3_server.go:814] ["waiting for ReadIndex response took too long, retrying"] [sent-request-id=660218256426337256] [retry-timeout=500ms]
    [2024/07/30 03:45:43.181 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
    [2024/07/30 03:45:43.381 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
    [2024/07/30 03:45:43.435 +00:00] [WARN] [peer_status.go:68] ["peer became inactive (message send to peer failed)"] [peer-id=fc6f0e3e761ea26] [error="failed to heartbeat fc6f0e3e761ea26 on stream Message (write tcp 100.107.182.85:2380->100.64.175.82:45216: write: broken pipe)"]
    [2024/07/30 03:45:43.437 +00:00] [WARN] [stream.go:193] ["lost TCP streaming connection with remote peer"] [stream-writer-type="stream Message"] [local-member-id=bad55929a0b50929] [remote-peer-id=fc6f0e3e761ea26]
    [2024/07/30 03:45:43.440 +00:00] [WARN] [stream.go:193] ["lost TCP streaming connection with remote peer"] [stream-writer-type="stream MsgApp v2"] [local-member-id=bad55929a0b50929] [remote-peer-id=fc6f0e3e761ea26]
    [2024/07/30 03:45:43.582 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
    [2024/07/30 03:45:43.648 +00:00] [WARN] [v3_server.go:814] ["waiting for ReadIndex response took too long, retrying"] [sent-request-id=660218256426337256] [retry-timeout=500ms]

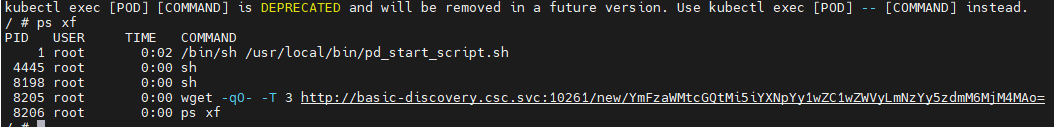
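To make the PD log excerpt above easier to read, here is a rough Python sketch that counts the lines by level and message and collects the peer IDs that show up as unreachable. It assumes the bracketed layout seen in the excerpt, written with plain straight quotes. The names `summarize` and `unreachable_peers` are made up for this illustration and are not part of any PD tooling.

```python
import re
from collections import Counter

# The ten log lines from the excerpt above, with plain straight quotes.
PD_LOG = """\
[2024/07/30 03:45:42.780 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
[2024/07/30 03:45:42.980 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
[2024/07/30 03:45:43.148 +00:00] [WARN] [v3_server.go:814] ["waiting for ReadIndex response took too long, retrying"] [sent-request-id=660218256426337256] [retry-timeout=500ms]
[2024/07/30 03:45:43.181 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
[2024/07/30 03:45:43.381 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
[2024/07/30 03:45:43.435 +00:00] [WARN] [peer_status.go:68] ["peer became inactive (message send to peer failed)"] [peer-id=fc6f0e3e761ea26] [error="failed to heartbeat fc6f0e3e761ea26 on stream Message (write tcp 100.107.182.85:2380->100.64.175.82:45216: write: broken pipe)"]
[2024/07/30 03:45:43.437 +00:00] [WARN] [stream.go:193] ["lost TCP streaming connection with remote peer"] [stream-writer-type="stream Message"] [local-member-id=bad55929a0b50929] [remote-peer-id=fc6f0e3e761ea26]
[2024/07/30 03:45:43.440 +00:00] [WARN] [stream.go:193] ["lost TCP streaming connection with remote peer"] [stream-writer-type="stream MsgApp v2"] [local-member-id=bad55929a0b50929] [remote-peer-id=fc6f0e3e761ea26]
[2024/07/30 03:45:43.582 +00:00] [ERROR] [member.go:160] ["no etcd leader, check pd leader later"] [error="[PD:member:ErrEtcdLeaderNotFound]etcd leader not found"]
[2024/07/30 03:45:43.648 +00:00] [WARN] [v3_server.go:814] ["waiting for ReadIndex response took too long, retrying"] [sent-request-id=660218256426337256] [retry-timeout=500ms]
"""

# [timestamp] [LEVEL] [source:line] ["message"] ...
LINE_RE = re.compile(
    r'^\[[^\]]+\] \[(?P<level>[A-Z]+)\] \[(?P<src>[^\]]+)\] \["(?P<msg>[^"]*)"\]'
)
# Matches [peer-id=...] and [remote-peer-id=...], but not [local-member-id=...].
PEER_RE = re.compile(r"\[(?:peer-id|remote-peer-id)=([0-9a-f]+)\]")


def summarize(log: str) -> Counter:
    """Count log lines per (level, message) pair."""
    counts = Counter()
    for line in log.splitlines():
        m = LINE_RE.match(line)
        if m:
            counts[(m["level"], m["msg"])] += 1
    return counts


def unreachable_peers(log: str) -> set[str]:
    """Collect the peer IDs named in peer-id / remote-peer-id fields."""
    return set(PEER_RE.findall(log))


if __name__ == "__main__":
    for (level, msg), n in summarize(PD_LOG).most_common():
        print(f"{n:2d}  {level:5s}  {msg}")
    print("peers reported as lost:", unreachable_peers(PD_LOG))
```

In this excerpt, half of the lines are the "no etcd leader" error, and every connection warning names the same peer, fc6f0e3e761ea26. The broken-pipe line is between 100.107.182.85:2380 and 100.64.175.82, which the Pod listing in item 1 shows as basic-pd-0 and basic-pd-1. So this log was probably taken from pd-0 while it was losing its stream to pd-1.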

4. The pd-2 Pod reports:

    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...
    waiting for discovery service to return start args ...

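Inside the Pod, the start args are fetched from the discovery service over http. Now that TLS is enabled, should this request use https instead?

5. The cluster was deployed with tidb-operator 1.4.3, TiDB 6.5.0, and Helm 3.2.1.

6. The tidb-cluster.yaml configuration is as follows: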

    apiVersion: pingcap.com/v1alpha1
    kind: TidbCluster
    metadata:
      name: basic
      namespace: csc
    spec:
      version: v6.5.0
      timezone: UTC
      pvReclaimPolicy: Retain
      discovery: {}
      tlsCluster:
        enabled: true
      pd:
        podManagementPolicy: Parallel
        baseImage: pingcap/pd
        replicas: 3
        tlsClientSecretName: basic-pd-cluster-secret
        mountClusterClientSecret: true
        # if storageClassName is not set, the default Storage Class of the Kubernetes cluster will be used
        storageClassName: tidb-pd-storage-csc
        requests:
          storage: "1Gi"
        config: {}
        nodeSelector:
          node-role.kubernetes.io/master: ""
        affinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                - key: app.kubernetes.io/component
                  operator: "In"
                  values:
                  - "pd"
              topologyKey: "kubernetes.io/hostname"
      tikv:
        podManagementPolicy: Parallel
        baseImage: pingcap/tikv
        replicas: 3
        mountClusterClientSecret: true
        # if storageClassName is not set, the default Storage Class of the Kubernetes cluster will be used
        storageClassName: tidb-kv-storage-csc
        requests:
          storage: "20Gi"
        config:
          raftdb:
            compaction-readahead-size: 2MiB
            defaultcf:
              max-write-buffer-number: 10
              target-file-size-base: 32MiB
          raftstore:
            sync-log: true
          readpool:
            coprocessor:
              use-unified-pool: true
            storage:
              use-unified-pool: true
            unified:
              max-thread-count: 10
          rocksdb:
            compaction-readahead-size: 2MiB
            defaultcf:
              max-write-buffer-number: 10
              target-file-size-base: 32MiB
          storage:
            block-cache:
              capacity: 2GiB
              shared: true
              strict-capacity-limit: true
            reserve-space: 0MB
        nodeSelector:
          node-role.kubernetes.io/master: ""
        affinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                - key: app.kubernetes.io/component
                  operator: "In"
                  values:
                  - "tikv"
              topologyKey: "kubernetes.io/hostname"
      tidb:
        podManagementPolicy: Parallel
        baseImage: pingcap/tidb
        replicas: 3
        tlsClient:
          enabled: true
        limits:
          memory: 8Gi
        service:
          type: ClusterIP
        config: |
          [instance]
          tidb_slow_log_threshold = 10000
        nodeSelector:
          node-role.kubernetes.io/master: ""
        affinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                - key: app.kubernetes.io/component
                  operator: "In"
                  values:
                  - "tidb"
              topologyKey: "kubernetes.io/hostname"
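Since `tlsCluster.enabled` and `tidb.tlsClient.enabled` are both set, one thing that may be worth checking is whether the certificate Secrets the operator looks for exist in the `csc` namespace. The Python sketch below only prints the names I would expect for this cluster. The naming pattern (`<cluster>-<component>-cluster-secret`, `<cluster>-cluster-client-secret`, `<cluster>-tidb-server-secret`) is my recollection of the tidb-operator TLS documentation, not something taken from this post, so please verify it against the docs for 1.4.3. The helper `expected_tls_secrets` is hypothetical.

```python
def expected_tls_secrets(
    cluster: str, components: list[str], tidb_tls_client: bool
) -> list[str]:
    """List the Secret names assumed to be needed for a TLS-enabled TidbCluster.

    ASSUMPTION: the naming pattern follows the tidb-operator TLS docs as
    recalled here; it is not confirmed by the original post.
    """
    # One cluster certificate Secret per component (pd, tikv, tidb, ...).
    names = [f"{cluster}-{component}-cluster-secret" for component in components]
    # Client certificate used to talk to the components.
    names.append(f"{cluster}-cluster-client-secret")
    if tidb_tls_client:
        # Server certificate for MySQL-client TLS (spec.tidb.tlsClient.enabled).
        names.append(f"{cluster}-tidb-server-secret")
    return names


if __name__ == "__main__":
    # Values taken from the manifest above: metadata.name is "basic".
    for name in expected_tls_secrets("basic", ["pd", "tikv", "tidb"], True):
        print(f"kubectl -n csc get secret {name}")
```

Each printed line is an ordinary `kubectl get secret` lookup. A missing Secret would show up as a NotFound error and would be a first thing to rule out before looking further at the discovery question in item 4.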

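7. The cluster has 3 nodes running 3 PD, 3 TiKV, and 3 TiDB instances. crd.yaml was downloaded from the official source and not modified, and the operator values.yaml uses the Kubernetes scheduler.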